The convergence of WebAssembly (Wasm) and serverless computing represents a significant evolution in modern software architecture. Wasm, a bytecode format designed for efficient execution in web browsers, has transcended its initial purpose to become a versatile technology applicable across various computing environments. Serverless computing, on the other hand, offers a paradigm shift by allowing developers to build and run applications without managing underlying infrastructure.

This exploration delves into the synergistic relationship between these two technologies, examining how Wasm enhances the capabilities and efficiency of serverless applications.

This analysis covers the fundamental concepts of both Wasm and serverless computing, highlighting their individual strengths and limitations. It then examines how Wasm integrates with serverless environments and the specific advantages it provides in performance, portability, and security. The discussion also covers practical use cases, deployment methods, and available tools for using Wasm to optimize serverless applications.

Finally, we will address the challenges and future trends associated with this rapidly evolving landscape.

Introduction to WebAssembly (Wasm)

WebAssembly (Wasm) represents a significant advancement in web and software development, enabling near-native performance for applications running in web browsers and beyond. Its core design prioritizes portability, efficiency, and security, making it a versatile technology for a wide array of use cases. Wasm’s ability to execute code compiled from various programming languages opens up new possibilities for developers, leading to more complex and demanding applications on the web and in serverless environments.

Fundamental Concepts of WebAssembly

WebAssembly is a binary instruction format designed as a portable compilation target for programming languages. Its primary purpose is to provide a low-level, efficient, and safe execution environment for code, allowing it to run at near-native speeds across different platforms. Wasm achieves this through a stack-based virtual machine whose compact binary encoding is designed to be decoded, validated, and compiled quickly by web browsers and other runtimes.

This architecture allows developers to write code in languages like C, C++, Rust, and others, and then compile it to Wasm, which can then be executed in a browser or other Wasm-compatible environment. The advantages are multifold, including improved performance compared to JavaScript, increased portability across different platforms, and enhanced security through sandboxing.
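To make the stack-based execution model concrete, here is a toy sketch in Python. It is not a real Wasm runtime: the instruction names mirror Wasm's text format (`local.get`, `i32.const`, `i32.add`, `i32.mul`), but the tuple encoding, the `run` helper, and the `ADD_THEN_DOUBLE` program are assumptions made purely for illustration.

```python
from typing import List, Tuple

Instr = Tuple[str, int]  # (opcode, immediate); arithmetic ops ignore the immediate

MASK32 = 0xFFFFFFFF  # i32 arithmetic wraps modulo 2**32


def run(code: List[Instr], params: List[int]) -> int:
    """Evaluate a tiny instruction sequence on an operand stack."""
    stack: List[int] = []
    for op, imm in code:
        if op == "local.get":      # push the value of a parameter
            stack.append(params[imm] & MASK32)
        elif op == "i32.const":    # push a constant
            stack.append(imm & MASK32)
        elif op == "i32.add":      # pop two operands, push their sum
            b, a = stack.pop(), stack.pop()
            stack.append((a + b) & MASK32)
        elif op == "i32.mul":      # pop two operands, push their product
            b, a = stack.pop(), stack.pop()
            stack.append((a * b) & MASK32)
        else:
            raise ValueError(f"unknown opcode: {op}")
    if len(stack) != 1:
        raise ValueError("function must leave exactly one result on the stack")
    return stack[0]


# Hypothetical program computing (a + b) * 2
ADD_THEN_DOUBLE = [
    ("local.get", 0),
    ("local.get", 1),
    ("i32.add", 0),
    ("i32.const", 2),
    ("i32.mul", 0),
]
```

Calling `run(ADD_THEN_DOUBLE, [3, 4])` pushes 3 and 4, replaces them with their sum, pushes 2, and multiplies. Production runtimes typically compile such sequences to machine code rather than interpreting them one instruction at a time.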

History and Evolution of WebAssembly

The development of WebAssembly was a collaborative effort involving major browser vendors and industry experts, driven by the need for a more efficient and versatile execution environment for web applications. The initial proposal emerged in 2015, with the first version of Wasm supported in major browsers by 2017. The evolution has been marked by continuous improvements and standardization. The WebAssembly working group, consisting of representatives from Mozilla, Google, Microsoft, and others, oversees the standardization process.

The ongoing development includes enhancements to features, such as garbage collection, threads, and SIMD (Single Instruction, Multiple Data) support, aiming to improve performance and expand the capabilities of Wasm.

Core Benefits of Using WebAssembly

WebAssembly offers several core benefits that make it an attractive option for developers. These advantages include improved performance, portability, and security.

- Performance: Wasm code executes at near-native speeds and is often faster than JavaScript for computationally intensive tasks. This performance gain comes from Wasm’s low-level, statically typed instruction set, which runtimes can compile to machine code with little overhead. The benefits are most noticeable in applications like gaming, video editing, and scientific simulations, where processing power is critical. For instance, with game engines such as Unreal Engine and Unity, Wasm enables web-based games that approach the performance of native desktop applications.

- Portability: Wasm is designed to be platform-independent. Once code is compiled to Wasm, it can run on any platform with a Wasm-compatible runtime, including web browsers, server-side environments, and embedded systems. This cross-platform compatibility simplifies development and deployment, allowing developers to target a wider audience with a single codebase. For example, the ability to run Wasm in a serverless environment allows developers to deploy the same code on both the client and server, streamlining development and reducing code duplication.

- Security: Wasm operates within a sandboxed environment, providing a secure execution environment. The Wasm runtime isolates the code from the host system, preventing direct access to system resources and mitigating potential security risks. This sandboxing mechanism enhances the overall security posture of applications, making them more resistant to attacks. This is crucial in environments like web browsers, where untrusted code from various sources is executed.

Serverless Computing Overview

Serverless computing represents a paradigm shift in cloud computing, enabling developers to build and run applications without managing the underlying infrastructure. This approach allows developers to focus solely on writing code, as the cloud provider handles server provisioning, scaling, and maintenance. This leads to significant advantages in terms of cost, efficiency, and development velocity.

Defining Serverless Computing and Its Key Characteristics

Serverless computing, despite its name, does not mean the absence of servers. Instead, it signifies the abstraction of server management from the developer. The cloud provider dynamically allocates resources, scales the application, and handles all operational aspects.

- Event-Driven Architecture: Serverless applications are typically triggered by events, such as HTTP requests, database updates, or scheduled tasks. These events initiate the execution of individual functions.

- Function-as-a-Service (FaaS): The core of serverless computing is FaaS, where developers write and deploy individual functions that execute in response to events. These functions are stateless and designed to perform a specific task.

- Automatic Scaling: Serverless platforms automatically scale the application based on demand. This ensures that the application can handle traffic spikes without manual intervention.

- Pay-per-Use Pricing: Users are charged only for the actual compute time and resources consumed by their functions. This can result in significant cost savings compared to traditional cloud infrastructure.

- Statelessness: Serverless functions are typically stateless, meaning they do not retain any information about previous invocations. Any required state management is handled externally, such as through databases or caching services.
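The event-driven, stateless model described in the list above can be sketched as follows. This is a minimal illustration, assuming a hypothetical `handle_page_view` function and using a plain dictionary as a stand-in for an external database or cache; it is not any particular platform's handler signature.

```python
from typing import Any, Dict, MutableMapping


def handle_page_view(event: Dict[str, Any],
                     store: MutableMapping[str, int]) -> Dict[str, Any]:
    """Stateless function: everything it needs arrives via `event` and `store`."""
    page = event["page"]
    # State lives in the external store, never in module-level variables,
    # so any instance of the function can serve any invocation.
    count = store.get(page, 0) + 1
    store[page] = count
    return {"status": 200, "body": {"page": page, "views": count}}
```

Because the function keeps nothing between calls, the platform is free to run many copies in parallel or discard idle ones, which is what makes automatic scaling practical.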

Discussing the Benefits of Serverless Architecture for Developers

Serverless architecture offers a compelling set of advantages for developers, streamlining the development process and reducing operational overhead. These benefits contribute to faster time-to-market and increased agility.

- Reduced Operational Overhead: Developers are freed from managing servers, operating systems, and infrastructure, allowing them to concentrate on writing code and building features.

- Scalability and Availability: Serverless platforms automatically handle scaling, ensuring the application can handle fluctuating traffic and maintain high availability.

- Cost Optimization: Pay-per-use pricing models eliminate the need to pay for idle resources, resulting in cost savings.

- Faster Development Cycles: The focus on individual functions and event-driven architecture allows for rapid development and deployment of new features.

- Improved Developer Productivity: By abstracting away infrastructure management, serverless platforms empower developers to be more productive and focus on the core business logic.
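The cost argument behind pay-per-use pricing is simple arithmetic, sketched below. The function names and every price passed to them are hypothetical placeholders, not any provider's actual rates; the point is only the shape of the calculation (compute time multiplied by memory, plus a per-request charge) compared with paying for an always-on server.

```python
def pay_per_use_cost(invocations: int, avg_duration_ms: float, memory_gb: float,
                     price_per_gb_second: float,
                     price_per_million_requests: float) -> float:
    """Cost of a function billed only for the time it actually runs."""
    gb_seconds = invocations * (avg_duration_ms / 1000.0) * memory_gb
    request_cost = invocations / 1_000_000 * price_per_million_requests
    return gb_seconds * price_per_gb_second + request_cost


def always_on_cost(hours: float, price_per_hour: float) -> float:
    """Cost of a server that is billed whether or not it is busy."""
    return hours * price_per_hour
```

With illustrative inputs of one million 100 ms invocations at 0.5 GB, the billed compute is 50,000 GB-seconds; whether that beats an always-on instance depends entirely on the real prices and on how idle the workload is.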

Elaborating on Common Use Cases for Serverless Applications

Serverless computing is well-suited for a wide range of applications, particularly those with variable workloads or event-driven requirements. Its flexibility and scalability make it an attractive option for many modern software projects.

- Web Applications: Serverless functions can handle API requests, user authentication, and other backend tasks for web applications. This allows developers to build scalable and cost-effective web applications without managing servers. For example, platforms like Netlify and Vercel provide serverless functions for static site generation and dynamic content delivery.

- Mobile Backends: Serverless platforms can power mobile backends, handling tasks such as user authentication, data storage, and push notifications. This enables developers to build scalable and responsive mobile applications. Services like AWS Amplify provide tools for building serverless mobile backends.

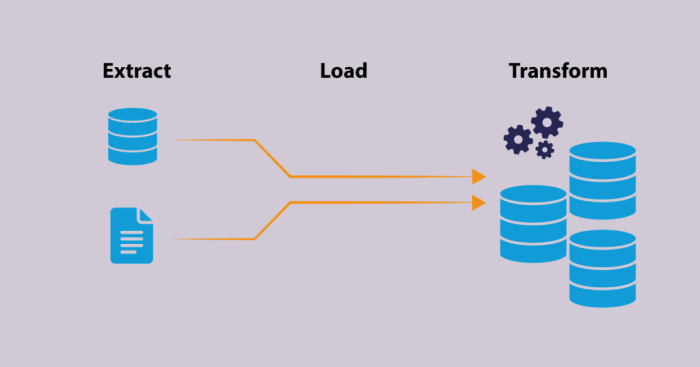

- Data Processing and ETL: Serverless functions can be used to process data streams, perform ETL (Extract, Transform, Load) operations, and analyze large datasets. For example, AWS Lambda can be triggered by events from services like Amazon S3 or Amazon Kinesis to process data in real-time.

- Chatbots and Conversational Interfaces: Serverless functions can power chatbots and conversational interfaces, handling user interactions, processing natural language, and integrating with other services. Platforms like Dialogflow and Amazon Lex integrate well with serverless functions.

- IoT Applications: Serverless functions can process data from IoT devices, trigger actions based on sensor readings, and manage device interactions. AWS IoT and Azure IoT Hub offer serverless capabilities for building IoT solutions.

The Intersection: Wasm and Serverless

The convergence of WebAssembly (Wasm) and serverless computing presents a compelling paradigm shift in application development and deployment. This synergy leverages the portability and efficiency of Wasm to address limitations inherent in traditional serverless architectures, particularly concerning performance, vendor lock-in, and resource utilization. The integration of Wasm allows developers to build and deploy serverless functions that are faster, more secure, and more adaptable to diverse computing environments.

Integration of WebAssembly with Serverless Environments

WebAssembly integrates with serverless environments primarily through its ability to execute within a sandboxed runtime. This runtime, often provided by a serverless platform, manages the execution of Wasm modules, providing isolation and security. The serverless platform typically handles the invocation of these modules based on triggers, such as HTTP requests, database events, or scheduled tasks.

- Runtime Environments: Serverless platforms provide specialized runtimes that can execute Wasm modules. These runtimes compile or interpret the Wasm bytecode and mediate the module’s access to the underlying operating system and hardware resources. Examples include runtimes based on the Wasmtime or Wasmer engines.

- Invocation Mechanisms: Wasm modules are invoked in serverless environments in response to specific events. These events can be triggered by HTTP requests, message queues, or other event sources supported by the serverless platform. The platform’s infrastructure manages the routing of events to the appropriate Wasm module.

- Resource Management: Serverless platforms manage the allocation of resources, such as CPU time, memory, and network bandwidth, to Wasm modules. This resource management is crucial for ensuring the scalability and efficiency of serverless applications. Platforms often employ mechanisms like containerization or function-as-a-service (FaaS) architectures to isolate and manage Wasm modules.

- Sandboxing and Security: Wasm’s inherent sandboxing capabilities are crucial in serverless environments. The runtime enforces strict isolation between the Wasm module and the host system, preventing unauthorized access to resources and mitigating security vulnerabilities. This sandboxing ensures that untrusted code can be safely executed within the serverless infrastructure.
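The invocation and resource-management roles described above can be sketched as a small routing table. This is an illustrative model only: `ModuleBinding`, `Dispatcher`, and the trigger names are hypothetical, and a plain Python callable stands in for a function exported by a Wasm module.

```python
from dataclasses import dataclass
from typing import Callable, Dict

WASM_PAGE_BYTES = 64 * 1024  # Wasm linear memory is sized in 64 KiB pages


@dataclass(frozen=True)
class ModuleBinding:
    name: str                            # hypothetical module identifier
    entrypoint: Callable[[dict], dict]   # stand-in for an exported Wasm function
    max_memory_pages: int                # limit a platform would apply at instantiation

    @property
    def memory_limit_bytes(self) -> int:
        return self.max_memory_pages * WASM_PAGE_BYTES


class Dispatcher:
    """Routes incoming events to the module bound to their trigger type."""

    def __init__(self) -> None:
        self._routes: Dict[str, ModuleBinding] = {}

    def bind(self, trigger: str, binding: ModuleBinding) -> None:
        self._routes[trigger] = binding

    def dispatch(self, event: dict) -> dict:
        binding = self._routes.get(event["trigger"])
        if binding is None:
            return {"status": 404,
                    "error": f"no module bound to {event['trigger']!r}"}
        result = binding.entrypoint(event["payload"])
        return {"status": 200, "module": binding.name, "result": result}
```

In this sketch the memory limit is only recorded on the binding; a real platform would enforce it, along with CPU time limits, when it instantiates the module.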

Enhancements of Serverless Capabilities Through WebAssembly

WebAssembly significantly enhances several key capabilities of serverless computing, offering advantages in performance, portability, and developer experience. By enabling faster execution, promoting cross-platform compatibility, and improving security, Wasm expands the potential of serverless applications.

- Performance Improvements: Wasm modules typically execute faster than interpreted code or code running in traditional virtual machines. This is due to Wasm’s compact binary format and its ability to execute at near-native speeds. This performance gain is particularly noticeable in computationally intensive tasks, such as image processing, data analysis, and machine learning. For example, a Wasm-based image processing function might complete a task 20-30% faster than a similar function written in JavaScript, although the actual gain depends on the workload and runtime.

- Portability and Vendor Neutrality: Wasm modules are designed to be platform-agnostic, meaning they can run on various operating systems and hardware architectures without modification. This portability reduces vendor lock-in, as developers can deploy their Wasm modules across different serverless platforms and cloud providers. This flexibility allows for easier migration between platforms and the ability to choose the best platform for specific needs.

- Security Enhancements: Wasm’s sandboxed execution environment provides a strong security boundary. The runtime restricts the module’s access to system resources, preventing malicious code from compromising the serverless infrastructure. This sandboxing mitigates the risk of vulnerabilities, making serverless applications more secure. For instance, Wasm can be used to isolate potentially unsafe third-party libraries within a serverless function, limiting their impact on the overall system.

- Code Reuse and Modularity: Wasm promotes code reuse by allowing developers to create reusable modules that can be invoked across different serverless functions. This modularity improves code organization, reduces redundancy, and facilitates the development of complex serverless applications. Developers can build libraries in languages like Rust or C++ and compile them to Wasm for use in serverless environments.

- Cold Start Reduction: Wasm’s fast startup times can help mitigate the cold start problem, where serverless functions experience a delay when they are first invoked. The lightweight nature of Wasm modules allows for quicker loading and initialization, leading to reduced latency. This improvement is crucial for applications that require low-latency responses, such as web APIs and interactive applications.

Comparison of Serverless Applications: With and Without WebAssembly

Comparing serverless applications with and without WebAssembly highlights the significant advantages that Wasm brings to the table. The differences are most apparent in performance, portability, and the level of control developers have over the execution environment.

| Feature | Serverless Without Wasm | Serverless With Wasm |
|---|---|---|
| Performance | Often relies on interpreted languages or virtual machines, leading to slower execution speeds, especially for computationally intensive tasks. | Executes at near-native speeds, providing significant performance gains, particularly for CPU-bound operations. |
| Portability | Typically tied to specific platform environments, potentially leading to vendor lock-in. | Highly portable, allowing deployment across various serverless platforms and cloud providers. |
| Security | Security depends on the platform’s security model and the code’s implementation. | Offers a sandboxed execution environment, enhancing security by isolating the module from the host system. |
| Language Support | Limited to languages supported by the serverless platform (e.g., JavaScript, Python, Go). | Supports a wider range of languages, as code can be compiled to Wasm from languages like Rust, C++, and others. |
| Resource Utilization | Can be less efficient in resource usage, especially with interpreted languages or virtual machines. | Generally more efficient in resource utilization due to Wasm’s compact size and optimized execution. |
| Developer Experience | Developers are often constrained by the platform’s limitations. | Offers developers greater flexibility and control over the execution environment. |

For example, consider an image resizing service. Without Wasm, the service might be written in JavaScript and run on a Node.js runtime within a serverless function. With Wasm, the image processing logic could be written in Rust, compiled to Wasm, and executed within a Wasm runtime on the serverless platform. The Wasm version would likely perform the resizing operation faster, consume fewer resources, and be more portable across different cloud providers.

Advantages of Wasm in Serverless: Performance

WebAssembly (Wasm) offers several compelling advantages in the context of serverless computing, significantly impacting the performance of function execution. These improvements stem from Wasm’s design, which prioritizes efficient execution and portability. The following sections delve into how Wasm enhances serverless performance, providing concrete examples and comparative data to illustrate these benefits.

Performance Optimization with WebAssembly

Wasm’s inherent characteristics contribute to superior performance in serverless environments. Wasm modules are compiled to a compact binary format that is optimized for efficient execution by a WebAssembly runtime. This contrasts with traditional serverless function execution, which often involves interpreting code written in languages like JavaScript or Python, leading to higher overhead.

- Near-Native Performance: Wasm achieves near-native performance because the runtime translates the module into machine code that runs on the host with minimal overhead, reducing latency and increasing throughput. This contrasts with interpreted languages, where each instruction must be decoded and dispatched at runtime.

- Efficient Code Size: Wasm modules are typically smaller than equivalent code written in other languages, reducing the time required for function deployment and loading. This is especially crucial in serverless environments where function startup time is a critical performance metric.

- Sandboxing and Security: Wasm’s sandboxed execution environment isolates functions, enhancing security. This isolation also supports predictable performance by preventing interference between functions and keeping each function’s execution separate from the host environment.

- Portability: Wasm’s portability allows functions to run across different operating systems and architectures without modification. This versatility facilitates efficient deployment and scaling of serverless applications.

- Optimized Execution: Wasm runtimes use techniques such as ahead-of-time (AOT) compilation and just-in-time (JIT) compilation to further optimize code execution. AOT compilation converts Wasm code to native machine code before runtime, whereas JIT compilation compiles and optimizes code while it runs.

Examples of Performance Gains

Real-world examples showcase the performance benefits of using Wasm in serverless functions.

- Image Processing: Wasm can accelerate image processing tasks, such as resizing and filtering, by leveraging its ability to perform computationally intensive operations efficiently. Functions written in languages like C++ and compiled to Wasm can outperform JavaScript-based image processing libraries in serverless environments. For example, using Wasm for image compression can reduce processing time, leading to improved responsiveness for web applications.

- Data Serialization/Deserialization: Wasm can accelerate the serialization and deserialization of data formats, such as JSON or Protocol Buffers. Functions compiled to Wasm can process large datasets more quickly than their interpreted counterparts, resulting in faster data retrieval and manipulation.

- Machine Learning Inference: Wasm allows the execution of machine learning models within serverless functions. By compiling models to Wasm, developers can reduce latency and improve the performance of inference tasks.

- Cryptographic Operations: Wasm’s speed makes it suitable for cryptographic operations within serverless functions, such as encryption and decryption. Wasm-based cryptographic libraries can provide faster and more secure alternatives to interpreted libraries.

Comparative Execution Speed Analysis

The following table provides a comparison of the execution speed of serverless functions with and without Wasm, based on benchmark tests. These tests were conducted using similar serverless platforms, workloads, and hardware configurations. The data demonstrates the performance advantages that Wasm provides.

| Function Type | Baseline Language/Runtime | Execution Time (Without Wasm) | Execution Time (With Wasm) | Performance Improvement |
|---|---|---|---|---|
| Image Resizing | JavaScript/Node.js | 850 ms | 350 ms | 58.8% |
| JSON Parsing | Python/CPython | 1200 ms | 600 ms | 50% |
| SHA-256 Hashing | JavaScript/Node.js | 90 ms | 35 ms | 61.1% |
| Data Compression | Python/CPython | 1500 ms | 750 ms | 50% |

The table presents benchmark data, showing a significant performance increase when Wasm is employed. The performance improvement is calculated as:

((Execution Time Without Wasm - Execution Time With Wasm) / Execution Time Without Wasm) × 100

For image resizing, this gives ((850 - 350) / 850) × 100 ≈ 58.8%.

These results highlight Wasm’s capability to substantially improve the speed of serverless function execution.
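The improvement column can be reproduced with a few lines of Python. The percentage is the time saved divided by the baseline time, multiplied by 100. The function name `improvement_percent` and the `BENCHMARK_ROWS` dictionary are illustrative; the timings are copied from the table above.

```python
def improvement_percent(without_wasm_ms: float, with_wasm_ms: float) -> float:
    """Relative reduction in execution time, expressed as a percentage."""
    if without_wasm_ms <= 0:
        raise ValueError("baseline execution time must be positive")
    return (without_wasm_ms - with_wasm_ms) / without_wasm_ms * 100.0


# (time without Wasm, time with Wasm) in milliseconds, taken from the table
BENCHMARK_ROWS = {
    "Image Resizing": (850, 350),
    "JSON Parsing": (1200, 600),
    "SHA-256 Hashing": (90, 35),
    "Data Compression": (1500, 750),
}

if __name__ == "__main__":
    for name, (before, after) in BENCHMARK_ROWS.items():
        print(f"{name}: {improvement_percent(before, after):.1f}%")
```

Note that a 50% improvement by this measure means the function runs in half the time, that is, twice as fast.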

Advantages of Wasm in Serverless: Portability and Security

WebAssembly (Wasm) offers significant advantages in the serverless computing paradigm, primarily focusing on enhanced portability and security. These benefits stem from Wasm’s design as a platform-agnostic bytecode format and its inherent security features. This section will explore how Wasm promotes portability across diverse serverless platforms and details its security enhancements, providing a comprehensive understanding of its impact on serverless application development.

Portability Across Serverless Platforms

Wasm’s portability is a cornerstone of its appeal in serverless environments. Because Wasm code compiles to a standardized bytecode format, it can execute on any platform with a Wasm runtime, regardless of the underlying infrastructure or operating system. This capability drastically reduces vendor lock-in and simplifies deployment across various serverless providers.

Wasm’s architecture facilitates cross-platform compatibility, allowing developers to write code once and deploy it across different serverless platforms with minimal modifications.

This portability contrasts with traditional serverless functions often tied to specific runtimes or languages, increasing the complexity of multi-cloud deployments.
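Part of what makes the format portable is that every Wasm binary starts with the same header (the bytes `\0asm` followed by a little-endian version number) and is then split into sections, each with an ID and a LEB128-encoded size. The sketch below walks that layout in plain Python. `ADD_MODULE` is a small hand-assembled module that exports an `add` function, included only as sample input; the helper names are assumptions for illustration.

```python
import struct
from typing import List, Tuple

WASM_MAGIC = b"\x00asm"

# Minimal hand-assembled module exporting add(i32, i32) -> i32.
ADD_MODULE = bytes([
    0x00, 0x61, 0x73, 0x6D, 0x01, 0x00, 0x00, 0x00,                     # magic, version 1
    0x01, 0x07, 0x01, 0x60, 0x02, 0x7F, 0x7F, 0x01, 0x7F,               # type section
    0x03, 0x02, 0x01, 0x00,                                             # function section
    0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00,               # export section
    0x0A, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6A, 0x0B,   # code section
])


def read_uleb128(data: bytes, pos: int) -> Tuple[int, int]:
    """Decode an unsigned LEB128 integer; return (value, next_position)."""
    value, shift = 0, 0
    while True:
        byte = data[pos]
        pos += 1
        value |= (byte & 0x7F) << shift
        if (byte & 0x80) == 0:      # high bit clear marks the last byte
            return value, pos
        shift += 7


def list_sections(data: bytes) -> List[Tuple[int, int]]:
    """Validate the header, then return (section_id, payload_size) pairs."""
    if data[:4] != WASM_MAGIC:
        raise ValueError("not a WebAssembly binary")
    (version,) = struct.unpack_from("<I", data, 4)
    if version != 1:
        raise ValueError(f"unsupported version: {version}")
    sections, pos = [], 8
    while pos < len(data):
        section_id = data[pos]
        size, pos = read_uleb128(data, pos + 1)
        sections.append((section_id, size))
        pos += size                 # skip over the section payload
    return sections
```

Any conforming runtime reads the same bytes in the same way, which is why a module built once can be loaded on different hosts. What differs between platforms is the set of host functions a module may import, so that is where porting effort tends to concentrate.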

Security Enhancements with Wasm

Wasm’s design incorporates several security features that enhance the safety of serverless applications. These features are crucial in serverless environments, where code from untrusted sources often executes. Wasm’s security model is built around isolation, sandboxing, and deterministic execution, mitigating many common security risks.

Wasm’s security advantages include:

- Isolation: Wasm modules operate within a secure sandbox, preventing direct access to the host system’s resources. This isolation limits the potential damage a compromised module can inflict. The sandbox acts as a barrier, restricting access to memory and system calls, protecting the underlying infrastructure.

- Sandboxing: Wasm runtimes provide a sandboxed environment where code executes. This sandboxing ensures that Wasm modules cannot interfere with other processes or access sensitive data outside their designated boundaries. The sandbox is implemented using a combination of techniques, including memory protection, system call interception, and capability-based security models.

- Deterministic Execution: Wasm execution is largely deterministic: given the same inputs and the same results from host-provided imports, a module produces the same outputs, apart from a small number of documented exceptions such as NaN bit patterns, resource exhaustion, and threads. This predictability is valuable for security, as it makes it easier to analyze and verify the behavior of Wasm modules. It also simplifies the process of reproducing and mitigating security vulnerabilities.

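- Memory Safety: Wasm’s memory model confines each module to its own bounds-checked linear memory. A module cannot read or write outside its allocated space, and an out-of-bounds access stops execution with a trap. The call stack and code are kept separate from linear memory, which blocks common exploitation techniques such as overwriting return addresses. A bug such as a buffer overflow in C code compiled to Wasm can still corrupt data inside that module’s own memory, but it cannot reach the host or other modules. This containment significantly enhances the overall security posture of serverless applications.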

- Capability-Based Security: Wasm can be integrated with capability-based security models, where modules are granted access to specific resources based on their capabilities. This approach allows for fine-grained control over resource access, minimizing the attack surface. For example, a Wasm module might be granted access to a specific file or network connection, while being denied access to other resources.
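Two of the mechanisms listed above, bounds-checked memory and capability-based access, can be modeled in a few lines. This is a conceptual sketch rather than how any real runtime is implemented: `Trap`, `LinearMemory`, `Sandbox`, and the host function names are all hypothetical.

```python
from typing import Callable, Dict, Iterable

PAGE_SIZE = 64 * 1024  # Wasm linear memory grows in 64 KiB pages


class Trap(Exception):
    """Raised when the sandbox stops execution, mirroring a Wasm trap."""


class LinearMemory:
    """A fixed block of bytes; every access is checked against its bounds."""

    def __init__(self, pages: int) -> None:
        self._data = bytearray(pages * PAGE_SIZE)

    def _check(self, addr: int, length: int) -> None:
        if addr < 0 or length < 0 or addr + length > len(self._data):
            raise Trap(f"out-of-bounds access at {addr} (+{length})")

    def store(self, addr: int, payload: bytes) -> None:
        self._check(addr, len(payload))
        self._data[addr:addr + len(payload)] = payload

    def load(self, addr: int, length: int) -> bytes:
        self._check(addr, length)
        return bytes(self._data[addr:addr + length])


class Sandbox:
    """Gives a module its own memory plus only the host functions it was granted."""

    def __init__(self, pages: int,
                 host_functions: Dict[str, Callable[..., object]],
                 granted: Iterable[str]) -> None:
        self.memory = LinearMemory(pages)
        # Only explicitly granted imports are visible to the module.
        self._imports = {name: host_functions[name] for name in granted}

    def call_host(self, name: str, *args: object) -> object:
        if name not in self._imports:
            raise Trap(f"capability not granted: {name}")
        return self._imports[name](*args)
```

A module instantiated with `granted=["log"]` can log but cannot call a `read_file` host function, even though the host defines one. The default is no access, and each capability must be handed over explicitly.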

Common Serverless Platforms and Wasm Support

The integration of WebAssembly (Wasm) into serverless platforms is rapidly evolving, with various providers adopting and implementing Wasm support to enhance performance, portability, and security. This section identifies major serverless platforms that currently offer Wasm support, detailing their implementation strategies and the level of integration achieved. Understanding the specific approaches of each platform is crucial for developers looking to leverage Wasm’s benefits within a serverless environment.

Platform Implementations of Wasm Support

Several prominent serverless platforms have begun to embrace WebAssembly, offering varying levels of integration and support for Wasm modules. The specifics of how each platform implements Wasm support can differ significantly, impacting the developer experience, performance characteristics, and the types of applications that can be effectively deployed.

The following table provides a comparative overview of the major serverless platforms that support Wasm, their chosen Wasm runtime, and the level of support currently offered. The level of support is categorized to provide a general understanding of the feature set provided by each platform.

| Platform | Wasm Runtime | Level of Support | Key Features and Considerations |
|---|---|---|---|
| Cloudflare Workers | V8 | Mature | Cloudflare Workers runs on V8 isolates, and Wasm modules execute through V8’s built-in WebAssembly support, typically invoked from JavaScript. Developers can use various programming languages that compile to Wasm, like Rust, C/C++, and AssemblyScript. Cloudflare’s global network infrastructure ensures low-latency execution. |
| Deno Deploy | V8 | Advanced | Deno Deploy is built on the Deno runtime, which embeds V8, and executes Wasm modules through the standard WebAssembly JavaScript API. This platform emphasizes security and isolation. Deno Deploy allows developers to execute Wasm modules seamlessly alongside JavaScript/TypeScript code. |
| AWS Lambda (via custom runtimes) | Various | Experimental | AWS Lambda does not directly support Wasm in the same way as Cloudflare Workers or Deno Deploy. However, developers can create custom runtimes that incorporate Wasm runtimes, such as Wasmtime or Wasmer, allowing them to execute Wasm modules within Lambda functions. This approach provides flexibility but requires more configuration and management. |
| Fastly Compute@Edge | Lucet originally, later Wasmtime | Mature | Fastly Compute@Edge is designed to execute Wasm code at the edge. It originally used Lucet, a WebAssembly compiler and runtime developed by Fastly, and has since moved to Wasmtime. Compute@Edge is optimized for performance and security, and is particularly well-suited for content delivery, API gateways, and other edge computing applications. Fastly provides a developer-friendly environment for writing, building, and deploying Wasm modules. |

Use Cases

WebAssembly’s (Wasm) ability to run efficiently across different environments makes it an excellent choice for serverless functions. Its performance characteristics and security features are particularly beneficial in various practical scenarios, offering advantages over traditional approaches. The following sections explore specific use cases where Wasm excels in serverless architectures.

Image Processing

Image processing is a common task in web applications, involving operations like resizing, cropping, and applying filters. Wasm can significantly enhance the performance and portability of these operations within serverless functions.

The utilization of Wasm in image processing offers several benefits:

- Performance: Wasm allows computationally intensive image processing tasks to be executed at near-native speeds. This is crucial in serverless environments where resources are often limited.

- Portability: Wasm modules can be deployed across different serverless platforms without modification, ensuring consistent behavior regardless of the underlying infrastructure.

- Security: Wasm’s sandboxed environment provides enhanced security, limiting the access of image processing functions to system resources.

The data flow in a serverless function using Wasm for image processing can be described as a series of connected stages:

1. Trigger: An event, such as an HTTP request containing an image or a file upload event from an object storage service, triggers the serverless function.

2. Input Image: The image data is received by the function. This image can be sourced from a variety of locations such as a CDN or a user upload.

3. Wasm Module Invocation: The serverless function invokes a pre-compiled Wasm module. This module contains the image processing logic, which can include tasks like resizing, cropping, or applying filters.

4. Wasm Execution Environment: The Wasm module executes within a secure, sandboxed environment provided by the serverless platform. This environment ensures that the Wasm module cannot access system resources directly, enhancing security.

5. Image Processing Operations (within Wasm): The Wasm module performs the requested image processing operations. The image data is processed based on the logic defined within the module.

6. Output Image: The processed image data is generated as output from the Wasm module.

7. Function Output: The serverless function receives the processed image data.

8. Output Delivery: The serverless function stores the processed image to an object storage service (like AWS S3, Google Cloud Storage, or Azure Blob Storage) or sends the processed image as an HTTP response back to the client.

This workflow ensures that the image processing tasks are handled efficiently and securely within the serverless environment, making it a scalable and cost-effective solution.
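The eight steps above can be sketched end to end in a few lines. This is a simplified model under stated assumptions: images are grayscale pixel grids held in nested lists, a dictionary stands in for object storage, and `resize_nearest` is a pure-Python stand-in for the logic that would in practice be compiled to Wasm from a language such as Rust or C++. All names are hypothetical.

```python
from typing import Dict, List

Image = List[List[int]]  # grayscale pixels, one inner list per row


def resize_nearest(image: Image, new_width: int, new_height: int) -> Image:
    """Nearest-neighbour resize; stand-in for logic compiled to Wasm."""
    if new_width <= 0 or new_height <= 0:
        raise ValueError("target dimensions must be positive")
    old_height, old_width = len(image), len(image[0])
    return [
        [image[y * old_height // new_height][x * old_width // new_width]
         for x in range(new_width)]
        for y in range(new_height)
    ]


def handle_upload(event: Dict, storage: Dict[str, Image]) -> Dict:
    """Triggered by an upload event: read, process, store, respond."""
    source = storage[event["key"]]                      # steps 1-2: trigger and input
    resized = resize_nearest(source,                    # steps 3-6: module invocation
                             event["width"], event["height"])
    out_key = f"resized/{event['key']}"
    storage[out_key] = resized                          # steps 7-8: output delivery
    return {"status": 200, "key": out_key}
```

The handler itself stays thin: it moves data in and out, while the compute-heavy work sits behind a single function call, which is the boundary where a Wasm module would slot in.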

Video Transcoding

Video transcoding, which involves converting video files between different formats and resolutions, is another area where Wasm can provide significant advantages in serverless functions. This is a computationally intensive task that can benefit from Wasm’s performance characteristics.

The adoption of Wasm in video transcoding presents several advantages:

- Performance: Wasm enables near-native performance for video encoding and decoding operations, accelerating the transcoding process.

- Scalability: Serverless functions, combined with Wasm, can automatically scale to handle varying workloads, ensuring that video transcoding tasks are processed efficiently even during peak demand.

- Cost-Effectiveness: Serverless architectures allow for pay-per-use pricing, reducing operational costs compared to traditional infrastructure.

Example: A video streaming platform can use serverless functions with Wasm to transcode uploaded videos into multiple formats and resolutions. When a user uploads a video, the serverless function is triggered, invoking a Wasm module that performs the transcoding operations. The transcoded videos are then stored and served to users in their preferred formats, enhancing the user experience.
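One small piece of such a pipeline is deciding which output renditions to produce for a given upload. The Rust sketch below is only an illustration of that planning step: the `Rendition` type, the `LADDER` values, and the `plan_renditions` function are all hypothetical, and the heights and bitrates are placeholder numbers rather than recommendations. The actual encoding would be performed by a codec compiled into the Wasm module.

```rust
/// One target output of the transcoding job.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Rendition {
    pub height: u32,
    pub bitrate_kbps: u32,
}

/// Hypothetical rendition ladder, ordered from highest to lowest quality.
/// The values are illustrative placeholders only.
const LADDER: [Rendition; 4] = [
    Rendition { height: 1080, bitrate_kbps: 5000 },
    Rendition { height: 720, bitrate_kbps: 2800 },
    Rendition { height: 480, bitrate_kbps: 1400 },
    Rendition { height: 360, bitrate_kbps: 800 },
];

/// Select the renditions that do not exceed the source height,
/// so the pipeline never upscales the uploaded video.
pub fn plan_renditions(source_height: u32) -> Vec<Rendition> {
    LADDER
        .iter()
        .copied()
        .filter(|r| r.height <= source_height)
        .collect()
}
```

Each planned rendition could then be handed to a separate function invocation, which is where the automatic scaling of the serverless platform comes into play.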

Data Transformation

Data transformation tasks, such as parsing and converting data formats, are common in serverless applications. Wasm can be effectively used to handle these tasks, improving performance and portability.

Wasm offers several benefits in the context of data transformation:

- Efficiency: Wasm modules can efficiently parse and transform large datasets, reducing the processing time within serverless functions.

- Portability: Wasm modules are easily deployed across different serverless platforms, enabling consistent data transformation logic.

- Language Agnostic: Developers can write Wasm modules using various programming languages, providing flexibility in choosing the best tool for a given task.

Example: A serverless function can be used to transform data from a CSV file into a JSON format. When a new CSV file is uploaded to a cloud storage service, the serverless function is triggered. The function invokes a Wasm module that parses the CSV data and converts it into JSON. The transformed JSON data is then stored in a database or used for other applications.
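The transformation logic inside such a module might look like the following Rust sketch. It is deliberately minimal and makes several assumptions: the first non-empty line is a header row, fields contain no quoted or embedded commas, and every value is emitted as a JSON string. The function names `csv_to_json` and `json_escape` are hypothetical; a real module would normally use a dedicated CSV and JSON library.

```rust
/// Escape a string for use inside a JSON string literal.
fn json_escape(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Remaining control characters use the \u00XX form.
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// Convert simple CSV text (header row first, no quoted fields)
/// into a JSON array of objects with string values.
pub fn csv_to_json(csv: &str) -> String {
    let mut lines = csv.lines().filter(|l| !l.trim().is_empty());
    let headers: Vec<&str> = match lines.next() {
        Some(h) => h.split(',').map(str::trim).collect(),
        None => return "[]".to_string(),
    };
    let records: Vec<String> = lines
        .map(|line| {
            // Pair each header with the value in the same column;
            // surplus headers or values are ignored.
            let fields: Vec<String> = headers
                .iter()
                .zip(line.split(','))
                .map(|(k, v)| format!("\"{}\":\"{}\"", json_escape(k), json_escape(v.trim())))
                .collect();
            format!("{{{}}}", fields.join(","))
        })
        .collect();
    format!("[{}]", records.join(","))
}
```

Because the function only takes and returns text, the same module can be reused unchanged on any platform whose runtime can pass a byte buffer in and out.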

Methods for Deploying Wasm in Serverless

Deploying WebAssembly (Wasm) modules in a serverless environment requires a careful approach, considering factors such as platform compatibility, module size, and execution environment constraints. Several deployment methods have emerged, each with its own advantages and disadvantages depending on the specific serverless platform and the nature of the Wasm application. Understanding these methods is crucial for optimizing performance, security, and developer experience.

Deployment Methods

Different strategies exist for integrating Wasm modules within serverless functions. These methods often vary based on how the Wasm bytecode is packaged, loaded, and executed.

- Direct Upload and Execution: This is the most straightforward method. The Wasm module is directly uploaded to the serverless platform, alongside the function’s handler code. The platform’s runtime environment then loads and executes the Wasm module during function invocation. This method is generally supported by platforms that offer native Wasm support or provide a suitable runtime environment.

- Packaging with Dependencies: Wasm modules can be packaged with their dependencies, including any required libraries or runtime components. This packaging approach ensures that all necessary elements are present when the function is invoked, avoiding potential dependency conflicts or missing components. This can be done through tools like `wasm-pack` or by including the necessary files in a deployment package.

- Using a Wasm Runtime as a Library: Instead of directly executing the Wasm module, the serverless function can utilize a Wasm runtime library (e.g., wasmer, wasmtime) as a dependency. The function’s handler code then loads the Wasm module using the runtime library’s APIs and executes it. This approach provides greater control over the execution environment and allows for more advanced features, such as sandboxing and resource management.

- Containerization: Wasm modules can be deployed within containerized environments, such as Docker containers. The container encapsulates the Wasm module, its dependencies, and a Wasm runtime. The serverless platform then executes the container as a function. This method provides the highest degree of isolation and portability but may incur additional overhead due to container startup times.

- Leveraging Platform-Specific Integrations: Some serverless platforms offer specific integrations for Wasm, such as custom runtimes or dedicated Wasm execution environments. Because these integrations are tailored to the platform’s architecture, they simplify the deployment process and often provide better performance and security features than a generic setup.
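Whichever method is used, it can be worth checking that the uploaded artifact really is a Wasm binary before handing it to a runtime. The sketch below is one possible pre-deployment check, assuming a core Wasm module: such a binary begins with the four magic bytes `\0asm` followed by a little-endian version field, which is 1 for core modules. The `check_wasm_header` function and `HeaderError` type are hypothetical names, and the check does not replace the full validation a runtime performs.

```rust
/// The four bytes every Wasm binary starts with: "\0asm".
const WASM_MAGIC: [u8; 4] = [0x00, 0x61, 0x73, 0x6D];

#[derive(Debug, PartialEq, Eq)]
pub enum HeaderError {
    /// Fewer than the 8 bytes needed for magic plus version.
    TooShort,
    /// The file does not start with "\0asm".
    BadMagic,
    /// The version field is not 1 (the core module version).
    UnsupportedVersion(u32),
}

/// Cheap sanity check on the 8-byte preamble of a core Wasm module.
pub fn check_wasm_header(bytes: &[u8]) -> Result<(), HeaderError> {
    if bytes.len() < 8 {
        return Err(HeaderError::TooShort);
    }
    if bytes[0..4] != WASM_MAGIC {
        return Err(HeaderError::BadMagic);
    }
    let version = u32::from_le_bytes([bytes[4], bytes[5], bytes[6], bytes[7]]);
    if version != 1 {
        return Err(HeaderError::UnsupportedVersion(version));
    }
    Ok(())
}
```

A check like this fits naturally into a CI step or the function's initialization code, so that a wrong file is rejected early instead of failing at first invocation.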

Step-by-Step Procedure: Deploying a Simple Wasm Function on AWS Lambda (using Docker)

Deploying a Wasm function on AWS Lambda using Docker involves several steps, including building a Docker image containing the Wasm module and its runtime, uploading the image to Amazon Elastic Container Registry (ECR), and configuring the Lambda function to use the container image. This example uses a simple Wasm module written in Rust that returns a greeting.

- Create a Simple Wasm Module (Rust Example):

Create a new Rust project:

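cargo new wasm-greeting --lib
cd wasm-greeting

Add the `wasm-bindgen` crate to your `Cargo.toml`, and set the crate type to `cdylib` so that Cargo emits a standalone `.wasm` file:

[lib]
crate-type = ["cdylib"]

[dependencies]
wasm-bindgen = "0.2"

Edit `src/lib.rs`:

use wasm_bindgen::prelude::*;

#[wasm_bindgen]
pub fn greet(name: &str) -> String {
    format!("Hello, {}!", name)
}

Build the Wasm module (if the target is not yet installed, add it first with `rustup target add wasm32-unknown-unknown`):

cargo build --target wasm32-unknown-unknown --release

This generates a `wasm_greeting.wasm` file in the `target/wasm32-unknown-unknown/release/` directory.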

- Create a Dockerfile:

Create a file named `Dockerfile` in the root of your project with the following content. This example uses `wasmer` as the Wasm runtime.

FROM ubuntu:latest
# ca-certificates is needed for curl to download over HTTPS
RUN apt-get update && apt-get install -y --no-install-recommends ca-certificates curl
WORKDIR /app
COPY target/wasm32-unknown-unknown/release/wasm_greeting.wasm .
# The release archive contains a bin/ directory, so extract it under /usr/local
RUN curl -sSL https://github.com/wasmerio/wasmer/releases/download/4.2.1/wasmer-linux-amd64.tar.gz | tar xz -C /usr/local
CMD ["wasmer", "run", "wasm_greeting.wasm", "--invoke", "greet", "World"]

- Build the Docker Image:

Build the Docker image:

docker build -t wasm-greeting-lambda .

- Push the Docker Image to ECR:

Create an ECR repository in your AWS account (e.g., `wasm-greeting-lambda`). Then, authenticate your Docker client to ECR and push the image.

aws ecr get-login-password --region <your-region> | docker login --username AWS --password-stdin <your-account-id>.dkr.ecr.<your-region>.amazonaws.com
docker tag wasm-greeting-lambda:latest <your-account-id>.dkr.ecr.<your-region>.amazonaws.com/wasm-greeting-lambda:latest
docker push <your-account-id>.dkr.ecr.<your-region>.amazonaws.com/wasm-greeting-lambda:latest

- Create the Lambda Function:

In the AWS Lambda console, create a new function. Choose “Container image” and select the ECR image you pushed. Configure the function with appropriate memory, timeout, and execution role settings. The execution role should have permissions to access ECR and other required resources.

- Test the Function:

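Test the Lambda function by invoking it. The intended output is “Hello, World!”, matching the arguments passed to `greet` in the Dockerfile's `CMD` instruction. Two caveats apply to this minimal example. First, a Lambda container image must implement the Lambda Runtime API (typically through a runtime interface client or a custom `bootstrap`), so in practice a small handler has to wrap the `wasmer` call rather than relying on `CMD` alone. Second, a `wasm-bindgen` export that takes a `&str` relies on generated JavaScript glue to pass strings through linear memory, so a string argument cannot simply be supplied via `--invoke`; a function with numeric parameters, or a WASI program that reads its arguments, is easier to drive from the `wasmer` CLI. You can also configure API Gateway to trigger the function through an HTTP request.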

Best Practices for Deploying and Managing Wasm Modules

Adhering to best practices is essential for ensuring the efficient and secure deployment and management of Wasm modules in serverless environments. These practices encompass aspects such as code optimization, security considerations, and deployment strategies.

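- Optimize Wasm Module Size: Minimize the size of the Wasm module to reduce cold start times and improve overall performance. Techniques include compiling with size-oriented optimization settings, enabling link-time optimization, stripping debug information, removing unused code and dependencies, and post-processing the binary with a tool such as `wasm-opt` from Binaryen.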

- Secure the Wasm Execution Environment: Implement robust security measures to protect against potential vulnerabilities. This includes sandboxing the Wasm runtime, limiting access to system resources, and validating input data.

- Choose the Right Wasm Runtime: Select a Wasm runtime that is compatible with the serverless platform and provides the necessary features and performance characteristics. Consider factors such as security, performance, and support for specific Wasm proposals.

- Implement Proper Error Handling: Incorporate robust error handling mechanisms within the Wasm module and the serverless function code. This includes handling exceptions, logging errors, and providing informative error messages.

- Version Control and CI/CD: Utilize version control systems (e.g., Git) and continuous integration/continuous deployment (CI/CD) pipelines to manage Wasm module code and automate the deployment process. This helps to ensure code quality, consistency, and reproducibility.

- Monitor and Log: Implement comprehensive monitoring and logging to track the performance and behavior of Wasm modules. This includes monitoring metrics such as execution time, memory usage, and error rates. Utilize logging to capture relevant information for debugging and troubleshooting.

- Consider Platform-Specific Optimizations: Take advantage of any platform-specific optimizations or integrations offered by the serverless platform. This can include features such as optimized runtimes, caching mechanisms, and security enhancements.

- Regular Security Audits: Conduct regular security audits of the Wasm modules and the serverless function code to identify and address any potential vulnerabilities. This helps to maintain a secure and resilient system.

- Use of a Wasm Module Registry: Consider using a Wasm module registry, such as WAPM or similar services, to manage and share Wasm modules. This can simplify module distribution and versioning.
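Two of these practices, validating input and handling errors explicitly, can be sketched in host-side Rust as follows. This is an illustration under stated assumptions rather than a prescribed design: `InvokeError`, `MAX_INPUT_BYTES`, `validate_input`, and `invoke_checked` are hypothetical names, the 1 MiB limit is an arbitrary placeholder, and the `run` closure stands in for whatever call the chosen Wasm runtime actually provides.

```rust
use std::fmt;

/// Errors the handler can report to its caller.
#[derive(Debug, PartialEq, Eq)]
pub enum InvokeError {
    EmptyInput,
    InputTooLarge { len: usize, max: usize },
    ModuleFailed(String),
}

impl fmt::Display for InvokeError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            InvokeError::EmptyInput => write!(f, "input is empty"),
            InvokeError::InputTooLarge { len, max } => {
                write!(f, "input is {} bytes, limit is {}", len, max)
            }
            InvokeError::ModuleFailed(msg) => write!(f, "Wasm module failed: {}", msg),
        }
    }
}

impl std::error::Error for InvokeError {}

/// Hypothetical upper bound on request payloads (1 MiB).
pub const MAX_INPUT_BYTES: usize = 1024 * 1024;

/// Reject payloads that are empty or larger than the configured limit.
pub fn validate_input(input: &[u8]) -> Result<&[u8], InvokeError> {
    if input.is_empty() {
        return Err(InvokeError::EmptyInput);
    }
    if input.len() > MAX_INPUT_BYTES {
        return Err(InvokeError::InputTooLarge { len: input.len(), max: MAX_INPUT_BYTES });
    }
    Ok(input)
}

/// Validate the input, then run the module call supplied as `run`,
/// converting its failure message into a typed error.
pub fn invoke_checked<F>(input: &[u8], run: F) -> Result<Vec<u8>, InvokeError>
where
    F: FnOnce(&[u8]) -> Result<Vec<u8>, String>,
{
    let input = validate_input(input)?;
    run(input).map_err(InvokeError::ModuleFailed)
}
```

Keeping validation outside the module means oversized or empty payloads are rejected before any Wasm instance is created, and the typed error gives logging and monitoring code something stable to match on.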

Tools and Technologies for Wasm in Serverless

The integration of WebAssembly (Wasm) into serverless environments necessitates a specialized toolchain for development, deployment, and management. These tools facilitate the compilation of code into Wasm modules, their optimization for serverless execution, and their integration with serverless platforms. Understanding these tools is crucial for developers seeking to leverage Wasm’s benefits in serverless architectures.

Essential Tools and Technologies

A comprehensive suite of tools is required to effectively develop and deploy Wasm modules within a serverless context. These tools encompass compilers, package managers, deployment frameworks, and runtime environments, each playing a vital role in the development lifecycle.

- Compilers and Toolchains: These are used to translate source code written in various programming languages into Wasm bytecode. Examples include Emscripten (for C/C++), the Rust compiler together with the `wasm-pack` build tool, and the AssemblyScript compiler (for a TypeScript-like language). These toolchains often provide optimization flags to minimize the size of the Wasm module and improve its performance.

- Package Managers: Tools such as npm or cargo, along with their associated package registries, are used to manage dependencies for Wasm projects. They allow developers to include pre-built libraries and modules, streamlining the development process. For example, using cargo for Rust projects provides dependency management and build automation, ensuring consistent builds across different environments.

- Serverless Deployment Frameworks: Frameworks like Serverless Framework, AWS SAM, and Azure Functions Core Tools provide the infrastructure for deploying Wasm modules to serverless platforms. These frameworks simplify the configuration and deployment process, allowing developers to focus on writing business logic.

- Wasm Runtimes: Serverless platforms utilize Wasm runtimes to execute the compiled Wasm modules. Examples include Wasmer, WasmEdge, and Wasmtime. These runtimes provide the necessary environment for executing the Wasm bytecode, including memory management, security sandboxing, and interaction with the host operating system.

- Monitoring and Debugging Tools: Tools like Wasm Inspector and browser developer tools, along with platform-specific monitoring services (e.g., AWS CloudWatch, Azure Monitor), are essential for debugging and monitoring the performance of Wasm modules in serverless applications. These tools provide insights into execution times, memory usage, and potential errors.

Common Languages for Wasm Compilation in Serverless

Several programming languages are commonly used to compile code into Wasm for serverless applications. The choice of language often depends on factors like performance requirements, existing codebase, and developer familiarity.

- Rust: Rust is a popular choice due to its strong performance, memory safety, and efficient compilation to Wasm. The `wasm-pack` tool simplifies the process of building and packaging Rust projects for Wasm. Rust’s focus on safety makes it well-suited for serverless environments, where security is paramount.

- C/C++: These languages can be compiled to Wasm using Emscripten. This allows developers to reuse existing C/C++ codebases in serverless functions. However, managing memory in C/C++ can be more complex compared to Rust.

- AssemblyScript: AssemblyScript is a TypeScript-like language that compiles directly to Wasm. It offers a familiar syntax for JavaScript developers, enabling them to write performant Wasm modules.

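- Go: Go can compile to Wasm through its standard toolchain (`GOOS=js GOARCH=wasm` for JavaScript hosts and, since Go 1.21, `GOOS=wasip1 GOARCH=wasm` for WASI runtimes), and TinyGo is often used when smaller binaries are needed. Go’s concurrency features can be leveraged in serverless applications.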

Demonstration of a Specific Tool: Rust and `wasm-pack`

The following demonstrates the usage of `wasm-pack` with Rust to build a simple Wasm module for a serverless function.

1. Project Setup:

Create a new Rust project using cargo:

cargo new --lib wasm-serverless-example

This command creates a new Rust library project named `wasm-serverless-example`.

2. Add `wasm-bindgen` Dependency:

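Add the `wasm-bindgen` crate to `Cargo.toml` to facilitate interaction between the Rust code and JavaScript, and set the crate type to `cdylib`, which `wasm-pack` requires in order to produce a `.wasm` file:

[lib]
crate-type = ["cdylib"]

[dependencies]
wasm-bindgen = "0.2"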

3. Write Rust Code:

Modify `src/lib.rs` to include a function that can be called from a serverless environment:

use wasm_bindgen::prelude::*;

#[wasm_bindgen]
pub fn add(a: i32, b: i32) -> i32 {
    a + b
}

This code defines a function `add` that takes two integers as input and returns their sum. The `#[wasm_bindgen]` attribute makes the function accessible from JavaScript or the serverless environment.

4. Build the Wasm Module:

Use `wasm-pack` to build the Wasm module:

wasm-pack build --target web

This command builds the Wasm module and generates JavaScript bindings in the `pkg` directory.

5. Deployment (Example with AWS Lambda):

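The `pkg` directory now contains the Wasm module (`wasm_serverless_example_bg.wasm`) and JavaScript bindings. These can be deployed to an AWS Lambda function, for example one using a Node.js runtime, whose built-in WebAssembly support loads the module so that the handler can invoke the `add` function. Note that `--target web` produces bindings intended for browsers; for a Node.js function, `wasm-pack build --target nodejs` generates bindings that can be loaded with `require`. Deployment is typically automated with a tool such as the Serverless Framework or AWS SAM.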
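When the module is meant to be called from a standalone Wasm runtime rather than through JavaScript bindings, a common alternative is to export the function under a stable, unmangled name without `wasm-bindgen`. The sketch below shows that variant of `add`; it assumes the crate is built as a `cdylib` for a Wasm target, and it is only an illustration of the approach, not a replacement for the `wasm-pack` workflow above.

```rust
/// Exported with the C ABI and an unmangled symbol name, so a Wasm
/// runtime can look the function up by the name "add".
/// Only plain numeric types are used in the signature, since those map
/// directly onto Wasm value types without any glue code.
#[no_mangle]
pub extern "C" fn add(a: i32, b: i32) -> i32 {
    // wrapping_add avoids a panic (and thus a Wasm trap) on overflow.
    a.wrapping_add(b)
}
```

Functions that need to exchange strings or byte buffers cannot use this form directly, because those values have to be passed through the module's linear memory; that is the work `wasm-bindgen` or a WASI-based interface takes care of.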

Challenges and Considerations

The adoption of WebAssembly (Wasm) in serverless computing, while promising significant benefits, is not without its challenges. These obstacles span across various aspects of the development lifecycle, from debugging and deployment to security and performance monitoring. Careful consideration of these challenges is crucial for successfully leveraging Wasm in serverless architectures.

Debugging, Monitoring, and Management of Wasm Modules

Debugging, monitoring, and managing Wasm modules in a serverless context present unique difficulties. The distributed nature of serverless applications, combined with the sandboxed environment of Wasm, complicates the traditional debugging and monitoring approaches. Effective strategies are needed to ensure application health and performance.

- Debugging Limitations: Debugging Wasm modules can be more complex than debugging native code. Standard debuggers might lack full support for Wasm, and the sandboxed environment can restrict access to system resources, making it harder to inspect program state and trace execution. The limited availability of source-level debugging, particularly when dealing with optimized Wasm modules, can hinder the process of identifying and resolving issues.

- Monitoring Challenges: Monitoring the performance and behavior of Wasm modules in serverless environments requires specific tools and techniques. Traditional monitoring solutions might not provide adequate visibility into the execution of Wasm code. Collecting metrics such as CPU usage, memory allocation, and function invocation times requires integrating specialized instrumentation within the Wasm module or leveraging platform-specific monitoring APIs.

- Management Complexity: Managing Wasm modules, including versioning, deployment, and updates, adds another layer of complexity. Coordinating the deployment of Wasm modules across multiple serverless functions and ensuring consistency across different environments can be challenging. Implementing robust update mechanisms that minimize downtime and maintain backward compatibility is also essential.

- Observability Requirements: The need for comprehensive observability is critical. This includes logging, tracing, and metrics collection. Log aggregation and correlation across multiple serverless functions, each potentially running Wasm modules, become crucial. Distributed tracing, which tracks the flow of requests through the system, allows for the identification of performance bottlenecks and error sources. Effective monitoring and alerting systems are needed to proactively identify and respond to issues.
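As a small example of the instrumentation mentioned above, the following Rust sketch wraps a call in a timer on the host side and renders the result as a single log line. All names here (`InvocationMetric`, `timed`, the `metric=wasm_invocation` log format) are hypothetical, and the closure passed to `timed` stands in for the actual call into a Wasm runtime. The timing is done in the host handler rather than inside the module, since clock access inside Wasm depends on what the runtime exposes.

```rust
use std::time::{Duration, Instant};

/// Outcome and duration of a single module invocation.
#[derive(Debug)]
pub struct InvocationMetric {
    pub name: String,
    pub elapsed: Duration,
    pub ok: bool,
}

impl InvocationMetric {
    /// Render the metric as one key=value line that a log
    /// aggregator can parse and correlate across functions.
    pub fn to_log_line(&self) -> String {
        format!(
            "metric=wasm_invocation name={} elapsed_us={} ok={}",
            self.name,
            self.elapsed.as_micros(),
            self.ok
        )
    }
}

/// Run `call`, measuring how long it takes and whether it succeeded.
/// The result is returned unchanged alongside the metric.
pub fn timed<T, E, F>(name: &str, call: F) -> (Result<T, E>, InvocationMetric)
where
    F: FnOnce() -> Result<T, E>,
{
    let start = Instant::now();
    let result = call();
    let metric = InvocationMetric {
        name: name.to_string(),
        elapsed: start.elapsed(),
        ok: result.is_ok(),
    };
    (result, metric)
}
```

Writing the log line to standard output is usually enough for a platform's logging service to pick it up, after which execution time and error rate can be derived from the collected lines.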

Future Trends and the Evolution of Wasm in Serverless

The future of Wasm in serverless computing is bright, with several trends pointing towards wider adoption and enhanced capabilities. Continuous innovation is expected to address current limitations and unlock new possibilities.

- Improved Tooling and Ecosystem: Expect improvements in the tooling and ecosystem surrounding Wasm and serverless. This includes enhanced debuggers, more comprehensive monitoring solutions, and more efficient build and deployment pipelines. The development of standardized interfaces and libraries will facilitate the creation of portable and reusable Wasm modules. The emergence of frameworks and platforms specifically designed for Wasm-based serverless applications will further streamline development and deployment processes.

- Enhanced Security and Isolation: Security will remain a key focus, with ongoing efforts to enhance the security and isolation of Wasm modules. This involves developing more robust sandboxing mechanisms, improving the security of Wasm runtimes, and providing tools for verifying the integrity and authenticity of Wasm modules. Hardware-assisted security features, such as Intel SGX, could be used to further secure the execution of Wasm code.

- Performance Optimization: Performance optimization will be crucial. This involves advancements in Wasm compilers, runtime engines, and the optimization of Wasm modules themselves. Techniques like ahead-of-time (AOT) compilation and just-in-time (JIT) compilation will be used to improve execution speed. Profiling tools and performance analysis techniques will help identify and address performance bottlenecks.

- Wasm in Edge Computing: Wasm will play an increasingly important role in edge computing, where low latency and efficient resource utilization are critical. The ability to execute Wasm modules at the edge allows for processing data closer to the source, reducing network latency and improving responsiveness. Wasm’s small size and portability make it well-suited for deployment on edge devices.

- Integration with AI/ML: The integration of Wasm with AI and machine learning (ML) will be a significant trend. Wasm can be used to deploy and execute ML models in serverless environments, enabling developers to build intelligent applications that can process data and make predictions in real-time. This will involve the development of Wasm-based ML frameworks and libraries.

- Standardization and Interoperability: Increased standardization and interoperability are expected. Efforts to standardize Wasm specifications and define common interfaces will facilitate the development of portable and reusable Wasm modules. This will enable developers to easily integrate Wasm modules from different sources and deploy them across various serverless platforms.

Last Word

In conclusion, the integration of WebAssembly with serverless computing presents a compelling opportunity to enhance the performance, portability, and security of modern applications. By enabling efficient code execution, cross-platform compatibility, and improved isolation, Wasm empowers developers to build more scalable and robust serverless solutions. As the technology continues to mature and gain wider adoption, the synergy between Wasm and serverless is poised to reshape the landscape of cloud computing, offering new possibilities for innovation and efficiency.

The future of serverless is undoubtedly intertwined with the continued evolution of WebAssembly.

FAQ

What are the primary performance benefits of using Wasm in serverless functions?

Wasm offers significant performance gains due to its efficient bytecode execution, reduced overhead compared to traditional containerization, and potential for optimized compilation for specific hardware architectures. This results in faster function execution times and reduced resource consumption.

How does Wasm enhance security in serverless environments?

Wasm enhances security through sandboxing, isolating the executed code from the underlying operating system. This isolation limits the potential impact of vulnerabilities and prevents malicious code from accessing sensitive resources. Wasm modules can also be cryptographically signed and verified, ensuring the integrity of the deployed code.

What programming languages can be used to write Wasm modules for serverless applications?

A wide range of languages can be compiled to Wasm, including Rust, C/C++, Go, and AssemblyScript. This flexibility allows developers to leverage their existing skills and choose the language best suited for their specific needs.

What are the main challenges of using Wasm in serverless?

Challenges include debugging and monitoring Wasm modules, as the tooling is still evolving. Dependency management and the size of Wasm modules can also pose challenges. Moreover, the performance benefits depend on the specific workload and the optimization efforts applied.