Cloud computing promises scalability and efficiency, yet less obvious charges can significantly affect your budget. Understanding these expenses is crucial for effective cloud management and cost optimization. This article examines where cloud costs come from and how to keep them under control.

From fundamental cost models and data transfer fees to security implementations and vendor lock-in implications, this analysis unveils the various factors that contribute to cloud spending. We will dissect pricing structures, examine optimization strategies, and highlight tools to empower you in controlling your cloud expenses. Furthermore, we’ll discuss compliance, performance, and scalability to offer a holistic view of cloud cost management.

Understanding the Basics

Cloud computing offers numerous benefits, but understanding its cost structures is crucial for effective financial management. This section delves into the fundamental pricing models and metrics employed by cloud providers, comparing them with traditional on-premises infrastructure. Understanding these elements empowers informed decision-making, optimizing cloud resource utilization and controlling expenses.

Cloud Computing Cost Structures

Cloud services are typically categorized into three primary models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Each model presents a different level of control and responsibility for the user, directly impacting the associated costs.

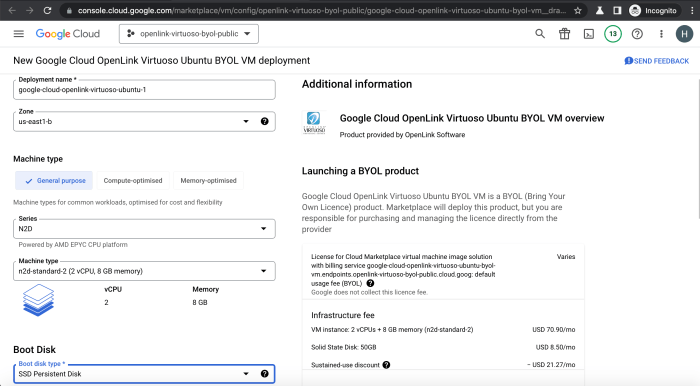

- Infrastructure as a Service (IaaS): IaaS provides access to fundamental computing resources, such as virtual machines, storage, and networks, over the internet. Users have the most control over the infrastructure, managing the operating system, middleware, and applications. The cost structure in IaaS is often based on a pay-as-you-go model, where users are charged for the resources they consume. Examples include Amazon Web Services (AWS) EC2, Microsoft Azure Virtual Machines, and Google Compute Engine. Costs are primarily driven by compute hours, storage used (GB/month), data transfer (GB), and the operating system or software licenses.

- Platform as a Service (PaaS): PaaS offers a platform for developing, running, and managing applications without the complexities of managing the underlying infrastructure. Developers can focus on the application code, while the provider handles the operating system, servers, storage, and networking. Pricing models in PaaS can vary, often based on the resources consumed by the application, such as compute hours, storage, and the number of users or transactions. Examples of PaaS offerings include AWS Elastic Beanstalk, Azure App Service, and Google App Engine. The cost depends on factors like application instances, database usage, and the amount of data processed.

- Software as a Service (SaaS): SaaS delivers software applications over the internet, on demand, typically on a subscription basis. Users access the software through a web browser or mobile app, without needing to manage the underlying infrastructure or software installation. SaaS pricing is usually subscription-based, often per user, per month, or based on the features used. Examples include Salesforce, Microsoft 365, and Google Workspace. Costs are determined by the number of users, features used, and storage consumed.

Core Pricing Metrics

Cloud providers employ various pricing metrics to determine the cost of their services. Understanding these metrics is essential for forecasting and controlling cloud expenses.

- Compute: This metric reflects the cost of processing power. It is often measured in terms of the virtual machine instance type (e.g., vCPUs, memory), the number of hours the instance runs, and the region where the instance is located. Different instance types have different pricing, with higher performance instances costing more. For example, a virtual machine with 4 vCPUs and 8GB of RAM might cost $0.10 per hour, while a machine with 16 vCPUs and 32GB of RAM could cost $0.40 per hour.

- Storage: This metric refers to the cost of storing data. Pricing is typically based on the amount of storage used (measured in gigabytes or terabytes) and the type of storage (e.g., object storage, block storage, archive storage). Different storage tiers offer varying levels of performance and availability, which also affect the cost. For example, frequently accessed data might be stored on a higher-performance, more expensive tier, while infrequently accessed data could be stored on a lower-cost archive tier.

- Bandwidth: This metric represents the cost of data transfer, both inbound and outbound. Outbound data transfer (data leaving the cloud provider’s network) is typically charged, while inbound data transfer is often free. Pricing is based on the amount of data transferred (measured in gigabytes or terabytes) and the destination. Data transfer within the same region is usually less expensive than data transfer across different regions or to the internet. For instance, transferring 1 GB of data out of AWS might cost $0.09, while transferring data within the same region could be free or cost a negligible amount.
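The three metrics above combine into a simple monthly estimate. The following is a minimal sketch, not a provider calculator: the compute and egress rates reuse the illustrative figures from the text, while the storage rate, the 730-hour month, and the names `Rates` and `monthly_cost` are assumptions made for this example.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation: 365 * 24 / 12


@dataclass(frozen=True)
class Rates:
    """Illustrative unit prices; real rates vary by provider, region, and tier."""
    compute_per_hour: float = 0.10       # 4 vCPU / 8 GB example from the text
    storage_per_gb_month: float = 0.023  # assumed rate, not from the text
    egress_per_gb: float = 0.09          # internet egress example from the text


def monthly_cost(instances: int, hours: float, storage_gb: float,
                 egress_gb: float, rates: Rates = Rates()) -> dict[str, float]:
    """Break a monthly bill into compute, storage, and egress components."""
    compute = instances * hours * rates.compute_per_hour
    storage = storage_gb * rates.storage_per_gb_month
    egress = egress_gb * rates.egress_per_gb
    return {
        "compute": round(compute, 2),
        "storage": round(storage, 2),
        "egress": round(egress, 2),
        "total": round(compute + storage + egress, 2),
    }


# Two always-on instances, 500 GB stored, 1,000 GB sent to the internet.
print(monthly_cost(instances=2, hours=HOURS_PER_MONTH,
                   storage_gb=500, egress_gb=1000))
```

Even in this small scenario the egress component is several times larger than storage, which is why bandwidth deserves its own line in any forecast.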

On-Premises vs. Cloud Cost Structures

The following table compares the cost structures of on-premises infrastructure and cloud-based solutions. This comparison highlights the key differences in cost components and financial implications.

| Feature | On-Premises Infrastructure | IaaS (Cloud) | SaaS (Cloud) |
|---|---|---|---|
| Capital Expenditure (CapEx) | Significant upfront investment in hardware, software licenses, and infrastructure. | Minimal upfront investment; pay-as-you-go model. | Typically, minimal to no upfront investment; subscription-based. |
| Operational Expenditure (OpEx) | Ongoing costs include electricity, cooling, maintenance, IT staff salaries, and software renewals. | Ongoing costs include compute, storage, bandwidth, and any managed services used. | Ongoing costs include subscription fees, which may vary based on usage or features. |
| Scalability | Limited scalability; requires purchasing and deploying additional hardware. | Highly scalable; resources can be provisioned and de-provisioned on demand. | Highly scalable; the provider handles scaling based on user needs. |
| Maintenance | Requires in-house IT staff or external consultants for hardware and software maintenance. | Provider manages infrastructure maintenance; user focuses on application and data. | Provider handles all maintenance and updates; user focuses on using the software. |
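The CapEx versus OpEx trade-off in the table can be made concrete with a break-even calculation. This is a rough sketch under simplifying assumptions: costs are flat per month, hardware refresh and discounting are ignored, and all figures and function names are hypothetical.

```python
import math
from typing import Optional


def cumulative_cost(upfront: float, monthly: float, months: int) -> float:
    """Total spend after a number of months, given an upfront and a monthly cost."""
    return upfront + monthly * months


def break_even_month(onprem_upfront: float, onprem_monthly: float,
                     cloud_monthly: float) -> Optional[int]:
    """First whole month at which on-premises spend no longer exceeds cloud spend.

    Returns None when the cloud's monthly cost is not higher than the
    on-premises monthly cost, because the upfront investment is then never recovered.
    """
    if cloud_monthly <= onprem_monthly:
        return None
    return math.ceil(onprem_upfront / (cloud_monthly - onprem_monthly))


# Hypothetical figures: 120,000 upfront and 3,000/month on-premises,
# versus 6,000/month in the cloud with no upfront cost.
month = break_even_month(120_000, 3_000, 6_000)
print(month, cumulative_cost(120_000, 3_000, month), cumulative_cost(0, 6_000, month))
```

A long break-even horizon favors the cloud's pay-as-you-go model; a short one suggests that steady, predictable workloads may be cheaper to own.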

Data Transfer and Egress Fees

Understanding data transfer and egress fees is crucial for accurately estimating the total cost of cloud computing. These fees, often overlooked in initial cost assessments, can significantly impact your budget, particularly for applications that involve frequent data movement. This section delves into the intricacies of data transfer charges, offering insights into how they are calculated and strategies to minimize these expenses.

Data Transfer Charges Explained

Data transfer charges in cloud computing encompass the costs associated with moving data into and out of the cloud. These charges are typically categorized into two main types: data ingress and data egress. Data ingress refers to the transfer of data into the cloud, while data egress refers to the transfer of data out of the cloud. While data ingress is often free, data egress typically incurs charges.

The pricing for data egress varies depending on the cloud provider, the region where the data is stored, and the destination of the data.

Scenarios with Significant Egress Fee Impacts

Certain usage patterns can lead to substantial egress fees, potentially increasing cloud costs.

- Large-Scale Data Downloads: Applications that involve frequent or large-volume downloads of data from the cloud, such as content delivery networks (CDNs) or data analytics platforms, are particularly susceptible to high egress fees.

- Data Backup and Disaster Recovery: Regularly backing up data from the cloud to an on-premises location or a different cloud provider involves data egress, generating costs. The frequency and volume of these backups directly influence the overall egress charges.

- Cross-Region Data Transfer: Moving data between different geographic regions within the same cloud provider can incur egress fees. This is especially relevant for applications that require global data distribution or disaster recovery solutions.

- Multi-Cloud Architectures: Utilizing a multi-cloud strategy, where data is transferred between different cloud providers, often leads to significant egress costs. Each provider charges for data egress, potentially doubling or tripling the transfer expenses.

- Data-Intensive Applications: Applications processing large datasets, such as machine learning models or video streaming services, are likely to incur high egress fees as data is frequently moved for processing, analysis, or distribution.

Minimizing Data Transfer Costs

Several strategies can be employed to mitigate data transfer costs and optimize cloud spending.

- Data Localization: Storing data in the region closest to the end-users or the data processing location can reduce egress charges, as data transfer within the same region is often cheaper or free.

- Data Compression: Compressing data before transferring it can reduce the amount of data that needs to be transferred, thereby lowering egress costs. Tools like gzip or other compression algorithms can be utilized.

- Caching: Implementing caching mechanisms, such as content delivery networks (CDNs) or in-memory caches, can reduce the frequency of data retrieval from the cloud, decreasing egress charges.

- Data Optimization: Analyzing data usage patterns and optimizing data access strategies can minimize unnecessary data transfers. This includes strategies like batching data requests and only retrieving necessary data.

- Choosing the Right Cloud Provider and Tier: Different cloud providers offer varying pricing models for data egress. Comparing prices and selecting the provider and storage tier that best aligns with your data transfer needs can significantly impact costs. Consider the different tiers available, such as standard, premium, or archival storage, which may have different egress fee structures.

- Monitoring and Analysis: Regularly monitoring data transfer usage and costs provides valuable insights into areas where optimization is needed. Analyzing logs and metrics can help identify applications or processes that generate high egress fees.

- Leveraging Data Transfer Services: Some cloud providers offer data transfer services, such as dedicated network connections or data transfer appliances, that can reduce data transfer costs for large datasets. These services often provide faster and more cost-effective data transfer options compared to standard data egress.
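The effect of the data compression strategy above can be estimated before committing to it. The sketch below uses Python's standard `gzip` module on a sample payload and applies the resulting ratio to a monthly transfer volume. The sample data, the 5,000 GB volume, and the helper names are assumptions for illustration, and the $0.09 rate is the example figure used earlier in this article.

```python
import gzip
import json

EGRESS_PER_GB = 0.09  # example rate from the text; check your provider's pricing


def compression_ratio(payload: bytes) -> float:
    """Compressed size divided by original size (lower means better compression)."""
    if not payload:
        return 1.0
    return len(gzip.compress(payload)) / len(payload)


def egress_savings(monthly_gb: float, ratio: float,
                   rate: float = EGRESS_PER_GB) -> float:
    """Estimated monthly savings if all transferred data compresses at `ratio`."""
    return monthly_gb * (1.0 - ratio) * rate


# Repetitive JSON, as found in logs or API responses, usually compresses well.
sample = json.dumps(
    [{"order_id": i, "status": "shipped", "region": "eu-west"} for i in range(1000)]
).encode("utf-8")

ratio = compression_ratio(sample)
print(f"ratio={ratio:.2f}, "
      f"estimated savings on 5,000 GB/month: ${egress_savings(5000, ratio):.2f}")
```

Already-compressed formats such as JPEG or MP4 will show a ratio close to 1.0, so it is worth measuring a representative sample of your own traffic rather than assuming a fixed saving.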

Storage Costs and Optimization

Cloud storage is a cornerstone of modern cloud computing, but understanding its costs and optimizing usage is crucial to avoid unexpected expenses. Cloud providers offer a variety of storage options, each with its own pricing structure and intended use case. Choosing the right storage solution and implementing effective optimization strategies can significantly impact your overall cloud bill.

Storage Options and Pricing

Cloud providers offer diverse storage options tailored to different needs and access patterns. These options are typically priced based on factors such as storage capacity, data access frequency, and data retrieval speed.

| Storage Type | Description | Typical Use Cases | Pricing Considerations |
|---|---|---|---|
| Object Storage | Highly scalable and durable storage for unstructured data (e.g., images, videos, documents). Data is stored as objects within buckets. | Data lakes, backups, archiving, content delivery, web application hosting. | Charged based on storage capacity, data transfer (egress), and request frequency. |
| Block Storage | Provides raw, block-level storage volumes that can be attached to virtual machines. | Operating system disks, databases, high-performance applications. | Charged based on storage capacity, I/O operations (input/output), and snapshot storage. |
| File Storage | Offers shared file systems accessible by multiple virtual machines or users. | File sharing, content management systems, application development. | Charged based on storage capacity, data transfer, and request frequency. |
| Archive Storage | Designed for infrequently accessed data with a focus on low cost. | Long-term data archiving, compliance requirements, disaster recovery. | Charged based on storage capacity and data retrieval costs. |

Storage Tiers and Cost Comparison

Cloud providers often categorize storage into tiers based on access frequency and performance characteristics. Each tier has a different cost structure, with higher-performance tiers typically costing more.

- Hot Storage: This tier is designed for frequently accessed data. It offers low latency and the lowest access charges, but the highest price per gigabyte stored. Amazon S3 Standard and Azure Blob Storage Hot are examples of hot storage.

- Cold Storage: Cold storage costs less per gigabyte stored than hot storage, typically in exchange for per-gigabyte retrieval fees and minimum storage durations. It is suitable for infrequently accessed data. Amazon S3 Standard-IA (Infrequent Access) and Azure Blob Storage Cool are examples of cold storage.

- Archive Storage: This tier is designed for data that is rarely accessed and offers the lowest storage cost. Retrieval times can be longer, making it suitable for archival purposes. Amazon S3 Glacier and Azure Blob Storage Archive are examples of archive storage.

The choice of storage tier should align with the access patterns of your data. Over-provisioning storage in a higher-performance tier can lead to unnecessary costs, while under-provisioning can impact application performance. Consider the following example: A company stores customer data, some of which is frequently accessed (e.g., recent orders) and some which is rarely accessed (e.g., historical purchase records). Moving the historical data to a cold or archive storage tier can result in significant cost savings.
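The tier decision comes down to comparing storage price against retrieval price for a given access pattern. The sketch below models that comparison with hypothetical rates. It ignores request charges, minimum storage durations, and retrieval latency, all of which matter in practice, and the tier names and prices are assumptions rather than any provider's published pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    storage_per_gb_month: float  # price to keep 1 GB for one month
    retrieval_per_gb: float      # price to read 1 GB back


# Hypothetical rates for illustration only.
TIERS = [
    Tier("hot", 0.023, 0.0),
    Tier("cold", 0.0125, 0.01),
    Tier("archive", 0.004, 0.03),
]


def tier_monthly_cost(tier: Tier, stored_gb: float, retrieved_gb: float) -> float:
    """Monthly cost of holding `stored_gb` and reading back `retrieved_gb`."""
    return (stored_gb * tier.storage_per_gb_month
            + retrieved_gb * tier.retrieval_per_gb)


def cheapest_tier(stored_gb: float, retrieved_gb: float) -> Tier:
    """Pick the tier with the lowest modeled monthly cost."""
    return min(TIERS, key=lambda t: tier_monthly_cost(t, stored_gb, retrieved_gb))


# 1,000 GB stored, with three different monthly read volumes.
for retrieved in (0, 500, 2000):
    best = cheapest_tier(1000, retrieved)
    print(f"{retrieved:>5} GB read/month -> {best.name} "
          f"(${tier_monthly_cost(best, 1000, retrieved):.2f})")
```

Under these assumed rates, rarely read data belongs in archive, occasionally read data in cold, and data read more than once a month in hot, which mirrors the recent-orders versus historical-records example above.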

Methods for Optimizing Storage Costs

Optimizing storage costs requires a proactive approach that considers data lifecycle management, storage tier selection, and other strategies. Implementing these methods can significantly reduce your cloud storage expenses.

- Data Lifecycle Management: This involves automating the movement of data between different storage tiers based on access frequency and age. Cloud providers offer features to define policies that automatically transition data from hot to cold or archive storage. For instance, Amazon S3 Lifecycle policies can be configured to move objects to a lower-cost tier once they reach a specified age.

- Storage Tier Selection: Choosing the appropriate storage tier for each dataset is crucial. Analyze your data access patterns to determine the optimal tier. Regularly review your storage tiering strategy to ensure it aligns with changing access patterns.

- Data Compression: Compressing data before storing it can reduce the amount of storage space required, leading to cost savings. This is particularly effective for text-based data and log files. Tools like gzip or more advanced compression algorithms can be employed.

- Data Deduplication: Deduplication identifies and eliminates redundant data, storing only unique data blocks. This reduces the overall storage footprint. Some storage solutions offer built-in deduplication capabilities.

- Object Versioning: While useful for data protection, object versioning can increase storage costs. Review your versioning policies and consider disabling it for less critical data or limiting the number of versions retained.

- Delete Unnecessary Data: Regularly review and delete data that is no longer needed. This can include temporary files, old backups, and obsolete data. Implement automated processes to identify and remove such data.

- Use Reserved Capacity or Committed Use Discounts: If your storage footprint is predictable, check whether your provider offers reserved capacity or committed use discounts for storage. Where available, these trade a term commitment for a lower price than on-demand rates.
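As an illustration of the data lifecycle management item above, the sketch below builds a lifecycle configuration in the shape Amazon S3 expects: a `Rules` list whose entries carry `Filter`, `Status`, `Transitions`, and optionally `Expiration`. The helper name, the prefix, and the day thresholds are hypothetical choices for this example. The code only constructs and prints the configuration and does not contact AWS.

```python
import json
from typing import Optional


def lifecycle_rule(prefix: str, cold_after_days: int, archive_after_days: int,
                   expire_after_days: Optional[int] = None) -> dict:
    """Build one rule that tiers objects down by age and optionally deletes them."""
    if not 0 < cold_after_days < archive_after_days:
        raise ValueError("expected 0 < cold_after_days < archive_after_days")
    rule = {
        "ID": "tier-down-" + (prefix.strip("/").replace("/", "-") or "all"),
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": cold_after_days, "StorageClass": "STANDARD_IA"},
            {"Days": archive_after_days, "StorageClass": "GLACIER"},
        ],
    }
    if expire_after_days is not None:
        if expire_after_days <= archive_after_days:
            raise ValueError("expiration must come after the archive transition")
        rule["Expiration"] = {"Days": expire_after_days}
    return rule


# Hypothetical policy: historical orders go cold after 30 days, archive after a year.
config = {"Rules": [lifecycle_rule("orders/history/", 30, 365)]}
print(json.dumps(config, indent=2))
```

With the boto3 SDK installed and credentials configured, a dictionary like this could be passed as the `LifecycleConfiguration` argument of the S3 client's `put_bucket_lifecycle_configuration` call. Other providers offer comparable policy features with their own schemas.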

Hidden Costs of Cloud Security

Cloud security, while offering significant advantages, introduces its own set of hidden costs. Understanding these expenses is crucial for accurately budgeting and managing cloud resources. This section delves into the shared responsibility model, security implementation costs, and the financial ramifications of security breaches.

Shared Security Responsibilities

Cloud security is not solely the responsibility of the cloud provider. Instead, it operates under a shared responsibility model. This model dictates that both the provider and the customer are accountable for specific aspects of security.

The cloud provider is responsible for securing the cloud infrastructure itself. This includes:

- Physical security of the data centers: This encompasses measures like access control, surveillance, and environmental controls to protect the physical hardware.

- Security of the underlying infrastructure: This involves securing the network, servers, storage, and virtualization layers that support the cloud services.

- Providing security services and features: Cloud providers often offer security tools and services, such as firewalls, intrusion detection systems, and identity and access management (IAM) solutions.

The customer is responsible for securing their data, applications, and access to the cloud resources. This includes:

- Data security: Protecting data at rest and in transit through encryption, access controls, and data loss prevention (DLP) measures.

- Identity and access management (IAM): Managing user identities, authentication, and authorization to control access to cloud resources.

- Application security: Securing applications deployed in the cloud, including vulnerability assessments, penetration testing, and code reviews.

- Security configuration: Properly configuring cloud services and resources to ensure they meet security requirements.

Understanding this shared responsibility model is vital for determining the customer’s security costs. Customers who neglect their share of these responsibilities expose themselves to vulnerabilities and potential financial losses.

Implementing Security Measures Expenses

Implementing security measures in the cloud can incur significant expenses that extend beyond the basic cloud service fees. These expenses typically fall into several categories:

- Security tools and services: This includes the cost of purchasing and deploying security solutions such as firewalls, intrusion detection and prevention systems (IDS/IPS), web application firewalls (WAFs), security information and event management (SIEM) systems, and vulnerability scanners.

- Security personnel: Hiring or contracting security professionals, such as security engineers, analysts, and incident responders, to manage and maintain security measures.

- Training and certifications: Investing in training and certifications for IT staff to ensure they have the skills and knowledge to implement and manage cloud security effectively.

- Compliance and auditing: Costs associated with achieving and maintaining compliance with industry regulations and standards, such as HIPAA, PCI DSS, and GDPR. This includes conducting security audits and implementing necessary controls.

- Configuration and management: The ongoing costs of configuring, managing, and monitoring security tools and services. This includes regular updates, patching, and tuning to maintain optimal security posture.

For example, implementing a WAF to protect web applications from attacks can involve initial setup costs, ongoing subscription fees, and the cost of managing and tuning the WAF rules. Similarly, investing in a SIEM system requires the initial investment in the system, along with the ongoing cost of data storage, log analysis, and incident response. The complexity and scale of security implementations will vary depending on the organization’s size, industry, and risk profile.

Escalating Cloud Costs due to Security Breaches

A security breach can significantly escalate cloud costs, and the financial impact extends far beyond the immediate cost of remediation. The ramifications can include:

- Incident response: The cost of investigating the breach, containing the damage, and restoring systems. This includes the time and resources of security professionals, forensic investigators, and potentially legal counsel.

- Data recovery: The cost of recovering lost or corrupted data, which may involve restoring from backups or engaging specialized data recovery services.

- Downtime: The financial impact of downtime, which can result in lost revenue, decreased productivity, and damage to reputation.

- Legal and regulatory fines: Penalties for non-compliance with data privacy regulations, such as GDPR or CCPA.

- Notification costs: The expenses associated with notifying affected individuals and regulatory bodies about the breach.

- Reputational damage: The loss of customer trust and the potential for long-term damage to the organization’s reputation, which can lead to lost business and decreased market value.

A real-world example is the 2017 Equifax data breach, which exposed the personal information of roughly 147 million people. The breach cost Equifax hundreds of millions of dollars in remediation efforts, legal fees, and settlements. The company also suffered significant reputational damage, including a decline in its stock price and a loss of customer trust. This case shows how severe the financial impact of a security breach can be.

Operational Overhead and Management Costs

Managing cloud resources effectively is a continuous process that demands significant time, effort, and financial investment. These costs are often overlooked during the initial cloud migration phase but can accumulate substantially over time, impacting the overall return on investment. Understanding these operational overheads is crucial for accurate cloud budgeting and cost optimization.

Monitoring and Automation Costs

Monitoring and automation are vital for maintaining the health, performance, and security of cloud environments. Implementing these capabilities, however, incurs costs associated with various tools and services.

- Monitoring Tools: Comprehensive monitoring solutions are essential for tracking resource utilization, identifying performance bottlenecks, and detecting security threats. These tools can range from open-source options to enterprise-grade platforms, each with varying pricing models. Consider the cost of tools like:

  - Application Performance Monitoring (APM) tools.

  - Infrastructure monitoring solutions.

  - Log management and analysis services.

- Automation Tools: Automating tasks such as provisioning, scaling, and configuration management reduces manual effort and improves efficiency. However, automation requires investment in:

  - Configuration management tools (e.g., Ansible, Chef, Puppet).

  - Orchestration and infrastructure-as-code tools (e.g., Kubernetes, Terraform).

  - Scripting and coding expertise to build and maintain automation scripts.

- Alerting and Notification Systems: Effective alerting systems are crucial for timely responses to incidents. Costs include:

  - Subscription fees for notification services.

  - Development and maintenance of alerting rules and dashboards.

Personnel Expenses for Cloud Management

Managing cloud environments requires skilled personnel with expertise in cloud technologies, security, and operations. The costs associated with hiring or training these individuals are a significant part of the operational overhead.

- Hiring Costs: Recruiting and onboarding cloud professionals can be expensive.

  - Salary and benefits for cloud engineers, architects, and security specialists.

  - Recruitment agency fees and costs associated with job postings.

- Training and Certification: Cloud technologies evolve rapidly, necessitating ongoing training to keep staff skills current.

  - Costs of training courses and certifications (e.g., AWS Certified Solutions Architect, Azure Solutions Architect Expert, Google Cloud Professional Cloud Architect).

  - Time spent on training, which can impact productivity.

- Staff Augmentation: In some cases, organizations may choose to supplement their internal teams with external consultants or managed service providers.

  - Fees for consulting services.

  - Ongoing support and maintenance costs.

Cloud Resource Management Process Flowchart and Cost Areas

The following flowchart illustrates the cloud resource management process, highlighting potential cost areas. This visual representation can aid in understanding the lifecycle of cloud resources and the associated expenses.

Flowchart Description: The process begins with the “Planning & Design” phase, where resource requirements are assessed, and the cloud infrastructure is designed. The cost areas here include:

- Planning & Design:

  - Consulting fees for cloud architects.

  - Cost modeling and simulation tools.

Next, “Provisioning & Deployment” takes place, where resources are created and configured. This involves:

- Provisioning & Deployment:

  - Automation tool costs (e.g., Terraform, Ansible).

  - Cost of the cloud resources themselves (compute, storage, networking).

The “Monitoring & Optimization” phase involves continuous tracking and adjustment of resources. Key cost areas include:

- Monitoring & Optimization:

  - Monitoring tool subscriptions.

  - Cost of performance tuning and optimization efforts.

The “Security & Compliance” stage ensures the environment is secure and meets regulatory requirements:

- Security & Compliance:

  - Security tool subscriptions (e.g., SIEM, vulnerability scanners).

  - Costs of compliance audits and assessments.

Finally, the “Maintenance & Support” phase involves ongoing operations and troubleshooting. Costs include:

- Maintenance & Support:

  - Staff salaries for operations teams.

  - Incident response and remediation costs.

The flowchart then loops back to Planning & Design, representing the continuous nature of cloud resource management. This cycle highlights the ongoing expenses associated with managing a cloud environment.

Vendor Lock-in and its Financial Implications

The potential for vendor lock-in is a significant consideration when adopting cloud computing services. It refers to the situation where a customer becomes overly reliant on a specific cloud provider’s services, making it difficult and costly to switch to another provider. This dependence can result in increased costs, reduced flexibility, and limited bargaining power. Understanding the nuances of vendor lock-in and its financial implications is crucial for making informed decisions about cloud adoption and management.

Identifying Vendor Lock-in in Cloud Services

Vendor lock-in manifests in various ways within the cloud environment. This can involve technical, contractual, and operational dependencies. Recognizing these factors is the first step in mitigating their impact.

- Proprietary Technologies and APIs: Cloud providers often offer unique services or use proprietary APIs that are not compatible with other platforms. This makes it challenging to migrate applications and data to a different provider without significant code rewriting or architectural changes. For example, a company heavily invested in a specific cloud provider’s database service, with custom extensions and features, would face considerable effort to move to a different database system on another cloud.

- Data Format and Storage Dependencies: Data stored in proprietary formats or specific storage solutions can be difficult to extract and transfer. Converting data formats and ensuring compatibility with a new platform can be time-consuming and expensive. Imagine a business storing large amounts of data in a cloud provider’s object storage with a unique data structure; migrating this data to another provider would require significant effort to adapt the data to the new platform’s structure.

- Contractual Obligations and Pricing Structures: Long-term contracts, volume discounts, and complex pricing models can create financial incentives to stay with a provider, even if better options become available. Early termination fees or the loss of significant discounts can make switching providers economically unfeasible. A company locked into a multi-year contract with a cloud provider, receiving substantial discounts based on committed usage, might find it financially disadvantageous to move to a new provider if they have to forfeit these discounts.

- Operational Complexity and Training: The specific tools, processes, and expertise required to manage a particular cloud provider’s services can create operational dependencies. Staff training and the development of internal expertise around a specific provider’s platform can also contribute to vendor lock-in. Organizations that have invested heavily in training their IT staff on a specific cloud provider’s platform would incur significant costs and delays in transitioning to a different platform.

Costs Associated with Migrating Data and Applications

Migrating data and applications between cloud providers can be a complex and expensive undertaking. The costs can be broadly categorized into several areas.

- Data Transfer Costs: Egress fees, or the charges for transferring data out of a cloud provider’s network, can be a significant expense, especially for large datasets. The cost varies depending on the volume of data and the destination.

- Application Re-platforming or Re-architecting: Applications designed for one cloud provider’s environment may need to be re-platformed or re-architected to work on a different provider’s platform. This involves code changes, infrastructure adjustments, and potentially, the adoption of new services. For example, an application built using a specific cloud provider’s serverless functions might require extensive modification to run on another provider’s serverless platform due to differences in function execution environments and API compatibility.

- Operational Costs and Downtime: The migration process itself can incur operational costs, including the time and effort of IT staff, and the potential for downtime during the transition. Downtime can lead to lost revenue and productivity.

- Training and Skill Gap: The need to retrain IT staff on a new platform or hire external consultants with the necessary expertise adds to the overall cost.

- Cost of Data Conversion: Data formats and structures might need conversion to ensure compatibility with the new provider. This process can be expensive and time-consuming.
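The cost categories above can be rolled up into a rough migration estimate. The following is a sketch under stated assumptions: every figure is hypothetical, data conversion effort is folded into engineering hours, and the class and field names are invented for this example.

```python
from dataclasses import dataclass

GB_PER_TB = 1024  # binary convention; some providers bill per 1,000 GB instead


@dataclass(frozen=True)
class MigrationEstimate:
    data_tb: float            # volume leaving the current provider
    egress_per_gb: float      # current provider's egress rate
    engineering_hours: float  # re-platforming plus data conversion effort
    hourly_rate: float
    downtime_hours: float
    revenue_per_hour: float   # revenue lost per hour of downtime
    training_cost: float

    def breakdown(self) -> dict[str, float]:
        """Return each cost category and the overall total."""
        parts = {
            "data_transfer": self.data_tb * GB_PER_TB * self.egress_per_gb,
            "re_platforming": self.engineering_hours * self.hourly_rate,
            "downtime": self.downtime_hours * self.revenue_per_hour,
            "training": self.training_cost,
        }
        parts["total"] = sum(parts.values())
        return parts


# Hypothetical migration: 50 TB of data, 800 engineering hours, 4 hours of downtime.
estimate = MigrationEstimate(
    data_tb=50, egress_per_gb=0.09,
    engineering_hours=800, hourly_rate=120,
    downtime_hours=4, revenue_per_hour=5_000,
    training_cost=15_000,
)
for category, cost in estimate.breakdown().items():
    print(f"{category:>15}: ${cost:,.2f}")
```

In this made-up scenario, engineering effort is far larger than the egress bill, a reminder that transfer fees are only one part of what it costs to leave a provider.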

Strategies for Mitigating Vendor Lock-in

Several strategies can help organizations mitigate the risks of vendor lock-in and maintain flexibility in their cloud strategy.

- Multi-Cloud Approach: Deploying applications and data across multiple cloud providers reduces dependence on a single vendor. This strategy allows organizations to leverage the strengths of different providers, avoid being locked into one platform, and negotiate better pricing. For example, a company could run its web applications on one provider and its data analytics on another.

- Adopting Open Standards and Technologies: Using open-source technologies, containerization (e.g., Docker, Kubernetes), and standardized APIs increases portability and reduces the impact of vendor-specific features. This allows for easier migration between providers.

- Designing for Portability: Building applications with a focus on portability from the outset is crucial. This involves decoupling components, using platform-agnostic services where possible, and designing applications with modular architectures.

- Using Abstraction Layers: Implementing abstraction layers can isolate applications from the underlying cloud infrastructure. Switching providers then mostly means replacing the layer's implementation rather than rewriting the application code that depends on it.

- Regularly Evaluating Cloud Costs and Performance: Regularly monitoring and evaluating the costs and performance of cloud services allows organizations to identify potential inefficiencies and make informed decisions about whether to switch providers.

- Negotiating Contracts: Negotiating cloud contracts that avoid unnecessarily long commitments and include clauses that facilitate data portability can help to reduce the risk of vendor lock-in.
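The abstraction-layer strategy above can be sketched as follows. This is a minimal illustration, assuming a hypothetical `BlobStore` interface; the two implementations here (in-memory and local disk) stand in for provider-specific adapters, which would wrap each provider's own SDK behind the same two methods.

```python
import tempfile
from pathlib import Path
from typing import Protocol


class BlobStore(Protocol):
    """Hypothetical storage interface the application depends on."""

    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in backend, useful for tests."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


class LocalDiskStore:
    """Stand-in backend; a provider-specific adapter would look similar."""

    def __init__(self, root: Path) -> None:
        self._root = root
        self._root.mkdir(parents=True, exist_ok=True)

    def put(self, key: str, data: bytes) -> None:
        (self._root / key).write_bytes(data)

    def get(self, key: str) -> bytes:
        return (self._root / key).read_bytes()


def archive_report(store: BlobStore, report_id: str, body: str) -> str:
    """Application code: knows only the interface, not the backend."""
    key = f"report-{report_id}.txt"
    store.put(key, body.encode("utf-8"))
    return key


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for store in (InMemoryStore(), LocalDiskStore(Path(tmp) / "blobs")):
            key = archive_report(store, "2024-q1", "quarterly totals")
            print(type(store).__name__, store.get(key))
```

The trade-off is that an interface like this exposes only the features every backend shares, so provider-specific capabilities have to be given up or added as optional extensions.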

Cost Optimization Tools and Techniques

Cloud cost optimization is a critical practice for managing and reducing cloud spending. It involves a combination of tools, strategies, and ongoing monitoring to ensure efficient resource utilization and minimize unnecessary expenses. Implementing effective cost optimization can significantly improve the return on investment (ROI) of cloud infrastructure.

Cloud Cost Management Tools and Functionalities

Cloud providers and third-party vendors offer a variety of tools designed to help organizations understand, manage, and optimize their cloud costs. These tools provide valuable insights into spending patterns, resource utilization, and potential areas for improvement.

- Cloud Provider Native Tools: These tools are integrated directly into the cloud provider’s platform and offer core cost management features.

- AWS Cost Explorer: Provides a visual interface for analyzing AWS costs and usage over time. Users can filter and group data by various dimensions (e.g., service, region, tag) to identify cost drivers. The tool also offers cost forecasting capabilities.

- Azure Cost Management + Billing: Offers comprehensive cost management features for Azure resources, including cost analysis, budgeting, and alerts. It helps users track spending, identify anomalies, and optimize resource utilization.

- Google Cloud Cost Management: Provides tools for analyzing Google Cloud costs and usage. It includes features for creating budgets, setting alerts, and generating reports. Users can also leverage recommendations for cost optimization.

- Third-Party Cost Management Tools: These tools often provide more advanced features and cross-cloud support.

- CloudHealth by VMware: Offers comprehensive cloud cost management, governance, and security solutions. It provides insights into cloud spending, resource utilization, and compliance.

- Apptio Cloudability: Helps organizations manage and optimize cloud costs by providing detailed cost analysis, reporting, and recommendations. It supports multiple cloud providers and offers features for forecasting and budgeting.

- Spot by NetApp: Focuses on automating and optimizing cloud infrastructure for cost efficiency. It uses machine learning to predict and manage spot instances and provides tools for resource right-sizing.

- Key Functionalities: Most cost management tools offer the following core functionalities:

- Cost Analysis: Allows users to analyze spending trends, identify cost drivers, and understand resource consumption patterns.

- Budgeting and Forecasting: Enables users to set budgets, track spending against those budgets, and forecast future costs.

- Recommendations: Provides recommendations for optimizing resource utilization, such as right-sizing instances, deleting unused resources, and leveraging reserved instances.

- Reporting and Dashboards: Offers customizable reports and dashboards to visualize cost data and track key metrics.

- Alerting: Sends alerts when spending exceeds predefined thresholds or when anomalies are detected.
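The budgeting, forecasting, and alerting functions listed above can be illustrated with a small sketch. It assumes a hypothetical `Budget` record and a naive linear forecast; real tools use richer models and their own APIs, which are not shown here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Budget:
    """Hypothetical budget definition with alert thresholds as fractions."""
    name: str
    monthly_limit: float
    alert_thresholds: tuple = (0.5, 0.8, 1.0)


def triggered_alerts(budget: Budget, month_to_date_spend: float) -> list[str]:
    """Return one message per threshold that current spend has reached."""
    ratio = month_to_date_spend / budget.monthly_limit
    return [
        f"{budget.name}: spend has reached {threshold:.0%} of budget"
        for threshold in sorted(budget.alert_thresholds)
        if ratio >= threshold
    ]


def forecast_month_end(month_to_date_spend: float, day_of_month: int,
                       days_in_month: int) -> float:
    """Naive linear forecast: assume the daily run rate so far continues."""
    return month_to_date_spend / day_of_month * days_in_month


if __name__ == "__main__":
    budget = Budget(name="team-analytics", monthly_limit=1000.0)
    for message in triggered_alerts(budget, month_to_date_spend=850.0):
        print(message)
    print("forecast:", forecast_month_end(850.0, day_of_month=20, days_in_month=30))
```

A linear run rate overestimates or underestimates whenever usage is bursty, which is one reason to treat forecast-based alerts as prompts for review rather than as exact predictions.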

Implementing Cost Optimization Strategies

Implementing cost optimization strategies requires a systematic approach that involves assessment, planning, and continuous monitoring.

- Assessment: Conduct a thorough assessment of current cloud spending and resource utilization.

- Analyze current spending patterns using cost management tools.

- Identify top cost drivers and areas of high spending.

- Evaluate resource utilization metrics (e.g., CPU utilization, memory utilization).

- Planning: Develop a cost optimization plan based on the assessment findings.

- Define specific cost optimization goals and objectives.

- Prioritize optimization strategies based on potential impact and feasibility.

- Establish a timeline for implementing the chosen strategies.

- Implementation: Implement the cost optimization strategies.

- Right-size instances based on actual resource needs.

- Eliminate unused or underutilized resources.

- Leverage reserved instances or committed use discounts.

- Automate resource scaling based on demand.

- Implement a tagging strategy to categorize resources and track costs.

- Monitoring and Optimization: Continuously monitor cloud spending and resource utilization.

- Regularly review cost reports and dashboards.

- Track the impact of implemented optimization strategies.

- Identify new opportunities for cost savings.

- Adjust the optimization plan as needed.
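The assessment step above (finding top cost drivers and underutilized resources) can be sketched with tagged billing data. The records in `LINE_ITEMS`, their field names, and the 10% CPU threshold are all hypothetical; a real export from a billing tool will have its own schema.

```python
from collections import defaultdict

# Hypothetical billing line items; the field names are assumptions.
LINE_ITEMS = [
    {"resource": "vm-web-1", "cost": 310.0, "avg_cpu": 0.62, "tags": {"team": "storefront"}},
    {"resource": "vm-batch-7", "cost": 540.0, "avg_cpu": 0.08, "tags": {"team": "analytics"}},
    {"resource": "db-orders", "cost": 420.0, "avg_cpu": 0.45, "tags": {"team": "storefront"}},
    {"resource": "vm-test-3", "cost": 95.0, "avg_cpu": 0.02, "tags": {}},
]


def cost_by_tag(items, tag_key):
    """Sum cost per tag value, highest first; untagged resources are grouped."""
    totals = defaultdict(float)
    for item in items:
        totals[item["tags"].get(tag_key, "untagged")] += item["cost"]
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


def underutilized(items, cpu_threshold=0.10):
    """List resources whose average CPU is below the (assumed) threshold."""
    return [item["resource"] for item in items if item["avg_cpu"] < cpu_threshold]


if __name__ == "__main__":
    print(cost_by_tag(LINE_ITEMS, "team"))
    print(underutilized(LINE_ITEMS))
```

The "untagged" bucket is worth watching on its own: spend that cannot be attributed to an owner is usually the hardest to optimize.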

Cost-Saving Techniques and Applications

Several cost-saving techniques can be applied to optimize cloud spending. These techniques can be implemented individually or in combination, depending on the specific cloud environment and business requirements.

- Reserved Instances (RIs) and Committed Use Discounts (CUDs): Reserved instances and committed use discounts provide significant discounts on compute resources in exchange for a commitment to use those resources for a specific period.

- Application: Ideal for workloads with predictable resource requirements. For example, a company running a database server 24/7 could purchase reserved instances to reduce compute costs by up to 72% compared to on-demand pricing (the actual discount varies depending on the cloud provider, instance type, and term).

- Spot Instances and Preemptible VMs: Spot instances and preemptible VMs offer significant discounts on compute resources, but the cloud provider can reclaim them at short notice, for example when it needs the capacity back or, on platforms where users set a maximum price, when the spot price rises above that maximum.

- Application: Suitable for fault-tolerant workloads that can withstand interruptions, such as batch processing, data analysis, and testing. For instance, a data processing pipeline could use spot instances to process large datasets, leveraging the cost savings while being designed to handle potential interruptions gracefully.

- Right-Sizing Resources: Right-sizing involves matching resource allocations to actual needs, which avoids over-provisioning and reduces costs.

- Application: Regularly review resource utilization metrics (CPU, memory, storage) and adjust instance sizes or storage capacity accordingly. For example, if a web server is consistently using only 20% of its CPU capacity, the instance can be downsized to a smaller, more cost-effective instance type.

- Deleting Unused Resources: Identify and delete unused resources to avoid paying for resources that are not being utilized.

- Application: Regularly review the cloud environment and identify resources that are no longer needed, such as unused virtual machines, storage volumes, or databases. For instance, a development team might spin up test environments that are left running even after the testing phase is over. Identifying and deleting these environments can save significant costs.

- Automated Scaling: Implement automated scaling to dynamically adjust resource capacity based on demand.

- Application: Configure auto-scaling rules to automatically scale compute resources up or down based on metrics like CPU utilization or network traffic. For example, an e-commerce website can use auto-scaling to automatically increase the number of web servers during peak shopping seasons and reduce them during off-peak hours.

- Storage Tiering: Utilize storage tiers to optimize storage costs based on data access frequency.

- Application: Move less frequently accessed data to lower-cost storage tiers (e.g., cold storage, archive storage). For instance, archived backups of customer data could be stored in a lower-cost storage tier.

- Data Transfer Optimization: Optimize data transfer costs by minimizing data transfer between regions and using content delivery networks (CDNs).

- Application: Design applications to minimize data transfer between regions. Utilize CDNs to cache content closer to users, reducing data transfer costs and improving performance. For example, a global media company can use a CDN to deliver video content to users worldwide, reducing both latency and data transfer costs.
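Whether a reserved instance or committed use discount pays off depends on how much of the time the workload actually runs. The sketch below computes that break-even point; the hourly rates are made-up assumptions, and the model ignores upfront payment options and convertible terms.

```python
HOURS_PER_MONTH = 730  # average number of hours in a month


def break_even_utilization(on_demand_rate: float, reserved_rate: float) -> float:
    """Fraction of the month a workload must run for the commitment to pay off."""
    return reserved_rate / on_demand_rate


def monthly_costs(hours_used: float, on_demand_rate: float, reserved_rate: float):
    """Return (on_demand_cost, reserved_cost) for one instance over a month."""
    on_demand = hours_used * on_demand_rate
    reserved = HOURS_PER_MONTH * reserved_rate  # billed whether used or not
    return on_demand, reserved


if __name__ == "__main__":
    # Assumed rates in USD per hour, for illustration only.
    on_demand_rate, reserved_rate = 0.10, 0.06
    print("break-even utilization:", break_even_utilization(on_demand_rate, reserved_rate))
    for hours in (200, 438, 730):
        print(hours, monthly_costs(hours, on_demand_rate, reserved_rate))
```

With these assumed rates the commitment only wins when the instance runs more than about 60% of the time, which is why the technique suits always-on workloads such as the database server in the example above.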

The Impact of Performance and Scalability

Cloud computing offers considerable flexibility in performance and scalability, but that flexibility has a direct impact on costs. Understanding how performance requirements and scaling strategies influence spending is crucial for effective cloud cost management. This section examines the specific costs associated with achieving the required performance and scalability within a cloud environment.

Performance Requirements Influence on Cloud Costs

Performance requirements, which dictate the speed and responsiveness of applications, significantly impact cloud costs. Demanding high performance often necessitates the selection of more powerful and, consequently, more expensive cloud resources. The need for increased compute power, higher memory allocations, and faster storage solutions directly translates to higher hourly or monthly charges.

Costs Associated with Auto-Scaling

Auto-scaling is a key feature of cloud computing, enabling resources to automatically adjust based on demand. While it offers significant benefits in terms of application availability and responsiveness, it can also lead to unexpected costs if not managed carefully. Auto-scaling works by monitoring resource utilization and automatically adding or removing instances of a particular resource, such as virtual machines.

- Over-Provisioning: Configuring auto-scaling rules too aggressively can result in over-provisioning, where more resources are allocated than are actually needed. This leads to unnecessary spending, particularly during periods of low demand.

- Under-Provisioning: Conversely, rules that scale too conservatively leave the application under-provisioned, which leads to performance degradation and a poor user experience. Addressing the resulting bottlenecks may require manual intervention, which consumes time and resources.

- Monitoring and Alerting: Effective auto-scaling requires robust monitoring and alerting systems to track resource utilization and trigger scaling events. The cost of these monitoring tools, along with the time and expertise needed to configure and maintain them, contributes to the overall cost.

- Scaling Policies: Auto-scaling policies are critical to cost management. Scaling policies can be based on metrics like CPU utilization, memory usage, or custom metrics. The choice of these metrics and the thresholds that trigger scaling actions directly influence the cost.
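How thresholds and instance limits shape cost can be seen in a minimal threshold-based scaling decision. The `ScalingPolicy` fields and their default values are assumptions chosen for illustration; managed auto-scaling services expose their own policy formats and add features such as cooldown periods.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalingPolicy:
    """Hypothetical policy: CPU thresholds plus hard instance limits."""
    scale_out_above: float = 0.70   # add an instance above this average CPU
    scale_in_below: float = 0.30    # remove an instance below this average CPU
    min_instances: int = 2
    max_instances: int = 10         # caps worst-case spend


def desired_instances(current: int, avg_cpu: float, policy: ScalingPolicy) -> int:
    """Return the instance count the policy asks for at this CPU level."""
    if avg_cpu > policy.scale_out_above:
        target = current + 1
    elif avg_cpu < policy.scale_in_below:
        target = current - 1
    else:
        target = current
    # Clamp to the configured limits so cost stays within a known range.
    return max(policy.min_instances, min(policy.max_instances, target))


if __name__ == "__main__":
    policy = ScalingPolicy()
    for cpu in (0.85, 0.50, 0.10):
        print(cpu, desired_instances(4, cpu, policy))
```

Lowering `scale_out_above` or raising `min_instances` buys headroom at the price of more idle capacity, while `max_instances` acts as a simple cost ceiling.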

Cost Comparison: Static vs. Dynamic, Scalable Infrastructure

Comparing the cost of static infrastructure with dynamic, scalable infrastructure highlights the trade-offs between upfront investment and ongoing operational expenses.

| Component | Static Infrastructure (On-Premises) | Dynamic, Scalable Infrastructure (Cloud) |
|---|---|---|
| Servers | Fixed number of servers, sized for peak load. High upfront capital expenditure (CAPEX). | Virtual machines (VMs) or containers. Pay-as-you-go model. Operational expenditure (OPEX). |
| Storage | Fixed storage capacity. Requires upfront investment. | Elastic storage options (e.g., object storage, block storage). Pay for what is used. |
| Network | Fixed network infrastructure. Capacity planned for peak usage. | Virtual networks, load balancers, and Content Delivery Networks (CDNs). Pay-per-use or tiered pricing. |
| Operating Costs | High operational costs including hardware maintenance, power, cooling, and IT staff. | Lower operational costs. Cloud provider manages infrastructure. Focus on application management. |
| Scalability | Limited scalability. Requires significant time and cost to add capacity. | Highly scalable. Resources automatically adjust based on demand. |
| Cost Model | CAPEX-intensive. Significant upfront investment. | OPEX-intensive. Pay-as-you-go model. Variable costs based on usage. |

The static infrastructure model incurs significant upfront costs and is often over-provisioned to handle peak loads, leading to wasted resources during periods of low demand. In contrast, the dynamic, scalable infrastructure model allows resources to be provisioned on demand, which reduces waste. For example, a retail website that experiences seasonal traffic spikes would likely find the dynamic model more cost-effective than a static infrastructure.

The website can automatically scale up during peak seasons and scale down during slower periods, optimizing costs by paying only for the resources used. However, careful monitoring and management are still required to ensure cost-effectiveness, and auto-scaling policies need to be carefully configured to prevent unexpected charges.
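The difference between sizing for peak and paying for actual demand can be worked through with a toy demand profile. Everything here is assumed for illustration: the hourly request counts, the per-server capacity, and the use of the same hourly rate for both models (in practice the rates differ and on-premises costs are amortized capital).

```python
import math


def static_cost(hourly_demand, capacity_per_server, rate_per_server_hour):
    """Fixed fleet sized for the peak hour, paid for every hour."""
    servers = math.ceil(max(hourly_demand) / capacity_per_server)
    return servers * rate_per_server_hour * len(hourly_demand)


def dynamic_cost(hourly_demand, capacity_per_server, rate_per_server_hour,
                 min_servers=1):
    """Fleet resized each hour to fit demand, never below min_servers."""
    return sum(
        max(min_servers, math.ceil(demand / capacity_per_server)) * rate_per_server_hour
        for demand in hourly_demand
    )


if __name__ == "__main__":
    # One day: 20 quiet hours at 100 requests/s, 4 peak hours at 900 requests/s.
    demand = [100] * 20 + [900] * 4
    print("static :", static_cost(demand, capacity_per_server=100, rate_per_server_hour=1.0))
    print("dynamic:", dynamic_cost(demand, capacity_per_server=100, rate_per_server_hour=1.0))
```

The gap narrows as demand flattens: for a constant load the two functions return the same figure, which is the case where fixed capacity or a long-term commitment tends to be the cheaper choice.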

Compliance and Governance Costs

Meeting regulatory requirements in the cloud can introduce significant costs beyond the core cloud services. These expenses are often overlooked during initial cloud adoption planning, potentially leading to budget overruns and compliance violations. Effective data governance and compliance frameworks are crucial, but they also come with their own set of associated costs.

Regulatory Compliance Requirements

Cloud environments must adhere to a wide array of regulations, varying by industry and geographic location. This often necessitates specialized tools, processes, and expertise.

- Audit and Assessment Costs: Regular audits and assessments are essential to ensure compliance with regulations like GDPR, HIPAA, PCI DSS, and others. These audits can be performed by internal teams or, more commonly, by third-party auditors.

- Example: A healthcare provider using cloud services to store patient data typically undergoes periodic HIPAA risk assessments or compliance audits. The cost of these audits can range from $10,000 to $50,000 or more, depending on the size and complexity of the cloud infrastructure.

- Compliance Software and Tools: Implementing and maintaining compliance often requires specialized software solutions for tasks such as data loss prevention (DLP), security information and event management (SIEM), and vulnerability scanning.

- Example: Companies handling credit card data must implement PCI DSS-compliant security measures. This includes purchasing and maintaining SIEM software, which can cost tens of thousands of dollars annually.

- Data Residency and Localization: Some regulations mandate that data be stored within specific geographic regions. This can lead to additional costs related to data replication, cross-region data transfer, and potentially the need to establish cloud infrastructure in multiple locations.

- Example: Organizations operating in the European Union may need to store data within the EU to comply with GDPR. This could involve deploying cloud resources in multiple EU regions, incurring costs for data transfer, storage, and management.

- Training and Staffing: Compliance efforts require skilled personnel. Organizations may need to hire compliance officers, security analysts, and other specialists or invest in training existing staff.

- Example: A financial institution must train its employees on data security best practices to comply with regulations like SOX. The cost of training programs, including materials and instructor fees, can add up significantly.

Data Governance and Compliance Frameworks

Data governance frameworks ensure data quality, security, and compliance with regulations. These frameworks involve defining policies, implementing controls, and establishing processes.

- Data Governance Policies and Procedures: Establishing and documenting data governance policies, including data classification, access control, and retention policies, is a critical step. This involves the time and expertise of data governance professionals.

- Example: A company must develop a data retention policy outlining how long different types of data are stored. This requires defining retention periods, which can range from months to years, based on legal and business requirements.

- Data Cataloging and Metadata Management: Maintaining a comprehensive data catalog with metadata about data assets helps in understanding data lineage, data quality, and data access. This can involve the use of specialized tools and dedicated staff.

- Example: A company using a data lake must implement a data catalog to track the origin, transformation, and usage of data. This helps with compliance and allows for better data quality.

- Data Security and Access Controls: Implementing robust security measures, including encryption, access controls, and monitoring, is crucial for protecting sensitive data. This includes the cost of security tools and the effort of security personnel.

- Example: A company must encrypt sensitive data stored in the cloud. This includes the cost of encryption software, key management, and the ongoing effort of managing encryption keys.

- Data Privacy and Consent Management: Managing user consent and ensuring data privacy compliance requires implementing consent management platforms and processes for handling data subject requests.

- Example: A website collects user data and must provide users with options to manage their consent. This involves the implementation of a consent management platform and the effort to comply with user requests.
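A data retention policy like the one described above is often easier to enforce once it is written down as data. The sketch below assumes hypothetical data classes and retention periods in `RETENTION_DAYS`; the actual periods must come from legal and business requirements, not from this example.

```python
from datetime import date, timedelta

# Hypothetical retention periods per data class, in days.
RETENTION_DAYS = {
    "access_logs": 90,
    "marketing_leads": 365,
    "invoices": 7 * 365,
}


def is_expired(data_class: str, created_on: date, today: date) -> bool:
    """True when a record has outlived its class's retention period.

    Unknown classes raise KeyError on purpose: unclassified data should be
    reviewed rather than silently kept or deleted.
    """
    return today - created_on > timedelta(days=RETENTION_DAYS[data_class])


if __name__ == "__main__":
    today = date(2024, 6, 1)
    records = [
        ("access_logs", date(2024, 1, 1)),
        ("invoices", date(2024, 1, 1)),
    ]
    for data_class, created_on in records:
        print(data_class, created_on, "expired" if is_expired(data_class, created_on, today) else "keep")
```

Expired records in long-lived classes are also natural candidates for cheaper storage tiers before deletion, which ties the policy back to storage cost.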

Compliance and Governance Cost Categories

The following table summarizes key aspects and associated costs of compliance and governance in the cloud:

| Category | Key Aspects | Associated Costs | Examples |
|---|---|---|---|
| Audit and Assessment | Regular compliance audits, vulnerability assessments, penetration testing. | Auditor fees, internal resource time, remediation costs. | HIPAA audits, PCI DSS assessments, GDPR audits. |
| Compliance Tools | Data loss prevention (DLP), SIEM, vulnerability scanning, data encryption. | Software licensing, implementation costs, maintenance fees. | SIEM software, DLP solutions, encryption tools. |
| Data Residency & Localization | Data storage within specific geographic regions. | Data replication costs, cross-region data transfer fees, infrastructure costs in multiple regions. | Deploying cloud resources in the EU to comply with GDPR. |
| Data Governance | Data cataloging, metadata management, data lineage tracking. | Data governance software, personnel costs, data quality initiatives. | Implementing a data catalog to track data assets. |
| Training and Staffing | Compliance officer salaries, security analyst salaries, training programs. | Training materials, instructor fees, hiring costs. | Training employees on data security best practices. |
| Data Privacy and Consent Management | Consent management platforms, user data request handling. | Software licensing, implementation costs, personnel costs. | Implementing a consent management platform. |

Final Conclusion

Managing cloud computing costs requires a proactive approach focused on the expenses that are easy to overlook. By carefully considering data transfer, storage, security, and operational overhead, businesses can keep their cloud spending under control. Employing cost optimization tools, adopting multi-cloud strategies, and staying informed about compliance requirements helps organizations get the full benefit of the cloud while staying within budget and avoiding unexpected charges.

Quick FAQs

What are egress fees, and why are they important?

Egress fees are charges for data leaving the cloud. They are significant because large data transfers can quickly increase your cloud bill, making it crucial to optimize data transfer strategies to minimize these costs.

How does vendor lock-in affect cloud costs?

Vendor lock-in can increase costs by limiting your ability to switch providers or negotiate better pricing. Migration costs, application refactoring, and potential service disruptions can all contribute to higher expenses.

What are reserved instances, and how do they save money?

Reserved instances are a commitment to use a specific cloud resource for a set period, typically one or three years, in exchange for a significant discount compared to on-demand pricing. They are useful for workloads that are consistent and predictable.

How can I monitor and manage my cloud costs effectively?

Use cloud cost management tools, set up budgets and alerts, regularly review resource utilization, and optimize your infrastructure based on performance needs. Monitoring is key to identifying and addressing cost inefficiencies promptly.