What are the challenges of multi-cloud migration? This complex undertaking presents a myriad of hurdles, demanding careful consideration and strategic planning. Organizations are increasingly adopting multi-cloud strategies to leverage the unique strengths of different cloud providers, mitigate vendor lock-in, and enhance resilience. However, this shift is not without its difficulties, requiring a deep understanding of the potential pitfalls.

This analysis will delve into the multifaceted challenges inherent in multi-cloud migration. We will explore the complexities of managing resources, ensuring security and compliance, handling data migration, optimizing costs, and addressing vendor lock-in. Furthermore, we will examine the importance of addressing skill gaps, network connectivity, monitoring, governance, and business continuity to successfully navigate the multi-cloud landscape.

Understanding Multi-Cloud Migration Challenges

Multi-cloud migration, the strategic deployment of applications and services across multiple cloud providers, presents a complex landscape of opportunities and obstacles. Successful navigation of this environment requires a deep understanding of the underlying components, the driving forces behind its adoption, and its distinctions from related cloud strategies. This section will delve into these foundational aspects to provide a comprehensive overview of the challenges inherent in multi-cloud migration.

Defining Multi-Cloud Migration

Multi-cloud migration involves the deliberate distribution of workloads and data across two or more cloud infrastructure providers. This approach contrasts with single-cloud deployments, where all resources reside within a single vendor’s ecosystem. The core components of a multi-cloud strategy span several areas, including application architecture, data management, security protocols, and operational procedures. These components can be elaborated as follows:

- Cloud Infrastructure Providers: The foundation of a multi-cloud strategy involves selecting and utilizing services from multiple cloud providers, such as Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP), and others. The choice of providers depends on specific business needs, geographic requirements, and cost considerations.

- Application Architecture: The design of applications must be adaptable to operate across different cloud environments. This often involves containerization (e.g., using Docker and Kubernetes) and the adoption of microservices architectures to ensure portability and scalability.

- Data Management: Data consistency and accessibility are critical in a multi-cloud environment. Strategies include data replication, data synchronization, and the use of database technologies that support multi-cloud deployments.

- Security Protocols: Implementing robust security measures across multiple cloud platforms is paramount. This involves consistent identity and access management (IAM), encryption, and threat detection mechanisms.

- Operational Procedures: Efficient management requires standardized operational processes, including monitoring, logging, and automation. Tools and platforms that provide a unified view and control across different cloud environments are essential.

Motivations for Multi-Cloud Adoption

Organizations embrace multi-cloud strategies for a variety of compelling reasons, each contributing to enhanced business agility, resilience, and cost efficiency. These motivations often work in concert to drive adoption. Key motivations include:

- Avoiding Vendor Lock-in: Multi-cloud allows organizations to reduce dependence on a single cloud provider, preventing them from being locked into specific technologies or pricing models. This flexibility enables organizations to negotiate better terms and leverage the best services from different vendors.

- Improving Resilience and Availability: Distributing workloads across multiple clouds can enhance application resilience. If one cloud provider experiences an outage, an application designed for cross-cloud failover can continue to operate on another cloud, minimizing downtime and supporting business continuity.

- Optimizing Costs: Different cloud providers offer varying pricing structures for different services. Multi-cloud enables organizations to select the most cost-effective services for specific workloads, optimizing overall cloud spending. For example, an organization might choose AWS for compute-intensive tasks and Google Cloud for data analytics, based on their respective pricing models.

- Leveraging Best-of-Breed Services: Each cloud provider excels in certain areas. Multi-cloud allows organizations to choose the best services for their specific needs, regardless of the provider. For instance, an organization might use Azure for its strong Active Directory integration and AWS for its machine learning capabilities.

- Meeting Regulatory and Compliance Requirements: Some industries and geographies have specific data residency requirements. Multi-cloud gives organizations a wider choice of regions in which to deploy workloads, which can help them meet these requirements and support compliance with regulations such as GDPR or HIPAA.

Differentiating Multi-Cloud and Hybrid Cloud

While often discussed in tandem, multi-cloud and hybrid cloud represent distinct cloud strategies with unique characteristics and implications. Understanding the differences is critical for making informed decisions about cloud deployments. Key differences between multi-cloud and hybrid cloud include:

- Cloud Environment Composition: Multi-cloud involves the use of multiple public cloud providers, such as AWS, Azure, and GCP. Hybrid cloud, on the other hand, combines a public cloud with a private cloud (on-premises or hosted) and/or legacy IT infrastructure.

- Management Complexity: Multi-cloud environments can be complex to manage due to the need to integrate and orchestrate services across different cloud providers. Hybrid cloud environments can also be complex, especially when integrating on-premises infrastructure with cloud services.

- Primary Focus: Multi-cloud primarily focuses on leveraging multiple public cloud providers for various benefits, such as vendor diversification, cost optimization, and access to specialized services. Hybrid cloud focuses on integrating public cloud services with existing on-premises or private cloud infrastructure to provide flexibility, scalability, and the ability to maintain control over sensitive data.

- Workload Distribution: In multi-cloud, workloads are distributed across multiple public clouds. In hybrid cloud, workloads can be distributed between public and private clouds, based on factors such as performance requirements, security needs, and compliance regulations.

- Use Cases: Multi-cloud is often used for vendor diversification, disaster recovery, and leveraging specialized services from different providers. Hybrid cloud is often used for extending on-premises infrastructure to the cloud, enabling workload portability, and complying with regulatory requirements.

Complexity and Management Overhead

Multi-cloud environments, while offering significant advantages, introduce substantial complexity in resource management and operational overhead. This complexity stems from the need to integrate and orchestrate services across diverse platforms, each with its own unique management tools, APIs, and operational models. This heterogeneity increases the cognitive load on IT teams, potentially leading to inefficiencies, errors, and increased operational costs. The following sections detail the specific challenges associated with this increased complexity.

Increased Complexity in Managing Resources Across Multiple Cloud Providers

Managing resources across multiple cloud providers necessitates a comprehensive understanding of each provider’s infrastructure, services, and operational procedures. This complexity arises from several factors, including differing APIs, resource naming conventions, and service availability zones.

- API Differences: Each cloud provider offers a unique set of APIs for interacting with its services. This requires developers and operations teams to learn and adapt to multiple API sets, increasing the development time and the potential for errors. For example, deploying a containerized application might involve using AWS’s Elastic Container Service (ECS), Azure Container Instances (ACI), and Google Kubernetes Engine (GKE), each requiring different API calls and configuration parameters.

- Resource Heterogeneity: Different cloud providers offer varying levels of maturity and features for similar services. This heterogeneity creates challenges in selecting the optimal service for a given workload and ensuring consistent performance across all platforms. For instance, the compute instances available from AWS, Azure, and GCP differ in terms of their underlying hardware, pricing models, and performance characteristics, which may impact application performance and cost optimization strategies.

- Monitoring and Observability Challenges: Monitoring and logging across multiple cloud providers necessitate integrating various monitoring tools and correlating data from disparate sources. This complexity makes it challenging to gain a unified view of application performance, identify performance bottlenecks, and troubleshoot issues effectively. Achieving comprehensive observability requires integrating diverse monitoring solutions like AWS CloudWatch, Azure Monitor, and Google Cloud Operations Suite.

- Security Posture Management: Ensuring a consistent security posture across multiple cloud providers requires implementing and managing security controls that are compatible with each platform’s security features. This complexity involves configuring security groups, access control lists, and other security policies across multiple consoles, potentially leading to configuration errors and security vulnerabilities. Implementing a centralized security information and event management (SIEM) system can mitigate these challenges.
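One common way to contain API differences is an adapter layer: callers describe a workload once in a provider-neutral form, and one small adapter per provider translates it. The sketch below is hypothetical. `ContainerSpec`, `ContainerDeployer`, and the three deployer classes are illustrative names, the request bodies are trimmed shapes loosely modeled on ECS task definitions, ACI container groups, and Kubernetes Deployments (not complete or validated payloads), and the actual SDK calls are omitted.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class ContainerSpec:
    """Provider-neutral description of a container workload (hypothetical)."""
    name: str
    image: str
    cpu: float          # vCPUs
    memory_gib: float   # memory in GiB


class ContainerDeployer(ABC):
    """Common interface that hides each provider's request format."""

    @abstractmethod
    def to_provider_config(self, spec: ContainerSpec) -> dict:
        """Translate the neutral spec into a provider-shaped request body."""


class EcsDeployer(ContainerDeployer):
    def to_provider_config(self, spec: ContainerSpec) -> dict:
        # ECS task definitions express CPU in CPU units (1024 units = 1 vCPU)
        # and memory in MiB, both as strings at the task level.
        return {
            "family": spec.name,
            "cpu": str(int(spec.cpu * 1024)),
            "memory": str(int(spec.memory_gib * 1024)),
            "containerDefinitions": [{"name": spec.name, "image": spec.image}],
        }


class AciDeployer(ContainerDeployer):
    def to_provider_config(self, spec: ContainerSpec) -> dict:
        # ACI requests CPU cores and memory in GB per container; this sketch
        # treats GiB and GB as interchangeable for simplicity.
        return {
            "name": spec.name,
            "containers": [{
                "name": spec.name,
                "image": spec.image,
                "resources": {"requests": {"cpu": spec.cpu, "memoryInGB": spec.memory_gib}},
            }],
        }


class GkeDeployer(ContainerDeployer):
    def to_provider_config(self, spec: ContainerSpec) -> dict:
        # Kubernetes uses quantity strings such as "0.5" (CPU) and "1Gi" (memory).
        container = {
            "name": spec.name,
            "image": spec.image,
            "resources": {
                "requests": {"cpu": f"{spec.cpu:g}", "memory": f"{spec.memory_gib:g}Gi"}
            },
        }
        return {
            "apiVersion": "apps/v1",
            "kind": "Deployment",
            "metadata": {"name": spec.name},
            "spec": {"template": {"spec": {"containers": [container]}}},
        }
```

Because callers depend only on `ContainerDeployer`, supporting another provider means adding one adapter rather than touching every caller.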

Challenges Associated with Consistent Policy Enforcement Across Different Cloud Environments

Consistent policy enforcement across different cloud environments is crucial for maintaining security, compliance, and operational efficiency. However, achieving this is challenging due to the heterogeneity of cloud provider policies, configurations, and enforcement mechanisms.

- Policy Differences: Each cloud provider defines its own set of policies for resource management, security, and compliance. These policies can differ in terms of their syntax, scope, and enforcement mechanisms, requiring IT teams to adapt their policies to each platform. For example, implementing data encryption policies might involve using AWS KMS, Azure Key Vault, and Google Cloud KMS, each with its own set of configuration parameters and best practices.

- Configuration Drift: Configuration drift, where resources deviate from their intended configurations, is a significant challenge in multi-cloud environments. This can occur due to manual configuration changes, automation errors, or inconsistencies in policy enforcement. Regular audits and automated configuration management tools are essential to mitigate this risk.

- Compliance Management: Meeting regulatory compliance requirements across multiple cloud providers requires a deep understanding of each provider’s compliance certifications and the ability to map these certifications to specific workloads. Automating compliance checks and generating compliance reports can help streamline the compliance process.

- Lack of Centralized Policy Enforcement: Cloud providers often offer their own native policy enforcement mechanisms, but these mechanisms are typically siloed within each provider’s environment. Implementing a centralized policy enforcement system that can apply policies consistently across all cloud providers can be complex.
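Drift detection reduces to comparing a desired baseline with what is actually deployed. The minimal sketch below assumes a collector has already normalized each provider's settings into shared names (such as `encryption_at_rest`), so one baseline can be checked against AWS, Azure, and GCP resources alike. The setting names, resource IDs, and inventory are hypothetical.

```python
from typing import Any, Dict, Tuple

Drift = Dict[str, Tuple[Any, Any]]


def detect_drift(desired: Dict[str, Any], actual: Dict[str, Any]) -> Drift:
    """Return {setting: (desired, actual)} for each baseline setting that differs.

    Settings present only in `actual` are ignored; a missing setting is
    reported with an actual value of None.
    """
    return {
        key: (want, actual.get(key))
        for key, want in desired.items()
        if actual.get(key) != want
    }


def drift_report(baseline: Dict[str, Any],
                 inventory: Dict[str, Dict[str, Any]]) -> Dict[str, Drift]:
    """Check every resource, from any provider, against one shared baseline."""
    report = {}
    for resource_id, config in inventory.items():
        drift = detect_drift(baseline, config)
        if drift:
            report[resource_id] = drift
    return report


baseline = {"encryption_at_rest": True, "public_access": False, "log_retention_days": 90}
inventory = {
    "aws:s3:reports": {"encryption_at_rest": True, "public_access": True, "log_retention_days": 90},
    "gcp:gcs:reports": {"encryption_at_rest": True, "public_access": False, "log_retention_days": 30},
}
print(drift_report(baseline, inventory))
# {'aws:s3:reports': {'public_access': (False, True)}, 'gcp:gcs:reports': {'log_retention_days': (90, 30)}}
```

Running such a check on a schedule, and feeding its output into alerts or automated remediation, is one way to approximate centralized enforcement on top of each provider's native tooling.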

Comparison of Management Tools Offered by AWS, Azure, and GCP

The following table summarizes the native management tools offered by AWS, Azure, and GCP across four functional areas. Mapping equivalent tools side by side helps when selecting or integrating tools for a multi-cloud environment.

| Feature | AWS | Azure | GCP |
|---|---|---|---|
| Resource Management | AWS CloudFormation (Infrastructure as Code), AWS Management Console (Web Interface), AWS CLI (Command Line Interface) | Azure Resource Manager (ARM templates), Azure Portal (Web Interface), Azure CLI (Command Line Interface), Azure PowerShell | Google Cloud Deployment Manager (Infrastructure as Code), Google Cloud Console (Web Interface), Google Cloud SDK (Command Line Interface) |
| Monitoring and Logging | Amazon CloudWatch (Monitoring, Logging, Metrics), AWS CloudTrail (Audit Logging) | Azure Monitor (Monitoring, Logging, Metrics), Azure Log Analytics (Log Analysis), Azure Activity Log (Audit Logging) | Google Cloud Operations Suite, formerly Stackdriver (Monitoring, Logging, Metrics), Google Cloud Audit Logs (Audit Logging) |
| Identity and Access Management | AWS IAM (Identity and Access Management), AWS Organizations (Account Management) | Microsoft Entra ID, formerly Azure Active Directory (Identity and Access Management), Azure Role-Based Access Control (RBAC) | Google Cloud IAM (Identity and Access Management), Google Cloud Resource Manager (Account Management) |
| Cost Management | AWS Cost Explorer, AWS Budgets | Azure Cost Management + Billing | Google Cloud Cost Management |

Security and Compliance Concerns

Multi-cloud migration introduces a complex security landscape, expanding the attack surface and increasing the challenges associated with maintaining regulatory compliance. The distributed nature of resources across different cloud providers necessitates robust security measures and a unified approach to governance to mitigate risks and ensure data protection. Failure to adequately address these concerns can lead to data breaches, non-compliance penalties, and reputational damage.

Security Vulnerabilities During Migration

The migration process itself presents numerous opportunities for security breaches. Data in transit, misconfigured cloud services, and inconsistent security policies across different providers are common sources of vulnerability. A comprehensive understanding of these risks is crucial for developing effective mitigation strategies.

- Data Exposure During Transfer: Data transferred between cloud providers is vulnerable to interception if not properly secured. Encryption, both in transit and at rest, is essential, and the key management process must also be secure. Misconfigured TLS (Transport Layer Security) certificates, often still called SSL certificates, weak encryption algorithms, or compromised keys can expose sensitive data.

- Misconfiguration of Cloud Services: Cloud services are complex, and misconfigurations are a frequent source of security breaches. This includes improperly configured storage buckets (e.g., Amazon S3 buckets) that are publicly accessible, allowing unauthorized access to data. Security groups, network access control lists (ACLs), and identity and access management (IAM) policies must be meticulously configured to restrict access to authorized users and services.

- Inconsistent Security Policies: Different cloud providers have different security models and default settings. Implementing and maintaining consistent security policies across multiple clouds is a significant challenge. For example, the implementation of network segmentation, intrusion detection systems, and vulnerability scanning may vary between providers. A lack of standardization can create gaps in security coverage.

- Lack of Visibility and Monitoring: Gaining comprehensive visibility into security events across multiple cloud environments is difficult. Log aggregation, security information and event management (SIEM) systems, and centralized dashboards are necessary to monitor security threats and detect anomalies. Without proper monitoring, security breaches may go unnoticed for extended periods, increasing the potential damage.

- Insider Threats and Compromised Credentials: Insider threats, whether malicious or accidental, pose a significant risk. Compromised credentials can allow attackers to gain access to sensitive data and systems. Strong authentication, authorization, and access control mechanisms are essential to mitigate this risk. Regularly auditing user access and implementing least privilege principles are critical.
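Several of these risks, such as publicly readable buckets and administrative ports open to the internet, can be caught by scanning a normalized inventory. The sketch below is illustrative only. It assumes a hypothetical collector has already turned each provider's storage and firewall settings into the `Resource` and `IngressRule` records shown here, and the list of sensitive ports is an example, not a complete policy.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Administrative and database ports that should rarely face the internet.
SENSITIVE_PORTS = {22, 3389, 3306, 5432}  # SSH, RDP, MySQL, PostgreSQL
OPEN_TO_ALL = {"0.0.0.0/0", "::/0"}       # any IPv4 or IPv6 source


@dataclass
class IngressRule:
    port: int
    source_cidr: str


@dataclass
class Resource:
    provider: str                 # "aws", "azure", or "gcp"
    resource_id: str
    kind: str                     # "bucket" or "network" in this sketch
    public_read: bool = False
    ingress: List[IngressRule] = field(default_factory=list)


def find_misconfigurations(resources: List[Resource]) -> List[Tuple[str, str, str]]:
    """Return (provider, resource_id, finding) for each risky setting."""
    findings = []
    for res in resources:
        if res.kind == "bucket" and res.public_read:
            findings.append((res.provider, res.resource_id, "publicly readable storage"))
        for rule in res.ingress:
            if rule.source_cidr in OPEN_TO_ALL and rule.port in SENSITIVE_PORTS:
                findings.append(
                    (res.provider, res.resource_id, f"port {rule.port} open to the internet")
                )
    return findings
```

The same findings list could be forwarded to a SIEM so that misconfigurations appear alongside other security events.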

Challenges in Maintaining Compliance

Meeting industry regulations, such as the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA), across multiple cloud providers requires careful planning and execution. Each cloud provider has its own compliance certifications and security features, which must be understood and integrated to ensure compliance.

- Data Residency Requirements: Regulations often dictate where data can be stored and processed. Ensuring data residency compliance requires careful consideration of the geographical locations of cloud provider data centers and the specific requirements of the regulations.

- Data Protection and Privacy: GDPR, for example, requires organizations to implement robust data protection measures, including data encryption, access controls, and data minimization. These measures must be applied consistently across all cloud environments.

- Data Breach Notification: Regulations often require organizations to notify regulatory authorities and affected individuals in the event of a data breach. The notification process must be clearly defined and implemented across all cloud environments.

- Auditability and Reporting: Compliance requires the ability to audit data access, security events, and system configurations. Organizations must implement robust logging and monitoring systems to provide the necessary audit trails.

- Vendor Management: Managing compliance with multiple cloud providers requires careful vendor management. Organizations must ensure that their cloud providers meet the necessary compliance requirements and that their service level agreements (SLAs) address security and compliance obligations.
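A data residency rule can be checked mechanically once every dataset's provider and region are known. The sketch below assumes a hypothetical internal policy that maps data classifications to allowed jurisdictions. GDPR itself permits some transfers outside the EU under safeguards, so treat the rule as an organizational choice, not a restatement of the regulation. The region-to-jurisdiction table is a small illustrative subset, and unknown regions fail closed.

```python
from typing import Dict, Iterable, List, Set, Tuple

# Illustrative subset mapping (provider, region) to a jurisdiction label.
REGION_JURISDICTION: Dict[Tuple[str, str], str] = {
    ("aws", "eu-central-1"): "EU",    # Frankfurt
    ("aws", "us-east-1"): "US",       # N. Virginia
    ("azure", "westeurope"): "EU",    # Netherlands
    ("azure", "eastus"): "US",        # Virginia
    ("gcp", "europe-west1"): "EU",    # Belgium
    ("gcp", "us-central1"): "US",     # Iowa
}


def residency_violations(
    datasets: Iterable[Tuple[str, str, str, str]],
    allowed: Dict[str, Set[str]],
) -> List[Tuple[str, str, str, str]]:
    """datasets: (name, provider, region, classification) tuples.
    allowed: classification -> set of permitted jurisdictions (hypothetical policy).
    Returns (name, provider, region, jurisdiction) for each violation."""
    violations = []
    for name, provider, region, classification in datasets:
        # Regions missing from the table are treated as non-compliant.
        jurisdiction = REGION_JURISDICTION.get((provider, region), "UNKNOWN")
        if jurisdiction not in allowed.get(classification, set()):
            violations.append((name, provider, region, jurisdiction))
    return violations
```

The output of such a check also doubles as audit evidence, supporting the auditability and reporting requirement above.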

Secure Multi-Cloud Architecture Illustration

A secure multi-cloud architecture prioritizes security and compliance by implementing a layered approach to protect data and applications. This architecture leverages various security controls to provide defense-in-depth.

Description of the Architecture:

The architecture is represented as a diagram with three distinct layers: a user/client layer, an intermediary layer, and the cloud provider layer. The user/client layer is where end-users and applications interact. The intermediary layer, situated between the user/client and cloud providers, is the core of the security design, containing security controls that are provider-agnostic. The cloud provider layer represents the different cloud providers (e.g., AWS, Azure, Google Cloud) where applications and data are hosted.

User/Client Layer: The user/client layer is the entry point. This layer includes the users and the applications accessing the system. Security controls at this layer focus on authenticating users, for example with multi-factor authentication (MFA), and on establishing secure connections with protocols such as HTTPS/TLS.

Intermediary Layer: The intermediary layer is the most important part of the architecture. It is the central security control point, including:

- Identity and Access Management (IAM): A centralized IAM system manages user identities, access permissions, and roles. This ensures consistent access control across all cloud providers.

- Security Information and Event Management (SIEM): A SIEM system collects and analyzes security logs from all cloud environments. This provides a centralized view of security events and facilitates threat detection and incident response.

- Web Application Firewall (WAF): A WAF protects web applications from common attacks, such as cross-site scripting (XSS) and SQL injection.

- Data Loss Prevention (DLP): DLP systems monitor and prevent sensitive data from leaving the organization’s control.

- API Gateway: The API gateway acts as a single entry point for all API requests. It can enforce security policies, rate limiting, and authentication.

- Network Segmentation: This divides the network into isolated segments, limiting the impact of security breaches.

Cloud Provider Layer: This layer includes the cloud providers. Each cloud provider has its own security services and features, such as:

- Virtual Private Cloud (VPC) / Virtual Network: These provide isolated network environments within the cloud.

- Encryption: Data at rest and in transit is encrypted using provider-specific encryption services.

- Compliance Certifications: Each cloud provider maintains compliance certifications (e.g., ISO 27001, SOC 2) to meet industry standards.

Connectivity and Data Flow:

The diagram illustrates the flow of data and the application of security controls:

- Users connect to applications through secure channels (e.g., HTTPS).

- The API gateway receives requests and enforces security policies.

- The IAM system authenticates and authorizes users.

- The WAF and DLP systems protect against attacks and data leakage.

- The SIEM system monitors security events.

- Data and applications are hosted in the cloud providers, secured by their respective security services.

This layered approach provides a robust security posture. By centralizing security controls in the intermediary layer, organizations can achieve consistent security policies across multiple cloud environments and simplify compliance management.
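The ordered flow through the intermediary layer can be sketched as a single request handler that applies each control in turn and logs every decision. This is a toy model under stated assumptions: the token store, route table, backend mapping, and WAF patterns are hypothetical stand-ins, DLP and network segmentation are omitted, and the regex signatures only illustrate the idea (production WAFs rely on maintained rule sets).

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Request:
    scheme: str
    token: Optional[str]
    path: str
    body: str = ""


# Hypothetical stand-ins for the centralized IAM store, routing, and SIEM feed.
TOKENS = {"tok-alice": {"user": "alice", "roles": {"reader"}}}
ROUTE_ROLES = {"/reports": "reader", "/admin": "admin"}
BACKENDS = {"/reports": "gcp", "/admin": "aws"}
AUDIT_LOG: List[Tuple[str, str, int]] = []  # (user, path, status) events

# Toy WAF signatures for illustration only.
WAF_PATTERNS = [re.compile(r"(?i)<script"), re.compile(r"(?i)'\s*or\s+1\s*=\s*1")]


def _decide(req: Request, identity: Optional[dict]) -> Tuple[int, str]:
    if req.scheme != "https":                       # secure channel required
        return 426, "TLS required"
    if identity is None:                            # IAM: authentication
        return 401, "unauthenticated"
    required_role = ROUTE_ROLES.get(req.path)
    if required_role is None:                       # API gateway: unknown route
        return 404, "no route"
    if required_role not in identity["roles"]:      # IAM: authorization
        return 403, "forbidden"
    if any(p.search(req.body) for p in WAF_PATTERNS):  # WAF
        return 400, "blocked by WAF"
    return 200, f"routed to {BACKENDS[req.path]}"   # hand off to a cloud provider


def handle(req: Request) -> Tuple[int, str]:
    """Apply the intermediary-layer controls in order and log the outcome."""
    identity = TOKENS.get(req.token or "")
    status, message = _decide(req, identity)
    user = identity["user"] if identity else "anonymous"
    AUDIT_LOG.append((user, req.path, status))      # every decision reaches the SIEM
    return status, message
```

Keeping these checks in one provider-agnostic place means the backends on AWS, Azure, or GCP receive only requests that have already passed the same policy.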

Data Migration and Integration

Data migration and integration represent significant hurdles in multi-cloud environments. The movement of data, encompassing vast datasets and complex dependencies, between diverse cloud platforms necessitates meticulous planning, execution, and ongoing management. Effective strategies are critical for minimizing downtime, ensuring data integrity, and optimizing application performance across the multi-cloud landscape.

Difficulties in Migrating Large Datasets

Migrating substantial datasets between different cloud platforms presents several technical and logistical challenges. These difficulties stem from factors such as network bandwidth limitations, data format incompatibilities, and the diverse architectures of cloud providers.

The sheer volume of data often necessitates extended migration timelines, increasing the potential for disruptions and associated costs. Furthermore, the “last mile” problem, where data transfer speeds are constrained by network bottlenecks at the source or destination, can significantly impact overall migration efficiency.

Cloud providers offer varying levels of network performance and data transfer services, requiring careful evaluation and selection based on specific needs.

Data format discrepancies pose another significant hurdle. Data stored in proprietary formats or using specific cloud-provider-dependent features requires transformation and compatibility adjustments. This process, often involving data transformation and validation, adds complexity and the potential for data loss or corruption.

Consider the example of a retail company migrating its product catalog (petabytes of data) from Amazon Web Services (AWS) to Google Cloud Platform (GCP). The migration process would involve:

- Network Assessment: Evaluating network bandwidth and identifying potential bottlenecks.

- Data Transformation: Converting data from AWS-specific formats to GCP-compatible formats.

- Data Validation: Ensuring data integrity and consistency after the migration.

- Downtime Planning: Scheduling the migration during periods of low activity to minimize disruption.

Failure to address these challenges can lead to prolonged migration times, increased costs, and potential data loss, impacting business operations.
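A back-of-the-envelope estimate makes the bandwidth problem concrete: transfer time is the data size in bits divided by the effective throughput. For example, 1 PB (1,000 TB) over a 10 Gbps link at an assumed 80% efficiency is 8 × 10^15 bits ÷ 8 × 10^9 bit/s = 10^6 seconds, or about 11.6 days of continuous transfer. The helper below is a minimal sketch; the efficiency factor is an assumption standing in for protocol overhead, contention, and retries, and decimal units (1 TB = 10^12 bytes) are used throughout.

```python
def transfer_days(size_tb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate wall-clock days to copy size_tb (decimal TB) over a link.

    efficiency is the assumed fraction of nominal bandwidth actually achieved.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_tb * 1e12 * 8                        # TB -> bytes -> bits
    seconds = bits / (link_gbps * 1e9 * efficiency)  # effective bits per second
    return seconds / 86_400                          # seconds per day
```

Estimates like this help decide early whether an online transfer fits the migration window or whether offline transfer appliances, such as Azure Data Box, are worth considering.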

Common Data Integration Challenges

Integrating data across multiple cloud environments introduces a range of integration challenges. These challenges include differences in data models, API incompatibilities, and the need for consistent data governance policies.

Data model discrepancies arise when different cloud platforms employ varying data structures and schemas. This can necessitate complex data mapping and transformation processes to ensure data compatibility and consistency across applications. For example, a financial services company might use different data models for customer information in AWS and Azure, requiring complex data mapping logic for consistent reporting.

API incompatibilities between cloud platforms can also hinder data integration efforts. Applications and services built on one cloud platform may not seamlessly communicate with those on another, requiring the development of custom integrations or the use of middleware solutions.

Data governance is crucial for ensuring data quality, security, and compliance. Establishing consistent data governance policies across multiple clouds is challenging. Data lineage, access control, and data quality standards must be carefully defined and enforced to maintain data integrity and regulatory compliance.

For instance, adhering to GDPR (General Data Protection Regulation) across various cloud environments requires consistent data access control and data anonymization policies.
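The usual remedy for data model discrepancies is to map each source schema into one canonical model before reporting. The sketch below assumes two hypothetical customer schemas, one per cloud, that differ in ID type, name structure, and date format; the field names and formats are illustrative, not taken from any real system, and the name split is deliberately naive.

```python
from datetime import date
from typing import Any, Dict


def from_system_a(rec: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical AWS-hosted schema: numeric id, single name field, ISO date."""
    first, _, last = rec["full_name"].partition(" ")  # naive split, illustration only
    return {
        "customer_id": str(rec["cust_id"]),
        "first_name": first,
        "last_name": last,
        "birth_date": date.fromisoformat(rec["dob"]),
    }


def from_system_b(rec: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical Azure-hosted schema: string id, split names, MM/DD/YYYY date."""
    month, day, year = (int(part) for part in rec["birthDate"].split("/"))
    return {
        "customer_id": rec["customerId"],
        "first_name": rec["firstName"],
        "last_name": rec["lastName"],
        "birth_date": date(year, month, day),
    }
```

Once both sources produce the same canonical record, reporting, deduplication, and governance rules such as access control can be written once against that model.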

Best Practices for Data Migration Strategies

Implementing effective data migration strategies is essential for minimizing risks and maximizing the benefits of a multi-cloud environment. The following best practices provide a framework for successful data migration:

- Assess and Plan: Begin by thoroughly assessing the existing data landscape, including data volume, data types, dependencies, and compliance requirements. Develop a detailed migration plan outlining the scope, timeline, resources, and risk mitigation strategies.

- Choose the Right Tools: Select appropriate data migration tools and services based on the specific needs of the migration. Consider factors such as data volume, data formats, and migration speed. Tools like AWS DataSync, Google Cloud Storage Transfer Service, and Azure Data Box offer various data migration capabilities.

- Select the Migration Approach: Choose the appropriate migration approach based on business requirements and technical constraints. Options include:

  - Lift and Shift: Migrating data as-is with minimal changes.

  - Re-platforming: Making minor changes to the data to optimize it for the new platform.

  - Re-architecting: Redesigning the data and application architecture for the new platform.

- Test and Validate: Conduct thorough testing and validation throughout the migration process. Verify data integrity, data accuracy, and application functionality after migration. Perform user acceptance testing (UAT) to ensure that the migrated data meets business requirements.

- Automate and Orchestrate: Automate the data migration process as much as possible. Use orchestration tools to manage and monitor the migration workflow, reducing manual effort and minimizing errors.

- Monitor and Optimize: Continuously monitor the data migration progress and performance. Identify and address any bottlenecks or issues that arise during the migration. Optimize the migration process based on real-time data and feedback.

- Implement Data Security: Implement robust data security measures throughout the migration process. Encrypt data in transit and at rest, and enforce strict access controls to protect sensitive information.
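For the test-and-validate step, one portable approach is to compute checksums independently on both sides and compare manifests. Provider-reported hashes are not always comparable across platforms (for example, S3 ETags for multipart uploads are not a plain MD5 of the object), so this sketch computes its own SHA-256 digests. It assumes objects arrive as `(key, bytes)` pairs for simplicity; large objects would normally be streamed in chunks through `hashlib`'s `update()` instead of loaded whole.

```python
import hashlib
from typing import Dict, Iterable, List, Tuple


def manifest(objects: Iterable[Tuple[str, bytes]]) -> Dict[str, str]:
    """Map each object key to the SHA-256 hex digest of its content."""
    return {key: hashlib.sha256(data).hexdigest() for key, data in objects}


def compare_manifests(source: Dict[str, str], target: Dict[str, str]) -> Dict[str, List[str]]:
    """Report keys missing from the target, unexpected in it, or with different content."""
    return {
        "missing": sorted(source.keys() - target.keys()),
        "unexpected": sorted(target.keys() - source.keys()),
        "mismatched": sorted(k for k in source.keys() & target.keys() if source[k] != target[k]),
    }
```

An empty result in all three lists is a reasonable exit criterion before cutover; any non-empty list points to exactly which objects need to be re-copied.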

Cost Management and Optimization

Multi-cloud environments introduce significant complexity to cost management, demanding sophisticated strategies to avoid overspending and maximize return on investment. The distributed nature of resources across different providers, each with its own pricing structure and billing mechanisms, requires a proactive and granular approach to monitoring and control. Without careful planning and execution, the benefits of multi-cloud can be quickly eroded by escalating costs.

Challenges in Monitoring and Controlling Costs Across Multiple Cloud Providers

Effectively managing costs in a multi-cloud setup presents several hurdles. These challenges necessitate a multi-faceted strategy to ensure cost efficiency.

- Heterogeneous Pricing Models: Each cloud provider, such as AWS, Azure, and GCP, employs unique pricing structures for similar services. This can involve varying costs for compute instances, storage, data transfer, and other resources. Understanding these nuances and how they impact total cost of ownership (TCO) is crucial.

- Lack of Unified Visibility: Gaining a comprehensive view of spending across multiple platforms is often difficult. Different billing portals, data formats, and reporting capabilities can make it challenging to aggregate and analyze cost data. This lack of centralized visibility hinders the ability to identify cost drivers and inefficiencies.

- Complexity of Resource Allocation: Accurately attributing costs to specific projects, teams, or applications becomes more complex in a multi-cloud environment. Without proper tagging and cost allocation strategies, it’s difficult to understand where the money is being spent and to hold teams accountable for their resource usage.

- Difficulty in Forecasting and Budgeting: Predicting future cloud spending is inherently complex, especially with the dynamic nature of cloud resource consumption. The variability in pricing, combined with the potential for unexpected usage spikes, can make it challenging to create accurate budgets and forecasts.

- Governance and Policy Enforcement: Implementing and enforcing cost governance policies across multiple clouds can be challenging. This includes setting budgets, defining resource limits, and automating cost optimization recommendations. Ensuring consistent policy enforcement across all providers requires robust automation and monitoring capabilities.
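Unified visibility usually starts with normalizing each provider's billing export into one record shape and then aggregating. The sketch below is hypothetical: the field names are simplified stand-ins loosely inspired by how the providers present costs and tags/labels, not the exact export schemas, and it assumes all costs are already in a single currency.

```python
from collections import defaultdict
from decimal import Decimal
from typing import Any, Dict, Iterable, Tuple


def normalize(provider: str, row: Dict[str, Any]) -> Tuple[str, Decimal]:
    """Map one billing row to (team, cost). Field names are hypothetical."""
    if provider == "aws":
        cost, tags = row["unblended_cost"], row.get("tags", {})
    elif provider == "azure":
        cost, tags = row["costInBillingCurrency"], row.get("tags", {})
    elif provider == "gcp":
        cost = row["cost"]
        # Labels arrive as a list of key/value pairs in this assumed format.
        tags = {label["key"]: label["value"] for label in row.get("labels", [])}
    else:
        raise ValueError(f"unknown provider: {provider}")
    # Match tag keys case-insensitively so "Team" and "team" count as the same tag.
    lowered = {key.lower(): value for key, value in tags.items()}
    # Decimal via str() avoids binary floating-point drift when summing money.
    return lowered.get("team", "untagged"), Decimal(str(cost))


def cost_by_team(rows: Iterable[Tuple[str, Dict[str, Any]]]) -> Dict[str, Decimal]:
    """Sum normalized costs per team across all providers."""
    totals: Dict[str, Decimal] = defaultdict(Decimal)
    for provider, row in rows:
        team, cost = normalize(provider, row)
        totals[team] += cost
    return dict(totals)
```

The "untagged" bucket is itself a useful governance metric: a large share there signals that cost allocation policies are not being enforced.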

Comparing Pricing Models of AWS, Azure, and GCP for Similar Services

Cloud providers offer similar services, but their pricing models vary significantly. Understanding these differences is crucial for making informed decisions about resource allocation and optimizing costs.

Let’s examine the pricing of compute instances, storage, and data transfer across AWS, Azure, and GCP.

Compute Instances:

Consider the pricing for a standard virtual machine with similar specifications (e.g., 4 vCPUs, 16 GB RAM). Note that prices are approximate and subject to change.

| Cloud Provider | Instance Type (Example) | vCPUs / Memory | Hourly Price (USD, Example) | Pricing Models |
|---|---|---|---|---|
| AWS | m5.xlarge | 4 / 16 GiB | $0.192 | On-Demand, Reserved Instances, Spot Instances |
| Azure | Standard_D4s_v3 | 4 / 16 GiB | $0.194 | Pay-as-you-go, Reserved Instances, Spot VMs |
| GCP | n1-standard-4 | 4 / 15 GB | $0.189 | On-Demand, Committed Use Discounts, Preemptible VMs |

Note: The table shows illustrative on-demand prices. Actual prices vary by region, operating system, and other factors, and change over time.
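Hourly rates are easier to compare once converted to monthly figures. The sketch below uses the common convention of 730 hours per month (8,760 hours per year divided by 12) and the example prices from the table; the `utilization` and `discount` parameters are illustrative knobs, and any discount value you pass is an assumption, not a quoted rate.

```python
HOURS_PER_MONTH = 730  # common convention: 8,760 hours per year / 12

# Example on-demand hourly prices from the table (illustrative, not current quotes).
EXAMPLE_HOURLY_USD = {"aws": 0.192, "azure": 0.194, "gcp": 0.189}


def monthly_cost(hourly_usd: float, instances: int = 1,
                 utilization: float = 1.0, discount: float = 0.0) -> float:
    """Estimated monthly cost in USD.

    utilization: fraction of the month the instances run (1.0 = always on).
    discount: fractional discount from a commitment or spot pricing (assumed).
    """
    return round(hourly_usd * HOURS_PER_MONTH * instances * utilization * (1 - discount), 2)


for provider, price in EXAMPLE_HOURLY_USD.items():
    print(provider, monthly_cost(price))
```

At these example rates a single always-on instance costs roughly $140 (AWS), $142 (Azure), and $138 (GCP) per month, a spread small enough that purchase options, utilization, and data transfer often matter more than the list price.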

Storage:

Storage pricing also differs. For object storage, consider the cost per GB per month.

| Cloud Provider | Storage Type (Example) | Price per GB-Month (USD, Example) |
|---|---|---|
| AWS | S3 Standard | $0.023 |
| Azure | Azure Blob Storage (Hot) | $0.023 |
| GCP | Cloud Storage (Standard) | $0.020 |

Note: Storage pricing can vary based on storage class (e.g., hot, cold, archive) and data access frequency.

Data Transfer:

Data transfer costs, especially for outbound data (data leaving the cloud provider’s network), can be significant. The pricing varies by provider and region.

Example (Outbound Data Transfer from US East Region to Internet):

| Cloud Provider | Price per GB (USD – Example) |
|---|---|
| AWS | $0.09 per GB for the first 10 TB/month, then $0.085 per GB for the next 40 TB |
| Azure | $0.087 per GB |
| GCP | $0.08 per GB for the first 1 TB |

Note: Data transfer pricing is tiered, with lower rates for larger volumes of data.

These examples demonstrate the need for a thorough analysis of pricing across providers. Factors to consider include:

- Instance Type and Size: Choosing the right instance size based on workload requirements.

- Pricing Models: Utilizing reserved instances, committed use discounts, or spot/preemptible instances to reduce costs.

- Storage Class: Selecting the appropriate storage class based on data access frequency and durability needs.

- Data Transfer Patterns: Optimizing data transfer to minimize costs, considering regional pricing and data egress charges.
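To see how these factors interact, the sketch below turns example prices like those in the tables above into a rough monthly estimate. The tier boundaries and workload figures are hypothetical. The figure of 730 hours per month is a common billing convention, not any provider's exact rule.

```python
from typing import Optional

HOURS_PER_MONTH = 730  # common convention: 8,760 hours per year / 12 months

# A tier is (GB covered by this tier, price per GB); None means "all remaining GB".
Tier = tuple[Optional[float], float]

# Hypothetical egress schedule for illustration only: first 10,240 GB at $0.09,
# everything beyond that at $0.085. Check each provider's price list for real tiers.
EXAMPLE_EGRESS_TIERS: list[Tier] = [(10_240, 0.09), (None, 0.085)]


def tiered_cost(gb: float, tiers: list[Tier]) -> float:
    """Charge each slice of usage at the rate of the tier it falls into."""
    remaining = gb
    total = 0.0
    for size, price in tiers:
        if remaining <= 0:
            break
        billable = remaining if size is None else min(remaining, size)
        total += billable * price
        remaining -= billable
    return total


def monthly_estimate(hourly_rate: float, instances: int, storage_gb: float,
                     storage_rate: float, egress_gb: float,
                     egress_tiers: list[Tier]) -> float:
    """Rough monthly total: always-on compute + object storage + internet egress."""
    compute = hourly_rate * HOURS_PER_MONTH * instances
    storage = storage_gb * storage_rate
    egress = tiered_cost(egress_gb, egress_tiers)
    return round(compute + storage + egress, 2)


if __name__ == "__main__":
    # Four always-on VMs at $0.192/h, 5,000 GB of object storage at $0.023/GB-month,
    # and 2,000 GB of internet egress per month.
    print(monthly_estimate(0.192, 4, 5_000, 0.023, 2_000, EXAMPLE_EGRESS_TIERS))
```

For the inputs shown, the estimate comes to $855.64 (compute $560.64, storage $115.00, egress $180.00). Running the same function with each provider's rates and tiers gives a like-for-like comparison for a given workload.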

Demonstrating the Use of Cost Optimization Tools in a Multi-Cloud Setup

Several tools and strategies can be employed to optimize costs in a multi-cloud environment. These tools provide insights, recommendations, and automation capabilities.

- Cloud Provider Native Tools: Each cloud provider offers its own cost management tools. AWS offers AWS Cost Explorer and AWS Budgets. Azure offers Microsoft Cost Management (formerly Azure Cost Management), which includes budgets. Google Cloud offers Cloud Billing reports and Cloud Billing budgets. These tools provide detailed cost breakdowns, budget alerts, and cost optimization recommendations specific to each platform.

- Third-Party Cost Management Platforms: Several third-party platforms, such as CloudHealth by VMware, Apptio Cloudability, and Flexera, provide multi-cloud cost management capabilities. These tools aggregate cost data from multiple providers, offer unified dashboards, and provide advanced analytics and optimization recommendations.

- Cost Allocation and Tagging: Implementing a consistent tagging strategy across all cloud providers is crucial for cost allocation. Tagging resources with project names, departments, or applications allows for accurate cost tracking and reporting.

- Automated Cost Optimization Recommendations: Many cost management tools provide automated recommendations for optimizing costs. This can include identifying idle resources, right-sizing instances, and recommending the use of reserved instances or committed use discounts.

- Budgeting and Alerting: Setting budgets and configuring alerts to monitor spending is essential. This allows for proactive identification of potential cost overruns and enables timely action to mitigate them.

- Right-Sizing Instances: Regularly analyzing resource utilization and adjusting instance sizes to match actual needs is crucial. Over-provisioned instances lead to unnecessary costs.

- Automated Resource Shutdowns: Implement automation to shut down non-production resources (e.g., development or testing environments) during off-peak hours.

- Cost Monitoring Dashboards: Create custom dashboards to visualize cost trends, identify cost drivers, and track the effectiveness of cost optimization efforts.
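A consistent tagging strategy is easier to enforce when tags are normalized into a form that every provider accepts. Google Cloud labels are the most restrictive of the three: lowercase letters, digits, underscores, and dashes, up to 63 characters. The sketch below therefore assumes an organization standardizes on that subset. The required keys (`project`, `department`, `environment`) and the billing-record format are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical organization policy: every resource must carry these tag keys.
REQUIRED_KEYS = {"project", "department", "environment"}

# Keep only characters that Google Cloud labels accept, so one tag set works everywhere.
_DISALLOWED = re.compile(r"[^a-z0-9_-]")


def normalize_tag(text: str) -> str:
    """Lowercase, replace disallowed characters with '_', and truncate to 63 chars.

    This sketch does not enforce Google Cloud's rule that label keys start with a letter.
    """
    return _DISALLOWED.sub("_", text.strip().lower())[:63]


def normalize_tags(tags: dict[str, str]) -> dict[str, str]:
    return {normalize_tag(k): normalize_tag(v) for k, v in tags.items()}


def missing_required(tags: dict[str, str]) -> set[str]:
    """Report which required keys a resource lacks after normalization."""
    return REQUIRED_KEYS - set(normalize_tags(tags))


def allocate_costs(records: list[dict]) -> dict[str, float]:
    """Sum cost per project across providers; untagged spend is reported separately."""
    totals: dict[str, float] = defaultdict(float)
    for rec in records:
        project = normalize_tags(rec["tags"]).get("project", "untagged")
        totals[project] += rec["cost"]
    return dict(totals)
```

With normalization in place, an AWS resource tagged `Project=Checkout` and an Azure resource tagged `project=checkout` roll up into the same `checkout` cost line. Reporting spend without a `project` tag as `untagged` makes gaps in tagging visible.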

Example: Using a Third-Party Tool

Let’s consider a hypothetical scenario where a company uses CloudHealth to manage its multi-cloud environment (AWS and Azure). The tool aggregates cost data from both providers and provides a unified view of spending.

Step 1: Data Aggregation: CloudHealth automatically pulls cost data from AWS and Azure using APIs. The tool presents this data in a single dashboard.

Step 2: Tagging and Cost Allocation: The company has implemented a consistent tagging strategy, tagging all resources with project names and department codes. CloudHealth uses these tags to allocate costs to specific projects and departments, providing insights into which areas are driving the most spending.

Step 3: Optimization Recommendations: CloudHealth analyzes the data and identifies potential cost savings. For example, it might recommend right-sizing a specific EC2 instance in AWS or purchasing reserved instances in Azure. It may also identify unused resources that can be shut down.

Step 4: Automated Actions: Based on the recommendations, the company can implement automated actions. For instance, they might automate the shutdown of development instances outside of business hours using CloudHealth’s automation capabilities. This reduces costs without manual intervention.

Step 5: Reporting and Analysis: The tool generates reports and dashboards showing cost trends, savings achieved, and the overall efficiency of the cloud environment. This allows the company to continuously monitor and optimize its cloud spending.
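The shutdown logic in Step 4 does not depend on any particular tool. The function below is a provider-neutral sketch that decides which resources to stop. The business hours, the `environment` and `always_on` tag names, and the inventory format are hypothetical. Times are treated as local office time. The actual stop calls, one per provider SDK, are left out.

```python
from datetime import datetime, time

# Hypothetical policy: business hours are 08:00-19:00, Monday to Friday.
BUSINESS_START = time(8, 0)
BUSINESS_END = time(19, 0)
NON_PRODUCTION = {"dev", "test", "staging"}


def in_business_hours(now: datetime) -> bool:
    # weekday(): Monday is 0, Sunday is 6.
    return now.weekday() < 5 and BUSINESS_START <= now.time() < BUSINESS_END


def should_stop(tags: dict[str, str], now: datetime) -> bool:
    """Stop non-production resources outside business hours unless opted out."""
    if tags.get("always_on", "").lower() == "true":
        return False
    return tags.get("environment", "").lower() in NON_PRODUCTION and not in_business_hours(now)


def plan_shutdowns(inventory: list[dict], now: datetime) -> list[str]:
    """Return IDs to stop; each provider's SDK would then perform the stop."""
    return [item["id"] for item in inventory if should_stop(item["tags"], now)]
```

Keeping the decision separate from the provider-specific stop calls means the same schedule applies to AWS, Azure, and GCP resources alike. It also lets the schedule be tested without touching real infrastructure.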

By using a combination of cloud provider native tools, third-party platforms, tagging, and automation, organizations can effectively manage and optimize costs in their multi-cloud environments. The specific tools and strategies employed will depend on the size and complexity of the environment, the level of cost control required, and the specific goals of the organization.

Vendor Lock-in and Portability Issues

The allure of multi-cloud environments is often tempered by the realities of vendor lock-in and the challenges of application portability. These issues can significantly impact the flexibility, cost-effectiveness, and overall success of a multi-cloud strategy. Understanding and proactively addressing these concerns is crucial for organizations aiming to leverage the benefits of a diversified cloud approach.

Risks Associated with Vendor Lock-in

Vendor lock-in in a multi-cloud context presents several potential risks that can undermine the intended benefits of cloud diversification. These risks stem from the proprietary nature of some cloud services and the complexities of data migration and application refactoring.

- Dependency on Proprietary Services: Utilizing vendor-specific services, such as databases, machine learning platforms, or specialized storage solutions, creates dependencies that make it difficult to migrate applications to other cloud providers. This limits the ability to switch providers to optimize costs, performance, or availability. For example, an organization heavily reliant on a specific cloud provider’s serverless computing platform would face significant challenges in migrating its applications to a different provider’s serverless offering due to code compatibility issues and service differences.

- Increased Costs: Vendor lock-in can lead to inflated costs over time. Cloud providers may increase prices, knowing that customers are less likely to switch due to the complexities of migration. Furthermore, the lack of competition can stifle innovation and prevent organizations from benefiting from more cost-effective solutions offered by other providers.

- Reduced Flexibility and Agility: Being locked into a single vendor limits an organization’s ability to respond to changing business needs and technological advancements. The inability to quickly adopt new technologies or leverage the best-of-breed services offered by different providers can hinder innovation and competitive advantage. For example, if a new, more efficient machine learning algorithm becomes available on a competitor’s platform, a locked-in organization may be unable to quickly integrate it.

- Operational Complexity: Managing multiple instances of the same application across different cloud providers, each with its own proprietary tools and interfaces, adds significant operational complexity. This can increase the risk of errors, downtime, and security vulnerabilities. Managing disparate security models, monitoring systems, and deployment pipelines across different cloud environments adds to the administrative overhead.

Difficulties in Achieving Application Portability

Application portability, the ability to seamlessly move an application between different cloud platforms, is a cornerstone of a successful multi-cloud strategy. However, achieving true portability is a complex undertaking.

- Incompatible APIs and Services: Cloud providers offer different APIs and services, making it challenging to write code that works consistently across multiple platforms. Applications that rely on vendor-specific APIs or services must be refactored or rewritten to work on other platforms. This process is time-consuming, expensive, and can introduce errors.

- Data Migration Challenges: Migrating data between different cloud platforms can be a complex and time-consuming process. Data formats, storage mechanisms, and database schemas may differ, requiring data transformation and conversion. The volume of data, network bandwidth, and downtime requirements can further complicate the migration process.

- Configuration and Infrastructure Differences: Even if the application code is portable, the underlying infrastructure configurations can vary significantly between cloud providers. This includes networking configurations, security settings, and monitoring tools. Ensuring consistent infrastructure configurations across multiple platforms requires careful planning and automation.

- Operational Skillset Requirements: Managing applications across multiple cloud platforms requires a diverse skillset. Teams need to be proficient in the specific tools, services, and best practices of each provider. This can lead to increased training costs and operational overhead.

Strategies to mitigate vendor lock-in include:

- Adopting Open Standards: Prioritizing the use of open-source technologies, open APIs, and industry standards to minimize dependencies on proprietary vendor solutions.

- Containerization: Utilizing containerization technologies, such as Docker and Kubernetes, to package applications and their dependencies into portable units that can run consistently across different cloud environments.

- Microservices Architecture: Designing applications as a collection of independent, loosely coupled microservices that can be deployed and managed independently, making it easier to migrate or replace individual components.

- Abstraction Layers: Implementing abstraction layers that decouple applications from the underlying cloud infrastructure, allowing for easier portability and switching between providers.

- Multi-Cloud Management Tools: Leveraging multi-cloud management platforms to provide a unified view of resources, automate deployments, and simplify operations across multiple cloud environments.
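An abstraction layer can be as simple as an interface that application code depends on, with one adapter per provider behind it. The sketch below uses Python's `typing.Protocol` to define a hypothetical `ObjectStore` interface. A real adapter would wrap an SDK such as boto3, azure-storage-blob, or google-cloud-storage. Those adapters are not shown here, and an in-memory backend stands in for both sides.

```python
from typing import Iterable, Protocol


class ObjectStore(Protocol):
    """Hypothetical provider-neutral interface the application codes against."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in backend; a real adapter would wrap a provider SDK."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def archive_report(store: ObjectStore, report_id: str, body: str) -> str:
    """Business logic depends only on the interface, not on a specific cloud."""
    key = f"reports/{report_id}.txt"
    store.put(key, body.encode("utf-8"))
    return key


def migrate(source: ObjectStore, target: ObjectStore, keys: Iterable[str]) -> None:
    """Copy objects between any two backends that implement the interface."""
    for key in keys:
        target.put(key, source.get(key))
```

The trade-off is that the interface exposes only what every backend can support. Provider-specific features, such as a particular storage class or lifecycle rule, either stay outside the abstraction or need explicit extension points.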

Skill Gap and Training Requirements

The transition to a multi-cloud environment introduces a significant challenge: the need for specialized skills across various cloud platforms. Organizations often face a skill gap, where their existing IT teams lack the expertise required to effectively manage and optimize resources across multiple cloud providers. This deficiency can hinder the successful implementation, operation, and ongoing management of a multi-cloud strategy, leading to inefficiencies, security vulnerabilities, and increased operational costs.

Addressing this skill gap through targeted training and resource allocation is crucial for realizing the full potential of a multi-cloud approach.

Challenges of Managing Skill Sets in Multi-Cloud Environments

Managing a multi-cloud setup requires a diverse skill set that extends beyond traditional IT expertise. This includes proficiency in multiple cloud platforms, automation tools, security protocols, and cost management strategies. Successfully navigating these complexities requires a strategic approach to talent acquisition, training, and skill development.

- Platform-Specific Expertise: Deep understanding of each cloud provider’s services, APIs, and management consoles is essential. This includes knowledge of AWS, Azure, and GCP, covering areas like compute, storage, networking, databases, and serverless computing. For example, a team might need to understand AWS’s EC2 instances, Azure’s Virtual Machines, and GCP’s Compute Engine, along with their respective pricing models and performance characteristics.

- Automation and Orchestration Skills: Automation is key to managing multi-cloud environments efficiently, so skills in infrastructure-as-code (IaC) tools are critical. Terraform can provision resources across all major providers from a single workflow. AWS CloudFormation, Azure Resource Manager (ARM), and Google Cloud Deployment Manager, by contrast, are each tied to one platform. Together, these tools allow for the automated provisioning, configuration, and management of cloud resources.

- Security and Compliance Proficiency: Security is paramount in a multi-cloud setup. Expertise in cloud security best practices, identity and access management (IAM), data encryption, and compliance frameworks (e.g., HIPAA, GDPR) is vital. Teams must be able to implement security controls consistently across different cloud providers.

- Cost Management and Optimization: Managing cloud costs effectively is crucial. This requires skills in cost analysis, resource optimization, and the use of cost management tools provided by each cloud provider. The ability to identify and eliminate wasted resources, leverage reserved instances, and implement cost-saving strategies is essential.

- Networking Expertise: Understanding cloud networking concepts, including virtual private clouds (VPCs), subnets, routing, and load balancing, is necessary to design and manage network connectivity between different cloud environments and on-premises infrastructure. This includes skills in configuring and troubleshooting network issues across different cloud platforms.

- Monitoring and Observability Skills: The ability to monitor the performance and health of applications and infrastructure across multiple clouds is critical. This involves using monitoring tools like Prometheus, Grafana, and cloud-specific monitoring services to collect, analyze, and visualize data.

Training Requirements for Multi-Cloud Implementation and Maintenance

Comprehensive training programs are vital to equip IT teams with the necessary skills to succeed in a multi-cloud environment. These programs should cover a range of topics, from foundational cloud concepts to advanced platform-specific skills and best practices. Training should be continuous and adapted to keep up with the evolving cloud landscape.

- Foundational Cloud Training: This covers the fundamental concepts of cloud computing, including IaaS, PaaS, and SaaS models, cloud deployment models (public, private, hybrid), and the benefits and challenges of cloud adoption.

- Platform-Specific Training: In-depth training on AWS, Azure, and GCP, covering their core services, management consoles, and best practices. This should include hands-on labs and practical exercises to reinforce learning.

- Automation and DevOps Training: Training on IaC tools, CI/CD pipelines, containerization (Docker, Kubernetes), and automation tools like Ansible and Chef.

- Security Training: Training on cloud security best practices, IAM, data encryption, compliance frameworks, and security tools.

- Cost Management Training: Training on cost analysis, resource optimization, and the use of cloud provider cost management tools.

- Monitoring and Observability Training: Training on monitoring tools, log analysis, and performance optimization techniques.

- Soft Skills Development: Training in communication, collaboration, and problem-solving, as these skills are essential for working effectively in cross-functional teams and managing complex multi-cloud environments.

Resources for Learning Cloud-Specific Skills

Numerous resources are available to help individuals and organizations acquire the necessary cloud skills. These resources range from official cloud provider training programs to third-party certifications and online courses. The choice of resources should be based on individual learning preferences, skill level, and career goals.

- AWS Resources:

  - AWS Training and Certification: Official AWS training courses and certifications, covering a wide range of topics from foundational to advanced levels.

  - AWS Documentation: Comprehensive documentation, tutorials, and best practices guides.

  - AWS Skill Builder: A platform offering a variety of digital courses and learning paths.

  - AWS Whitepapers and Technical Guides: In-depth technical documents on various AWS services and solutions.

- Azure Resources:

  - Microsoft Learn: Microsoft's online learning platform offering free, interactive modules and learning paths for Azure.

  - Microsoft Azure Training and Certification: Official Azure training courses and certifications.

  - Azure Documentation: Comprehensive documentation, tutorials, and best practices guides.

  - Azure Architecture Center: Guidance on designing and implementing cloud solutions on Azure.

- GCP Resources:

  - Google Cloud Training: Official Google Cloud training courses and certifications.

  - Google Cloud Documentation: Comprehensive documentation, tutorials, and best practices guides.

  - Google Cloud Skills Boost (formerly Qwiklabs): Hands-on labs and training environments for GCP.

  - Google Cloud Architecture Center: Guidance on designing and implementing cloud solutions on GCP.

- Third-Party Training and Certifications:

  - Cloud Providers' Partner Programs: These programs often provide access to specialized training and resources.

  - Online Learning Platforms: Platforms like Coursera, Udemy, and A Cloud Guru offer a wide variety of cloud computing courses.

  - Industry Certifications: Certifications from organizations like CompTIA and (ISC)² can validate cloud skills and knowledge.

Network Connectivity and Performance

Establishing and maintaining robust network connectivity and ensuring optimal application performance are critical pillars for the success of any multi-cloud migration strategy. The inherent complexities of integrating disparate cloud environments necessitate a meticulous approach to network design and management. Poorly planned network architecture can lead to increased latency, reduced bandwidth, and ultimately, compromised application performance, hindering the benefits of multi-cloud deployments.

Challenges in Establishing and Maintaining Reliable Network Connectivity

The primary challenge in establishing reliable network connectivity in a multi-cloud environment stems from the fundamental differences in network architectures and security protocols employed by various cloud providers. These differences necessitate the implementation of sophisticated solutions to ensure seamless communication between workloads hosted across different cloud platforms.

- Inter-Cloud Connectivity Options: Establishing connectivity between cloud providers can be achieved through several methods, each with its own set of trade-offs:

- Public Internet: Utilizing the public internet for inter-cloud communication is the simplest approach, but it introduces significant security and performance risks due to its inherent unreliability and vulnerability to cyber threats. Data traversing the public internet is susceptible to eavesdropping, man-in-the-middle attacks, and denial-of-service attacks. Furthermore, the unpredictable nature of internet routing can lead to inconsistent latency and bandwidth limitations.

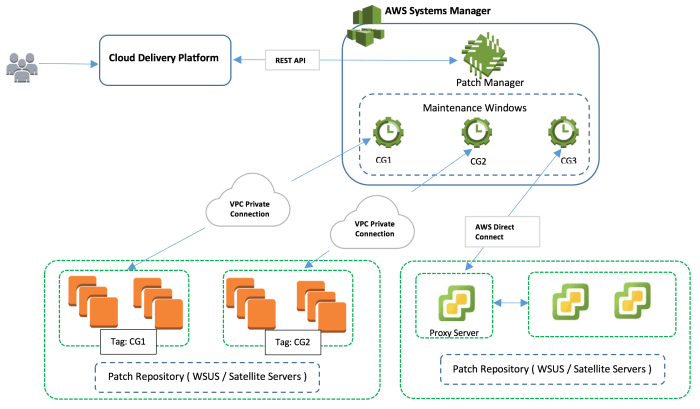

- Virtual Private Networks (VPNs): VPNs provide a more secure alternative by encrypting network traffic and establishing a secure tunnel over the public internet. However, VPNs can still suffer from performance limitations due to the overhead of encryption and the reliance on the underlying internet infrastructure. Site-to-site VPNs connect entire networks, while client-to-site VPNs enable individual users or devices to connect.

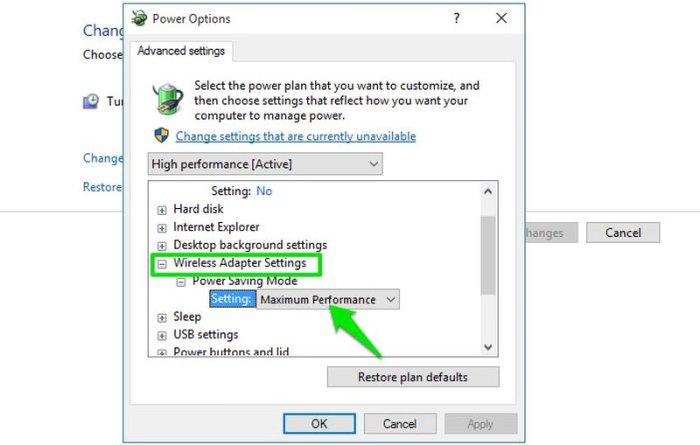

- Direct Connect/Dedicated Connections: Dedicated connections, such as AWS Direct Connect, Azure ExpressRoute, and Google Cloud Interconnect, offer the most predictable performance and reliability. These services provide a private, dedicated network connection between the cloud provider's network and either the customer's on-premises infrastructure or a third-party data center. For cloud-to-cloud traffic, circuits to each provider are commonly terminated in a shared colocation facility. Because traffic stays off the public internet, latency and bandwidth are more consistent and exposure to internet-borne attacks is reduced. Note, however, that these links are not encrypted by default. Dedicated connections are also more expensive and require more complex configuration.


- Network Address Translation (NAT) and IP Addressing: Managing IP address conflicts and network address translation (NAT) across different cloud environments can be a significant challenge. Teams frequently create VPCs and virtual networks with overlapping private address ranges in different clouds (for example, reusing 10.0.0.0/16 in every environment). Once those networks are connected, the overlap causes routing conflicts and communication failures. NAT can work around such conflicts, and it also translates private IP addresses to public ones for internet access. However, each additional translation layer adds latency and configuration complexity.

- Security and Firewall Management: Implementing and managing security policies across multiple cloud providers can be complex. Each cloud provider has its own firewall and security group configuration options. Consistent security policies and enforcement across all cloud environments are crucial to prevent security breaches. Centralized security management tools can help streamline the configuration and monitoring of security policies across multiple cloud providers.

- Monitoring and Troubleshooting: Monitoring network performance and troubleshooting connectivity issues across multiple cloud providers requires a comprehensive set of tools and expertise. Identifying the root cause of performance problems can be difficult when the network spans multiple cloud environments. Centralized logging and monitoring solutions are essential for collecting and analyzing network traffic data, identifying performance bottlenecks, and proactively addressing connectivity issues.
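Overlapping address ranges are easiest to fix before networks are connected. The minimal sketch below uses Python's standard `ipaddress` module to check a planned address allocation for conflicts. The network names and CIDR blocks are hypothetical.

```python
import ipaddress
from itertools import combinations


def find_overlaps(networks: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named networks whose CIDR blocks overlap."""
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    return [
        (a, b)
        for a, b in combinations(parsed, 2)
        if parsed[a].overlaps(parsed[b])
    ]


if __name__ == "__main__":
    # Hypothetical address plan spanning three providers.
    plan = {
        "aws-prod-vpc": "10.0.0.0/16",
        "azure-prod-vnet": "10.0.0.0/16",
        "gcp-prod-vpc": "10.1.0.0/16",
        "aws-dev-vpc": "10.1.128.0/20",
    }
    for a, b in find_overlaps(plan):
        print(f"conflict: {a} ({plan[a]}) overlaps {b} ({plan[b]})")
```

Running a check like this in the pipeline that provisions networks catches conflicts while re-addressing is still cheap. After the networks are peered or connected through VPN, the usual options are NAT or renumbering.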

Factors Impacting Application Performance in a Multi-Cloud Environment

Several factors can significantly impact application performance in a multi-cloud environment. Understanding these factors is crucial for optimizing application performance and ensuring a positive user experience.

- Latency: Network latency, the time it takes for data to travel between two points, is a critical factor in application performance. Higher latency can lead to slower response times and a degraded user experience. Latency can be affected by several factors, including the physical distance between cloud regions, network congestion, and the routing paths taken by network traffic. Minimizing latency is essential for applications that require real-time interaction or low response times. For example, a financial trading application needs to minimize latency to ensure quick execution of trades.

- Bandwidth: Bandwidth, the amount of data that can be transmitted over a network connection in a given time, is another critical factor. Insufficient bandwidth can lead to slow data transfer rates and performance bottlenecks. Bandwidth limitations can be particularly problematic for applications that involve large data transfers, such as video streaming or data backups. Selecting cloud providers with sufficient bandwidth capacity and optimizing data transfer methods are essential for mitigating bandwidth limitations.

- Network Congestion: Network congestion occurs when too much traffic is competing for the available network resources. Congestion can lead to packet loss, increased latency, and reduced application performance. Network congestion can be caused by various factors, including peak traffic periods, network hardware limitations, and poorly designed network architectures. Monitoring network traffic and implementing traffic shaping techniques can help mitigate network congestion.

- Routing Optimization: The routing paths taken by network traffic can significantly impact application performance. Suboptimal routing can lead to longer travel times and increased latency. Optimizing routing paths by selecting the most direct and efficient routes between cloud regions and data centers is essential for minimizing latency and maximizing performance. Using content delivery networks (CDNs) can help optimize routing for content delivery by caching content closer to users.

- Application Architecture: The application architecture itself can impact performance in a multi-cloud environment. Applications that are not designed to handle the distributed nature of multi-cloud environments may experience performance issues. Optimizing application architecture for multi-cloud environments involves techniques such as:

  - Microservices: Breaking down applications into smaller, independent microservices that can be deployed and scaled independently.

  - Data Locality: Storing data closer to the users or applications that need it.

  - Caching: Implementing caching mechanisms to reduce the load on backend systems and improve response times.
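A quick calculation shows how bandwidth and latency bound data movement between clouds. The sketch below assumes an 80% link efficiency for bulk transfers, a hypothetical figure. It also uses the standard bound that a single TCP stream cannot exceed its window size divided by the round-trip time.

```python
def transfer_hours(data_bytes: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Hours to move data over a link; efficiency is an assumed fraction of nominal bandwidth."""
    usable_bps = link_gbps * 1e9 * efficiency
    return data_bytes * 8 / usable_bps / 3600


def tcp_window_limit_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound for a single TCP stream: window size divided by round-trip time."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1e6


if __name__ == "__main__":
    print(f"10 TB over 1 Gbps: {transfer_hours(10e12, 1.0):.1f} h")
    print(f"64 KiB window, 50 ms RTT: {tcp_window_limit_mbps(65_535, 50):.1f} Mbps")
```

Under these assumptions, moving 10 TB over a 1 Gbps link takes roughly 28 hours. A single stream limited to a 65,535-byte window (the maximum without TCP window scaling) over a 50 ms round trip tops out near 10.5 Mbps. This is why bulk transfers between distant regions depend on window scaling and parallel streams, not just on provisioned bandwidth.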

Network Architecture for Inter-Cloud Communication

The network architecture for inter-cloud communication needs to be carefully designed to ensure secure, reliable, and high-performing connectivity between different cloud providers. A typical architecture involves a combination of various components, the specific choice of which depends on the requirements of the application and the constraints of the environment.

A simplified illustration of a multi-cloud network architecture is as follows:

Diagram Description: The diagram illustrates a multi-cloud network architecture. It shows two cloud providers, Cloud Provider A and Cloud Provider B, connected through a central network. Cloud Provider A contains a Virtual Private Cloud (VPC) and an application server. Cloud Provider B also contains a VPC and an application server. Each cloud provider has its own set of internal subnets and virtual machines.

Connectivity between the VPCs is established via a VPN connection, or through a dedicated connection, such as Direct Connect. Firewalls are implemented at the edge of each VPC to control inbound and outbound traffic. A central monitoring and management platform provides visibility into network performance and security across both cloud providers.

Diagram elements and their functions:

- Cloud Providers: These represent the different cloud environments (e.g., AWS, Azure, Google Cloud).

- VPCs/Virtual Networks: Isolated network environments within each cloud provider.

- Application Servers: The servers hosting the application workloads.

- VPN/Direct Connect: The secure and dedicated network connections between the cloud providers.

- Firewalls: Security devices that control network traffic and enforce security policies.

- Monitoring and Management Platform: A centralized system for monitoring network performance, security, and overall health.

This architecture allows for:

- Secure communication between workloads in different cloud environments.

- Centralized management and monitoring of network resources.

- Scalability and flexibility to adapt to changing application needs.

Monitoring and Observability

Migrating to a multi-cloud environment significantly increases the complexity of monitoring and observability. The distributed nature of applications and infrastructure across different cloud providers introduces challenges in gaining a unified view of system health, performance, and security. Effective monitoring is crucial for proactive issue detection, performance optimization, and ensuring compliance across all cloud platforms. Without robust monitoring capabilities, organizations risk increased downtime, performance bottlenecks, and difficulty in identifying and resolving issues efficiently.

Challenges in Monitoring Applications and Infrastructure Across Different Cloud Platforms

Monitoring applications and infrastructure across disparate cloud environments presents several hurdles. Each cloud provider offers its own set of monitoring tools, data formats, and APIs, leading to fragmented data and a lack of consistent visibility.

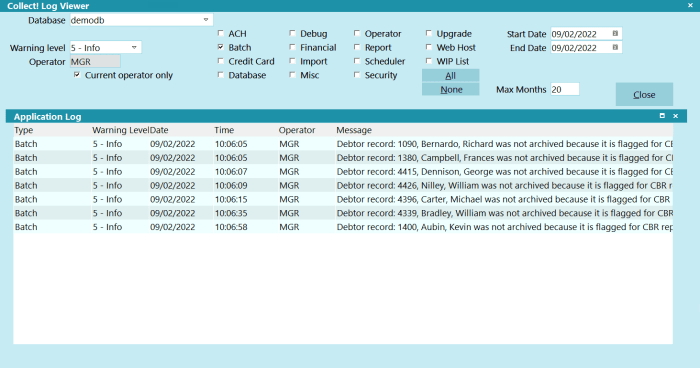

- Data Siloing: Data from each cloud provider resides in isolated monitoring systems, making it difficult to correlate events and identify root causes across the entire multi-cloud landscape. This lack of correlation hinders effective troubleshooting and performance analysis.

- Inconsistent Metrics and Logging: Different cloud providers may use varying naming conventions, units of measurement, and data formats for similar metrics, making it challenging to compare performance and identify trends. This inconsistency can lead to inaccurate analysis and misinformed decisions.

- Alerting and Notification Complexity: Configuring and managing alerts across multiple cloud platforms requires integrating various alerting systems, which can be complex and time-consuming. This complexity can lead to delayed responses to critical issues and increased operational overhead.

- Security Monitoring Fragmentation: Security monitoring becomes more complex as security logs and events are dispersed across different platforms. This fragmentation can make it difficult to detect and respond to security threats effectively, increasing the risk of breaches.

- Cost Management: Monitoring costs can escalate rapidly as organizations utilize various monitoring tools from different providers. Managing these costs requires careful planning and optimization strategies to avoid unnecessary expenses.
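Inconsistent metrics are often resolved by mapping each provider's native metric into a common schema before analysis. For CPU, Amazon CloudWatch (`CPUUtilization`) and Azure Monitor (`Percentage CPU`) report a percentage. Google Cloud's `compute.googleapis.com/instance/cpu/utilization` reports a fraction between 0 and 1. The mapping table and output schema below are hypothetical and cover only this one metric.

```python
# Hypothetical mapping from (provider, native metric) to a common name and a
# multiplier that converts the native value to percent.
METRIC_MAP = {
    ("aws", "CPUUtilization"): ("cpu_percent", 1.0),       # CloudWatch: percent
    ("azure", "Percentage CPU"): ("cpu_percent", 1.0),     # Azure Monitor: percent
    ("gcp", "compute.googleapis.com/instance/cpu/utilization"): ("cpu_percent", 100.0),  # fraction 0-1
}


def normalize_sample(provider: str, metric: str, resource: str, value: float) -> dict:
    """Translate one provider-specific sample into a common schema."""
    try:
        name, scale = METRIC_MAP[(provider, metric)]
    except KeyError:
        raise ValueError(f"no mapping for {provider}/{metric}") from None
    return {"provider": provider, "resource": resource, "metric": name, "value": value * scale}
```

Without the scaling step, a GCP reading of 0.42 would sit next to an AWS reading of 42.0 on the same dashboard. The GCP instance would then appear almost idle when it is in fact equally busy.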

Tools for Centralized Logging and Monitoring in a Multi-Cloud Setup

Centralized logging and monitoring tools are essential for addressing the challenges of observability in a multi-cloud environment. These tools aggregate data from various sources, provide a unified view of system health, and enable proactive issue detection and resolution. Several tools offer comprehensive features for monitoring applications, infrastructure, and security across different cloud platforms.

- Centralized Logging: Tools collect and aggregate logs from various sources, including applications, servers, and network devices, into a central repository. This allows for easier searching, analysis, and correlation of events.

- Performance Monitoring: Tools track key performance indicators (KPIs) such as CPU usage, memory consumption, network latency, and application response times to identify performance bottlenecks and optimize resource allocation.

- Alerting and Notification: Tools provide alerting capabilities based on predefined thresholds and anomaly detection algorithms, enabling proactive notification of critical issues.

- Security Monitoring: Tools integrate with security information and event management (SIEM) systems to collect and analyze security logs, identify threats, and provide security incident response capabilities.

- Cost Monitoring: Tools track cloud spending across different platforms, providing insights into resource utilization and cost optimization opportunities.
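Alerting rules are easier to keep consistent when a single rule is evaluated against data from every provider after normalization. The sketch below assumes samples already share a common schema with hypothetical `provider`, `resource`, and `value` fields. It fires only when a value stays above a threshold for several consecutive samples, a common way to suppress alerts on momentary spikes.

```python
from collections import defaultdict
from typing import Iterable


def sustained_breaches(samples: Iterable[dict], threshold: float,
                       consecutive: int) -> set[tuple[str, str]]:
    """Return (provider, resource) pairs whose value exceeded `threshold` for at
    least `consecutive` samples in a row. Samples must be in time order."""
    streak: dict[tuple[str, str], int] = defaultdict(int)
    firing: set[tuple[str, str]] = set()
    for s in samples:
        key = (s["provider"], s["resource"])
        # Extend the streak on a breach; any sample at or below threshold resets it.
        streak[key] = streak[key] + 1 if s["value"] > threshold else 0
        if streak[key] >= consecutive:
            firing.add(key)
    return firing
```

A dedicated tool expresses the same idea declaratively; Prometheus alerting rules, for example, use a `for` duration. The point of the sketch is that the threshold and duration are defined once rather than separately in each provider's alerting system.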

Comparison of Monitoring and Observability Tools

The following table compares several popular monitoring and observability tools, highlighting their key features and capabilities in a multi-cloud context. The features compared include data collection, analysis and visualization, alerting, integration capabilities, and cost considerations.

| Tool | Data Collection | Analysis and Visualization | Alerting | Integration Capabilities | Cost Considerations |
|---|---|---|---|---|---|
| Datadog | Agent-based and API-driven data collection from various sources across different cloud providers. | Comprehensive dashboards, customizable visualizations, and advanced analytics capabilities for performance monitoring and log analysis. | Flexible alerting rules, anomaly detection, and automated incident management workflows. | Extensive integrations with popular cloud services, applications, and DevOps tools. | Subscription-based pricing with usage-based components; offers a free trial and various pricing tiers. |
| New Relic | Agent-based and API-driven data collection across various cloud platforms, including AWS, Azure, and GCP. | Powerful dashboards, real-time visualizations, and advanced analytics for performance monitoring, application performance monitoring (APM), and log management. | Customizable alerts, anomaly detection, and automated incident response features. | Integrates with various cloud services, applications, and DevOps tools. | Subscription-based pricing with usage-based components; offers a free tier and various pricing plans. |
| Prometheus and Grafana | Prometheus scrapes metrics from configured targets and is primarily focused on infrastructure and application monitoring; Grafana visualizes data from Prometheus and other data sources. | Grafana provides highly customizable dashboards and visualizations for real-time monitoring and analysis. | Alerting rules are evaluated by Prometheus and routed to notification channels by Alertmanager; Grafana also offers its own alerting with many notification integrations. | Broad community support and integrations with numerous data sources and applications. | Open source; costs come from hosting and operating the stack, or from managed offerings such as Amazon Managed Service for Prometheus or Grafana Cloud. |
| Splunk | Agent-based and API-driven data collection, including logs, metrics, and events, from diverse sources. | Powerful search capabilities, advanced analytics, and customizable dashboards for log analysis, security monitoring, and operational intelligence. | Flexible alerting rules, anomaly detection, and automated incident response workflows. | Extensive integrations with various cloud services, security tools, and applications. | Subscription-based pricing with usage-based components; offers a free trial and various pricing tiers. |

Governance and Policy Enforcement

Establishing consistent governance and enforcing policies across a multi-cloud environment presents significant challenges. The distributed nature of multi-cloud deployments, coupled with the varying policy frameworks of different cloud providers, creates a complex landscape for maintaining control and ensuring compliance. The lack of a unified management plane often leads to inconsistencies, security vulnerabilities, and increased operational overhead. Effective governance requires a strategic approach to policy definition, implementation, and ongoing management, ensuring alignment with organizational objectives and regulatory requirements.

Difficulties in Establishing Consistent Governance Policies

The primary difficulty lies in the inherent heterogeneity of cloud environments. Each cloud provider operates with its own set of services, APIs, and management consoles, each potentially offering different levels of control and visibility. This divergence complicates the creation of a single, unified governance model. Furthermore, the rapid pace of innovation in the cloud space means that services and features are constantly evolving, necessitating continuous adaptation of governance policies.

The distributed nature of teams, often operating across multiple cloud providers, also exacerbates the problem, making it challenging to ensure consistent application and enforcement of policies.

Policy Enforcement Mechanisms

Several mechanisms can be employed to enforce policies in a multi-cloud setup, often working in concert to achieve comprehensive governance. These mechanisms are critical for maintaining security, compliance, and operational consistency across all cloud environments.

- Cloud-Native Policy Engines: Most major cloud providers offer their own policy engines, such as AWS IAM (Identity and Access Management) policies, Azure Policy, and Google Cloud's Organization Policy Service. These engines define and enforce policies within their own cloud. However, because each uses its own policy language and scope, managing policies across multiple providers with only these native tools becomes cumbersome.

- Third-Party Policy Management Tools: Several third-party tools specialize in multi-cloud policy management. These tools often provide a centralized interface for defining, deploying, and monitoring policies across different cloud providers, typically with features like policy templates, automated remediation, and compliance reporting. Examples include policy-as-code frameworks such as Open Policy Agent (OPA) and HashiCorp Sentinel, which allow policies to be version-controlled and deployed automatically.

- Infrastructure-as-Code (IaC) with Policy Integration: IaC tools such as Terraform, Ansible, and CloudFormation (the last of which is AWS-specific) can define and deploy infrastructure resources, including security configurations and network settings. Many IaC tools now integrate with policy engines, so policies can be checked as part of the deployment process. This ensures that infrastructure is configured in compliance with defined policies from the outset.

- Centralized Security Information and Event Management (SIEM) Systems: SIEM systems can be used to collect and analyze security logs from multiple cloud environments. By analyzing these logs, organizations can identify policy violations, security threats, and other anomalies. SIEM systems often include built-in policy enforcement capabilities, such as the ability to trigger alerts or automatically remediate policy violations.

- Automated Remediation: Automation plays a crucial role in policy enforcement. When a policy violation is detected, automated remediation can be triggered to correct the issue. This can involve tasks such as modifying security group rules, deleting non-compliant resources, or applying security patches. Automation significantly reduces the time and effort required to address policy violations.
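Policy-as-code and automated remediation can be combined in a small evaluation loop. The Python sketch below assumes a hypothetical, provider-neutral inventory. The `Resource` schema, the policy names, and the `owner` tag are all illustrative. In practice, such an inventory would be built from each provider's APIs or exported configuration. `_block_public` only flips a field; a real remediation would call the relevant provider API instead.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Resource:
    """Provider-neutral view of a cloud resource (hypothetical schema)."""
    provider: str   # e.g. "aws", "azure", "gcp"
    kind: str       # e.g. "object_storage", "vm"
    name: str
    encrypted: bool
    public: bool
    tags: dict[str, str] = field(default_factory=dict)


@dataclass
class Policy:
    name: str
    check: Callable[[Resource], bool]                       # True means compliant
    remediate: Optional[Callable[[Resource], None]] = None  # None means report only


def _block_public(resource: Resource) -> None:
    # Placeholder: a real remediation would call the provider's API here.
    resource.public = False


POLICIES = [
    Policy("storage-encrypted",
           lambda r: r.kind != "object_storage" or r.encrypted),
    Policy("no-public-storage",
           lambda r: r.kind != "object_storage" or not r.public,
           remediate=_block_public),
    Policy("owner-tag", lambda r: "owner" in r.tags),
]


def enforce(inventory: list[Resource]) -> list[str]:
    """Evaluate every policy against every resource, auto-fixing where possible."""
    report = []
    for resource in inventory:
        for policy in POLICIES:
            if policy.check(resource):
                continue
            target = f"{resource.provider}/{resource.name}"
            if policy.remediate is not None:
                policy.remediate(resource)
                report.append(f"REMEDIATED {policy.name}: {target}")
            else:
                report.append(f"VIOLATION {policy.name}: {target}")
    return report


inventory = [
    Resource("aws", "object_storage", "logs", encrypted=True, public=True,
             tags={"owner": "ops"}),
    Resource("gcp", "object_storage", "exports", encrypted=False, public=False),
]
for line in enforce(inventory):
    print(line)
```

Here, the public AWS bucket is fixed automatically, while the unencrypted, untagged GCP bucket is only reported. Splitting policies into "auto-fix" and "report only" reflects the common practice of reserving automatic changes for low-risk corrections.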

Best Practices for Governance and Policy Management

Implementing effective governance and policy management in a multi-cloud environment requires a strategic approach and adherence to best practices. These practices help to ensure consistency, security, and compliance across all cloud deployments.

- Establish a Centralized Policy Framework: Define a unified set of policies that apply across all cloud environments, covering areas such as security, access control, data protection, and cost management. The framework is typically captured in a written policy document that serves as the reference for implementing provider-specific controls.

- Use Policy-as-Code: Implement policies as code to enable version control, automated deployment, and consistent enforcement. This approach improves manageability and reduces the risk of human error.

- Automate Policy Enforcement: Automate the enforcement of policies using tools like IaC, cloud-native policy engines, and third-party policy management tools. Automation reduces manual effort and ensures consistent application of policies.

- Implement Continuous Monitoring and Auditing: Continuously monitor cloud environments for policy violations and conduct regular audits to ensure compliance. This involves collecting and analyzing logs, reviewing configurations, and conducting penetration tests.

- Define Clear Roles and Responsibilities: Clearly define roles and responsibilities for governance and policy management. This includes assigning ownership for policy creation, enforcement, and monitoring.

- Provide Comprehensive Training: Provide training to cloud users on governance policies and best practices. This helps to ensure that users understand their responsibilities and can operate within the defined governance framework.

- Regularly Review and Update Policies: Regularly review and update policies to reflect changes in business requirements, regulatory requirements, and cloud technologies. This ensures that policies remain relevant and effective.

- Implement a Centralized Logging and Monitoring System: Centralize logging and monitoring data from all cloud environments to gain a holistic view of security and compliance posture. A unified view facilitates faster threat detection and incident response.

- Choose Tools that Support Multi-Cloud: Select governance and policy management tools that natively support multi-cloud environments. This simplifies the management and enforcement of policies across different cloud providers.

- Adopt a “Shift-Left” Approach: Integrate policy checks early in the development lifecycle (e.g., during code reviews or in CI/CD pipelines) to prevent policy violations from occurring in the first place.
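One way to apply the shift-left practice is to have a CI pipeline inspect a Terraform plan before it is applied. The sketch below reads the JSON produced by `terraform show -json`. It fails when a created or updated resource lacks required tags, or labels on Google Cloud resources. The `owner` and `cost-center` keys are a hypothetical organizational rule, and dedicated policy-as-code tools offer much richer checks than this script.

```python
import json
import sys

# Hypothetical organizational rule: every created or updated resource that
# exposes tags (AWS, Azure) or labels (Google Cloud) must carry these keys.
REQUIRED_KEYS = {"owner", "cost-center"}


def find_violations(plan: dict) -> list[str]:
    """Scan `terraform show -json` output for resources missing required keys."""
    violations = []
    for rc in plan.get("resource_changes", []):
        change = rc.get("change", {})
        if not ({"create", "update"} & set(change.get("actions", []))):
            continue  # skip no-op, read, and delete-only changes
        after = change.get("after") or {}  # "after" is null for deletions
        if "tags" in after:
            keys = after["tags"] or {}
        elif "labels" in after:
            keys = after["labels"] or {}
        else:
            continue  # this resource type has no tagging attribute
        missing = REQUIRED_KEYS - set(keys)
        if missing:
            violations.append(f"{rc['address']}: missing {sorted(missing)}")
    return violations


def main(path: str) -> int:
    """Print violations found in a plan JSON file and return a CI exit code."""
    with open(path, encoding="utf-8") as f:
        problems = find_violations(json.load(f))
    for problem in problems:
        print(problem)
    return 1 if problems else 0


if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(main(sys.argv[1]))
```

A pipeline might run `terraform plan -out=plan.out`, then `terraform show -json plan.out > plan.json`, and finally invoke the script with `plan.json` as its only argument. A non-zero exit code then blocks the merge or deployment before any non-compliant resource exists.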

Business Continuity and Disaster Recovery

Implementing robust business continuity and disaster recovery (BCDR) strategies becomes significantly more complex in a multi-cloud environment. The distributed nature of resources across different cloud providers introduces a multitude of challenges, ranging from data replication and failover orchestration to consistent policy enforcement and cost management. Successfully navigating these complexities is critical for minimizing downtime and ensuring business resilience.

Challenges in Implementing Business Continuity and Disaster Recovery Strategies in a Multi-Cloud Environment

The shift to a multi-cloud environment presents unique hurdles to establishing effective BCDR. These challenges stem from the inherent differences in cloud provider architectures, service offerings, and geographical footprints.

- Data Replication Complexity: Replicating data across different cloud platforms can be complex because of varying storage technologies, data transfer rates, and security protocols, and ensuring data consistency and integrity during replication is crucial. Data gravity compounds the problem: large datasets tend to attract the applications and services that depend on them, and moving those datasets across clouds introduces significant latency and egress cost.

- Failover Orchestration and Automation: Automating failover processes across different cloud providers requires sophisticated orchestration tools and scripts. These tools must be able to detect failures, initiate failover procedures, and ensure that applications and data are accessible in the recovery environment. The interoperability of orchestration tools across different cloud platforms is a major concern.

- Network Connectivity and Performance: Establishing reliable and performant network connectivity between different cloud environments is essential for BCDR. This includes configuring virtual private networks (VPNs), direct connections, or other network solutions to ensure that data can be replicated and applications can be accessed in the recovery site. Network latency and bandwidth limitations can significantly impact recovery time objectives (RTOs) and recovery point objectives (RPOs).

- Compliance and Regulatory Requirements: BCDR strategies must comply with relevant industry regulations and data privacy requirements. This may mean replicating data only to specific geographic regions to preserve data sovereignty. Because each provider offers different regions, compliance certifications, and tooling, meeting these requirements consistently across clouds can be challenging.

- Cost Management and Optimization: Implementing BCDR in a multi-cloud environment can be expensive. Costs include data replication, storage, compute resources, and network bandwidth. Optimizing these costs while maintaining adequate levels of resilience requires careful planning and monitoring.

- Testing and Validation: Regularly testing and validating BCDR plans is crucial to ensure their effectiveness. Testing across multiple cloud providers can be complex and time-consuming. Automating the testing process and simulating various failure scenarios are essential for validating the resilience of the system.
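The interaction between data volume, bandwidth, RPO, and cost can be estimated with back-of-the-envelope arithmetic. The Python sketch below rests on three assumptions. First, the link achieves 80% of its nominal bandwidth. Second, replication is periodic and asynchronous. Third, egress is priced at a placeholder of $0.05/GB. None of these figures come from a specific provider, so real planning should use measured throughput and current price sheets.

```python
def transfer_hours(size_gb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Hours to move size_gb (decimal gigabytes) over a link of link_gbps gigabits/s.

    `efficiency` is an assumed fraction of nominal bandwidth actually achieved
    after protocol overhead and contention.
    """
    bits = size_gb * 8e9
    usable_bits_per_second = link_gbps * 1e9 * efficiency
    return bits / usable_bits_per_second / 3600


def replication_keeps_up(change_gb_per_hour: float, link_gbps: float,
                         efficiency: float = 0.8) -> bool:
    """True if one hour's worth of changes can be shipped within that hour."""
    return transfer_hours(change_gb_per_hour, link_gbps, efficiency) <= 1.0


def worst_case_loss_hours(interval_hours: float, change_gb_per_hour: float,
                          link_gbps: float, efficiency: float = 0.8) -> float:
    """Approximate worst-case data loss for periodic asynchronous replication.

    A change made just after a sync waits one full interval, then needs the time
    to ship that interval's batch. Only meaningful when replication keeps up;
    queuing, compression, and retries are ignored.
    """
    batch_gb = change_gb_per_hour * interval_hours
    return interval_hours + transfer_hours(batch_gb, link_gbps, efficiency)


def egress_cost_usd(size_gb: float, usd_per_gb: float) -> float:
    """Transfer cost at a flat per-GB price (real price sheets are tiered)."""
    return size_gb * usd_per_gb


# Illustrative inputs: 2 TB initial copy, 300 GB/hour of changes, 1 Gbps link,
# 15-minute replication interval, 1-hour RPO, placeholder egress price.
print(f"initial seed: {transfer_hours(2000, 1.0):.1f} h")  # 5.6 h
print("keeps up:", replication_keeps_up(300, 1.0))           # True
loss = worst_case_loss_hours(0.25, 300, 1.0)
print(f"worst-case loss: {loss:.2f} h, meets 1 h RPO: {loss <= 1.0}")
print(f"egress for seed: ${egress_cost_usd(2000, 0.05):.2f}")
```

With these inputs, a 2 TB initial copy takes about 5.6 hours, and 300 GB/hour of changes fits within the link. At 400 GB/hour, the same link would fall steadily behind. That would make any RPO unattainable without more bandwidth or a smaller replicated data set.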

Steps Involved in Creating a Disaster Recovery Plan Across Multiple Cloud Providers

Developing a comprehensive disaster recovery plan for a multi-cloud environment requires a structured approach. The plan should address the specific needs of the organization and consider the unique characteristics of each cloud provider.

- Assess Business Requirements and Risk: Begin by assessing the business’s critical applications, data, and infrastructure. Identify the RTOs and RPOs for each application. Perform a risk assessment to identify potential threats and vulnerabilities. Consider the impact of a disaster on different business functions.

- Choose Recovery Strategies: Select appropriate recovery strategies based on the business requirements and risk assessment. Options range from active-active deployments to active-passive configurations such as warm or cold standby. Weigh the trade-offs between cost, RTO, and RPO for each strategy.

- Design the Recovery Architecture: Design the recovery architecture, including the selection of cloud providers, data replication methods, and failover mechanisms. Choose technologies that are compatible with all cloud providers and that meet the performance and security requirements. Consider using infrastructure-as-code (IaC) to automate the deployment of resources in the recovery environment.

- Implement Data Replication and Synchronization: Implement data replication and synchronization mechanisms to ensure that data is consistent and available in the recovery environment. Select appropriate data replication tools and techniques based on the type of data and the RPO requirements. Test the data replication process regularly.

- Automate Failover and Failback Procedures: Automate failover and failback procedures to minimize downtime. Develop scripts or use orchestration tools to automatically detect failures and initiate failover. Test the failover and failback procedures regularly to ensure that they function as expected.

- Define Monitoring and Alerting: Establish monitoring and alerting mechanisms to detect potential failures and trigger recovery procedures. Monitor key performance indicators (KPIs) such as latency, CPU utilization, and network bandwidth. Configure alerts to notify relevant personnel of any issues.

- Develop and Document the Plan: Document the entire disaster recovery plan, including the business requirements, recovery strategies, architecture, procedures, and contact information. Ensure that the plan is easily accessible and understood by all relevant personnel.

- Test and Validate the Plan: Regularly test and validate the disaster recovery plan through simulations and exercises. Document the results of the tests and make necessary adjustments to the plan. Conduct annual reviews to ensure the plan remains relevant and effective.
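Automated failover usually comes down to a health-check loop that tolerates transient errors before acting. The Python sketch below shows that pattern. It fails over only after a run of consecutive failures and only once the recovery site is confirmed healthy. The `check` and `switch_traffic` hooks and the endpoint names are hypothetical. In practice, they might probe a health endpoint and update DNS records or a global load balancer.

```python
from typing import Callable


class FailoverController:
    """Fail over from a primary to a secondary cloud after repeated failed checks."""

    def __init__(self, primary: str, secondary: str,
                 check: Callable[[str], bool],
                 switch_traffic: Callable[[str], None],
                 failure_threshold: int = 3) -> None:
        self.primary = primary
        self.secondary = secondary
        self.check = check                    # hypothetical health probe
        self.switch_traffic = switch_traffic  # hypothetical DNS/LB update
        self.failure_threshold = failure_threshold
        self.active = primary
        self._failures = 0

    def tick(self) -> str:
        """Run one health-check cycle; return the endpoint currently serving traffic."""
        if self.active != self.primary:
            # Failback is left to a separate, deliberately tested procedure.
            return self.active
        if self.check(self.primary):
            self._failures = 0  # any success resets the consecutive-failure count
            return self.active
        self._failures += 1
        # Require several consecutive failures to avoid flapping on transient
        # errors, and only switch if the recovery site itself is healthy.
        if self._failures >= self.failure_threshold and self.check(self.secondary):
            self.switch_traffic(self.secondary)
            self.active = self.secondary
        return self.active


# Simulated outage of the primary (endpoint names are illustrative).
healthy = {"aws-us-east-1": False, "azure-eastus": True}
switched: list[str] = []
ctl = FailoverController("aws-us-east-1", "azure-eastus",
                         check=lambda ep: healthy[ep],
                         switch_traffic=switched.append)
print([ctl.tick() for _ in range(4)])
# → ['aws-us-east-1', 'aws-us-east-1', 'azure-eastus', 'azure-eastus']
```

Failback is deliberately kept out of `tick`. Returning traffic to the primary usually warrants its own tested procedure, because data written in the recovery environment must first be synchronized back.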