Navigating the serverless landscape can be daunting, and the interview process often reflects this complexity. Knowing the common serverless interview questions is crucial for anyone seeking to demonstrate proficiency in this rapidly evolving field. This analysis dissects the core areas assessed during serverless interviews, offering insights into the types of questions asked and the expected responses. We’ll explore the foundational concepts, delve into practical considerations, and equip you with the knowledge to confidently address these inquiries.

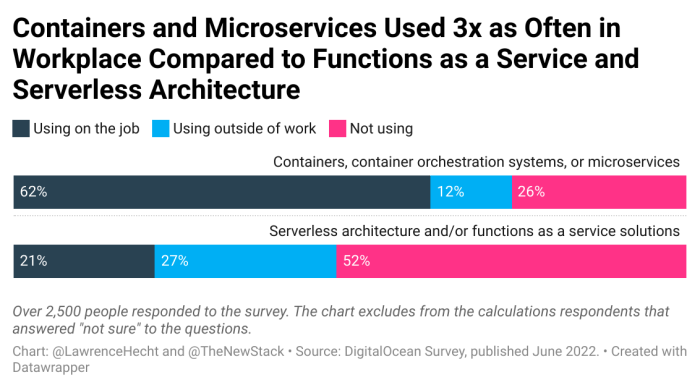

The serverless paradigm, with its promise of scalability, cost-effectiveness, and reduced operational overhead, has revolutionized software development. However, this shift introduces new challenges and requires a different mindset. Serverless interviews evaluate a candidate’s grasp of these nuances, testing their ability to design, implement, debug, and optimize serverless applications. This examination will cover key aspects such as Function-as-a-Service (FaaS), event-driven architectures, security considerations, and cost optimization strategies, providing a comprehensive overview of the interview landscape.

Introduction to Serverless Computing

Serverless computing represents a paradigm shift in cloud computing, abstracting away the underlying infrastructure management from developers. This allows them to focus solely on writing and deploying code without the burden of provisioning, scaling, and maintaining servers. This approach promotes agility, efficiency, and cost optimization in application development and deployment.

Core Principles of Serverless Computing

Serverless computing is built upon several key principles that differentiate it from traditional server-based architectures. Understanding these principles is crucial for grasping the advantages and limitations of this technology.

- Event-Driven Architecture: Serverless applications are primarily triggered by events. These events can originate from various sources, such as HTTP requests, database updates, scheduled tasks, or messages from message queues. This event-driven nature allows for highly responsive and scalable applications. For instance, a function can be triggered by a new file upload to an object storage service, automatically processing the file.

- Function-as-a-Service (FaaS): FaaS is a core component of serverless. It enables developers to deploy individual functions, which are small units of code, to the cloud. These functions execute in response to events and are typically stateless. This statelessness simplifies scaling and reduces the complexity of managing application state.

- Automatic Scaling: Serverless platforms automatically scale the resources allocated to functions based on demand. This eliminates the need for manual scaling and ensures that applications can handle varying workloads without performance degradation. The platform dynamically allocates compute resources as needed, and then releases them when the demand decreases.

- Pay-per-Use Pricing: Serverless providers typically offer a pay-per-use pricing model. Developers are only charged for the actual compute time and resources consumed by their functions. This can significantly reduce costs, especially for applications with intermittent or unpredictable workloads.
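To make the pay-per-use principle concrete, here is a minimal sketch of how a monthly bill might be estimated from usage. The function name and the rates passed in are placeholders for illustration, not any provider's actual prices; real pricing models also include free tiers and rounding rules that are ignored here.

```python
def estimate_monthly_cost(invocations, avg_duration_ms, memory_mb,
                          price_per_gb_second, price_per_million_requests):
    """Estimate a monthly FaaS bill from usage; all rates are caller-supplied."""
    # Compute is commonly billed in GB-seconds: duration multiplied by memory.
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * price_per_gb_second
    request_cost = (invocations / 1_000_000) * price_per_million_requests
    return round(compute_cost + request_cost, 2)


# Placeholder rates for illustration only; check the provider's price list.
cost = estimate_monthly_cost(
    invocations=2_000_000,
    avg_duration_ms=500,
    memory_mb=512,
    price_per_gb_second=0.00002,
    price_per_million_requests=0.25,
)
print(cost)
```

With zero invocations the estimate is zero, which is the property that makes this model attractive for intermittent workloads.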

Benefits of Adopting a Serverless Architecture

Serverless architecture offers a range of benefits that contribute to its growing popularity. These benefits often translate into tangible advantages for businesses and developers alike.

- Scalability: Serverless platforms automatically scale applications based on demand. This eliminates the need for manual scaling and ensures that applications can handle traffic spikes without performance degradation. For example, a web application using serverless functions can seamlessly handle a sudden surge in user requests during a promotional campaign.

- Cost-Effectiveness: The pay-per-use pricing model of serverless computing can lead to significant cost savings, especially for applications with unpredictable workloads. Developers only pay for the actual compute time and resources consumed, reducing the overhead associated with idle server capacity. A website that is accessed only during specific hours, for example, incurs compute charges only for those hours.

- Faster Development and Deployment: Serverless platforms simplify the development and deployment process. Developers can focus on writing code without worrying about infrastructure management, resulting in faster development cycles and quicker time-to-market. Serverless platforms abstract away the complexities of server management, such as provisioning, patching, and monitoring.

- Improved Operational Efficiency: Serverless platforms automate many operational tasks, such as scaling, patching, and monitoring. Because the platform manages the underlying infrastructure, development teams carry less operational burden and can focus on building and improving application logic.

Differences Between Serverless and Traditional Server-Based Architectures

The transition from traditional server-based architectures to serverless represents a fundamental shift in how applications are built, deployed, and managed. Several key differences distinguish these two approaches.

- Infrastructure Management: In traditional architectures, developers are responsible for managing the underlying infrastructure, including provisioning, configuring, and maintaining servers. In serverless, the cloud provider handles infrastructure management, allowing developers to focus solely on code.

- Scaling: Traditional architectures often require manual scaling or the use of auto-scaling groups. Serverless platforms automatically scale applications based on demand, eliminating the need for manual intervention.

- Cost Model: Traditional architectures typically involve paying for server capacity, regardless of usage. Serverless platforms offer a pay-per-use pricing model, where developers are only charged for the actual compute time and resources consumed.

- Deployment Model: Traditional architectures typically involve deploying entire applications to servers. Serverless architectures allow developers to deploy individual functions, which are triggered by events.

- Resource Utilization: Traditional servers may experience idle time, leading to wasted resources and increased costs. Serverless functions are only executed when triggered, optimizing resource utilization.

Core Serverless Concepts

Serverless computing, at its core, revolves around a set of fundamental concepts that facilitate the development and deployment of applications without the need for managing servers. Understanding these components and their interactions is crucial for grasping the benefits and limitations of this architectural approach. This section delves into the key serverless building blocks and their operational principles.

Functions as a Service (FaaS)

FaaS represents the foundational element of serverless architectures. It allows developers to execute individual functions in response to events without provisioning or managing the underlying infrastructure.

- Definition and Functionality: FaaS platforms enable the execution of code in the form of functions. These functions are typically triggered by events, such as HTTP requests, database updates, or scheduled timers. The platform manages the execution environment, scaling resources dynamically based on demand, and handles tasks like server provisioning, operating system management, and scaling. The developer focuses solely on writing and deploying the function code.

- Key Characteristics: FaaS functions are generally characterized by their stateless nature, short execution times, and event-driven triggers. They are designed to be lightweight and quickly deployable, allowing for rapid development cycles. Furthermore, FaaS solutions often offer automatic scaling, meaning the platform adjusts the number of function instances based on the incoming load.

- Example: Consider an image resizing service. A developer could write a FaaS function that triggers whenever a new image is uploaded to a cloud storage service. The function would resize the image and store the resized version, all without the developer managing any servers.
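The image resizing example above could be sketched roughly as follows. This assumes an AWS-style S3 notification event (a `Records` list with `s3.bucket.name` and `s3.object.key`); the `resize` callable, the `resized/300/` key layout, and the fixed width are hypothetical and stand in for the real image work.

```python
from urllib.parse import unquote_plus


def resized_key(key, width):
    """Build a destination key for the resized copy (hypothetical layout)."""
    return f"resized/{width}/{key}"


def handler(event, context, resize=None):
    """Plan (and optionally perform) one resize per uploaded object."""
    results = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        target = resized_key(key, 300)
        if resize is not None:
            # Hypothetical callable that downloads, resizes, and uploads.
            resize(bucket, key, target)
        results.append({"bucket": bucket, "source": key, "target": target})
    return results
```

Passing the resize step in as a parameter keeps the handler testable without cloud access; in a deployed function it would typically be bound to a storage client and an image library.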

Backend as a Service (BaaS)

BaaS provides pre-built backend services that serverless applications can leverage. These services handle common functionalities, allowing developers to focus on front-end development and business logic.

- Definition and Purpose: BaaS platforms offer a range of services, including databases, authentication, storage, and push notifications, accessible via APIs. This approach eliminates the need for developers to build and maintain these backend components from scratch. BaaS solutions abstract away the complexities of managing infrastructure, allowing developers to concentrate on the user interface and core application features.

- Common BaaS Services: Typical BaaS offerings include database services (e.g., NoSQL databases), user authentication and authorization systems, file storage solutions, and notification services. They often provide SDKs and APIs that simplify integration with front-end applications and FaaS functions.

- Example: An e-commerce application could utilize a BaaS platform for user authentication, storing product data in a database, and handling payment processing. The developers can build the front-end user interface and the application’s business logic, relying on the BaaS platform for backend services.

Event-Driven Architectures

Event-driven architectures are central to serverless applications. They enable loosely coupled components to communicate and react to events in real time.

- Definition and Principles: In an event-driven architecture, components communicate by producing and consuming events. Events are signals that something has happened, such as a user action or a data update. Components subscribe to specific events and react accordingly. This approach promotes scalability, flexibility, and resilience.

- Role in Serverless: Serverless applications heavily rely on event-driven architectures. FaaS functions are typically triggered by events, and BaaS services often emit events. This allows for building highly responsive and scalable applications. For example, a function could be triggered when a file is uploaded to cloud storage, automatically processing the file.

- Benefits: Event-driven architectures offer several advantages, including improved scalability, increased resilience, and greater flexibility. Because components are loosely coupled, they can be scaled independently, and failures in one component do not necessarily affect others. The system can also adapt to changing requirements more easily.

Common Serverless Platforms and Key Features

Several cloud providers offer comprehensive serverless platforms. These platforms provide the infrastructure, services, and tools needed to build and deploy serverless applications.

- Amazon Web Services (AWS): AWS offers a suite of serverless services, including AWS Lambda (FaaS), Amazon API Gateway (API management), Amazon DynamoDB (NoSQL database), and Amazon S3 (object storage). Key features include pay-per-use pricing, automatic scaling, and tight integration with other AWS services. AWS Lambda supports multiple programming languages, providing flexibility in code development.

- Microsoft Azure: Azure provides Azure Functions (FaaS), Azure API Management (API management), Azure Cosmos DB (NoSQL database), and Azure Blob Storage (object storage). Key features include event-driven programming models, support for various programming languages, and integration with other Azure services. Azure also offers a comprehensive set of tools for monitoring and debugging serverless applications.

- Google Cloud Platform (GCP): GCP offers Cloud Functions (FaaS), Cloud Endpoints (API management), Cloud Firestore (NoSQL database), and Cloud Storage (object storage). Key features include automatic scaling, integration with Google Cloud services, and support for multiple programming languages. GCP also supports container-based serverless deployments through Cloud Run.

- Key Features Comparison: While each platform offers similar core functionalities, they have unique strengths. For instance, AWS excels in its breadth of services and mature ecosystem. Azure provides strong integration with Microsoft technologies. GCP is known for its focus on containerization and data analytics capabilities.

Serverless Interview Question Categories

Understanding serverless computing necessitates a multifaceted approach to evaluating a candidate’s expertise. Interview questions should be categorized to assess different aspects of their knowledge, including design principles, implementation skills, and debugging capabilities. This structured approach ensures a comprehensive evaluation of their serverless proficiency.

Design Interview Questions

Design questions assess a candidate’s ability to conceptualize and architect serverless solutions. These questions probe their understanding of architectural patterns, service selection, and the tradeoffs inherent in serverless design. The following table provides examples of question types that can be used:

| Category | Question Type | Focus | Example |
|---|---|---|---|
| Architectural Design | System Design | Evaluating the ability to design serverless systems. | Design a serverless architecture for a photo-sharing application, considering aspects like image storage, user authentication, and API access. |
| Service Selection | Service Choice Rationale | Assessing the candidate’s understanding of various serverless services and their applicability. | Explain the rationale behind choosing AWS Lambda over AWS Fargate for a specific task, such as processing large datasets. |
| Scalability and Performance | Performance Optimization | Understanding of optimizing serverless applications for performance and scalability. | Describe strategies to optimize the performance of a serverless API, focusing on factors such as cold starts, function memory allocation, and caching. |
| Security Design | Security Best Practices | Understanding of secure serverless design principles. | How would you secure a serverless API against common threats like SQL injection or cross-site scripting? |

The examples in the table showcase how design questions delve into the candidate’s ability to translate requirements into a functioning serverless architecture. The questions encourage the candidate to articulate their design choices, justify their selections of services, and consider the implications of those choices on scalability, performance, and security.

Implementation Interview Questions

Implementation questions evaluate a candidate’s hands-on skills in developing serverless applications. These questions assess their ability to write code, configure services, and deploy serverless functions. The following examples provide insights into the kinds of questions that can be asked:

- Coding and Function Development: Questions focus on the ability to write code that performs specific tasks within a serverless environment. For instance, the interviewer might ask, “Write a Python function that processes a JSON payload from an API Gateway request, extracts specific data, and stores it in a DynamoDB table.” This type of question directly assesses coding proficiency and the candidate’s grasp of serverless event-driven programming.

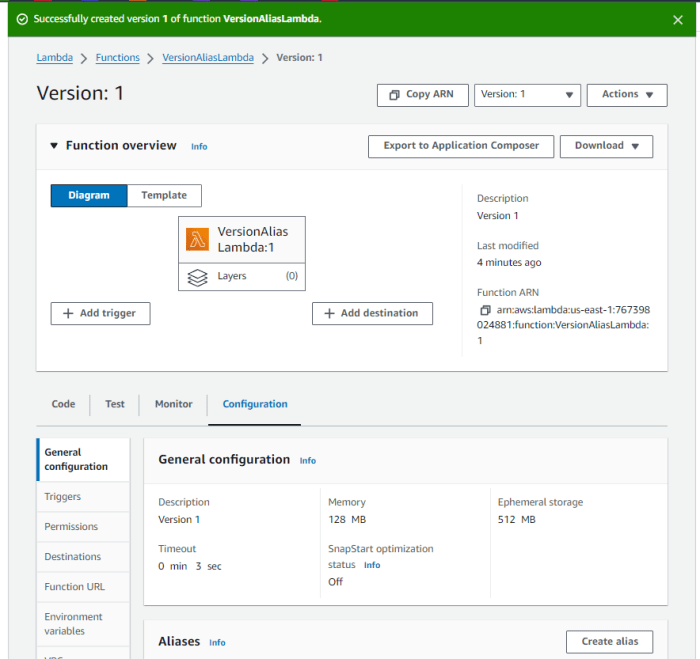

- Service Configuration: These questions gauge the candidate’s ability to configure and integrate serverless services. For example, “Configure an AWS Lambda function to trigger automatically upon new objects being added to an S3 bucket.” This evaluates the candidate’s knowledge of service interactions and the configuration options available in a serverless platform.

- Deployment and CI/CD: The interviewer could ask questions about deploying and managing serverless applications, such as, “Describe your preferred CI/CD pipeline for deploying serverless functions, including version control, automated testing, and infrastructure as code.” This explores the candidate’s ability to automate the deployment process and manage the lifecycle of serverless applications.

Implementation questions are designed to expose a candidate’s ability to translate theoretical knowledge into practical, working solutions. These questions are crucial for assessing a candidate’s hands-on capabilities and ensuring they can contribute effectively to serverless development projects.
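As an illustration of the coding question quoted above, one possible answer is sketched below. It assumes an API Gateway proxy-style event whose `body` is a JSON string, and a table object exposing `put_item(Item=...)` as the boto3 DynamoDB Table resource does. The field names `user_id` and `email` and the `FakeTable` stand-in are hypothetical.

```python
import json


def make_handler(table):
    """Return a Lambda-style handler that stores validated payloads in `table`.

    `table` is any object with put_item(Item=...); it is injected so the
    logic can be exercised without AWS credentials.
    """
    def handler(event, context):
        try:
            payload = json.loads(event.get("body") or "{}")
        except json.JSONDecodeError:
            return {"statusCode": 400, "body": json.dumps({"error": "invalid JSON"})}
        if not isinstance(payload, dict):
            return {"statusCode": 400, "body": json.dumps({"error": "expected an object"})}
        # "user_id" and "email" are hypothetical field names for this sketch.
        missing = [name for name in ("user_id", "email") if name not in payload]
        if missing:
            return {"statusCode": 400, "body": json.dumps({"error": "missing fields", "fields": missing})}
        # Extract only the fields we intend to persist.
        item = {"user_id": str(payload["user_id"]), "email": payload["email"]}
        table.put_item(Item=item)
        return {"statusCode": 201, "body": json.dumps(item)}
    return handler


class FakeTable:
    """In-memory stand-in for a DynamoDB table, used for local testing."""
    def __init__(self):
        self.items = []

    def put_item(self, Item):
        self.items.append(Item)


table = FakeTable()
handler = make_handler(table)
response = handler({"body": json.dumps({"user_id": 7, "email": "a@example.com"})}, None)
print(response["statusCode"], table.items)
```

An interviewer will often follow up on the choices here: validating input before writing, returning explicit status codes, and injecting the table so the function can be unit tested.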

Debugging Interview Questions

Debugging questions assess a candidate’s ability to troubleshoot and resolve issues within a serverless environment. These questions evaluate their understanding of monitoring tools, logging, and common serverless failure scenarios. Here are some examples:

- Log Analysis and Monitoring: These questions test how a candidate uses logs and monitoring tools to find the cause of a failure. For instance, “How would you troubleshoot a Lambda function that is consistently timing out?” This assesses the candidate’s ability to identify performance bottlenecks or errors from the available telemetry.

- Error Handling: These questions probe how a candidate designs for failure. An example would be, “Describe your approach to handling errors in a serverless application. How do you implement retry mechanisms or error notifications?” This tests the candidate’s understanding of building resilient and fault-tolerant systems.

- Performance Profiling: These questions focus on finding and fixing slow paths. The interviewer could ask, “How do you identify and address performance issues in a serverless application, such as cold starts or inefficient database queries?” This assesses the candidate’s ability to diagnose performance problems and optimize code.

Debugging questions aim to uncover a candidate’s practical problem-solving skills in the context of serverless applications. These questions are essential for ensuring that the candidate can effectively troubleshoot and resolve issues that arise during the development and operation of serverless systems.
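For the error handling question above, a candidate might sketch a retry helper with exponential backoff along these lines. The function name, defaults, and the injectable `sleep` parameter are assumptions made for illustration; managed platforms often provide their own retry policies, which this does not replace.

```python
import time


def call_with_retries(operation, max_attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call operation(); retry failures with exponential backoff, then re-raise."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception as exc:
            if attempt == max_attempts:
                # Out of attempts: surface the last error to the caller.
                raise
            delay = base_delay * 2 ** (attempt - 1)
            # Log enough context to reconstruct the failure sequence later.
            print(f"attempt {attempt} failed ({exc!r}); retrying in {delay:.2f}s")
            sleep(delay)
```

Re-raising after the final attempt matters: it lets the platform route the event to a dead-letter queue or error notification rather than silently dropping it.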

Function as a Service (FaaS) Deep Dive

Function as a Service (FaaS) represents a core architectural component of serverless computing. It enables developers to execute code without managing servers, scaling automatically based on demand. This paradigm shift has revolutionized software development by focusing on individual functions and their execution, promoting agility, and reducing operational overhead.

Function as a Service (FaaS) Definition and Significance

FaaS is a cloud computing execution model where the cloud provider manages the allocation of server resources. Developers deploy individual functions: small, self-contained pieces of code that perform a specific task. These functions are triggered by events, such as HTTP requests, database updates, or messages from a message queue. The FaaS provider handles the provisioning, scaling, and management of the underlying infrastructure. This allows developers to focus solely on writing code and building applications.

The significance of FaaS lies in several key areas:

- Reduced Operational Overhead: Developers no longer need to manage servers, operating systems, or scaling infrastructure. The cloud provider handles these tasks automatically.

- Automatic Scaling: FaaS functions automatically scale up or down based on the number of incoming requests, ensuring optimal resource utilization and performance.

- Pay-per-use Pricing: Users are only charged for the actual time their functions are executed, leading to cost savings compared to traditional server-based deployments.

- Increased Agility: FaaS enables rapid development and deployment cycles. Developers can quickly deploy and update individual functions without affecting the entire application.

- Event-Driven Architecture: FaaS promotes the use of event-driven architectures, where functions are triggered by events, leading to more loosely coupled and scalable systems.

FaaS Provider Examples and Features

Various cloud providers offer FaaS platforms, each with its unique features and capabilities. Here are some prominent examples:

- AWS Lambda: Amazon Web Services (AWS) Lambda is a widely adopted FaaS platform. It supports multiple programming languages, including Node.js, Python, Java, Go, and C#. AWS Lambda integrates seamlessly with other AWS services, such as Amazon S3, Amazon DynamoDB, and Amazon API Gateway. Key features include:

  - Event Sources: Supports a wide range of event sources, including HTTP requests, database updates, and message queues.

  - Concurrency Control: Allows developers to control the maximum number of concurrent function invocations.

  - Layers: Enables the sharing of common code and dependencies across multiple functions.

  - Provisioned Concurrency: Pre-provisions function instances to reduce cold start times.

- Google Cloud Functions: Google Cloud Functions is Google’s FaaS offering. It supports Node.js, Python, Go, Java, .NET, and Ruby. Google Cloud Functions integrates with Google Cloud services like Cloud Storage, Cloud Pub/Sub, and Cloud Firestore. Key features include:

  - Triggers: Supports various triggers, including HTTP requests, Cloud Storage events, and Pub/Sub messages.

  - Automatic Scaling: Automatically scales based on demand.

  - Cloud Build Integration: Integrates with Cloud Build for continuous integration and continuous deployment (CI/CD).

  - Eventarc: A unified eventing service for building event-driven architectures.

- Azure Functions: Microsoft Azure Functions is Microsoft’s FaaS platform. It supports C#, JavaScript, Python, PowerShell, Java, and TypeScript. Azure Functions integrates with other Azure services, such as Azure Blob Storage, Azure Cosmos DB, and Azure Event Hubs. Key features include:

  - Bindings: Provides input and output bindings that simplify interactions with other Azure services.

  - Durable Functions: Enables the development of stateful serverless workflows.

  - Easy Integration: Provides easy integration with Visual Studio and other development tools.

  - Consumption Plan and Premium Plan: Offers flexible pricing options based on usage.

These are just a few examples; other providers, such as IBM Cloud Functions and Cloudflare Workers, also offer FaaS platforms. The specific features and capabilities of each platform may vary, so it’s important to evaluate the options based on the specific needs of the project.

Best Practices for FaaS Function Design and Deployment

Designing and deploying FaaS functions effectively requires careful consideration of several best practices:

- Single Responsibility Principle: Each function should perform a single, well-defined task. This promotes code reusability and maintainability.

- Stateless Functions: Functions should be designed to be stateless, meaning they do not store any state between invocations. This allows for horizontal scaling. If state is required, it should be stored in an external service, such as a database or a cache.

- Idempotency: Functions should be idempotent, meaning they can be executed multiple times without changing the result beyond the initial execution. This helps to handle potential errors and retries.

- Error Handling: Implement robust error handling to gracefully handle exceptions and unexpected situations. Log errors effectively for debugging and monitoring.

- Code Organization: Organize code into logical modules and use dependency injection to improve testability and maintainability.

- Project Structure: Adopt a well-defined project structure. For example, a common structure could include directories for the function code, dependencies, and configuration files.

- Dependency Management: Utilize a package manager (e.g., npm, pip, Maven) to manage dependencies and ensure consistent deployments.

- Configuration Management: Store configuration settings separately from the code, using environment variables or configuration files. This allows for easy configuration updates without modifying the code.

- Monitoring and Logging: Implement comprehensive monitoring and logging to track function performance, identify errors, and gain insights into usage patterns. Use logging to capture detailed information about function execution, including input, output, and any errors that occur. Utilize metrics to monitor key performance indicators (KPIs), such as invocation count, execution time, and error rate.

- Security Best Practices: Secure FaaS functions by following security best practices:

  - Least Privilege: Grant functions only the necessary permissions to access resources.

  - Input Validation: Validate all inputs to prevent security vulnerabilities, such as injection attacks.

  - Secrets Management: Securely store and manage sensitive information, such as API keys and database credentials, using a secrets management service.

- Testing: Write unit tests and integration tests to ensure that functions behave as expected. Implement automated testing as part of the CI/CD pipeline.

- Versioning and Deployment: Implement a versioning strategy for functions to enable rolling back to previous versions if necessary. Use a CI/CD pipeline to automate the deployment process.

- Cold Start Optimization: Minimize cold start times by optimizing code, using appropriate memory and CPU resources, and pre-warming functions.

  - Code Optimization: Reduce the size of the function code and its dependencies.

  - Memory Allocation: Allocate sufficient memory to the function to reduce execution time.

  - Provisioned Concurrency: Use provisioned concurrency to pre-warm function instances and reduce cold start times.

By adhering to these best practices, developers can create robust, scalable, and cost-effective serverless applications using FaaS.
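The stateless and idempotency practices above can be illustrated together with a small sketch. The `idempotency_key` event field and the `InMemoryStore` class are hypothetical; in a real deployment the store would be an external database or cache, and the check-then-write would need to be atomic (for example, a conditional write), which this simplified version is not.

```python
class InMemoryStore:
    """Stand-in for an external store such as a database or cache."""
    def __init__(self):
        self._results = {}

    def get(self, key):
        return self._results.get(key)

    def put(self, key, value):
        self._results[key] = value


def make_idempotent(process, store):
    """Wrap `process` so repeated deliveries of the same event run it once."""
    def handler(event, context=None):
        key = event["idempotency_key"]  # hypothetical field name
        cached = store.get(key)
        if cached is not None:
            # Duplicate delivery: return the stored result without side effects.
            return cached
        result = process(event)
        store.put(key, result)
        return result
    return handler


charges = []

def charge(event):
    charges.append(event["amount"])
    return {"charged": event["amount"]}

handler = make_idempotent(charge, InMemoryStore())
first = handler({"idempotency_key": "order-42", "amount": 10})
second = handler({"idempotency_key": "order-42", "amount": 10})
print(first == second, len(charges))
```

The function itself holds no state between invocations; everything that must survive a retry lives in the store, which is what allows instances to be scaled out or replaced freely.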

Event-Driven Architectures and Serverless

Serverless computing aligns exceptionally well with event-driven architectures, providing a powerful combination for building scalable, resilient, and cost-effective applications. This section explores the mechanics of event-driven architectures within serverless environments, examines common event triggers and their practical applications, and contrasts different event processing models. The focus is on understanding how these elements synergize to create sophisticated, reactive systems.

How Event-Driven Architectures Function in Serverless Environments

Event-driven architectures in serverless environments rely on events (significant occurrences within a system) triggering the execution of serverless functions. This approach decouples different parts of an application, allowing them to operate independently and react to events in real time. The pattern uses a publish-subscribe (pub/sub) model, where event producers (sources that generate events) publish events to an event bus, and event consumers (serverless functions) subscribe to specific event types. This loose coupling enhances scalability and fault tolerance.

The core components include:

- Event Producers: These are the sources that generate events. They can be anything from user actions (e.g., uploading a file) to scheduled tasks (e.g., a cron job).

- Event Bus: This acts as a central hub for events. It receives events from producers and routes them to the appropriate consumers. Examples include cloud-specific services like Amazon EventBridge, Azure Event Grid, and Google Cloud Pub/Sub.

- Event Consumers (Serverless Functions): These functions are invoked automatically by the event bus and perform the actions necessary to respond to each event.

This architecture provides several benefits, including:

- Scalability: Serverless functions automatically scale based on the volume of events, ensuring that the system can handle fluctuating workloads.

- Resilience: If one function fails, it does not necessarily impact other functions. Events can be retried or routed to different consumers.

- Cost-effectiveness: You only pay for the compute time used by the functions when they are invoked, which aligns with the event-driven nature of the architecture.

- Loose Coupling: Components are independent, allowing for easier updates and maintenance without affecting the entire system.
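The producer, event bus, and consumer roles described above can be modeled in a few lines. This is an in-memory teaching sketch, not how a managed event bus is implemented; the `EventBus` class and the event type `file.uploaded` are made up for the example.

```python
class EventBus:
    """Minimal in-memory publish-subscribe hub."""
    def __init__(self):
        self._subscribers = {}

    def subscribe(self, event_type, consumer):
        self._subscribers.setdefault(event_type, []).append(consumer)

    def publish(self, event_type, payload):
        """Deliver to every subscriber; one failing consumer does not block the rest."""
        failures = []
        for consumer in self._subscribers.get(event_type, []):
            try:
                consumer(payload)
            except Exception as exc:
                failures.append((getattr(consumer, "__name__", repr(consumer)), exc))
        return failures


bus = EventBus()
seen = []

def index_file(payload):
    seen.append(("indexed", payload["name"]))

def scan_file(payload):
    raise RuntimeError("scanner unavailable")

def notify_owner(payload):
    seen.append(("notified", payload["name"]))

for consumer in (index_file, scan_file, notify_owner):
    bus.subscribe("file.uploaded", consumer)

# The producer only knows the event type, not who consumes it.
failures = bus.publish("file.uploaded", {"name": "report.pdf"})
print(seen, [name for name, _ in failures])
```

The failing consumer is recorded but the other two still run, which is the resilience property listed above in miniature.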

Common Serverless Event Triggers and Their Use Cases

Serverless event triggers come in various forms, each catering to different use cases. These triggers initiate the execution of serverless functions in response to specific events. Here are some common examples:

- HTTP Requests: Functions can be triggered by HTTP requests, allowing for the creation of APIs and web applications.

  - Use Case: Building a REST API for a mobile application, where each API endpoint corresponds to a serverless function.

- Object Storage Events: Events generated when objects are created, updated, or deleted in object storage services (e.g., Amazon S3, Azure Blob Storage, Google Cloud Storage).

  - Use Case: Automatically resizing images when they are uploaded to an object storage bucket.

- Database Changes: Events triggered by changes in a database, such as new records, updates, or deletions.

  - Use Case: Updating a search index when a product is added or modified in an e-commerce database.

- Scheduled Events (Cron Jobs): Functions triggered at scheduled intervals.

  - Use Case: Generating daily reports or cleaning up old data.

- Message Queue Events: Functions triggered by messages placed on a message queue (e.g., Amazon SQS, Azure Service Bus, Google Cloud Pub/Sub).

  - Use Case: Processing large batches of data asynchronously by placing messages on a queue.

The choice of trigger depends on the specific requirements of the application. The versatility of these triggers allows for building highly responsive and adaptable systems.
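When one function can be reached through several triggers, a common first step is to work out which trigger delivered the event. The sketch below does this for AWS Lambda-style payloads, relying on fields those events are documented to carry (`eventSource` on records, `httpMethod` on API Gateway proxy events, `source` on scheduled events). The category names it returns are invented for this example, and other providers use different event shapes.

```python
def classify_trigger(event):
    """Return a coarse trigger category for an AWS-style event payload."""
    records = event.get("Records")
    if records:
        source = records[0].get("eventSource")
        by_source = {
            "aws:s3": "object_storage",
            "aws:sqs": "message_queue",
            "aws:dynamodb": "database_change",
        }
        return by_source.get(source, "unknown")
    # API Gateway proxy events carry the HTTP method at the top level.
    if "httpMethod" in event:
        return "http"
    # Scheduled rules deliver events whose source is "aws.events".
    if event.get("source") == "aws.events":
        return "schedule"
    return "unknown"


print(classify_trigger({"Records": [{"eventSource": "aws:sqs", "body": "{}"}]}))
print(classify_trigger({"httpMethod": "GET", "path": "/items"}))
```

In practice it is usually cleaner to deploy one function per trigger, but a classifier like this is handy in shared logging or tracing code.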

Comparison of Event Processing Models in Serverless

Serverless event processing models primarily revolve around synchronous and asynchronous approaches. The choice between these models impacts the system’s performance, scalability, and fault tolerance. Here’s a comparison:

- Synchronous Event Processing:

  - The event producer waits for the event consumer (serverless function) to complete its processing before continuing.

  - Characteristics: Simpler to implement, but can lead to higher latency and potential bottlenecks if the consumer takes a long time to process the event.

  - Use Case: Processing a user registration where the system needs to immediately verify the user’s identity and create their account before allowing further interaction.

- Asynchronous Event Processing:

  - The event producer does not wait for the event consumer to complete its processing. The event is placed on a queue or sent to an event bus, and the consumer processes it independently.

  - Characteristics: Improves responsiveness and scalability, and is suitable for handling high volumes of events. Decoupling the producer from the consumer also enhances fault tolerance.

  - Use Case: Processing an order in an e-commerce application, where the order details are added to a queue, and separate functions handle tasks like inventory updates, shipping notifications, and payment processing.

The selection of the appropriate processing model depends on the specific needs of the application. For example, real-time interactions might benefit from synchronous processing, while batch processing and tasks that do not require immediate responses are better suited for asynchronous models. The advantages of asynchronous processing, particularly in serverless environments, are significant because of the focus on scalability and resilience.
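The difference between the two models can be shown with a small local simulation. Here a direct function call stands in for synchronous invocation, and Python's standard `queue` and `threading` modules stand in for a managed queue and its consumer; all function names are illustrative.

```python
import queue
import threading


def create_account(user):
    return {"user": user, "status": "created"}


def register_sync(user):
    # Synchronous: the caller blocks until the consumer returns its result.
    return create_account(user)


orders = queue.Queue()
processed = []


def worker():
    """Consumer: handles queued orders independently of the producer."""
    while True:
        order_id = orders.get()
        try:
            if order_id is None:  # sentinel value: stop the worker
                return
            processed.append(f"handled:{order_id}")
        finally:
            orders.task_done()


def submit_order(order_id):
    # Asynchronous: enqueue and return at once, without waiting for handling.
    orders.put(order_id)
    return "accepted"


consumer = threading.Thread(target=worker)
consumer.start()
ack = submit_order("order-1")
orders.join()      # demo only: wait so the outcome can be inspected
orders.put(None)
consumer.join()
print(register_sync("ana"), ack, processed)
```

Note what each caller gets back: the synchronous caller receives the finished result, while the asynchronous caller receives only an acknowledgement and must learn the outcome some other way.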

Serverless Design Patterns

Serverless design patterns are reusable solutions to common challenges in serverless application development. They provide architectural blueprints for building scalable, resilient, and cost-effective applications by leveraging the capabilities of serverless technologies. Understanding and applying these patterns is crucial for maximizing the benefits of serverless computing.

Fan-Out Pattern

The Fan-Out pattern is employed to distribute a single event to multiple downstream services or functions concurrently. This is particularly useful when processing tasks that can be executed independently and in parallel, significantly improving processing time and overall application performance.

- Description: An event trigger (e.g., an API request, a message in a queue) invokes a central function. This function then fans out the event by invoking multiple other functions or services, each responsible for a specific task related to the original event. The central function typically doesn’t wait for the completion of these downstream tasks, enabling asynchronous processing.

- Advantages:

- Improved Scalability: Parallel processing distributes the workload, allowing the system to handle a large volume of events efficiently.

- Reduced Latency: By executing tasks concurrently, the overall processing time is minimized.

- Increased Resilience: If one downstream function fails, the others can continue to process events, improving the application’s fault tolerance.

- Disadvantages:

- Complexity in Orchestration: Managing the fan-out and the potential for errors across multiple functions can increase complexity.

- Monitoring Challenges: Tracking the status of individual downstream functions and aggregating results can be difficult.

- Idempotency Considerations: Downstream functions must be designed to handle duplicate events gracefully, as retries or other mechanisms may lead to multiple invocations.

- Example: Consider an image processing application. An uploaded image triggers a function that uses the Fan-Out pattern. This central function invokes multiple functions: one to resize the image, one to generate thumbnails, and another to store metadata in a database. Each of these functions operates independently and in parallel.
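A rough sketch of the image example, assuming three hypothetical downstream tasks that run as local functions. Unlike a fire-and-forget fan-out through a topic or queue, this version waits for the results so the outcome of each branch is visible, and it isolates failures so one failing task does not stop the others.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical downstream tasks; in practice each would be a separate function.
def resize(image_key):
    return f"resized:{image_key}"

def make_thumbnail(image_key):
    return f"thumbnail:{image_key}"

def store_metadata(image_key):
    return f"metadata:{image_key}"

DOWNSTREAM_TASKS = [resize, make_thumbnail, store_metadata]

def fan_out(image_key):
    """Send one event to every downstream task in parallel."""
    results = {}
    with ThreadPoolExecutor(max_workers=len(DOWNSTREAM_TASKS)) as pool:
        futures = {task.__name__: pool.submit(task, image_key)
                   for task in DOWNSTREAM_TASKS}
        for name, future in futures.items():
            try:
                results[name] = future.result()
            except Exception as exc:  # one failure must not affect the others
                results[name] = f"failed: {exc}"
    return results
```

Calling `fan_out("cat.png")` returns one entry per downstream task, keyed by the task name.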

Circuit Breaker Pattern

The Circuit Breaker pattern is a design pattern used to prevent cascading failures in distributed systems. It monitors the health of remote services and, when a service becomes unavailable or exhibits high latency, it prevents requests from being sent to that service, protecting the application from further degradation.

- Description: The Circuit Breaker acts as a proxy between a service client and a remote service. It has three states: Closed, Open, and Half-Open.

- Closed: Requests are passed directly to the remote service. The Circuit Breaker monitors the success and failure rates of these requests.

- Open: Once failures exceed a configured threshold (for example, a number of consecutive errors or timeouts), the Circuit Breaker “opens,” and subsequent requests are immediately rejected, preventing further load on the failing service.

- Half-Open: After a pre-defined time, the Circuit Breaker enters the Half-Open state, allowing a limited number of requests to pass through. If these requests succeed, the Circuit Breaker closes, allowing normal traffic. If they fail, it reopens.

- Advantages:

- Improved Resilience: Prevents cascading failures by isolating failing services.

- Reduced Latency: Prevents requests from being blocked by unresponsive services.

- Increased Stability: Protects the application from the impact of service outages.

- Disadvantages:

- Added Complexity: Requires implementing the Circuit Breaker logic and monitoring the health of remote services.

- Potential for False Positives: Incorrectly identifying a service as unavailable can lead to unnecessary rejection of requests.

- Configuration Overhead: Requires careful configuration of failure thresholds, retry intervals, and timeout values.

- Example: In an e-commerce application, if the payment processing service becomes unavailable, the Circuit Breaker pattern can prevent the main application from being overwhelmed with requests that will fail. The Circuit Breaker would “open,” and the application would either reject new payment requests or provide a fallback mechanism (e.g., displaying a message to the user).
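The three states can be sketched as a small class. This is an illustrative sketch, not a production implementation: the class name, thresholds, and the injectable `clock` parameter are assumptions. It also keeps state in memory, which in a serverless function is local to one instance; sharing breaker state across instances would need an external store.

```python
import time

class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""

class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None
        self.state = "closed"

    def call(self, func, *args, **kwargs):
        if self.state == "open":
            if self.clock() - self.opened_at >= self.reset_timeout:
                self.state = "half_open"  # allow a trial request through
            else:
                raise CircuitOpenError("circuit is open; failing fast")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._record_failure()
            raise
        self._record_success()
        return result

    def _record_success(self):
        self.failures = 0
        self.state = "closed"

    def _record_failure(self):
        self.failures += 1
        # A failed trial request, or too many failures, opens the circuit.
        if self.state == "half_open" or self.failures >= self.failure_threshold:
            self.state = "open"
            self.opened_at = self.clock()
```

A caller would wrap each request to the payment service in `breaker.call(...)` and treat `CircuitOpenError` as the signal to use the fallback.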

API Gateway Pattern

The API Gateway pattern provides a single entry point for all client requests to a backend serverless application. It handles routing, authentication, authorization, rate limiting, and other common API management tasks.

- Description: An API Gateway sits in front of one or more backend serverless functions or services. It receives all client requests and routes them to the appropriate backend service based on the request’s path, method, or other criteria. It also handles cross-cutting concerns like security, monitoring, and transformation.

- Advantages:

- Simplified Client Interaction: Provides a single point of contact for clients, simplifying the API interaction.

- Enhanced Security: Centralizes security features like authentication, authorization, and rate limiting.

- Improved Performance: Enables caching, request transformation, and other optimizations to improve API performance.

- Decoupling of Backend Services: Allows backend services to evolve independently without affecting client applications.

- Disadvantages:

- Added Complexity: Introduces an additional component to manage and maintain.

- Single Point of Failure: If the API Gateway fails, the entire application becomes unavailable. (Mitigation strategies such as redundancy are often employed.)

- Potential Bottleneck: If the API Gateway is not properly scaled, it can become a bottleneck.

- Example: Consider a mobile application that needs to access multiple serverless functions for different functionalities (e.g., user authentication, product catalog, order processing). The API Gateway can handle all incoming requests, route them to the appropriate functions, and apply security policies. Without the API Gateway, the mobile application would need to interact with each function directly, which would be more complex and less secure.
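Conceptually, the gateway is a routing table plus cross-cutting checks applied before any backend is reached. The sketch below is a toy model of that idea; the routes, handlers, header name, and API key are all hypothetical, and a managed gateway service would provide this behaviour through configuration rather than code.

```python
VALID_API_KEYS = {"demo-key"}  # hypothetical; real keys belong in a secrets manager

# Hypothetical backend functions.
def authenticate_user(body):
    return {"token": "example-token"}

def list_products(body):
    return {"products": []}

def create_order(body):
    return {"order": "created"}

ROUTES = {
    ("POST", "/auth"): authenticate_user,
    ("GET", "/products"): list_products,
    ("POST", "/orders"): create_order,
}

def gateway(method, path, headers, body=None):
    """Single entry point: authenticate first, then route to a backend."""
    if headers.get("x-api-key") not in VALID_API_KEYS:
        return 401, {"error": "unauthorized"}
    handler = ROUTES.get((method.upper(), path))
    if handler is None:
        return 404, {"error": "not found"}
    return 200, handler(body)
```

Clients only ever call `gateway`, so backend functions can be renamed or split without changing the client-facing paths.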

Serverless Security Considerations

Serverless architectures, while offering significant advantages in terms of scalability and cost-efficiency, introduce a unique set of security challenges. The distributed nature, reliance on third-party services, and ephemeral nature of serverless functions require a proactive and comprehensive security strategy. Addressing these challenges is critical to protect serverless applications from various threats, including unauthorized access, data breaches, and denial-of-service attacks.

Security Challenges in Serverless Architectures

The inherent characteristics of serverless environments create complexities that traditional security approaches may not adequately address. Understanding these challenges is the first step towards building secure serverless applications.

- Increased Attack Surface: Serverless applications often leverage numerous third-party services and APIs. Each of these integrations expands the attack surface, as vulnerabilities in any of these components can potentially be exploited.

- Ephemeral Nature of Functions: Serverless functions are designed to be short-lived. This makes it challenging to perform traditional security tasks like long-term monitoring, forensic analysis, and incident response. The transient nature of function instances means that security logs and other diagnostic information may be lost quickly.

- Shared Responsibility Model: The security responsibility in serverless environments is shared between the cloud provider and the application developer. Understanding the division of responsibilities is crucial for ensuring proper security coverage. For example, the cloud provider is typically responsible for the security *of* the cloud (infrastructure), while the developer is responsible for the security *in* the cloud (application code and configurations).

- Configuration Complexity: Serverless applications involve complex configurations, including permissions, access control policies, and event triggers. Misconfigurations are a leading cause of security breaches in serverless environments.

- Dependency Management: Serverless functions rely on external libraries and dependencies. Managing these dependencies and keeping them updated with the latest security patches is a continuous process that requires careful attention.

- Lack of Visibility: Gaining comprehensive visibility into the execution of serverless functions can be challenging. Without proper logging, monitoring, and auditing, it can be difficult to detect and respond to security incidents promptly.

Recommendations for Securing Serverless Applications

Implementing robust security measures is essential to mitigate the risks associated with serverless deployments. A layered approach, incorporating authentication, authorization, data encryption, and other security best practices, is recommended.

- Authentication: Verify the identity of users and services accessing serverless resources. This can be achieved through various methods, including API keys, tokens, and federated identity providers (e.g., Amazon Cognito, Google Cloud Identity Platform).

- Example: Implement API key authentication for API endpoints to restrict access to authorized clients.

- Authorization: Control access to resources based on the authenticated identity and defined permissions. This involves defining roles and policies that grant specific privileges. Use the principle of least privilege, granting only the necessary permissions to each function and user.

- Example: Use AWS IAM policies to restrict a Lambda function’s access to only the necessary S3 buckets and other resources.

- Data Encryption: Protect sensitive data at rest and in transit. Encrypt data stored in databases, object storage, and other data repositories. Use HTTPS for all communication between clients and serverless applications.

- Example: Enable server-side encryption for S3 buckets to encrypt data at rest using encryption keys managed by AWS KMS.

- Input Validation: Validate all user inputs to prevent injection attacks, such as SQL injection and cross-site scripting (XSS). Sanitize inputs to remove or neutralize malicious code.

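- Example: Validate request payloads against an expected schema inside the function, and use a web application firewall (WAF) as an additional layer to filter malicious traffic and protect against common web vulnerabilities.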

- Code Security: Secure the code of serverless functions. Perform regular code reviews, use static analysis tools to identify vulnerabilities, and apply security patches promptly.

- Example: Regularly scan the code for known vulnerabilities using tools like SonarQube or Snyk.

- Logging and Monitoring: Implement comprehensive logging and monitoring to track function invocations, errors, and security events. Use centralized logging and monitoring tools to collect and analyze logs from all serverless components.

- Example: Use Amazon CloudWatch Logs to collect logs from Lambda functions and create custom metrics and alarms to detect suspicious activity.

- Secrets Management: Securely store and manage sensitive information, such as API keys, database credentials, and other secrets. Avoid hardcoding secrets in function code. Use a secrets management service (e.g., AWS Secrets Manager, Google Cloud Secret Manager) to securely store and retrieve secrets.

- Example: Store database credentials in AWS Secrets Manager and retrieve them dynamically within Lambda functions.

- Network Security: Configure network security measures, such as virtual private clouds (VPCs) and security groups, to restrict network access to serverless functions and other resources.

- Example: Deploy Lambda functions within a VPC to control their network access and isolate them from the public internet.

- Regular Security Audits: Conduct regular security audits and penetration testing to identify and address vulnerabilities in serverless applications.

- Example: Perform regular penetration tests to simulate real-world attacks and identify security weaknesses.
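As one concrete instance of these recommendations, input validation can be done at the top of the function before any business logic runs. The sketch below assumes an HTTP-style event with a JSON string in `body` and a hypothetical `username` field; the allowed pattern is an assumption chosen for illustration.

```python
import json
import re

# Allow-list pattern: letters, digits, underscore, 3 to 32 characters.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,32}")

def _response(status, payload):
    return {"statusCode": status, "body": json.dumps(payload)}

def handler(event, context=None):
    # Reject anything that is not well-formed JSON.
    try:
        body = json.loads(event.get("body") or "")
    except (json.JSONDecodeError, TypeError):
        return _response(400, {"error": "body must be valid JSON"})
    if not isinstance(body, dict):
        return _response(400, {"error": "body must be a JSON object"})

    # Validate against the allow-list instead of trying to strip bad input.
    username = body.get("username")
    if not isinstance(username, str) or not USERNAME_PATTERN.fullmatch(username):
        return _response(400, {"error": "invalid username"})

    return _response(200, {"username": username})
```

Validating against an allow-list is generally easier to reason about than sanitizing, because anything outside the expected shape is rejected outright.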

Handling Security Vulnerabilities in a Serverless Context

Addressing security vulnerabilities requires a proactive and responsive approach, including vulnerability detection, remediation, and incident response.

- Vulnerability Detection: Employ various methods to identify vulnerabilities in serverless applications, including static code analysis, dynamic testing, and vulnerability scanning. Use automated tools to scan for known vulnerabilities in dependencies and libraries.

- Example: Integrate security scanning tools into the CI/CD pipeline to automatically detect vulnerabilities during the development process.

- Remediation: Implement appropriate measures to fix identified vulnerabilities. This may involve patching dependencies, updating code, and reconfiguring security settings.

- Example: Immediately update vulnerable dependencies to the latest versions that include security patches.

- Incident Response: Develop and implement an incident response plan to address security incidents promptly and effectively. This plan should include procedures for detecting, containing, eradicating, and recovering from security breaches.

- Example: Establish a process for rapidly identifying and isolating compromised resources, such as disabling affected Lambda functions or revoking API keys.

- Security Updates and Patching: Establish a system for quickly applying security updates and patches to dependencies and the underlying infrastructure. Automate the patching process where possible.

- Example: Automate the process of updating dependencies using tools like Dependabot.

- Immutable Infrastructure: Utilize immutable infrastructure principles to simplify patching and vulnerability remediation. This involves rebuilding the infrastructure (e.g., functions) with the updated code and configurations rather than patching existing instances. This approach reduces the risk of configuration drift and ensures consistency.

- Example: Deploy new versions of Lambda functions with each code update, ensuring that all instances are running the latest secure code.

Serverless Monitoring and Debugging

Serverless architectures, while offering significant advantages in terms of scalability and cost-efficiency, introduce unique challenges in monitoring and debugging. The ephemeral nature of serverless functions, coupled with distributed execution across multiple services, necessitates robust monitoring and debugging strategies. Effective monitoring ensures application health, performance, and resource utilization are optimized, while efficient debugging tools enable rapid identification and resolution of issues.

This section delves into the critical aspects of serverless monitoring and debugging, providing practical methods and a structured troubleshooting procedure.

Importance of Monitoring Serverless Applications

Monitoring is crucial for maintaining the operational health and performance of serverless applications. The dynamic and distributed nature of serverless deployments requires continuous observation to identify and address potential issues proactively. Without comprehensive monitoring, issues such as performance bottlenecks, error spikes, and unexpected costs can go unnoticed, leading to degraded user experience and financial inefficiencies.

- Performance Optimization: Monitoring provides insights into function execution times, latency, and throughput. By analyzing these metrics, developers can identify performance bottlenecks and optimize code or resource allocation for improved efficiency. For example, if a function consistently exceeds a specific execution time threshold, it could indicate a need for code optimization, increased memory allocation, or caching strategies.

- Cost Management: Serverless platforms often employ a pay-per-use model. Monitoring resource consumption, such as function invocations, memory usage, and network traffic, is essential for cost optimization. Analyzing these metrics allows developers to identify functions that are consuming excessive resources and adjust their configurations or optimize their code to reduce costs. For example, by monitoring invocation counts and execution times, you can pinpoint functions that are being over-invoked or taking longer than expected, leading to unnecessary costs.

- Error Detection and Resolution: Monitoring systems capture error logs and exceptions generated by serverless functions. Analyzing these logs helps developers quickly identify the root cause of issues and implement corrective actions. Error monitoring also enables the establishment of alerting mechanisms to notify developers of critical failures, allowing for prompt intervention and minimizing downtime.

- Security and Compliance: Monitoring plays a vital role in security and compliance. Monitoring logs can reveal security incidents, such as unauthorized access attempts or data breaches. Furthermore, monitoring ensures that serverless applications comply with regulatory requirements by tracking user activity, data access, and other security-related events.

Methods for Monitoring and Debugging Serverless Functions

Effective monitoring and debugging of serverless functions rely on a combination of techniques and tools. Logging, tracing, and metrics collection are fundamental components of a comprehensive monitoring strategy. These methods provide visibility into the behavior of serverless applications, enabling developers to diagnose and resolve issues efficiently.

- Logging: Logging is the process of recording events and messages generated by serverless functions. It provides a chronological record of function executions, including input parameters, output results, error messages, and timestamps. Logging is essential for debugging, auditing, and understanding the behavior of serverless applications.

- Structured Logging: Using structured logging formats, such as JSON, enhances the usability of logs. Structured logs make it easier to search, filter, and analyze log data. This allows for more efficient identification of patterns and anomalies.

- Log Aggregation: Log aggregation tools collect logs from multiple sources and centralize them for analysis. Tools like Amazon CloudWatch Logs, Google Cloud Logging, and Azure Monitor provide centralized logging capabilities, allowing developers to search and analyze logs from various functions and services.

- Log Filtering and Alerting: Setting up filters and alerts based on specific log events helps developers identify and respond to critical issues quickly. For example, an alert can be triggered when a function encounters an error or when a specific threshold is exceeded.

- Tracing: Tracing provides a way to track the execution of a request as it flows through multiple serverless functions and services. Distributed tracing tools, such as AWS X-Ray, Google Cloud Trace, and Azure Application Insights, correlate events across different components, providing a comprehensive view of the request’s journey.

- Span Creation: Tracing tools create spans to represent individual operations within a request. Each span contains information about the operation, such as its start and end times, duration, and any associated metadata.

- Correlation IDs: Correlation IDs are used to link spans together, enabling the reconstruction of the request’s complete flow.

- Performance Analysis: Tracing data helps identify performance bottlenecks and latency issues by visualizing the time spent in each operation. This allows for the optimization of slow functions or services.

- Metrics Collection: Metrics provide quantitative data about the performance and health of serverless applications. Serverless platforms automatically collect a range of metrics, such as function invocations, execution times, memory usage, and error rates. These metrics are crucial for monitoring performance, identifying trends, and detecting anomalies.

- Custom Metrics: Developers can define custom metrics to track application-specific behavior. Custom metrics can provide insights into business processes, user behavior, or any other relevant aspects of the application.

- Dashboards: Dashboards provide a visual representation of metrics, allowing developers to monitor the health and performance of serverless applications at a glance. Dashboards can be customized to display relevant metrics and alerts.

- Alerting: Alerting mechanisms can be configured to notify developers when metrics exceed predefined thresholds. This allows for proactive response to potential issues.
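Structured logging and correlation IDs combine naturally. The following sketch uses only the Python standard library; the logger name, field names, and `handler` function are illustrative assumptions rather than any platform's required format.

```python
import json
import logging
import time
import uuid

class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""
    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "message": record.getMessage(),
            "correlation_id": getattr(record, "correlation_id", None),
            "duration_ms": getattr(record, "duration_ms", None),
        })

logger = logging.getLogger("orders")
_stream_handler = logging.StreamHandler()
_stream_handler.setFormatter(JsonFormatter())
logger.addHandler(_stream_handler)
logger.setLevel(logging.INFO)

def handler(event, context=None):
    # Reuse the caller's correlation ID if present so logs can be joined later.
    correlation_id = event.get("correlation_id") or str(uuid.uuid4())
    start = time.perf_counter()
    # ... business logic would run here ...
    duration_ms = round((time.perf_counter() - start) * 1000, 2)
    logger.info("order processed",
                extra={"correlation_id": correlation_id, "duration_ms": duration_ms})
    return {"correlation_id": correlation_id}
```

Because every line is JSON with a `correlation_id` field, a log aggregation tool can filter on that field to reconstruct one request across several functions.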

Troubleshooting Procedure for Common Serverless Issues

A structured troubleshooting procedure is essential for efficiently resolving issues in serverless applications. This procedure involves a series of steps, from initial investigation to root cause analysis and remediation.

- Identify the Problem: Begin by gathering information about the issue. This may involve reviewing user reports, monitoring alerts, and examining error logs. Clearly define the symptoms of the problem. For example, is the function failing? Is it slow? Are there error messages?

- Reproduce the Issue: Attempt to reproduce the issue in a controlled environment. This can help isolate the problem and verify the effectiveness of potential solutions. This might involve triggering the function with the same input data that caused the error.

- Check the Logs: Examine the logs generated by the function and related services. Look for error messages, exceptions, and other relevant information. Pay attention to timestamps and correlation IDs to trace the flow of requests across different components.

- Use Tracing: If available, use tracing tools to visualize the flow of requests and identify performance bottlenecks. Examine the spans associated with the failing function and related services. Look for long execution times, errors, or other anomalies.

- Analyze Metrics: Review metrics such as function invocations, execution times, and error rates. Identify any unusual patterns or trends. For example, a sudden increase in execution time could indicate a performance issue.

- Isolate the Root Cause: Based on the information gathered from logs, tracing, and metrics, determine the root cause of the issue. This might involve code errors, configuration issues, resource limitations, or external dependencies. For example, a common issue is exceeding the execution time limit.

- Implement a Solution: Once the root cause is identified, implement a solution. This might involve code changes, configuration updates, or resource adjustments. Ensure that the solution addresses the underlying problem and does not introduce new issues.

- Test the Solution: Test the solution thoroughly to ensure that it resolves the issue and does not introduce new problems. Reproduce the issue again to confirm that it is fixed.

- Monitor the Application: After implementing a solution, continue to monitor the application to ensure that the issue is resolved and that the application is performing as expected. Set up alerts to notify you of any future occurrences of the problem.

Serverless Cost Optimization

Serverless computing, while offering significant advantages in terms of scalability and operational efficiency, necessitates careful consideration of cost management. The pay-per-use model, a core tenet of serverless, presents both opportunities and challenges for cost optimization. Understanding the factors influencing costs and employing effective strategies is crucial for maximizing the economic benefits of serverless architectures.

Factors Impacting Serverless Application Costs

Several factors directly influence the cost of serverless applications. These factors interact, creating a complex relationship that demands a holistic approach to cost optimization.

- Function Execution Time: The duration for which a function runs is a primary cost driver. Longer execution times translate to higher costs, making efficient code and optimized resource allocation critical. For example, a function that processes a large image and takes 5 seconds to execute will cost more than a function that processes a smaller image and takes 1 second, assuming identical resource consumption per second.

- Memory Allocation: The amount of memory allocated to a function directly affects its performance and cost. Allocating more memory can improve execution speed but increases the cost per execution. Finding the optimal memory setting involves balancing performance needs with cost considerations.

- Number of Function Invocations: The frequency with which functions are invoked is a fundamental cost factor. Higher invocation rates lead to increased costs, especially for functions that are triggered frequently. Optimizing event triggers and reducing unnecessary invocations are key.

- Network Data Transfer: Data transfer in and out of serverless functions incurs costs, particularly for operations involving large datasets or frequent communication with external services. Reducing data transfer volume and employing efficient data compression techniques can help minimize these costs.

- Storage Costs: Serverless applications often rely on storage services for data persistence. The volume of data stored and the frequency of access impact storage costs. Efficient data management practices, such as data lifecycle management and object storage tiering, are crucial.

- Provisioned Concurrency: Some serverless platforms allow for pre-provisioning concurrency to handle bursts of traffic. While this can improve performance and reduce latency, it also incurs costs, even when the functions are not actively processing requests.

Strategies for Optimizing Serverless Costs

Effective cost optimization in serverless requires a proactive and multifaceted approach. The following strategies can significantly reduce operational expenses.

- Right-Sizing Functions: This involves allocating the appropriate memory and compute resources to each function. Over-provisioning leads to unnecessary costs, while under-provisioning can result in performance bottlenecks. Profiling and testing functions with varying memory settings are essential for determining the optimal configuration.

- Optimizing Code for Efficiency: Writing efficient code that minimizes execution time and resource consumption is paramount. This includes techniques such as:

- Reducing Dependencies: Minimize the number of external libraries and dependencies to reduce function size and cold start times.

- Optimizing Database Queries: Write efficient database queries to minimize data retrieval and processing costs.

- Using Efficient Algorithms: Employ algorithms with optimal time and space complexity.

- Implementing Event-Driven Architectures: Leveraging event-driven architectures can reduce the number of function invocations by triggering functions only when necessary. This can lead to significant cost savings compared to polling-based approaches.

- Batching and Aggregation: Batching multiple requests into a single function invocation can reduce the overhead associated with individual invocations, particularly for operations involving external services.

- Caching: Implementing caching mechanisms can reduce the need to repeatedly access external resources, such as databases or APIs, thereby reducing costs and improving performance.

- Monitoring and Alerting: Implementing robust monitoring and alerting systems allows for the early detection of cost anomalies and performance issues. Setting up alerts based on cost thresholds can help prevent unexpected cost overruns.

- Choosing the Right Platform and Region: Different cloud providers and regions may offer varying pricing models. Selecting the most cost-effective platform and region for a given workload is an important consideration.

- Using Serverless Frameworks and Tools: Utilizing serverless frameworks and tools can streamline the development and deployment process, making it easier to optimize code and manage costs.
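The caching strategy can be illustrated with a module-level cache, which on most FaaS platforms survives between invocations while an instance stays warm. This is a sketch under that assumption; `load_product`, the TTL value, and the `stats` counter are hypothetical and exist only to make the effect observable.

```python
import time

CACHE_TTL_SECONDS = 300
_cache = {}                   # module-level: reused across warm invocations
stats = {"backend_calls": 0}  # illustration only: counts slow lookups

def load_product(product_id):
    """Hypothetical expensive call to a database or external API."""
    stats["backend_calls"] += 1
    return {"id": product_id}

def get_product(product_id, now=time.monotonic):
    entry = _cache.get(product_id)
    if entry is not None and now() - entry[0] < CACHE_TTL_SECONDS:
        return entry[1]       # cache hit: no backend call, no extra cost
    value = load_product(product_id)
    _cache[product_id] = (now(), value)
    return value
```

Repeated calls for the same product within the TTL reach the backend once. A cold start begins with an empty cache, so this reduces cost and latency but cannot be relied on for correctness.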

Cost Comparison: Serverless vs. Traditional Architectures

Comparing the cost of serverless and traditional architectures requires a careful assessment of the specific workload and its characteristics. A simplified example demonstrates the potential cost differences.

Consider a web application that processes user uploads. The application requires an API to handle file uploads, a database to store metadata, and a background process to resize images.

| Component | Cost Basis | Serverless (Example) | Traditional (Example) |
|---|---|---|---|
| API Gateway | API Requests | $0.0000035 per request | $0 (Managed by Load Balancer) |
| Compute (Function/VM) | Execution Time/VM Instance Hours | $0.0000002 per GB-second | $0.05 per hour (VM with 1GB RAM) |
| Database | Storage, Requests | $0.20 per GB per month, $0.0000005 per request | $0.25 per GB per month, $0.000001 per request |
| Storage | Storage, Data Transfer | $0.023 per GB per month, $0.09 per GB data transfer out | $0.023 per GB per month, $0.09 per GB data transfer out |
| Monitoring/Logging | Usage-based | $0.0000005 per log event | $0.000001 per log event |

Assumptions for a sample workload (per month):

- 1,000,000 API Requests

- 1,000,000 Image Resizing Operations (average execution time 2 seconds, 128MB memory)

- 10 GB Database Storage, 1,000,000 Database Requests

- 10 GB Storage, 10 GB Data Transfer Out

Cost Calculation (Illustrative, based on example prices):

Serverless:

- API Gateway: 1,000,000 requests × $0.0000035/request = $3.50

- Function (Image Resizing): 1,000,000 invocations × 2 seconds × 0.128 GB × $0.0000002/GB-second = $0.05

- Database: (10 GB × $0.20) + (1,000,000 requests × $0.0000005) = $2.00 + $0.50 = $2.50

- Storage: (10 GB × $0.023) + (10 GB × $0.09) = $0.23 + $0.90 = $1.13

- Monitoring/Logging: 1,000,000 log events × $0.0000005 = $0.50

- Total Serverless Cost: $3.50 + $0.05 + $2.50 + $1.13 + $0.50 = $7.68

Traditional:

- Compute (VM): 730 hours/month × $0.05/hour = $36.50

- Database: (10 GB × $0.25) + (1,000,000 requests × $0.000001) = $2.50 + $1.00 = $3.50

- Storage: (10 GB × $0.023) + (10 GB × $0.09) = $0.23 + $0.90 = $1.13

- Monitoring/Logging: 1,000,000 log events × $0.000001 = $1.00

- Total Traditional Cost: $36.50 + $3.50 + $1.13 + $1.00 = $42.13

In this simplified example, the serverless architecture is considerably cheaper ($7.68 versus $42.13), mainly because the VM is billed for all 730 hours whether or not it is busy. However, this is highly dependent on the workload and on the unit prices assumed. For sustained, high-utilization workloads, an always-on VM can be more cost-effective, while for variable or infrequent traffic serverless is often cheaper due to the pay-per-use model. The traditional architecture’s compute cost is constant, regardless of usage.

Important Considerations:

This comparison is a simplified illustration. Real-world cost comparisons must factor in additional considerations, such as development time, operational overhead, and the potential for increased developer productivity with serverless. The cost effectiveness of each architecture depends on the specific characteristics of the workload, traffic patterns, and the efficiency of the implementation.
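Estimates like this are easy to get wrong by hand, so it can help to recompute them directly from the unit prices. The sketch below uses only the example prices and workload assumptions from the table and list above; the function and variable names are illustrative.

```python
# Workload assumptions (per month), taken from the example above.
API_REQUESTS = 1_000_000
INVOCATIONS = 1_000_000
SECONDS_PER_INVOCATION = 2
MEMORY_GB = 0.128
DB_STORAGE_GB = 10
DB_REQUESTS = 1_000_000
STORAGE_GB = 10
TRANSFER_OUT_GB = 10
LOG_EVENTS = 1_000_000
HOURS_PER_MONTH = 730

def serverless_costs():
    """Line items for the serverless column, in dollars."""
    return {
        "api_gateway": API_REQUESTS * 0.0000035,
        "function": INVOCATIONS * SECONDS_PER_INVOCATION * MEMORY_GB * 0.0000002,
        "database": DB_STORAGE_GB * 0.20 + DB_REQUESTS * 0.0000005,
        "storage": STORAGE_GB * 0.023 + TRANSFER_OUT_GB * 0.09,
        "logging": LOG_EVENTS * 0.0000005,
    }

def traditional_costs():
    """Line items for the traditional (single VM) column, in dollars."""
    return {
        "compute_vm": HOURS_PER_MONTH * 0.05,
        "database": DB_STORAGE_GB * 0.25 + DB_REQUESTS * 0.000001,
        "storage": STORAGE_GB * 0.023 + TRANSFER_OUT_GB * 0.09,
        "logging": LOG_EVENTS * 0.000001,
    }

def total(costs):
    return round(sum(costs.values()), 2)

if __name__ == "__main__":
    print("serverless:", total(serverless_costs()))
    print("traditional:", total(traditional_costs()))
```

Changing a single assumption, such as `INVOCATIONS` or `SECONDS_PER_INVOCATION`, shows how quickly the balance between the two columns can shift.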

Serverless Deployment and CI/CD

Serverless architectures necessitate a streamlined and automated deployment process. Continuous Integration and Continuous Delivery (CI/CD) pipelines are crucial for managing the complexities of serverless application lifecycles, from code changes to production deployments. This approach ensures rapid iteration, minimizes manual intervention, and promotes operational efficiency.

CI/CD Pipeline for Serverless Applications

The CI/CD pipeline in a serverless environment automates the build, test, and deployment processes. It allows developers to rapidly and reliably release new features and updates.

- Source Code Management: The process begins with a source code repository (e.g., a Git repository hosted on GitHub or GitLab). Developers commit code changes to this repository.

- Build Stage: Upon code commit, the CI/CD system triggers a build process. This typically involves:

  - Installing dependencies (e.g., using npm, pip).

  - Packaging the code and dependencies into a deployable artifact (e.g., a ZIP file for AWS Lambda).

  - Performing static code analysis and linting to identify potential issues.

- Testing Stage: Automated testing is a critical component. This involves running various types of tests:

  - Unit Tests: Testing individual functions or modules in isolation.

  - Integration Tests: Testing interactions between different functions and services.

  - End-to-End Tests: Testing the entire application flow from the user’s perspective.

- Deployment Stage: Once the tests pass, the artifact is deployed to the serverless platform (e.g., AWS Lambda, Azure Functions, Google Cloud Functions). This includes:

  - Provisioning infrastructure (e.g., creating or updating functions, APIs, and other resources).

  - Configuring environment variables and other settings.

  - Deploying the code artifact.

- Monitoring and Rollback: After deployment, the pipeline should monitor the application’s performance and health. If issues are detected, the pipeline should automatically roll back to a previous stable version.
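The control flow of such a pipeline can be sketched in a few lines. This is an illustrative model only, not how any particular CI/CD product is implemented: the `Stage` class, the `deploys` flag, and the `rollback` callback are hypothetical names introduced for the example. Stages run in order, the pipeline stops at the first failure, and rollback happens only if a failure occurs after a deployment has already succeeded.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]   # returns True on success
    deploys: bool = False     # True if this stage changes the live system


def run_pipeline(stages: List[Stage], rollback: Callable[[], None]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Rollback is invoked only when a stage fails after a deploying stage
    has already succeeded, e.g. a failed post-deployment health check.
    """
    log: List[str] = []
    deployed = False
    for stage in stages:
        ok = stage.run()
        log.append(f"{stage.name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            if deployed:
                rollback()
                log.append("rollback: previous version restored")
            break
        if stage.deploys:
            deployed = True
    return log


if __name__ == "__main__":
    stages = [
        Stage("build", lambda: True),
        Stage("test", lambda: True),
        Stage("deploy", lambda: True, deploys=True),
        Stage("health-check", lambda: False),  # simulated production issue
    ]
    for line in run_pipeline(stages, rollback=lambda: None):
        print(line)
```

A failure in the build or test stage never triggers a rollback here, because nothing has been released yet; that distinction is worth calling out when describing a pipeline in an interview.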

Deploying Serverless Functions with Different Tools

Several tools are available for deploying serverless functions, each offering different features and levels of abstraction. The choice of tool depends on the complexity of the application and the development team’s preferences.

- Cloud Provider’s Native Tools: Each major cloud provider (AWS, Azure, Google Cloud) offers its own native tools for deploying serverless functions.

  - AWS: AWS provides the AWS CLI, the Serverless Application Model (SAM), and the AWS Cloud Development Kit (CDK). SAM and CDK provide higher-level abstractions for defining and deploying serverless applications.

  - Azure: Azure offers the Azure CLI, Azure Resource Manager (ARM) templates, and the Azure Functions Core Tools. ARM templates enable infrastructure-as-code deployments.

  - Google Cloud: Google Cloud provides the gcloud CLI and Cloud Build. Cloud Build can be used to automate the build and deployment process. Infrastructure on Google Cloud is also commonly defined with Terraform, although Terraform is a third-party (HashiCorp) tool rather than a Google product.

- Serverless Framework: The Serverless Framework is a popular framework that simplifies the deployment and management of serverless applications across multiple cloud providers. It was fully open source through version 3; later versions changed the licensing terms, so check the current license before adopting it. It uses a YAML-based configuration file to define the application’s resources.

- Terraform: Terraform is an infrastructure-as-code tool that can be used to provision and manage serverless infrastructure alongside other cloud resources. It uses a declarative configuration language.

- CI/CD Platforms: CI/CD platforms like Jenkins, GitLab CI, CircleCI, and GitHub Actions can be used to build and deploy serverless applications. These platforms provide features like build automation, testing, and deployment orchestration.
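Whichever tool performs the upload, what gets deployed is usually a packaged artifact. The sketch below builds a ZIP file with Python's standard library and then constructs, without running it, the argument list for the AWS CLI's `aws lambda update-function-code` command. The function name and paths are hypothetical, and the artifact is assumed to live outside the source directory so that it is not zipped into itself.

```python
import zipfile
from pathlib import Path
from typing import List


def package_function(source_dir: Path, artifact: Path) -> Path:
    """Zip every file under source_dir, storing paths relative to its root.

    The artifact path is assumed to be outside source_dir.
    """
    with zipfile.ZipFile(artifact, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(source_dir.rglob("*")):
            if path.is_file():
                zf.write(path, path.relative_to(source_dir).as_posix())
    return artifact


def aws_cli_update_command(function_name: str, artifact: Path) -> List[str]:
    """Argument list for the AWS CLI call that uploads new function code.

    Only builds the command; pass it to subprocess.run() in a real pipeline.
    """
    return [
        "aws", "lambda", "update-function-code",
        "--function-name", function_name,
        "--zip-file", f"fileb://{artifact}",
    ]


if __name__ == "__main__":
    # Hypothetical project layout: ./src holds the handler and its dependencies.
    zip_path = package_function(Path("src"), Path("function.zip"))
    print(" ".join(aws_cli_update_command("resize-image", zip_path)))
```

Higher-level tools such as SAM, CDK, or Terraform wrap these same two steps (package, then upload) behind a declarative configuration.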

Benefits of Automated Testing in a Serverless Environment

Automated testing is particularly important in a serverless environment due to the rapid development cycles and the distributed nature of serverless applications. Automated testing helps ensure the quality, reliability, and maintainability of serverless applications.

- Faster Feedback Loops: Automated tests provide immediate feedback on code changes, allowing developers to identify and fix issues quickly.

- Reduced Manual Effort: Automated tests greatly reduce the amount of repetitive manual testing, saving time and resources. Manual checks remain useful for exploratory testing.

- Improved Code Quality: Automated tests help ensure that code meets quality standards and that changes do not introduce regressions.

- Increased Confidence in Deployments: With comprehensive testing, developers can deploy new versions of their applications with greater confidence.

- Scalability and Resilience: Automated tests can be used to simulate high-load scenarios and ensure that serverless functions can handle increased traffic. They also help verify the resilience of the application to failures.

- Cost Savings: Automated testing can help reduce the cost of debugging and fixing issues in production. It also reduces the risk of downtime, which can lead to lost revenue.
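As a small illustration of the fast feedback described above, the sketch below unit-tests a Lambda-style handler in isolation. The handler, the `make_handler` factory, and the product data are hypothetical; the point is that injecting the data store lets a plain dictionary stand in for the database, so the tests run in milliseconds without any cloud access.

```python
import unittest


def make_handler(store):
    """Build a Lambda-style handler around an injected product store."""
    def handler(event, context=None):
        product_id = event.get("product_id")
        if not product_id:
            return {"statusCode": 400, "body": "product_id is required"}
        item = store.get(product_id)
        if item is None:
            return {"statusCode": 404, "body": "not found"}
        return {"statusCode": 200, "body": item["name"]}
    return handler


class HandlerTests(unittest.TestCase):
    def setUp(self):
        # A plain dict replaces the real database for the duration of the test.
        self.handler = make_handler({"p1": {"name": "Blue Mug"}})

    def test_returns_product_name(self):
        response = self.handler({"product_id": "p1"})
        self.assertEqual(response, {"statusCode": 200, "body": "Blue Mug"})

    def test_missing_id_is_rejected(self):
        self.assertEqual(self.handler({})["statusCode"], 400)

    def test_unknown_product_is_404(self):
        self.assertEqual(self.handler({"product_id": "nope"})["statusCode"], 404)
```

Saved as a module, these tests can be run with `python -m unittest`, which is the kind of command a CI testing stage would invoke on every commit.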

Serverless Use Cases and Examples

Serverless computing’s versatility has led to its adoption across diverse industries, offering benefits like reduced operational overhead, cost efficiency, and scalability. Real-world applications demonstrate its capacity to handle various workloads, from simple web applications to complex, data-intensive systems. This section explores several serverless use cases, providing detailed examples and analyzing their architectural designs, advantages, and potential challenges.

E-commerce Product Catalog and Search

E-commerce platforms leverage serverless functions to manage product catalogs and power search functionalities. This approach allows for dynamic scaling based on traffic fluctuations, ensuring optimal performance during peak shopping seasons.

Use Case: An online retailer uses serverless functions to manage its product catalog, including updates, search indexing, and image processing.

- Architecture:

  - Product Data Storage: A NoSQL database (e.g., Amazon DynamoDB, Google Cloud Firestore) stores product information, offering high availability and scalability.

  - Image Processing: When a new product is added or an image is updated, an event (e.g., an upload to an object storage service like Amazon S3 or Google Cloud Storage) triggers a serverless function (e.g., AWS Lambda, Google Cloud Functions). This function resizes, optimizes, and generates different image formats.

  - Search Indexing: Another serverless function indexes product data in a search service (e.g., Amazon OpenSearch Service, formerly Amazon Elasticsearch Service, or Google Cloud Search). This function is triggered by data changes in the product database.

  - API Gateway: An API Gateway (e.g., Amazon API Gateway, Google Cloud API Gateway) handles incoming requests for product information and search queries, routing them to the appropriate serverless functions.

- Benefits:

  - Scalability: The system automatically scales based on the number of product updates, image uploads, and search queries, ensuring optimal performance during peak traffic periods like Black Friday.

  - Cost Efficiency: Pay-per-use pricing for serverless functions and storage reduces costs compared to traditional infrastructure.

  - Faster Development: Serverless allows for faster development cycles, enabling quicker deployment of new features and product updates.

  - Reduced Operational Overhead: The cloud provider manages the underlying infrastructure, reducing the need for manual server management.

- Challenges:

  - Cold Starts: Initial function invocations can experience latency (cold starts), particularly for less frequently accessed functions. Optimizations include pre-warming functions or using provisioned concurrency.

  - Vendor Lock-in: Depending on the chosen services, the application may become tightly coupled with a specific cloud provider.

  - Debugging and Monitoring: Debugging and monitoring serverless applications can be more complex than traditional applications due to the distributed nature of the architecture. Effective logging and tracing are crucial.

  - Security: Securing serverless applications requires careful consideration of function permissions, API gateway security, and data encryption.
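The image-processing step of this architecture can be sketched as an event handler. The example below assumes the standard Amazon S3 event notification shape (`Records[].s3.bucket.name` and `Records[].s3.object.key`); the target widths and the `resized/` key layout are hypothetical, and the actual resizing (for example with an imaging library) is deliberately left out so the sketch stays self-contained. It only plans which output objects should be produced.

```python
from urllib.parse import unquote_plus

# Hypothetical output sizes and key layout; adjust to the real catalog.
TARGET_WIDTHS = (200, 800)
OUTPUT_PREFIX = "resized/"


def variant_key(key: str, width: int) -> str:
    """Map 'products/mug.jpg' to 'resized/200/products/mug.jpg'."""
    return f"{OUTPUT_PREFIX}{width}/{key}"


def handler(event, context=None):
    """Plan resize jobs for each uploaded object in an S3 event notification."""
    jobs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.startswith(OUTPUT_PREFIX):
            continue  # ignore our own output to avoid a trigger loop
        for width in TARGET_WIDTHS:
            jobs.append({
                "bucket": bucket,
                "source": key,
                "target": variant_key(key, width),
            })
    return jobs
```

Skipping keys under the output prefix matters when resized images are written back to the same bucket that triggers the function; otherwise each output would trigger another invocation.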

The scalability of this architecture is demonstrated by real-world examples. For instance, e-commerce platforms have successfully handled massive traffic spikes during sales events, with serverless functions automatically scaling to process thousands of product updates and search queries per second. Performance characteristics include low latency for product searches, typically under 100 milliseconds, and the ability to process image transformations in near real-time.

These characteristics are achieved through optimized function configurations, efficient database indexing, and the use of Content Delivery Networks (CDNs) for image delivery. For example, one major online retailer reported a 40% reduction in infrastructure costs and a 30% improvement in site performance after migrating its product catalog and search functionality to a serverless architecture.

Closing Summary

In conclusion, mastering the art of answering serverless interview questions requires a solid understanding of the underlying principles, practical experience, and the ability to articulate complex concepts clearly. From grasping the fundamentals of FaaS and event-driven architectures to addressing security concerns and optimizing costs, a well-prepared candidate can confidently navigate the interview process. By understanding the common question categories, practicing with example scenarios, and staying current with industry best practices, you can showcase your expertise and secure your position in the serverless world.

Essential Questionnaire

What are the key differences between serverless and traditional architectures in terms of scaling and resource management?

In serverless, scaling is automatic and handled by the cloud provider, which adds or removes function instances in response to demand. Resources are allocated only when needed, and you pay only for the compute time actually consumed. Traditional architectures typically require capacity planning and pre-provisioned servers, with scaling done manually or through auto-scaling rules you configure yourself, which can lead to over-provisioning and higher costs.

How does serverless improve cost efficiency compared to traditional architectures?

Serverless improves cost efficiency through pay-per-use pricing. You’re charged only for the compute time consumed by your functions. This contrasts with traditional models where you pay for idle resources, leading to significant cost savings, especially during periods of low or variable traffic.

What are some common security vulnerabilities in serverless applications, and how can they be mitigated?

Common vulnerabilities include injection attacks, insecure dependencies, and misconfigured access controls. Mitigation strategies involve input validation, regular dependency updates, least-privilege access management, and thorough security audits. Use of API gateways and WAFs can further enhance security.
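Input validation, the first mitigation listed, can be illustrated with a short sketch. The payload fields, the ID pattern, and the quantity limit are all hypothetical rules invented for the example; the idea is simply that a function should reject anything outside an explicit allow-list before the data reaches a database query or downstream service.

```python
import re

# Hypothetical rules: IDs are 1-32 characters of letters, digits, '-' or '_'.
PRODUCT_ID_PATTERN = re.compile(r"[A-Za-z0-9_-]{1,32}")
MAX_QUANTITY = 100  # assumed business limit
ALLOWED_FIELDS = {"product_id", "quantity"}


def validate_order(payload: dict) -> list:
    """Return a list of validation errors; an empty list means the payload is acceptable."""
    errors = []

    product_id = payload.get("product_id")
    if not isinstance(product_id, str) or not PRODUCT_ID_PATTERN.fullmatch(product_id):
        errors.append("product_id must be 1-32 characters from [A-Za-z0-9_-]")

    quantity = payload.get("quantity")
    # bool is a subclass of int in Python, so it is rejected explicitly.
    if (isinstance(quantity, bool) or not isinstance(quantity, int)
            or not 1 <= quantity <= MAX_QUANTITY):
        errors.append(f"quantity must be an integer between 1 and {MAX_QUANTITY}")

    unexpected = set(payload) - ALLOWED_FIELDS
    if unexpected:
        errors.append(f"unexpected fields: {sorted(unexpected)}")

    return errors
```

A handler would call `validate_order` first and return a 400 response if the list is non-empty, so that malformed or malicious input never reaches the rest of the function.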

Explain the concept of “cold starts” in serverless and how they impact performance.

A cold start is the extra latency incurred when a function is invoked and no initialized execution environment is available, so the platform must provision one and load the code before handling the request. This can hurt performance, especially in latency-sensitive applications. Techniques to mitigate cold starts include keeping functions “warm” through scheduled invocations or using provisioned concurrency.