Cloud migration presents a transformative opportunity, yet it introduces complexities, especially concerning data integrity. The successful transfer of data to the cloud necessitates rigorous validation to ensure accuracy, completeness, and consistency. This guide delves into the critical processes and techniques required to validate data integrity following a cloud migration, providing a structured approach to minimize risks and maintain data reliability.

From meticulous planning and pre-migration checks to advanced validation techniques and ongoing monitoring, this exploration covers every facet of data integrity assurance. We examine migration strategies, data comparison methods, security considerations, testing procedures, compliance requirements, and the role of disaster recovery. Furthermore, the guide highlights the tools and technologies that empower organizations to confidently navigate the cloud migration journey while safeguarding their most valuable asset: their data.

Planning and Preparation for Data Integrity Validation

The cornerstone of a successful cloud migration lies in ensuring data integrity. Meticulous planning and preparation are paramount to prevent data loss, corruption, and inconsistencies during the migration process. This phase establishes the foundation for validating data accuracy and completeness post-migration, mitigating risks and safeguarding the organization’s critical information assets.

Pre-Migration Data Validation Checklist

A comprehensive pre-migration data validation checklist is essential to proactively identify and address potential issues before the migration. This checklist helps standardize the preparation process, ensuring all necessary steps are taken.

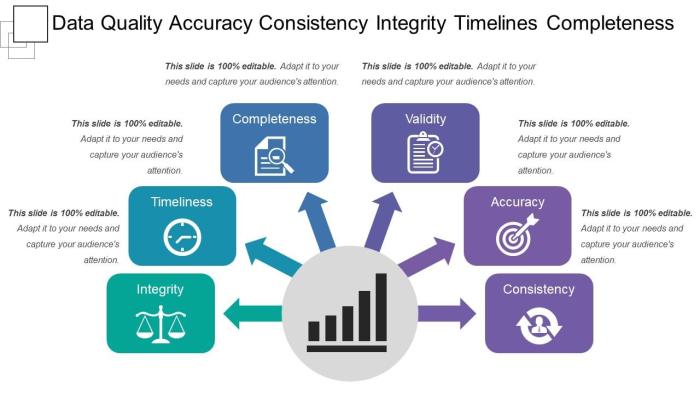

- Data Profiling and Assessment: This involves a thorough examination of the existing data. Assess data types, formats, and quality metrics, including completeness, accuracy, consistency, and validity. Identify potential data quality issues like null values, duplicate entries, and incorrect data formats.

- Data Schema Documentation: Documenting the existing data schema is a critical step. This includes table structures, column definitions, data types, relationships, and constraints. Understanding the schema is essential for mapping data during migration and validating data integrity in the cloud.

- Data Volume Analysis: Determine the volume of data to be migrated. This helps estimate migration time, storage requirements, and the resources needed for validation. Analyze data growth trends to predict future storage needs in the cloud.

- Data Transformation Requirements: Identify any necessary data transformations. This may include data cleansing, format conversions, and data aggregation. Document these transformations and create a plan for their implementation during the migration.

- Data Security and Compliance Assessment: Evaluate the existing data security and compliance posture. Determine security requirements for data in the cloud, including encryption, access controls, and data masking. Ensure compliance with relevant regulations.

- Data Backup and Recovery Plan: Implement a robust data backup and recovery plan before the migration. This plan should cover both on-premise and cloud environments, ensuring data protection during and after the migration.

- Environment Setup: Establish the target cloud environment and configure the necessary infrastructure. This includes setting up storage, compute resources, and network connectivity.

- Test Data Creation: Create a representative test dataset that mirrors the characteristics of the production data. This test data is used to validate the migration process and ensure data integrity.

- Migration Tool Selection: Select appropriate migration tools and validate them. Evaluate the capabilities of different tools, such as data replication, data synchronization, and data transformation.

- Migration Strategy Development: Define the migration strategy, including the approach (e.g., lift-and-shift, re-platforming, refactoring), timeline, and resource allocation.

Importance of Documenting Data Schemas and Structures

Detailed documentation of data schemas and structures is crucial for successful data migration. This documentation serves as a blueprint for understanding the data’s organization and relationships, guiding the migration process and ensuring data integrity.

- Data Mapping: Data schema documentation is essential for mapping data from the source environment to the target cloud environment. This involves identifying the corresponding tables, columns, and data types in the cloud.

- Data Transformation: The documentation helps determine the necessary data transformations. Data may need to be converted to different formats, cleansed, or aggregated to align with the cloud environment.

- Data Validation: Documentation enables effective data validation. By understanding the schema, it’s possible to define validation rules and ensure data integrity after the migration.

- Data Governance: Data schema documentation supports data governance. It provides a clear understanding of the data assets and their relationships, enabling better data management and compliance.

- Troubleshooting: Documentation assists in troubleshooting issues that arise during the migration or post-migration. It helps identify the root cause of data inconsistencies and errors.

- Communication: Data schema documentation facilitates communication among stakeholders, including data architects, developers, and business users.

Process for Identifying Critical Data Elements

Identifying critical data elements is a key step in data integrity validation. These elements are vital to the business and require rigorous validation to ensure accuracy and consistency. This process involves assessing data’s impact on business operations and prioritizing validation efforts.

- Business Impact Analysis: Determine the impact of data loss, corruption, or inconsistencies on business operations. This analysis involves identifying critical business processes that rely on data.

- Data Element Identification: Identify the specific data elements that are essential for these critical business processes. These elements might include customer data, financial transactions, and product information.

- Data Sensitivity Assessment: Evaluate the sensitivity of each data element. This involves considering the confidentiality, integrity, and availability requirements.

- Data Quality Assessment: Assess the current data quality of each element. This includes measuring accuracy, completeness, consistency, and validity.

- Risk Assessment: Evaluate the risks associated with data loss, corruption, or inconsistencies. This involves considering the likelihood of these events and their potential impact.

- Prioritization: Prioritize data elements based on their business impact, sensitivity, and data quality. Critical data elements should be given the highest priority for validation.

- Documentation: Document the identified critical data elements and their associated validation requirements.

Strategies for Establishing Baseline Data Integrity Metrics

Establishing baseline data integrity metrics is fundamental to measuring the success of the migration and ensuring ongoing data quality. These metrics provide a benchmark for comparison and help identify any data integrity issues.

- Define Key Performance Indicators (KPIs): Define KPIs to measure data integrity. These KPIs should be aligned with the business objectives and data quality requirements. Examples include data accuracy, completeness, consistency, and timeliness.

- Data Profiling: Conduct data profiling to understand the characteristics of the data. This includes assessing data types, formats, and quality metrics.

- Data Quality Rules: Define data quality rules to enforce data integrity. These rules specify the acceptable values, formats, and relationships for data elements.

- Data Validation: Implement data validation processes to check data against the defined quality rules. This can involve automated validation checks and manual reviews.

- Data Quality Monitoring: Establish a data quality monitoring system to track data integrity metrics over time. This system should generate reports and alerts when data quality issues are detected.

- Data Governance: Implement data governance practices to ensure data quality. This includes establishing data ownership, data stewardship, and data quality policies.

- Historical Data Analysis: Analyze historical data to establish baselines for data integrity metrics. This provides a reference point for comparison and helps identify trends.

- Reporting and Analysis: Generate reports on data integrity metrics and analyze the results. This helps identify areas for improvement and track progress.

- Continuous Improvement: Continuously monitor and improve data integrity. Regularly review and update data quality rules and processes to address evolving business needs.
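
As a rough illustration of how a baseline can be captured before migration and compared afterwards, the following sketch computes three simple metrics (row count, completeness, duplicate keys) for a list of records. The function names, the `id` and `email` fields, and the sample records are hypothetical; real baselines would normally be computed inside the database or with a profiling tool.

```python
from collections import Counter

def integrity_metrics(rows, key, required):
    """Compute simple baseline metrics for a list of dict records."""
    total = len(rows)
    # Completeness: share of records in which every required field is non-empty.
    complete = sum(
        all(r.get(f) not in (None, "") for f in required) for r in rows
    )
    # Duplicates: count of surplus records that share a key with another record.
    counts = Counter(r.get(key) for r in rows)
    duplicates = sum(c - 1 for c in counts.values() if c > 1)
    return {
        "row_count": total,
        "completeness": complete / total if total else 1.0,
        "duplicate_keys": duplicates,
    }

def compare_to_baseline(baseline, current, tolerance=0.0):
    """Return the names of metrics that are worse than the baseline."""
    regressions = []
    if current["row_count"] != baseline["row_count"]:
        regressions.append("row_count")
    if current["completeness"] < baseline["completeness"] - tolerance:
        regressions.append("completeness")
    if current["duplicate_keys"] > baseline["duplicate_keys"]:
        regressions.append("duplicate_keys")
    return regressions

# Hypothetical records: the target lost one email value during migration.
source = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b@example.com"},
    {"id": 3, "email": ""},
]
target = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 3, "email": ""},
]
baseline = integrity_metrics(source, key="id", required=["email"])
after = integrity_metrics(target, key="id", required=["email"])
regressions = compare_to_baseline(baseline, after)
print(regressions)
```

Comparing against the pre-migration baseline, rather than against an absolute threshold, separates problems introduced by the migration from quality issues that already existed in the source.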

Data Migration Strategies and Their Impact on Integrity

The choice of cloud migration strategy significantly influences the approach to data integrity validation. Different strategies present varying degrees of risk and necessitate tailored validation techniques. Understanding these implications is crucial for ensuring data consistency and accuracy throughout the migration process. This section explores the relationship between migration strategies and data integrity validation, providing insights into best practices and necessary precautions.

Cloud Migration Approaches and Implications for Data Integrity Validation

Several cloud migration approaches exist, each with unique characteristics that impact data integrity validation. Understanding these differences is essential for selecting the most appropriate strategy and implementing effective validation procedures.

- Lift-and-Shift (Rehosting): This approach involves migrating applications and data to the cloud with minimal changes. While seemingly straightforward, lift-and-shift can introduce risks. For example, the underlying infrastructure differences between on-premises and the cloud can lead to subtle data inconsistencies. Validation focuses on ensuring data replication accuracy and functionality. The primary benefit is speed, but because the application is moved unchanged, any assumptions it makes about the original environment (such as character encoding, collation, or time zone settings) can surface as compatibility issues that affect data integrity.

- Re-platforming (Lift-Tinker-and-Shift): Re-platforming involves making some application modifications to leverage cloud-native features. This strategy can offer performance improvements and cost savings. However, it also introduces more complexity in data integrity validation. Changes in the application logic or database schema during re-platforming necessitate thorough validation of data transformations. For instance, if a database is migrated from an on-premises SQL Server to a cloud-based PostgreSQL instance, the data types, indexing, and stored procedures must be carefully validated to ensure data consistency and performance.

- Refactoring (Re-architecting): Refactoring involves redesigning and rewriting the application to fully utilize cloud-native services. This approach offers the greatest flexibility and scalability, but it also presents the most significant challenges for data integrity validation. Extensive data transformations and schema changes are typically involved. Validation must cover all aspects of data mapping, transformation, and integration with new cloud services. A key consideration is the impact on data lineage and traceability, which may be lost if not properly accounted for during refactoring.

- Re-purchasing (Replace): This strategy involves replacing the existing application with a SaaS solution. Data migration in this scenario typically involves extracting data from the legacy system and importing it into the new SaaS application. Validation focuses on ensuring the completeness, accuracy, and consistency of the data within the SaaS platform. The specific validation steps depend on the SaaS provider’s data import capabilities and the complexity of the legacy data model.

Selecting a Migration Strategy Based on Data Sensitivity and Volume

The selection of a cloud migration strategy must be guided by the sensitivity and volume of the data being migrated. These factors influence the complexity of the migration process and the required validation efforts.

- Data Sensitivity: Highly sensitive data, such as financial records or protected health information (PHI), demands a more cautious approach. Re-platforming or refactoring may be preferred to gain greater control over data security and compliance. Data encryption, access controls, and rigorous validation procedures are essential. For example, when migrating patient data, the migration strategy must adhere to HIPAA regulations, requiring detailed documentation of data handling procedures and robust validation of data privacy controls.

- Data Volume: Large data volumes can significantly impact the migration time and cost. Lift-and-shift may be suitable for large datasets, but it can also lead to extended downtime if not planned correctly. Re-platforming or refactoring might be considered if the existing infrastructure cannot efficiently handle the data transfer to the cloud. Techniques such as parallel processing and incremental data migration can be employed to minimize downtime and ensure data integrity during the migration of large datasets. For example, a retail company migrating its point-of-sale transaction data would consider parallel processing to expedite the migration of a large historical dataset while maintaining data integrity.

- Data Structure Complexity: The complexity of the data structure (e.g., relational databases, unstructured data) also influences the choice of migration strategy. Re-architecting may be necessary to take advantage of cloud-native data services like NoSQL databases or data lakes. This would also require an adjustment of the data validation approach.

Data Transformation Processes and Their Impact on Integrity

Data transformation is a critical component of many cloud migration strategies. It involves modifying the data format, structure, or content to ensure compatibility with the target cloud environment. Data transformation processes can introduce risks to data integrity if not managed carefully.

- Data Mapping: Data mapping defines how data elements from the source system are transformed and mapped to elements in the target system. Incorrect or incomplete data mapping can lead to data loss or corruption. Rigorous testing and validation of the data mapping rules are essential. For example, mapping a date field from the source system to a different date format in the target system requires validation to ensure that the date values are correctly transformed and that no data is lost or misinterpreted.

- Data Cleansing: Data cleansing involves identifying and correcting errors, inconsistencies, and missing values in the data. Poor data cleansing can result in inaccurate or incomplete data in the cloud environment. Thorough data profiling and validation are necessary to ensure the quality of the cleansed data. For instance, cleansing customer address data to standardize formats and remove invalid entries helps ensure accurate delivery and improved data analysis.

- Data Enrichment: Data enrichment involves adding new data or attributes to the existing data. This process can introduce errors if the new data is inaccurate or inconsistent with the existing data. Validation must ensure the accuracy and consistency of the enriched data. For example, adding geographic coordinates to customer addresses during migration requires validating the coordinates against the address data to ensure accuracy.
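
The date-mapping case described above can be checked mechanically. The sketch below assumes, purely for illustration, that the source stores dates as `DD/MM/YYYY` and the target as `YYYY-MM-DD`. It parses both sides and reports any pair that no longer refers to the same calendar day. The format constants, function name, and sample values are hypothetical.

```python
from datetime import datetime

SOURCE_FORMAT = "%d/%m/%Y"  # assumed source layout, e.g. 31/01/2024
TARGET_FORMAT = "%Y-%m-%d"  # assumed target layout, e.g. 2024-01-31

def find_date_mismatches(pairs):
    """Return (source, target, reason) for each pair that does not match."""
    problems = []
    for source_value, target_value in pairs:
        try:
            expected = datetime.strptime(source_value, SOURCE_FORMAT).date()
        except (ValueError, TypeError):
            problems.append((source_value, target_value, "unparseable source"))
            continue
        try:
            actual = datetime.strptime(target_value, TARGET_FORMAT).date()
        except (ValueError, TypeError):
            problems.append((source_value, target_value, "unparseable target"))
            continue
        if expected != actual:
            problems.append((source_value, target_value, "value changed"))
    return problems

pairs = [
    ("31/01/2024", "2024-01-31"),  # mapped correctly
    ("03/04/2024", "2024-03-04"),  # day and month swapped during mapping
    ("15/08/2023", None),          # value lost in the target
]
mismatches = find_date_mismatches(pairs)
for item in mismatches:
    print(item)
```

Day and month swaps are worth testing explicitly because they produce valid dates for any day value of 12 or less and therefore pass simple format checks.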

Migration Steps and Validation Checks

The following table outlines the key steps in a cloud migration process, including the actions, validation checks, and expected outcomes. This table serves as a framework for planning and executing data integrity validation throughout the migration.

| Step | Action | Validation Check | Outcome |
|---|---|---|---|
| 1. Planning and Assessment | Define migration scope, select migration strategy, assess data characteristics, and identify validation requirements. | Verify the completeness of data inventory, the accuracy of the migration strategy selection, and the adequacy of the validation plan. | A well-defined migration plan with clearly identified validation requirements. |
| 2. Data Extraction | Extract data from the source system. | Verify the completeness of data extraction, the accuracy of data formats, and the consistency of data extraction methods. | A complete and accurate data extraction process with data in a format suitable for migration. |
| 3. Data Transformation | Transform the extracted data according to the defined mapping rules, cleansing rules, and enrichment requirements. | Validate the data transformation rules, the accuracy of the transformed data, and the consistency of the transformed data with the source data. Use data profiling techniques to check for inconsistencies. | Accurate and consistent data transformation with data that is ready for loading into the target system. |
| 4. Data Loading | Load the transformed data into the target cloud environment. | Verify the successful loading of all data, the accuracy of the data loaded, and the consistency of the data loaded with the transformed data. | Complete and accurate data loading into the target environment, with data available for use. |
| 5. Functional Testing | Test the functionality of the migrated application and data in the cloud environment. | Verify the application’s functionality, data retrieval accuracy, and report generation accuracy. | A fully functional application with accurate data and reporting capabilities in the cloud environment. |
| 6. Performance Testing | Test the performance of the migrated application and data in the cloud environment. | Verify that the application meets performance benchmarks, including response times and throughput. | Optimal performance of the application in the cloud environment, meeting performance requirements. |
| 7. Data Reconciliation | Compare data in the source and target systems to identify any discrepancies. | Verify data consistency between source and target systems, identify and resolve data discrepancies. | Data consistency between source and target systems, with discrepancies resolved. |
| 8. Go-Live and Post-Migration Validation | Cutover to the cloud environment and continue monitoring and validating data integrity. | Continuously monitor data integrity, application performance, and user acceptance. | A successful migration to the cloud with continuous data integrity monitoring and validation. |

Validation Techniques for Data Integrity

Ensuring data integrity after cloud migration requires a multifaceted approach. This involves employing a variety of validation techniques to confirm the accuracy, consistency, and completeness of the data transferred to the cloud environment. These techniques are crucial for identifying and rectifying potential data corruption or loss during the migration process and for maintaining data quality post-migration.

Data Validation Techniques

Various techniques can be employed to validate data integrity after cloud migration. These methods range from simple checks to more sophisticated approaches that involve comparing data sets and verifying relationships. The selection of techniques depends on factors such as the complexity of the data, the sensitivity of the information, and the specific requirements of the cloud environment.

- Checksums and Hash Functions: These are mathematical functions that generate a compact “fingerprint” value for a data set. They are used to verify that data has not been altered during transmission or storage.

- Data Comparison: This involves comparing data sets before and after migration to identify discrepancies. This can be performed using various methods, including manual review, automated scripts, and specialized data comparison tools.

- SQL Queries for Data Consistency: SQL queries are used to check data consistency, such as referential integrity and data type validation, within the cloud database.

- Data Profiling: Data profiling tools analyze data to identify patterns, anomalies, and inconsistencies. This can help detect data quality issues and assess the overall health of the migrated data.

- Business Rule Validation: Validating that the data adheres to the defined business rules, such as checking for valid ranges, formats, and relationships.

- Metadata Validation: This involves verifying that the metadata (data about data) has been correctly migrated and is consistent with the source system.

Checksums and Hash Functions for Data Verification

Checksums and hash functions provide a robust mechanism for data integrity verification. They operate by generating a concise representation of a data set, and any alteration to the data will result in a different checksum or hash value. This allows for the detection of data corruption or modification with high accuracy.

The primary advantage of using checksums and hash functions is their speed and efficiency in verifying data integrity.

- Checksums: A checksum is a value calculated from a block of data. It is typically a relatively simple calculation, such as a cyclic redundancy check (CRC). CRCs are widely used for error detection in data transmission and storage. For instance, a CRC-32 algorithm can be used to generate a 32-bit checksum for a file. If the checksum of the file after migration matches the original checksum, it suggests that the data has been transferred without errors.

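- Hash Functions: Cryptographic hash functions, such as SHA-256, are more complex than checksums and generate a fixed-length “fingerprint” of the data. Even a one-bit change to the input results in a completely different hash value, making them highly effective for detecting minor alterations. MD5 is still widely used to detect accidental corruption, but it is not collision-resistant and should not be relied on where deliberate tampering is a concern. For example, after migrating a large database table, a SHA-256 hash can be computed over the table’s rows in a deterministic order (for instance, sorted by primary key) on both sides. If the post-migration hash matches the pre-migration hash, the data is, for practical purposes, confirmed to be unchanged.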

- Implementation: These functions are implemented using software libraries and tools available in most programming languages and operating systems. They are often integrated into data transfer utilities and cloud storage services.
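
A minimal sketch of file-level verification using Python’s standard `zlib` and `hashlib` modules is shown below. It computes a CRC-32 checksum and a SHA-256 hash in a single pass, reading the file in chunks so that large exports do not need to fit in memory. The function names and the temporary files are illustrative only. In a real migration the second fingerprint would be computed on the cloud side or obtained from the storage service.

```python
import hashlib
import os
import tempfile
import zlib

def file_fingerprints(path, chunk_size=1024 * 1024):
    """Return (crc32, sha256_hex) for a file, reading it chunk by chunk."""
    crc = 0
    sha = hashlib.sha256()
    with open(path, "rb") as handle:
        while chunk := handle.read(chunk_size):
            crc = zlib.crc32(chunk, crc)  # running CRC-32 over all chunks
            sha.update(chunk)
    return crc, sha.hexdigest()

def files_match(source_path, target_path):
    """True when both the checksum and the hash agree."""
    return file_fingerprints(source_path) == file_fingerprints(target_path)

# Demonstration with temporary files standing in for source and migrated copies.
with tempfile.TemporaryDirectory() as tmp:
    original = os.path.join(tmp, "export.csv")
    good_copy = os.path.join(tmp, "export_copy.csv")
    bad_copy = os.path.join(tmp, "export_corrupt.csv")
    payload = b"id,amount\n1,10.00\n2,20.00\n"
    for path, data in [
        (original, payload),
        (good_copy, payload),
        (bad_copy, payload.replace(b"20.00", b"20.01")),
    ]:
        with open(path, "wb") as handle:
            handle.write(data)
    intact = files_match(original, good_copy)
    corrupted = files_match(original, bad_copy)

print(intact, corrupted)
```

Computing both values is optional. A CRC alone is usually enough to catch transmission errors, while the SHA-256 hash adds protection against deliberate modification.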

SQL Queries to Check Data Consistency in the Cloud Environment

SQL queries are essential for validating data consistency within the cloud database environment. They enable the verification of various data integrity constraints, such as referential integrity, data type validation, and business rules, to ensure data quality and reliability. The specific queries used will depend on the database schema and the nature of the data.

- Referential Integrity Checks: These queries verify that foreign key relationships are intact and that there are no orphaned records. This is crucial for maintaining data consistency across related tables.

- Data Type Validation: Queries can be used to ensure that data conforms to the defined data types (e.g., integers, dates, strings). This helps prevent data errors and inconsistencies.

- Range and Format Validation: Queries can check that data values fall within acceptable ranges and conform to specified formats.

- Example Queries: These are examples of SQL queries and should be adapted to the specific database schema and requirements.

- Referential Integrity Check (SQL Server Example):

SELECT COUNT(*) FROM Orders o WHERE o.CustomerID IS NOT NULL AND NOT EXISTS (SELECT 1 FROM Customers c WHERE c.CustomerID = o.CustomerID);

This query counts orders with `CustomerID` values that do not exist in the `Customers` table, indicating a referential integrity violation. `NOT EXISTS` is used rather than `NOT IN` because `NOT IN` returns no rows at all if the subquery yields a NULL.

- Data Type Validation (PostgreSQL Example):

SELECT COUNT(*) FROM Products WHERE price IS NOT NULL AND price !~ '^-?[0-9]+([.][0-9]+)?$';

This query counts records in the `Products` table where the `price` column, assumed here to have been loaded as text, does not contain a valid numeric value. A pattern match is used because a direct cast such as `price::numeric` raises an error on the first invalid value instead of counting it.

- Range Validation (MySQL Example):

SELECT COUNT(*) FROM Employees WHERE salary < 0 OR salary > 200000;

This query counts employees with salaries outside an acceptable range (e.g., less than 0 or greater than 200,000).
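
Checks like these are usually run as a batch after each load. The following sketch uses an in-memory SQLite database, via Python’s standard `sqlite3` module, as a stand-in for the cloud database so that the example is self-contained. The table contents, the `CHECKS` dictionary, and the `run_checks` helper are hypothetical, and the SQL would need adapting to the dialect of the actual target system.

```python
import sqlite3

# In-memory database standing in for the migrated cloud database.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Customers (CustomerID INTEGER PRIMARY KEY);
    CREATE TABLE Orders (OrderID INTEGER PRIMARY KEY, CustomerID INTEGER);
    CREATE TABLE Employees (EmployeeID INTEGER PRIMARY KEY, salary REAL);
    INSERT INTO Customers VALUES (1), (2);
    INSERT INTO Orders VALUES (10, 1), (11, 2), (12, 3);
    INSERT INTO Employees VALUES (100, 55000), (101, -10), (102, 250000);
""")

# Each check is written so that a result of 0 means "no violations".
CHECKS = {
    "orphaned_orders": """
        SELECT COUNT(*) FROM Orders o
        WHERE o.CustomerID IS NOT NULL
          AND NOT EXISTS (
              SELECT 1 FROM Customers c WHERE c.CustomerID = o.CustomerID
          )
    """,
    "salary_out_of_range": """
        SELECT COUNT(*) FROM Employees WHERE salary < 0 OR salary > 200000
    """,
}

def run_checks(connection, checks):
    """Run every check and return {check_name: violation_count}."""
    return {
        name: connection.execute(sql).fetchone()[0]
        for name, sql in checks.items()
    }

results = run_checks(conn, CHECKS)
failed = [name for name, count in results.items() if count > 0]
print(results)
```

Writing every check so that zero means success keeps the reporting logic uniform and makes it straightforward to add new rules without changing the runner.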

Role of Data Profiling Tools in Assessing Data Integrity

Data profiling tools play a crucial role in assessing data integrity by analyzing data to identify patterns, anomalies, and inconsistencies. They provide valuable insights into the data’s structure, content, and quality, helping to detect potential issues before and after cloud migration.

- Data Discovery: Data profiling tools can automatically discover the data’s structure, including data types, lengths, and formats. This is particularly useful for understanding the data in a new cloud environment.

- Data Quality Assessment: These tools assess data quality by identifying missing values, invalid data, and outliers. They provide metrics on data completeness, accuracy, consistency, and validity.

- Anomaly Detection: Data profiling tools can detect anomalies, such as unusual data patterns or values that deviate significantly from the norm. This can help identify data corruption or other data quality issues.

- Data Relationship Analysis: They can analyze relationships between data elements, such as primary keys and foreign keys, to ensure data consistency and referential integrity.

- Reporting and Visualization: Data profiling tools generate reports and visualizations that summarize data quality issues and provide insights into the data.

- Examples of Data Profiling Tools: Tools like Informatica Data Quality, IBM InfoSphere Information Analyzer, and AWS Glue DataBrew offer comprehensive data profiling capabilities.

- Use Case: A company migrating customer data to the cloud might use a data profiling tool to identify and correct data quality issues, such as missing addresses or inconsistent phone number formats, before the migration. The tool would analyze the data, generate reports on the quality of the data, and provide recommendations for data cleansing and transformation. This would ensure that the migrated data is accurate and reliable.
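
To make the kind of output a profiling tool produces more concrete, here is a small hand-rolled sketch. It is not how any of the tools named above work internally. It reports missing values, distinct counts, observed types, and value "shapes" (digits replaced by `9`) per column, which is one simple way to surface inconsistent phone number formats. All names and sample records are hypothetical.

```python
import re
from collections import Counter

def profile_column(values):
    """Summarize one column: missing values, distinct values, types, shapes."""
    non_null = [v for v in values if v not in (None, "")]
    # Shape of each string value: every digit becomes 9, other characters stay.
    shapes = Counter(
        re.sub(r"\d", "9", v) for v in non_null if isinstance(v, str)
    )
    return {
        "count": len(values),
        "missing": len(values) - len(non_null),
        "distinct": len(set(non_null)),
        "types": dict(Counter(type(v).__name__ for v in non_null)),
        "shapes": dict(shapes),
    }

def profile_table(rows):
    """Profile every column that appears in a list of dict records."""
    columns = sorted({name for row in rows for name in row})
    return {
        name: profile_column([row.get(name) for row in rows])
        for name in columns
    }

customers = [
    {"id": 1, "phone": "555-0100", "address": "1 Main St"},
    {"id": 2, "phone": "5550101", "address": ""},
    {"id": 3, "phone": None, "address": "3 Oak Ave"},
]
report = profile_table(customers)
print(report["phone"])
```

Running the same profile on the source before migration and on the target afterwards gives two reports that can be compared column by column.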

Data Comparison and Reconciliation Methods

Effective data comparison and reconciliation are critical components of a successful cloud migration, ensuring the integrity of data throughout the transition. The process involves systematically comparing data sets between the source and target environments, identifying discrepancies, and implementing strategies to resolve them. This rigorous approach minimizes data loss, corruption, and inconsistencies, ultimately leading to a reliable and trustworthy cloud-based system.

Methods for Comparing Data Between Source and Target Systems

Data comparison methodologies vary depending on the volume, structure, and complexity of the data being migrated. The choice of method is influenced by factors such as the required level of accuracy, the acceptable downtime, and the available resources.

- Full Data Comparison: This method involves comparing every data element from the source to the target system. It is the most comprehensive approach and provides the highest level of assurance. However, it can be time-consuming and resource-intensive, especially for large datasets. Full data comparison is often performed using checksums or hash values to verify data consistency. A commonly used hash algorithm for this purpose is MD5, which generates a 128-bit hash value.

MD5(data) = hash_value

This value is then compared between source and target. If the hashes match, the data is considered identical.

- Sample-Based Comparison: In cases where a full comparison is impractical, sampling techniques can be employed. This involves selecting a representative subset of data for comparison. The accuracy of this method depends on the size and representativeness of the sample. Statistical methods can be used to determine the appropriate sample size to achieve a desired level of confidence. For example, at a 95% confidence level, tightening the margin of error from 5% to 1% raises the required sample from roughly 385 records to roughly 9,600 (using the standard worst-case assumption of a 50% proportion and a large population).

- Metadata Comparison: This method focuses on comparing metadata, such as table schemas, data types, indexes, and constraints, rather than the actual data. It can be used as a preliminary check to identify structural differences between the source and target systems. Tools like database schema comparison utilities can automate this process.

- Business Rule Validation: Business rules that govern data integrity can be used to validate data after migration. This involves running a set of predefined rules against the migrated data to ensure that it meets the required criteria. For instance, verifying that all customer records have valid email addresses or that transaction amounts fall within acceptable ranges.

- Automated Testing: Automating the data comparison process through scripting or specialized tools can significantly improve efficiency and reduce the risk of human error. These automated tests can be scheduled to run regularly, providing continuous monitoring of data integrity. Tools such as Selenium or Appium can be used for UI-based data validation.
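
For sample-based comparison, the required sample size can be estimated with the standard formula for estimating a proportion, n = z² · p(1 − p) / e², optionally adjusted with the finite population correction. The sketch below is one way to write this. The function name and parameters are illustrative, `z = 1.96` is the usual value for a 95% confidence level, and `p = 0.5` is the conservative worst case.

```python
import math

def sample_size(z, margin, population=None, p=0.5):
    """Records to sample for a given z-score and margin of error.

    z          -- z-score for the confidence level (1.96 for 95%)
    margin     -- acceptable margin of error as a fraction (0.01 for 1%)
    population -- total record count; enables the finite population correction
    p          -- expected proportion of bad records (0.5 is the worst case)
    """
    n = (z ** 2) * p * (1 - p) / margin ** 2
    if population is not None:
        # Finite population correction: small tables need fewer samples.
        n = n / (1 + (n - 1) / population)
    # Round away floating-point noise before taking the ceiling.
    return math.ceil(round(n, 6))

large_table = sample_size(1.96, 0.01)
coarse_check = sample_size(1.96, 0.05)
small_table = sample_size(1.96, 0.01, population=50_000)
print(large_table, coarse_check, small_table)
```

The required size grows with the inverse square of the margin of error, so halving the margin roughly quadruples the number of records that must be compared.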

Approaches for Handling Discrepancies and Data Reconciliation

When discrepancies are identified, a systematic approach to reconciliation is essential. This involves analyzing the root cause of the discrepancies, implementing corrective actions, and verifying the resolution.

- Identifying the Root Cause: The first step is to determine why the discrepancies occurred. This may involve investigating data transformation processes, migration scripts, or network issues. Log files and audit trails are valuable resources for identifying the source of the problem.

- Data Correction: Based on the root cause analysis, appropriate corrective actions are taken. This could involve re-running migration processes, correcting data in the source system, or applying transformations in the target system.

- Data Transformation: Sometimes, data discrepancies arise from differences in data formats or structures between the source and target systems. Data transformation techniques, such as data type conversion, data cleansing, and data enrichment, can be applied to resolve these issues.

- Data Enrichment: In certain scenarios, data in the target system may need to be augmented with additional information to meet the business requirements. This process involves adding missing data or enhancing existing data fields.

- Documentation and Reporting: Thorough documentation of discrepancies, their causes, and the actions taken to resolve them is crucial. Reports should be generated to track the progress of reconciliation and provide insights into the overall data migration process.

Using Data Comparison Tools to Identify Data Loss or Corruption

Data comparison tools are essential for automating the data integrity validation process. These tools provide functionalities for comparing data sets, identifying discrepancies, and generating reports.

- Data Comparison Tool Functionality: These tools typically offer features like data profiling, data mapping, data comparison, and data reconciliation. They can compare data at various levels, from individual records to entire tables.

- Data Profiling: Before comparison, data profiling helps understand the data’s characteristics, such as data types, value ranges, and data quality issues. This information can be used to configure the comparison process and identify potential problems.

- Data Mapping: Data mapping defines how data elements from the source system are mapped to the target system. This ensures that data is correctly transformed and loaded during migration.

- Reporting: Data comparison tools generate detailed reports that highlight discrepancies, including the type of discrepancy, the affected data elements, and the severity of the issue.

- Examples of Data Comparison Tools: Data integration platforms such as IBM InfoSphere DataStage, Informatica PowerCenter, and Microsoft SQL Server Integration Services (SSIS) are commonly used to build comparison and reconciliation jobs. Apache NiFi is an open-source option, and Talend has also offered an open-source edition of its data integration product.

Steps Involved in Data Reconciliation

The data reconciliation process is a structured approach to resolving data discrepancies. The following table outlines the steps involved, including the type of discrepancy, the action taken, the resolution status, and the verification method.

| Discrepancy Type | Action Taken | Resolution Status | Verification Method |
|---|---|---|---|
| Missing Records | Re-run migration process for missing records, if the issue persists, manually input the missing records. | Resolved/Partially Resolved | Verify counts, checksum comparison, manual verification. |
| Data Type Mismatch | Correct the data type in the target system or apply data transformation to the migrated data. | Resolved | Verify data type conversion, validate against data profiling results. |
| Incorrect Values | Investigate the source of the incorrect values and correct them in the source system or apply transformation rules to correct the data. | Resolved | Manual review of corrected data, comparison of checksums. |
| Duplicated Records | Identify and remove duplicate records, or implement a deduplication process. | Resolved | Check record counts before and after deduplication, verify unique identifiers. |
| Data Corruption | Restore data from backups, or repair corrupted data using specialized tools. | Resolved | Verify data integrity with checksums or hash values, perform manual data review. |
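The count and checksum verification methods listed in the table can be sketched in a few lines of Python. This is a minimal illustration, not a replacement for a dedicated comparison tool: it assumes both systems can export rows as dictionaries sharing a unique `id` column, and the function names `row_checksum` and `reconcile` are hypothetical.

```python
import hashlib


def row_checksum(row: dict) -> str:
    """Return a SHA-256 digest of a row, with columns in sorted order."""
    canonical = "|".join(f"{col}={row[col]!r}" for col in sorted(row))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key="id"):
    """Classify discrepancies between two lists of row dictionaries."""
    source = {row[key]: row_checksum(row) for row in source_rows}
    target = {row[key]: row_checksum(row) for row in target_rows}
    shared = source.keys() & target.keys()
    return {
        "source_count": len(source_rows),
        "target_count": len(target_rows),
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        "mismatched": sorted(k for k in shared if source[k] != target[k]),
    }


# Illustrative data: record 3 was never migrated, record 2 was altered.
source_rows = [
    {"id": 1, "name": "Ada", "city": "London"},
    {"id": 2, "name": "Grace", "city": "New York"},
    {"id": 3, "name": "Linus", "city": "Helsinki"},
]
target_rows = [
    {"id": 1, "name": "Ada", "city": "London"},
    {"id": 2, "name": "Grace", "city": "NYC"},
]

report = reconcile(source_rows, target_rows)
print(report)
```

Comparing digests rather than full rows keeps the comparison cheap for wide tables. A real job would also need to normalize values (time zones, trailing whitespace, numeric precision) before hashing, otherwise harmless representation differences show up as mismatches.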

Data Quality Monitoring and Reporting

Maintaining data integrity post-migration necessitates a robust data quality monitoring and reporting framework. This framework provides continuous insights into data health, enabling proactive identification and resolution of issues. It involves establishing a system for ongoing checks, automated alerts, and comprehensive reporting to ensure the migrated data remains accurate, complete, and consistent.

Design of a Data Quality Monitoring System

A well-designed data quality monitoring system is crucial for sustained data integrity. This system should encompass automated processes to assess data against predefined quality rules and thresholds.

- Defining Data Quality Rules: The initial step involves establishing specific data quality rules tailored to the migrated data. These rules are based on business requirements, data governance policies, and data characteristics. Examples include:

  - Completeness: Ensuring all required fields are populated (e.g., customer names, addresses).

  - Accuracy: Validating data against permissible ranges or formats (e.g., date formats, numeric values).

  - Consistency: Checking for consistency across different data sources or systems (e.g., matching customer identifiers).

  - Validity: Confirming data adheres to pre-defined business rules (e.g., product codes, order statuses).

- Automated Data Profiling: Implement automated data profiling to analyze data characteristics. This includes:

  - Data Type Analysis: Identifying data types (e.g., integer, string, date) for each column.

  - Null Value Analysis: Determining the percentage of null values in each column.

  - Value Frequency Analysis: Analyzing the frequency of distinct values in columns.

  - Pattern Analysis: Identifying patterns in data (e.g., email formats, phone number formats).

- Continuous Monitoring: The monitoring system should run on a schedule (e.g., daily, weekly) to continuously assess data quality. The frequency should be determined based on the criticality of the data and the rate of change.

- Data Quality Dashboards: Create interactive dashboards to visualize data quality metrics. These dashboards provide a real-time view of data quality status, allowing for quick identification of issues.

- Alerting and Notification: Set up automated alerts that trigger when data quality thresholds are breached. These alerts should be sent to relevant stakeholders to facilitate prompt issue resolution.
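The automated profiling step above (data type, null value, and value frequency analysis) can be illustrated with the standard library alone. This is a sketch under the assumption that rows arrive as dictionaries; `profile_column` and the sample columns are hypothetical names chosen for the example.

```python
from collections import Counter


def profile_column(rows, column):
    """Summarize data types, null percentage, and most frequent values."""
    values = [row.get(column) for row in rows]
    non_null = [value for value in values if value is not None]
    total = len(values)
    null_pct = 100.0 * (total - len(non_null)) / total if total else 0.0
    return {
        "types": sorted({type(value).__name__ for value in non_null}),
        "null_pct": null_pct,
        "top_values": Counter(non_null).most_common(3),
    }


rows = [
    {"customer_id": 1, "email": "a@example.com", "status": "active"},
    {"customer_id": 2, "email": None, "status": "active"},
    {"customer_id": 3, "email": "c@example.com", "status": "closed"},
    {"customer_id": 4, "email": "d@example.com", "status": "active"},
]

profile = {col: profile_column(rows, col) for col in ("customer_id", "email", "status")}
for column, summary in profile.items():
    print(column, summary)
```

Running the same profile on a schedule against source and target, and storing the summaries, gives the monitoring system a baseline to compare against.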

Automated Alerts for Data Quality Issues

Automated alerts are essential for timely identification and resolution of data quality problems. They enable proactive intervention, preventing data issues from propagating through the system.

- Threshold-Based Alerts: Configure alerts to trigger when specific data quality metrics exceed predefined thresholds. For example:

  - If the percentage of missing values in a critical field exceeds 5%.

  - If the number of invalid records in a customer address table exceeds 1%.

- Anomaly Detection: Implement anomaly detection techniques to identify unusual data patterns that might indicate data quality problems. Examples:

  - Sudden spikes in the number of duplicate records.

  - Unexpected changes in data distribution.

- Alert Escalation: Establish an alert escalation process to ensure issues are addressed promptly. This involves:

  - Defining alert severity levels (e.g., critical, high, medium, low).

  - Assigning alert recipients based on severity levels.

  - Setting up escalation paths for unresolved alerts.

- Alert Management System: Utilize an alert management system to track alerts, document resolutions, and analyze trends. This system helps in understanding the root causes of data quality issues.
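As a rough sketch of threshold-based alerting with severity-based routing, the following Python uses the 5% and 1% thresholds mentioned above. The metric names, recipient addresses, and the `evaluate` function are illustrative assumptions; a production system would send the alerts through whatever notification service the organization already uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit_pct: float
    severity: str


# Hypothetical rules mirroring the examples in the text.
THRESHOLDS = [
    Threshold("missing_critical_field_pct", 5.0, "critical"),
    Threshold("invalid_address_pct", 1.0, "high"),
]

# Hypothetical routing table: who is notified at each severity level.
RECIPIENTS = {
    "critical": ["oncall@example.com", "data-owner@example.com"],
    "high": ["data-quality@example.com"],
}


def evaluate(metrics):
    """Return one alert per metric that strictly exceeds its threshold."""
    alerts = []
    for rule in THRESHOLDS:
        value = metrics.get(rule.metric)
        if value is not None and value > rule.limit_pct:
            alerts.append({
                "metric": rule.metric,
                "value": value,
                "limit": rule.limit_pct,
                "severity": rule.severity,
                "notify": RECIPIENTS.get(rule.severity, []),
            })
    return alerts


alerts = evaluate({"missing_critical_field_pct": 7.2, "invalid_address_pct": 0.4})
for alert in alerts:
    print(alert)
```

Keeping thresholds and recipients in data rather than in code makes it easier to review and adjust them as part of data governance.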

Key Metrics for a Data Integrity Report

A comprehensive data integrity report should include a set of key metrics that provide insights into the overall data health. These metrics should be tracked over time to identify trends and assess the effectiveness of data quality initiatives.

- Completeness Rate: The percentage of records with all required fields populated.

- Accuracy Rate: The percentage of data that meets accuracy rules (e.g., valid formats, correct values).

- Consistency Rate: The percentage of data that is consistent across different systems or data sources.

- Validity Rate: The percentage of data that adheres to business rules and constraints.

- Duplicate Record Count: The number of duplicate records identified.

- Data Volume Changes: The changes in the number of records or data size over time.

- Error Rate: The percentage of records with errors or anomalies.

- Issue Resolution Time: The average time taken to resolve data quality issues.

- Data Lineage Information: Tracking the origin and transformations applied to the data.
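Several of these metrics reduce to simple ratios over the record set. The sketch below computes a completeness rate, an accuracy rate (limited here to one email format rule), and a duplicate count. The required fields, the regular expression, and the `integrity_metrics` function are assumptions made for illustration; real rules come from the organization's data quality definitions.

```python
import re

REQUIRED_FIELDS = ("customer_id", "name", "email")  # assumed required fields
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately simple format rule


def percentage(part, total):
    return round(100.0 * part / total, 1) if total else 0.0


def integrity_metrics(rows):
    """Compute a few report metrics for a list of row dictionaries."""
    total = len(rows)
    complete = sum(
        all(row.get(field) not in (None, "") for field in REQUIRED_FIELDS)
        for row in rows
    )
    accurate = sum(
        bool(row.get("email") and EMAIL_RE.match(row["email"])) for row in rows
    )
    ids = [row.get("customer_id") for row in rows]
    return {
        "record_count": total,
        "completeness_rate": percentage(complete, total),
        "accuracy_rate": percentage(accurate, total),
        "duplicate_record_count": len(ids) - len(set(ids)),
    }


rows = [
    {"customer_id": 1, "name": "Ada", "email": "ada@example.com"},
    {"customer_id": 2, "name": "", "email": "grace@example.com"},
    {"customer_id": 3, "name": "Linus", "email": "linus-at-example"},
    {"customer_id": 3, "name": "Linus", "email": "linus@example.com"},
]

metrics = integrity_metrics(rows)
print(metrics)
```

Storing one such dictionary per run, with a timestamp, is enough to plot the trends the report is meant to show.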

Use of Data Visualization Tools

Data visualization tools are essential for effectively communicating data integrity trends and insights. They transform complex data into easily understandable visual representations, facilitating better decision-making.

- Dashboards: Create interactive dashboards that display key data quality metrics in real-time. Dashboards should include charts, graphs, and tables that allow users to drill down into the data.

For example, a dashboard might display a bar chart showing the completeness rate of customer data over the last quarter, along with a table listing the top 10 fields with the highest number of missing values.

- Trend Analysis: Use line charts to visualize data quality trends over time. This helps in identifying patterns and anomalies.

For instance, a line chart showing the error rate over several months can reveal whether data quality is improving or deteriorating.

- Heatmaps: Employ heatmaps to visualize data quality across different data domains or dimensions. This allows for identifying areas of concern.

A heatmap could be used to show the accuracy rate of different data fields, with warmer colors indicating lower accuracy rates and cooler colors indicating higher accuracy rates.

- Geospatial Analysis: Use geospatial visualizations to analyze data quality issues related to location data.

For example, a map can highlight regions with a high number of invalid addresses.

- Alert Visualization: Integrate alerts and notifications directly into data visualizations. This enables users to quickly identify and address data quality issues.

A visual indicator (e.g., a red flag) can appear on a dashboard when a data quality threshold is breached, prompting immediate action.

Security Considerations and Data Integrity

Data security and data integrity are inextricably linked in the cloud environment. A robust security posture is crucial for maintaining data integrity throughout the migration process and post-migration operations. Weaknesses in security can expose data to corruption, unauthorized modification, or deletion, directly impacting the reliability and trustworthiness of the data. Addressing security vulnerabilities is, therefore, paramount for ensuring the successful migration and ongoing operation of data in the cloud.

Relationship Between Data Security and Data Integrity

Data security, encompassing measures to protect data from unauthorized access, use, disclosure, disruption, modification, or destruction, directly underpins data integrity. Data integrity, defined as the accuracy, consistency, and trustworthiness of data throughout its lifecycle, is inherently dependent on the effectiveness of security controls. Compromises to security, such as successful cyberattacks or insider threats, can directly lead to data integrity breaches.

Conversely, strong security measures, like encryption, access controls, and audit logging, act as safeguards, preserving data integrity by preventing unauthorized alterations and ensuring data remains in its original, verified state. The relationship is a symbiotic one; one cannot exist effectively without the other in the cloud.

Security Measures for Data Integrity During and After Migration

Implementing comprehensive security measures is essential for safeguarding data integrity during and after cloud migration. This involves a multi-layered approach encompassing various security controls:

- Encryption: Encryption protects data confidentiality by transforming data into an unreadable format, and authenticated encryption modes (e.g., AES-256-GCM) also make unauthorized modification detectable. Encryption should be applied at rest (e.g., data stored in cloud storage) and in transit (e.g., data moving between on-premises and the cloud). Utilizing strong encryption algorithms (e.g., AES-256) and managing encryption keys securely are crucial. For instance, consider a healthcare organization migrating patient records: encrypting these records both while stored in cloud databases and during transmission to clinical systems ensures that even if unauthorized access occurs, the data remains unreadable and tampering can be detected.

- Access Controls: Implementing robust access controls limits who can access and modify data. Role-Based Access Control (RBAC) and Attribute-Based Access Control (ABAC) are effective methods. RBAC assigns permissions based on job roles, while ABAC uses attributes to define access policies. For example, during a financial institution’s migration, RBAC can restrict access to sensitive financial data to specific teams (e.g., finance, auditing) based on their roles. ABAC can further refine this by considering attributes like the user’s location or device used to access the data.

- Multi-Factor Authentication (MFA): MFA adds an extra layer of security by requiring users to provide multiple verification factors (e.g., password, code from a mobile device). This significantly reduces the risk of unauthorized access due to compromised credentials. During and after migration, all administrative accounts and any user accounts with access to sensitive data should have MFA enabled.

- Regular Security Audits and Penetration Testing: Periodic security audits and penetration testing identify vulnerabilities and weaknesses in the cloud environment. These assessments help ensure that security controls are effective and that data integrity is not at risk. The findings of these tests should be addressed promptly.

- Data Loss Prevention (DLP) Solutions: DLP solutions monitor and prevent sensitive data from leaving the cloud environment. They can identify and block unauthorized data transfers or modifications.

- Immutable Storage: Using immutable storage for critical data ensures that data cannot be altered or deleted after it is written. This provides an extra layer of protection against accidental or malicious data modification. For example, in the context of legal document storage, immutable storage guarantees the authenticity and integrity of the documents over time.
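Alongside these controls, a cryptographic digest is a common way to confirm that a file arrived unchanged. The sketch below hashes a file in chunks with SHA-256 and compares the source and target digests. The file names and the `verify_transfer` helper are hypothetical. Note that a plain hash mainly catches accidental corruption; to detect deliberate tampering, the reference digest has to be kept somewhere the attacker cannot modify, or replaced with a keyed HMAC or a digital signature.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large exports do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_transfer(source_path, target_path):
    """Return True when both files have the same SHA-256 digest."""
    return hmac.compare_digest(sha256_of_file(source_path), sha256_of_file(target_path))


# Demonstration with temporary files standing in for an export and its copy.
with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "export.csv"
    copy = Path(tmp) / "export_copy.csv"
    source.write_bytes(b"id,name\n1,Ada\n")
    copy.write_bytes(source.read_bytes())
    intact = verify_transfer(source, copy)

    copy.write_bytes(b"id,name\n1,Eve\n")  # simulate a modified copy
    tampering_detected = not verify_transfer(source, copy)

print(intact, tampering_detected)
```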

Addressing Potential Security Vulnerabilities that Could Compromise Data Integrity

Cloud environments are subject to various security vulnerabilities that can impact data integrity. Proactive measures are necessary to mitigate these risks.

- Configuration Errors: Misconfigured cloud services are a common source of vulnerabilities. Regularly reviewing and validating cloud configurations against security best practices and organizational policies is essential. Tools like cloud security posture management (CSPM) solutions can automate the detection of misconfigurations. For instance, if an Amazon S3 bucket is incorrectly configured to allow public access, it can lead to unauthorized data modification or deletion.

- Insider Threats: Malicious or negligent insiders can compromise data integrity. Implementing least privilege access, regularly monitoring user activity, and conducting background checks can help mitigate insider threats. Employing Data Loss Prevention (DLP) strategies can also help.

- Malware and Ransomware: Malware and ransomware attacks can encrypt or corrupt data. Implementing robust endpoint protection, network segmentation, and regular data backups can protect against these threats. For example, if a ransomware attack encrypts a database, a recent and verified backup is crucial for restoring data integrity.

- Supply Chain Attacks: Third-party services or software used in the cloud environment can introduce vulnerabilities. Carefully vetting third-party vendors and regularly patching software and dependencies are essential. Consider the scenario where a compromised library used in a cloud application allows attackers to inject malicious code, which then modifies the application’s data.

- Lack of Patching: Failing to apply security patches to cloud infrastructure and applications leaves systems vulnerable to known exploits. Establishing a robust patching and vulnerability management process is crucial.

Impact of Access Controls on Data Integrity and Solutions

Access controls play a critical role in maintaining data integrity by limiting who can access, modify, or delete data. Inadequate access controls can lead to data breaches, unauthorized modifications, and data corruption.

- Principle of Least Privilege: Granting users only the minimum level of access necessary to perform their job functions is a fundamental security principle. This reduces the potential impact of compromised credentials or insider threats. For example, an employee in a marketing department should not have access to financial data.

- Role-Based Access Control (RBAC): RBAC assigns permissions based on job roles, simplifying access management and ensuring consistency. Regularly reviewing and updating role assignments is necessary.

- Attribute-Based Access Control (ABAC): ABAC allows for more granular and dynamic access control policies based on attributes such as user location, device, and time of day. ABAC provides more flexibility and control. For instance, a user accessing sensitive data from an unrecognized device might be denied access.

- Regular Access Audits: Regularly auditing access logs helps identify and address any unauthorized access attempts or suspicious activity. This allows the organization to detect and respond to potential data integrity breaches.

- Implementation of Zero Trust Security: Zero trust security models assume that no user or device, inside or outside the network, should be trusted by default. This approach requires strict verification for every user and device before granting access to resources. This is particularly useful in cloud environments where the perimeter is less defined.
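To make the difference between RBAC and ABAC concrete, here is a small policy check that applies a role lookup first and an attribute rule second. Everything in it (role names, permission strings, the allowed-country list, the `is_allowed` function) is a hypothetical example rather than the API of any particular cloud provider; in practice these policies live in the provider's identity and access management service.

```python
from dataclasses import dataclass

# RBAC: permissions granted per role (least privilege: marketing has no ledger access).
ROLE_PERMISSIONS = {
    "finance": {"ledger:read", "ledger:write"},
    "auditing": {"ledger:read"},
    "marketing": {"campaign:read", "campaign:write"},
}

# ABAC: attribute constraints for sensitive resources (assumed values).
ALLOWED_COUNTRIES = {"DE", "US"}


@dataclass(frozen=True)
class AccessRequest:
    role: str
    permission: str
    device_trusted: bool
    country: str


def is_allowed(request):
    """Deny by default; allow only when both the role and attribute checks pass."""
    if request.permission not in ROLE_PERMISSIONS.get(request.role, set()):
        return False
    if request.permission.startswith("ledger:"):
        return request.device_trusted and request.country in ALLOWED_COUNTRIES
    return True


decisions = {
    "finance_write": is_allowed(AccessRequest("finance", "ledger:write", True, "DE")),
    "marketing_ledger": is_allowed(AccessRequest("marketing", "ledger:read", True, "DE")),
    "auditor_untrusted_device": is_allowed(AccessRequest("auditing", "ledger:read", False, "US")),
    "auditor_write": is_allowed(AccessRequest("auditing", "ledger:write", True, "US")),
}
print(decisions)
```

The deny-by-default structure matches the zero trust idea described above: a request is rejected unless every check explicitly passes.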

Testing and Validation Procedures

Data integrity validation post-migration is a crucial phase, ensuring that the data transferred to the cloud environment remains consistent, accurate, and complete. This process involves a systematic approach to verify data quality across various dimensions, mitigating potential risks and maintaining data governance. The following sections detail a comprehensive testing plan and associated procedures for validating data integrity after cloud migration.

Creating a Comprehensive Testing Plan for Data Integrity Validation

A well-defined testing plan is essential for a successful data integrity validation process. It should encompass various aspects, including scope, objectives, test cases, resources, and timelines. The plan serves as a roadmap, guiding the validation efforts and ensuring a consistent and repeatable process.

- Define the Scope: Clearly delineate the data sets, systems, and processes that will be subject to validation. Specify the boundaries of the validation effort, including the source and target environments. For example, if migrating a customer relationship management (CRM) database, the scope should include all tables related to customer information, sales orders, and associated transactional data.

- Establish Objectives: State the specific goals of the testing process. Objectives should be measurable and aligned with the data integrity requirements. Examples include verifying data completeness (e.g., ensuring all records have been migrated), data accuracy (e.g., confirming the correctness of customer addresses), and data consistency (e.g., maintaining referential integrity across tables).

- Develop Test Cases: Design a comprehensive set of test cases covering different data scenarios and validation criteria. Each test case should have a clear objective, input data, expected results, and actual results. The test cases should be categorized based on the type of validation performed, such as data completeness, accuracy, and consistency.

- Allocate Resources: Identify and assign the necessary resources for the testing process, including personnel, tools, and infrastructure. This includes data analysts, database administrators, and testing specialists. Ensure that the team has the required skills and access to the necessary environments and data.

- Establish Timelines: Create a realistic schedule for the testing process, including milestones and deadlines. Consider the complexity of the data migration, the size of the data sets, and the availability of resources. The timeline should allow sufficient time for testing, analysis, and remediation of any identified issues.

Testing Scenarios: Positive, Negative, and Edge Cases

Employing diverse testing scenarios ensures thorough data integrity validation. This includes positive, negative, and edge cases to assess data behavior under various conditions.

- Positive Test Cases: These cases verify that the data migration process functions correctly under expected conditions. They validate the successful transfer of valid data and confirm that data integrity is maintained.

  - Example: Migrating a valid customer record with complete contact information, including name, address, and phone number. The expected outcome is that the record is successfully migrated to the cloud environment with all fields populated correctly.

- Negative Test Cases: These cases assess the system’s ability to handle invalid or incomplete data. They verify that the system correctly identifies and handles errors, preventing data corruption or inconsistencies.

  - Example: Migrating a customer record with an invalid email address format. The expected outcome is that the migration process identifies the invalid email address and either rejects the record or flags it for correction, without allowing it to corrupt the data.

- Edge Cases: These cases test the system’s behavior at the boundaries of its operational parameters. They identify potential vulnerabilities and ensure that the system can handle unusual or extreme data conditions.

  - Example: Migrating a customer record with a very long name or address. The expected outcome is that the migration process stores the long strings without silent truncation or data corruption. This could involve checking that the target columns are wide enough to hold these values, or applying an explicit, documented truncation rule where they are not.
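The three scenario types can be expressed as a small table-driven test. The sketch below assumes a hypothetical `validate_record` function and a hypothetical 100-character limit on the target name column; the point is the shape of the test cases, not the specific rules.

```python
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately simple format rule
MAX_NAME_LEN = 100  # assumed width of the target column


def validate_record(record):
    """Return a list of problems; an empty list means the record is accepted."""
    problems = []
    name = record.get("name") or ""
    if not name:
        problems.append("name missing")
    elif len(name) > MAX_NAME_LEN:
        problems.append("name exceeds target column width")
    if not EMAIL_RE.match(record.get("email") or ""):
        problems.append("email format invalid")
    return problems


# Each case: (label, input record, expected problems).
CASES = [
    ("positive", {"name": "Ada Lovelace", "email": "ada@example.com"}, []),
    ("negative", {"name": "Ada Lovelace", "email": "not-an-email"}, ["email format invalid"]),
    ("edge: name at limit", {"name": "x" * 100, "email": "ada@example.com"}, []),
    ("edge: name over limit", {"name": "x" * 101, "email": "ada@example.com"},
     ["name exceeds target column width"]),
]

results = {label: validate_record(record) == expected for label, record, expected in CASES}
for label, passed in results.items():
    print(f"{label}: {'pass' if passed else 'FAIL'}")
```

Testing both sides of the boundary (100 and 101 characters) is what distinguishes an edge case from an ordinary negative case.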

Using Test Data Sets for Verifying Data Integrity

Test data sets are critical for simulating real-world scenarios and validating data integrity. These datasets should be carefully designed to represent different data types, volumes, and complexities. The creation and utilization of test data sets are essential for the effectiveness of the validation process.

- Create Representative Data: Construct test data sets that mirror the structure and characteristics of the production data. This includes various data types (numeric, text, date), data volumes (small, medium, large), and data relationships (primary keys, foreign keys).

- Include Data Variations: Introduce variations in the test data to cover different scenarios, including valid, invalid, and edge cases. This helps assess the system’s ability to handle different data conditions and identify potential issues. For instance, include records with missing values, incorrect formats, and values exceeding predefined limits.

- Utilize Synthetic Data: Consider using synthetic data generation tools to create large and diverse test data sets. These tools can generate data based on predefined rules and constraints, ensuring that the test data is representative and covers a wide range of scenarios.

- Implement Data Masking: When using sensitive production data for testing, implement data masking techniques to protect confidential information. This involves replacing sensitive data with anonymized or obfuscated values, while maintaining the data’s structure and relationships.

- Verify Data Relationships: Test data sets should include data designed to test referential integrity, such as primary and foreign key relationships. Verify that related data is correctly migrated and that relationships are maintained in the cloud environment.

- Document Test Data: Clearly document the test data sets, including the data structure, data types, and expected values. This documentation serves as a reference for the testing process and facilitates the analysis of test results.

Example: Consider a test data set for validating customer data. It should include records covering the relevant data types (e.g., customer names, addresses, phone numbers), data volumes (small, medium, and large customer bases), and data relationships (e.g., customer-to-order relationships). Such a set assesses the accuracy, completeness, and consistency of the customer data after migration, and shows whether the cloud environment handles data relationships correctly.

The test data includes scenarios such as:

- Valid customer records with complete information.

- Customer records with missing address information.

- Customer records with invalid phone numbers.

- Customer records with a large number of associated orders.
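The data masking step described earlier has to hide sensitive values while keeping relationships intact, so that masked orders still point at the right masked customer. One way to sketch this is deterministic pseudonymization with a keyed hash (HMAC): the same input always maps to the same token. The key, the field names, and the `pseudonymize` helper are assumptions for illustration; the key in particular must come from a secrets store, never from source code.

```python
import hashlib
import hmac

# Hypothetical key; in practice load it from a secrets manager.
MASKING_KEY = b"replace-with-a-secret-from-your-vault"


def pseudonymize(value, prefix):
    """Map a value to a stable token; equal inputs yield equal tokens."""
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}_{digest[:12]}"


customers = [{"customer_id": "C-1001", "name": "Ada Lovelace", "phone": "+44 20 7946 0000"}]
orders = [
    {"order_id": "O-1", "customer_id": "C-1001"},
    {"order_id": "O-2", "customer_id": "C-1001"},
]

masked_customers = [
    {
        "customer_id": pseudonymize(c["customer_id"], "cust"),
        "name": pseudonymize(c["name"], "name"),
        "phone": "REDACTED",
    }
    for c in customers
]
masked_orders = [
    {"order_id": o["order_id"], "customer_id": pseudonymize(o["customer_id"], "cust")}
    for o in orders
]

print(masked_customers)
print(masked_orders)
```

Because the customer key is masked the same way in both tables, the customer-to-order relationship survives and referential integrity checks can still run on the masked set.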

Documenting Test Results

Thorough documentation of test results is essential for tracking progress, identifying issues, and ensuring accountability. A structured approach to documenting test results enhances transparency and supports effective remediation.

- Test Case Identification: Assign a unique identifier to each test case for easy reference and tracking. This could be a sequential number or a more descriptive code based on the test objective.

- Test Case Description: Provide a detailed description of each test case, including the objective, input data, and the steps involved in executing the test. This ensures that the test case is clear and understandable to all stakeholders.

- Expected Outcome: Specify the expected results of each test case. This should include the expected data values, data relationships, and any other criteria that must be met for the test to be successful.

- Actual Outcome: Record the actual results of each test case, including the data values, data relationships, and any deviations from the expected outcome. This is critical for identifying and analyzing any issues that may have occurred during the testing process.

- Status: Indicate the status of each test case (e.g., passed, failed, blocked, not run). This helps track the progress of the testing process and identify any areas that require further attention.

- Error Details: If a test case fails, provide detailed information about the error, including the error message, the affected data, and any other relevant information. This facilitates the diagnosis and resolution of any issues.

- Resolution: Document the steps taken to resolve any issues identified during the testing process. This includes the actions taken to correct the data, update the system, or modify the test case.

- Sign-off: Include a sign-off section to indicate that the test results have been reviewed and approved. This ensures that the testing process is complete and that the data migration is ready for deployment.

Example of Test Result Documentation:

Consider a table to document the test results for a customer address validation:

| Test Case ID | Description | Input Data | Expected Outcome | Actual Outcome | Status | Error Details | Resolution |

|---|---|---|---|---|---|---|---|

| TC001 | Validate customer address | Customer record with valid address | Address migrated correctly | Address migrated correctly | Pass | N/A | N/A |

| TC002 | Validate customer address with missing street | Customer record with missing street | Address migrated with null street | Address migrated with null street | Pass | N/A | N/A |

| TC003 | Validate customer address with invalid zip code | Customer record with invalid zip code | Record rejected or flagged for review | Record flagged for review | Pass | Zip code format error | Address corrected |
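If test results are captured programmatically, the same columns can be modeled as a small record type that derives the status from the expected and actual outcomes. This is only a sketch: the `ResultRecord` class, the `to_csv` helper, and the failing case TC004 are hypothetical additions for illustration, and the Input Data column is omitted for brevity.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class ResultRecord:
    case_id: str
    description: str
    accepted_outcomes: tuple  # every outcome that counts as a pass
    actual_outcome: str
    error_details: str = "N/A"
    resolution: str = "N/A"

    @property
    def status(self):
        return "Pass" if self.actual_outcome in self.accepted_outcomes else "Fail"


def to_csv(records):
    """Render the records as CSV text with the documentation columns."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["Test Case ID", "Description", "Expected Outcome",
                     "Actual Outcome", "Status", "Error Details", "Resolution"])
    for r in records:
        writer.writerow([r.case_id, r.description, " or ".join(r.accepted_outcomes),
                         r.actual_outcome, r.status, r.error_details, r.resolution])
    return buffer.getvalue()


records = [
    ResultRecord("TC001", "Validate customer address",
                 ("Address migrated correctly",), "Address migrated correctly"),
    ResultRecord("TC003", "Validate customer address with invalid zip code",
                 ("Record rejected", "Record flagged for review"),
                 "Record flagged for review",
                 error_details="Zip code format error", resolution="Address corrected"),
    # Hypothetical failing case, added to show how a failure is recorded.
    ResultRecord("TC004", "Validate customer address with long street name",
                 ("Address migrated correctly",), "Street truncated",
                 error_details="Value truncated in target column"),
]

report_csv = to_csv(records)
print(report_csv)
```

Deriving the status instead of typing it by hand avoids reports where the recorded status disagrees with the recorded outcomes.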

Compliance and Regulatory Requirements

Migrating data to the cloud necessitates meticulous attention to compliance and regulatory requirements. These requirements, varying by industry and jurisdiction, dictate how data must be handled, stored, and protected to ensure its integrity. Failure to comply can result in significant legal, financial, and reputational damage. This section details the relevant compliance standards, strategies for achieving compliance, and methods for auditing data integrity within a cloud environment.

Identifying Relevant Compliance and Regulatory Requirements

The landscape of data compliance is complex and geographically diverse. Identifying the specific requirements relevant to a particular cloud migration project is the first and most critical step. This involves a thorough assessment of the industry, the geographical locations of data processing and storage, and the types of data being migrated.

- General Data Protection Regulation (GDPR): GDPR, applicable to organizations processing the personal data of individuals within the European Union (EU), mandates stringent requirements for data integrity, security, and access control. It emphasizes the principles of data minimization, purpose limitation, and accuracy. Compliance necessitates robust data validation procedures, secure storage, and transparent data processing practices.

- California Consumer Privacy Act (CCPA) / California Privacy Rights Act (CPRA): These regulations, focused on consumer data privacy in California, establish rights for consumers regarding their personal information. They mandate that businesses maintain accurate data, provide data access and deletion rights, and implement security measures to protect data integrity.

- Health Insurance Portability and Accountability Act (HIPAA): HIPAA regulates the protection of protected health information (PHI) in the healthcare industry in the United States. Compliance requires rigorous data security measures, including encryption, access controls, and audit trails, to ensure the integrity and confidentiality of PHI stored in the cloud.

- Payment Card Industry Data Security Standard (PCI DSS): PCI DSS applies to organizations that handle credit card information. It sets requirements for secure data storage, transmission, and processing, including measures to prevent data breaches and ensure the integrity of cardholder data. Cloud environments must meet these standards to process and store credit card information securely.

- Federal Information Security Management Act (FISMA): FISMA, applicable to U.S. federal agencies and updated in 2014 as the Federal Information Security Modernization Act, mandates a comprehensive framework for securing information systems. It requires agencies to implement security controls, conduct risk assessments, and establish incident response plans to maintain data integrity and confidentiality.

- Industry-Specific Regulations: Numerous other industry-specific regulations exist, such as those governing financial institutions (e.g., SOX, Basel III), energy companies, and other sectors. These regulations often include requirements for data integrity, auditability, and data retention.

Ensuring Data Integrity Meets Compliance Standards

Achieving and maintaining data integrity within a cloud environment to meet compliance standards requires a multi-faceted approach. This involves implementing technical controls, establishing robust data governance policies, and fostering a culture of data security and compliance.

- Data Encryption: Implement encryption both in transit and at rest to protect data from unauthorized access and modification. Encryption algorithms like AES-256 are commonly used for strong data protection.

- Access Controls: Implement strict access controls, including role-based access control (RBAC) and multi-factor authentication (MFA), to limit access to data based on the principle of least privilege. This prevents unauthorized users from modifying or deleting data.

- Data Validation: Implement comprehensive data validation procedures during data migration and ongoing data processing. This includes data type validation, range checks, and format validation to ensure data accuracy and consistency.

- Data Loss Prevention (DLP): Employ DLP solutions to monitor and prevent sensitive data from leaving the organization’s control. This helps to prevent data breaches and ensure data integrity.

- Audit Trails: Implement detailed audit trails that track all data access, modifications, and deletions. These audit trails are essential for detecting and investigating data integrity issues and for demonstrating compliance with regulatory requirements.

- Data Backup and Recovery: Implement robust data backup and recovery procedures to ensure that data can be restored in the event of data loss or corruption. This includes regular backups, offsite storage, and disaster recovery plans.

- Data Governance Policies: Establish clear data governance policies that define data ownership, data quality standards, data retention policies, and data access procedures. These policies provide a framework for managing data integrity and ensuring compliance.

- Regular Security Assessments: Conduct regular security assessments, including vulnerability scans and penetration testing, to identify and address potential security vulnerabilities that could compromise data integrity.

Strategies for Auditing Data Integrity to Meet Regulatory Needs

Auditing data integrity is a critical component of demonstrating compliance with regulatory requirements. A well-defined audit strategy should encompass regular reviews of data management practices, security controls, and data integrity procedures.

- Audit Planning: Define the scope, objectives, and methodology of the audit. This includes identifying the specific compliance requirements to be assessed and the data sources to be reviewed.

- Risk Assessment: Conduct a risk assessment to identify potential threats and vulnerabilities to data integrity. This helps to prioritize audit activities and allocate resources effectively.

- Data Sampling: Employ data sampling techniques to select a representative sample of data for review. This allows auditors to assess data integrity without reviewing the entire dataset.

- Data Verification: Verify data integrity by comparing data across different systems, validating data against business rules, and reviewing audit logs. This includes checking for data discrepancies, errors, and inconsistencies.

- Control Testing: Test the effectiveness of security controls and data management procedures. This includes verifying that access controls are properly implemented, data encryption is functioning correctly, and backup and recovery procedures are in place.

- Documentation Review: Review documentation, such as data governance policies, data migration plans, and security policies, to ensure that they are aligned with compliance requirements and that they are being followed.

- Reporting and Remediation: Prepare a comprehensive audit report that summarizes the findings, identifies any non-compliance issues, and recommends corrective actions. Implement the recommended corrective actions and track their progress.

- Continuous Monitoring: Implement continuous monitoring tools and processes to monitor data integrity on an ongoing basis. This includes automated data validation checks, real-time alerts for data anomalies, and regular reviews of audit logs.
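The Data Sampling and Data Verification steps above can be sketched roughly as follows. This example assumes both systems can be read into dictionaries keyed by primary key, which is a simplification; the function names are hypothetical.

```python
import random


def sample_keys(keys, sample_size, seed=None):
    """Draw a reproducible simple random sample of primary keys."""
    ordered = sorted(keys)  # sort first so the same seed gives the same sample
    rng = random.Random(seed)
    return rng.sample(ordered, min(sample_size, len(ordered)))


def compare_sample(source, target, keys):
    """Compare sampled records field by field.

    source and target map primary key -> dict of field values.
    keys are expected to come from the source system.
    Returns tuples of (key, field_or_reason, source_value, target_value).
    """
    findings = []
    for key in keys:
        src = source[key]
        tgt = target.get(key)
        if tgt is None:
            findings.append((key, "missing in target", None, None))
            continue
        for field in sorted(set(src) | set(tgt)):
            if src.get(field) != tgt.get(field):
                findings.append((key, field, src.get(field), tgt.get(field)))
    return findings
```

Recording the seed in the audit report makes the sample reproducible, so a reviewer can re-run the same comparison later.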

Structured Approach to Documenting Compliance Activities

Maintaining detailed documentation of compliance activities is crucial for demonstrating adherence to regulatory requirements. A structured approach to documenting these activities, including the following sections, provides a clear and organized record of compliance efforts.

| Requirement | Implementation | Verification | Evidence |
|---|---|---|---|
| Specify the specific compliance requirement, e.g., GDPR Article 32: Security of Processing. | Describe the specific steps taken to meet the requirement, e.g., implementation of encryption at rest using AES-256. | Detail how the implementation was verified, e.g., security testing, review of configuration settings, and review of data encryption status reports. | Provide the supporting documentation, e.g., security assessment reports, configuration screenshots, and data encryption status reports. |
| Example: HIPAA Security Rule – Implementation of access controls. | Example: Implemented Role-Based Access Control (RBAC) with multi-factor authentication (MFA). Defined roles and permissions to ensure least privilege access. | Example: Verified through access control audits, reviewing user access logs, and testing access permissions. | Example: Access control policy, audit logs showing user access, screenshots of RBAC configuration. |
| Example: PCI DSS Requirement 3: Protect stored cardholder data. | Example: Encrypted all stored cardholder data using strong cryptography (e.g., AES-256). Implemented key management procedures. | Example: Verified through vulnerability scans, penetration testing, and key management audits. | Example: Vulnerability scan reports, penetration test reports, key management policy. |

Disaster Recovery and Data Integrity

Data integrity must be preserved not only during normal operations after a cloud migration but also when a disaster strikes. Robust disaster recovery (DR) plans mitigate that risk and support business continuity: they are designed to minimize downtime and data loss, thereby safeguarding the integrity of the migrated data assets.

Role of Disaster Recovery Plans in Maintaining Data Integrity

A well-defined DR plan acts as a safeguard against data corruption or loss due to unforeseen events such as natural disasters, cyberattacks, or hardware failures. The primary function of a DR plan is to ensure the rapid recovery of data and systems to a pre-defined, consistent state. This involves several key components.

- Data Backup and Replication: Regularly backing up data and replicating it to a geographically diverse location is a cornerstone of DR. This ensures that a secondary copy of the data is available if the primary site becomes unavailable.

- Recovery Point Objective (RPO): The RPO defines the maximum acceptable data loss in the event of a disaster, expressed as a span of time. A lower RPO (e.g., minutes) requires more frequent backups and potentially real-time data replication, which increases cost and complexity.

- Recovery Time Objective (RTO): The RTO defines the maximum acceptable downtime. A shorter RTO necessitates automated recovery processes and failover mechanisms.

- Testing and Validation: Regular testing of DR plans is crucial to verify their effectiveness and identify any weaknesses. This includes simulating disaster scenarios and validating the data recovery process.

- Documentation and Training: Comprehensive documentation of the DR plan, including roles, responsibilities, and procedures, is essential. Training personnel on DR procedures ensures they can effectively execute the plan when needed.
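To make the RPO and RTO definitions above concrete, here is a small sketch that checks a backup schedule and a measured recovery against both targets. It assumes the worst-case data loss equals the largest gap between consecutive backups and ignores how long each backup takes to complete; the function names are hypothetical.

```python
from datetime import datetime, timedelta


def worst_case_data_loss(backup_times):
    """Largest gap between consecutive backups.

    A failure just before the next backup would lose this much work.
    """
    ordered = sorted(backup_times)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    return max(gaps, default=timedelta(0))


def meets_objectives(backup_times, outage_start, service_restored, rpo, rto):
    """Check an observed schedule and a measured recovery against RPO and RTO."""
    return {
        "rpo_met": worst_case_data_loss(backup_times) <= rpo,
        "rto_met": (service_restored - outage_start) <= rto,
    }


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1)
    backups = [t0, t0 + timedelta(hours=1), t0 + timedelta(hours=3)]
    print(meets_objectives(
        backups,
        outage_start=t0 + timedelta(hours=4),
        service_restored=t0 + timedelta(hours=4, minutes=30),
        rpo=timedelta(hours=1),
        rto=timedelta(hours=1),
    ))
```

In this example the two-hour gap between the second and third backup exceeds a one-hour RPO, while the 30-minute recovery fits within a one-hour RTO.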

Data Backup and Recovery Strategies

Effective data backup and recovery strategies are the core of any DR plan, ensuring data integrity in the face of adversity. The choice of strategy depends on factors like RPO, RTO, budget, and the criticality of the data.

- Full Backups: A full backup copies all data to a separate storage location. While providing the simplest recovery process, full backups are time-consuming and require significant storage space.

- Incremental Backups: Incremental backups only copy data that has changed since the last backup (full or incremental). This reduces backup time and storage requirements but increases the complexity of the recovery process: the last full backup must be restored first, followed by every subsequent incremental backup in order.

- Differential Backups: Differential backups copy data that has changed since the last full backup. Recovery is faster than with incremental backups, requiring only the full backup and the latest differential backup, but backup times and storage space increase over time.

- Replication: Data replication involves creating a real-time or near real-time copy of data at a secondary site. This minimizes both data loss and recovery time. Various replication methods exist, including synchronous and asynchronous replication. Synchronous replication ensures data consistency by acknowledging a write only after it has been committed at both the primary and secondary sites, at the cost of added write latency. Asynchronous replication acknowledges the write at the primary site first and copies it to the secondary site after a short delay, so the most recent writes can be lost if the primary fails.

- Cloud-Based Backups: Utilizing cloud-based backup solutions offers several advantages, including offsite storage, scalability, and cost-effectiveness. These solutions often provide automated backup and recovery capabilities. Common storage targets for such backups include Amazon S3, Azure Blob Storage, and Google Cloud Storage.

- Immutable Backups: Immutable backups are a crucial part of data integrity protection, as they are designed to be unchangeable. Once a backup is created, it cannot be modified or deleted until its retention period expires, protecting against data corruption or malicious attacks like ransomware.
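The difference in recovery effort between incremental and differential backups, described in the list above, can be illustrated with a short sketch that works out which backups must be restored and in what order. The `Backup` type and the integer `taken_at` ordering value are assumptions for illustration; the logic also assumes that, when differentials exist, the latest one is applied before any later incrementals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backup:
    name: str
    kind: str      # "full", "incremental", or "differential"
    taken_at: int  # hypothetical ordering value, e.g. a day number


def restore_chain(backups, restore_to):
    """Return the backups to restore, in order, to reach the state at restore_to."""
    usable = sorted(
        (b for b in backups if b.taken_at <= restore_to),
        key=lambda b: b.taken_at,
    )
    fulls = [b for b in usable if b.kind == "full"]
    if not fulls:
        raise ValueError("no full backup at or before the restore point")

    base = fulls[-1]  # every chain starts from the most recent full backup
    later = [b for b in usable if b.taken_at > base.taken_at]
    chain = [base]

    diffs = [b for b in later if b.kind == "differential"]
    if diffs:
        # A differential holds all changes since the full backup,
        # so only the most recent one is needed.
        chain.append(diffs[-1])
        later = [b for b in later if b.taken_at > diffs[-1].taken_at]

    # Remaining incrementals must all be applied, oldest first.
    chain.extend(b for b in later if b.kind == "incremental")
    return chain
```

With a Sunday full backup and daily incrementals, restoring to Tuesday yields a chain of three backups, while the same schedule with differentials yields only two.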

Testing Disaster Recovery Procedures to Validate Data Integrity

Regular testing of DR procedures is a crucial step in validating data integrity. Testing helps to identify weaknesses in the DR plan and ensures that recovery processes function as expected. The frequency and type of testing should align with the criticality of the data and the defined RPO and RTO.

- Tabletop Exercises: These exercises involve a discussion-based review of the DR plan, including scenarios and recovery procedures. They are useful for identifying gaps in the plan and ensuring that personnel understand their roles and responsibilities.

- Failover Testing: Failover testing simulates a system outage and verifies the ability to switch to the secondary site. This tests the automated failover mechanisms and validates data recovery.

- Full System Recovery Tests: These tests involve restoring data and applications to a test environment, simulating a complete recovery process. They provide a comprehensive validation of the DR plan, including data integrity checks.

- Data Integrity Checks During Testing: During DR testing, it is crucial to verify the integrity of the recovered data. This can involve comparing data from the recovered environment with data from the primary environment or using checksums and hash values to detect data corruption.

- Documentation and Reporting: Thoroughly documenting the testing process, including the steps taken, the results obtained, and any issues encountered, is essential. The report should include recommendations for improvements to the DR plan.
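One way to carry out the checksum-based integrity check mentioned above is to record a SHA-256 manifest of the primary environment and compare the recovered files against it. The sketch below assumes file-based data and uses hypothetical function names; database contents would need a different approach, such as row-level hashing.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Stream a file through SHA-256 so large files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root):
    """Map each file path (relative to root) to its SHA-256 hex digest."""
    root = Path(root)
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_recovery(manifest, recovered_root):
    """Compare a recovered directory tree against a previously captured manifest."""
    actual = build_manifest(recovered_root)
    shared = manifest.keys() & actual.keys()
    return {
        "missing": sorted(set(manifest) - set(actual)),
        "unexpected": sorted(set(actual) - set(manifest)),
        "corrupted": sorted(k for k in shared if manifest[k] != actual[k]),
    }
```

The manifest should be captured before the DR test and stored separately from the backups, so that a corrupted backup cannot also corrupt its own reference hashes.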

Data Recovery Process Flowchart

The following flowchart illustrates a simplified data recovery process, incorporating key decision points and steps.

1. Disaster Declared

2. Assess the Situation: type of disaster, impacted systems, data loss assessment. The "Yes" branch leads to step 3; the "No" branch leads to step 4.

3. Initiate Failover/Recovery: activate secondary site, restore from backup, initiate replication (if applicable).

4. Implement Mitigation Strategies: manual recovery, data repair.

5. Data Validation and Integrity Checks: checksums/hashes, data comparison, application testing. Decision point: data integrity validated? If yes, continue to step 6; if no, continue to step 7.

6. System Operational: verify functionality, resume business operations.

7. Data Repair/Re-recovery: data corruption investigation, restore from alternate source, repeat validation process.

8. Post-Recovery Activities: incident report, root cause analysis, DR plan updates.

The flowchart depicts a decision-making process, beginning with the declaration of a disaster. It outlines steps such as assessing the situation, initiating failover or recovery, and implementing mitigation strategies. Data validation and integrity checks are crucial, and if the data is not valid, the process loops back for data repair or re-recovery. Post-recovery activities, including incident reporting and DR plan updates, are essential for continuous improvement.

This structured approach ensures a methodical and effective data recovery process, thereby maintaining data integrity.
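The validation loop in steps 5 to 7 of the flow above could be sketched as follows. The `restore` and `validate` callables are hypothetical hooks standing in for whatever DR tooling and integrity checks an organization actually uses.

```python
def recover_with_validation(sources, restore, validate):
    """Try each recovery source in order until restored data passes validation.

    sources  -- ordered recovery options, e.g. ["replica", "latest-backup"]
    restore  -- callable(source) returning the restored data (hypothetical hook)
    validate -- callable(data) returning True when integrity checks pass
    """
    attempts = []
    for source in sources:
        data = restore(source)
        ok = validate(data)
        attempts.append((source, ok))
        if ok:
            # Step 6: integrity confirmed, the system can return to operation.
            return {"recovered_from": source, "data": data, "attempts": attempts}
        # Step 7: validation failed, so move on to the next alternate source.
    raise RuntimeError(f"no recovery source passed validation: {attempts}")
```

The returned list of attempts can feed directly into the incident report produced during post-recovery activities.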

Tools and Technologies for Data Integrity Validation

The cloud environment necessitates a robust suite of tools and technologies for ensuring data integrity. These tools facilitate the verification of data consistency, accuracy, and completeness throughout the data migration and operational phases. Selecting the appropriate tools is crucial for minimizing data loss, preventing corruption, and maintaining regulatory compliance.

Key Tools and Technologies

Data integrity validation in the cloud leverages a variety of tools categorized by their function. These tools perform tasks such as data comparison, validation, monitoring, and reporting. They are often integrated into automated workflows to streamline the integrity verification process.

Data Comparison and Validation Tools

Data comparison tools are essential for verifying the consistency of data between source and target systems. Validation tools, on the other hand, assess the data’s adherence to predefined rules and constraints.

- Data Comparison Tools: These tools compare datasets across different systems or databases. They identify discrepancies, such as missing records, incorrect values, or data inconsistencies.

- Example: AWS DataSync. AWS DataSync is a data transfer service that simplifies, automates, and accelerates moving data between on-premises storage and Amazon S3, Amazon EFS, Amazon FSx for Windows File Server, or other AWS storage services. It includes built-in data validation capabilities, such as checksum verification, to ensure data integrity during transfers.

- Example: Azure Data Factory. Azure Data Factory (ADF) is a cloud-based data integration service that allows you to create data pipelines. ADF can be used to compare data between on-premises and cloud data stores using various activities, such as the “Copy activity” and “Data flow” activity, which support data validation and transformation.

- Functionality: Compare data at a record or field level, identify differences, and generate reports on discrepancies.

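- Technologies: Utilize hashing algorithms (e.g., MD5, SHA-256) for checksum verification, metadata comparison (e.g., timestamps, file sizes), and data profiling. MD5 is adequate for detecting accidental corruption, but it is not collision-resistant, so SHA-256 is preferable where deliberate tampering is a concern.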

- Example: SQL queries and stored procedures. Custom SQL queries and stored procedures can be developed to validate data against specific business rules. For example, a stored procedure can verify that all email addresses are valid, or that values fall within an acceptable range.
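As a rough illustration of SQL-based rule validation, the sketch below runs two rule queries through Python's built-in `sqlite3` module. The `customers` table, its columns, the age bounds, and the loose `LIKE` pattern for email addresses are all assumptions; a production system would run equivalent queries or stored procedures in its own database engine.

```python
import sqlite3

# Each query returns the ids of rows that VIOLATE a rule;
# an empty result means the rule holds. Table and column names are hypothetical.
RULES = {
    "email_format": (
        "SELECT id FROM customers "
        "WHERE email IS NULL OR email NOT LIKE '%_@_%._%' ORDER BY id"
    ),
    "age_range": (
        "SELECT id FROM customers "
        "WHERE age IS NULL OR age NOT BETWEEN 0 AND 130 ORDER BY id"
    ),
}


def run_rules(conn, rules=RULES):
    """Run every rule query and return {rule_name: [violating ids]}."""
    return {
        name: [row[0] for row in conn.execute(sql)]
        for name, sql in rules.items()
    }
```

The explicit `IS NULL` checks matter because a comparison against NULL evaluates to NULL in SQL, which would otherwise let rows with missing values slip through unreported.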