Transforming raw log data into actionable insights is a critical endeavor for modern businesses. This exploration looks at treating logs as event streams, a shift in perspective that enables real-time monitoring, proactive issue resolution, and data-driven decision-making. We’ll walk through the process, from data ingestion and parsing to advanced analytics and security considerations, offering a practical roadmap for getting the most out of your log data.

This guide focuses on “how to treat logs as event streams” (factor XI of the Twelve-Factor App methodology) and works through the complexities of processing log data. It covers data ingestion, parsing, stream processing, enrichment, real-time monitoring, anomaly detection, data storage, security, and real-world applications. Each section offers practical insights and actionable strategies for building robust and efficient log management systems.

Defining Event Streams in the Context of Logs

Viewing logs as event streams transforms static data into a dynamic flow of information, enabling real-time analysis and proactive responses. This approach unlocks significant advantages for monitoring, troubleshooting, and security. Understanding the core characteristics of event streams derived from logs is crucial for effectively leveraging this powerful technique.

Core Characteristics of Event Streams from Log Data

Event streams derived from log data possess distinct characteristics that differentiate them from static log files. These characteristics are fundamental to the real-time processing and analysis that event streams enable.

- Timestamped Events: Each entry, or event, within the stream is associated with a specific timestamp. This temporal context is crucial for understanding the sequence of events, identifying trends, and correlating events across different sources. The accuracy of the timestamp is paramount for effective analysis.

- Ordered Sequence: Events are typically ordered chronologically, reflecting the order in which they occurred. This order is critical for reconstructing the history of system behavior and understanding the cause-and-effect relationships between events.

- Immutable Nature: Once an event is recorded in the stream, it is generally considered immutable. This immutability ensures data integrity and allows for consistent analysis over time. Modifications or deletions are typically avoided to preserve the historical record.

- Continuous Flow: Event streams are designed to be a continuous flow of data. New events are constantly added to the stream, providing a real-time view of the system’s activity. This continuous nature is essential for monitoring and responding to events as they happen.

- Structured or Semi-structured Data: While log data can vary in format, event streams often benefit from structured or semi-structured formats, such as JSON or key-value pairs. This structure simplifies parsing, analysis, and correlation of events.
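To make these characteristics concrete, here is a minimal Python sketch of a log event record. A frozen dataclass gives immutability, an explicit timestamp supports chronological ordering, and the payload is parsed from key-value pairs. The `LogEvent` name, the `ts=` key, and the space-separated `key=value` layout are assumptions for illustration, not a standard format.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Tuple


@dataclass(frozen=True)
class LogEvent:
    """An immutable, timestamped, structured event (hypothetical shape)."""
    timestamp: datetime
    source: str
    # A tuple of (key, value) pairs keeps the payload read-only and hashable.
    fields: Tuple[Tuple[str, str], ...]

    def get(self, key: str) -> str:
        return dict(self.fields)[key]


def from_key_value(line: str, source: str) -> LogEvent:
    """Parse 'ts=<ISO 8601> key=value ...' into a LogEvent.

    Assumes every token contains '=' and values contain no spaces.
    """
    pairs: Dict[str, str] = dict(token.split("=", 1) for token in line.split())
    ts = datetime.fromisoformat(pairs.pop("ts"))
    return LogEvent(timestamp=ts, source=source, fields=tuple(sorted(pairs.items())))
```

Events from several sources can then be put into order with `sorted(events, key=lambda e: e.timestamp)`, and any attempt to modify an event after creation raises an error.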

Examples of Log Types as Event Streams

Various types of logs can be effectively treated as event streams, each providing valuable insights into different aspects of a system’s operation. These include application logs, system logs, and security logs.

- Application Logs: These logs record events related to the operation of specific software applications. They often contain information about user actions, errors, performance metrics, and internal application states. Treating application logs as event streams enables real-time monitoring of application health, performance optimization, and rapid troubleshooting of issues. For example, a web server’s access logs, containing information about each HTTP request, can be streamed to identify slow-loading pages or unusual traffic patterns.

- System Logs: System logs, often managed by the operating system, provide information about the underlying hardware and software. They include events such as system startup and shutdown, hardware errors, and resource usage. Analyzing system logs as event streams allows for proactive identification of hardware failures, resource bottlenecks, and security vulnerabilities. For instance, monitoring system logs for high CPU utilization can alert administrators to potential performance issues.

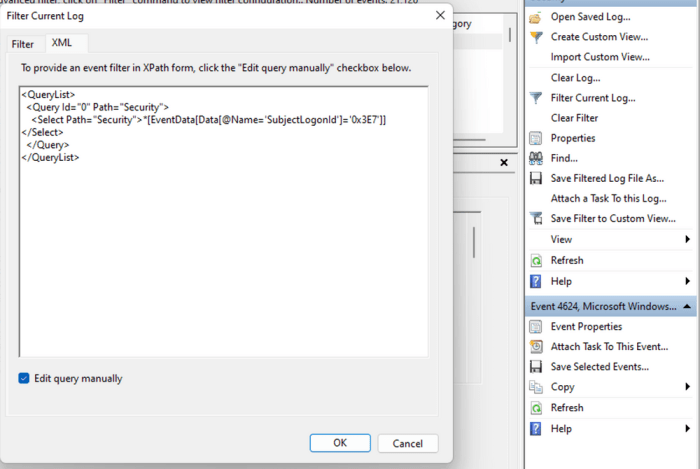

- Security Logs: Security logs track events related to security-relevant activities, such as authentication attempts, access control changes, and suspicious network traffic. Treating security logs as event streams is crucial for detecting and responding to security threats in real-time. Analyzing security logs for failed login attempts or unauthorized access attempts can help identify and mitigate potential security breaches. A firewall log, detailing network traffic and associated security events, is a prime example of a security log suitable for event stream processing.

Benefits of Viewing Logs as Event Streams

Viewing logs as event streams offers several significant advantages over treating them as static text files. These benefits translate into improved operational efficiency, enhanced security, and better decision-making capabilities.

- Real-time Monitoring and Alerting: Event streams enable real-time monitoring of system behavior. By processing events as they occur, organizations can set up alerts to be triggered by specific patterns or anomalies, allowing for immediate responses to critical issues. For example, detecting a sudden spike in error messages can trigger an alert, prompting immediate investigation and preventing a potential service outage.

- Proactive Issue Detection and Resolution: Event stream processing allows for the identification of issues before they impact users. By analyzing trends and patterns in real-time, organizations can proactively address performance bottlenecks, security vulnerabilities, and other potential problems. For instance, detecting a gradual increase in database query times can prompt investigation and optimization before users experience slowdowns.

- Enhanced Security and Threat Detection: Treating logs as event streams significantly enhances security posture. Real-time analysis of security logs enables the rapid detection of suspicious activities, such as unauthorized access attempts or malicious network traffic. This allows for prompt incident response and mitigation of security threats. The ability to correlate events from multiple sources in real-time further improves threat detection capabilities.

- Improved Operational Efficiency: Automating log analysis and alerting based on event streams streamlines operational workflows. By automating the detection of issues and triggering appropriate responses, organizations can reduce manual effort and improve the efficiency of their IT operations teams. This automation also reduces the risk of human error.

- Data-Driven Decision Making: Event streams provide a rich source of data for informed decision-making. By analyzing event streams, organizations can gain insights into system performance, user behavior, and business trends. This data can be used to optimize system configurations, improve user experience, and make better strategic decisions. For example, analyzing web server logs can reveal popular content and user navigation patterns, informing content strategy and website design decisions.

Data Ingestion and Collection Methods

Collecting log data effectively is crucial for transforming logs into actionable event streams. The method chosen significantly impacts the efficiency, scalability, and reliability of the entire event processing pipeline. Selecting the appropriate ingestion strategy depends on various factors, including the volume of logs, their format, the sources from which they originate, and the desired level of real-time processing. This section will delve into the common methods for collecting log data and the considerations that drive the selection of the most suitable approach.

Common Log Data Collection Methods

Several established methods exist for ingesting log data. Each offers distinct advantages and disadvantages, making it essential to understand their characteristics to make an informed choice.

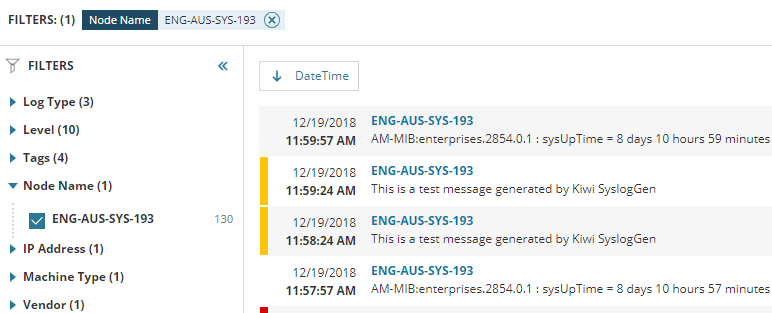

- Syslog: Syslog is a widely used protocol for transmitting log messages over a network. It is often employed by network devices, servers, and applications to send log data to a centralized logging server. Syslog’s flexibility and widespread adoption make it a reliable option for various environments. The protocol defines message formats and priorities, enabling standardized log collection.

- Filebeat: Filebeat, part of the Elastic Stack (formerly known as the ELK Stack), is a lightweight shipper designed to forward log files to Elasticsearch or Logstash. It monitors log files, reads new entries, and ships them to the configured destinations. Filebeat is known for its efficiency and ease of deployment, making it a popular choice for collecting log data from servers and applications.

- Fluentd/Fluent Bit: Fluentd and its lightweight version, Fluent Bit, are open-source data collectors designed for unified logging layers. They support a wide range of input and output plugins, enabling them to collect data from various sources and forward it to various destinations, including databases and cloud services. They are often favored for their flexibility and scalability in diverse environments.

- Custom Scripts: Custom scripts offer the greatest flexibility but require more development effort. These scripts can be written in various programming languages to read log files, parse their content, and send the data to a central logging system or data store. Custom scripts are particularly useful when dealing with custom log formats or specific data processing requirements. For example, a Python script could parse Apache access logs, extract relevant fields (IP address, timestamp, request path), and send them to a Kafka topic.

- Other Agents and Collectors: Several other agents and collectors are available, each with its strengths. For instance, rsyslog is a robust syslog implementation offering advanced features, while Splunk Universal Forwarder is a proprietary agent optimized for Splunk’s platform. The selection depends on the specific requirements of the environment and the chosen logging platform.
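The custom-script approach can be sketched in Python using only the standard library. The sketch below parses Apache access log lines in Common Log Format (plus the two extra Combined Log Format fields when they are present), converts each match to JSON, and hands it to a `send` callable. The regular expression, the field names, and the `send` callable standing in for a Kafka producer are assumptions for illustration.

```python
import json
import re
from typing import Callable, Iterable, Optional

# Common Log Format, with the Combined Log Format's referer and
# user-agent fields made optional (an assumed, simplified pattern).
APACHE_LINE = re.compile(
    r'(?P<ip>\S+) \S+ (?P<user>\S+) \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
    r'(?: "(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)")?'
)


def parse_apache_line(line: str) -> Optional[dict]:
    """Return the extracted fields, or None if the line does not match."""
    match = APACHE_LINE.match(line)
    if match is None:
        return None
    # Drop optional groups that did not participate in the match.
    event = {k: v for k, v in match.groupdict().items() if v is not None}
    event["status"] = int(event["status"])
    event["size"] = 0 if event["size"] == "-" else int(event["size"])
    return event


def ship(lines: Iterable[str], send: Callable[[bytes], None]) -> int:
    """Parse each line and pass the JSON-encoded event to `send`.

    Unparseable lines are skipped. Returns the number of events shipped.
    """
    shipped = 0
    for line in lines:
        event = parse_apache_line(line.rstrip("\n"))
        if event is not None:
            send(json.dumps(event).encode("utf-8"))
            shipped += 1
    return shipped
```

To publish to Kafka, `send` could wrap a client library such as kafka-python, for example `producer = KafkaProducer(bootstrap_servers="localhost:9092")` with `send=lambda payload: producer.send("apache-access", payload)`; the broker address and topic name are placeholders. Keeping the transport behind a callable also lets the parser be tested without a running broker.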

Considerations for Choosing an Ingestion Method

The optimal log ingestion method depends on a combination of factors, including the volume of logs generated, the format of the log data, the sources from which the logs originate, and the desired level of real-time processing.

- Log Volume: High-volume environments necessitate scalable and efficient collection methods. Systems like Filebeat, Fluentd/Fluent Bit, or dedicated logging appliances are often preferred in these cases, as they are designed to handle large data volumes. Conversely, for low-volume environments, custom scripts or simpler agents might suffice. For example, a large e-commerce platform might generate terabytes of logs daily, requiring a robust solution, while a small business website might generate only megabytes.

- Log Format: The structure of the log data significantly influences the choice of ingestion method. If logs are in a standardized format (e.g., JSON, Common Log Format), tools like Filebeat or Fluentd can often parse them directly. Custom formats may require custom parsing logic, potentially necessitating the use of custom scripts or specialized parsing capabilities within the chosen agent.

- Log Source: The source of the logs determines the available collection options. For network devices, Syslog is a common choice. For application logs on servers, Filebeat or Fluentd/Fluent Bit are often suitable. Custom scripts are helpful when dealing with logs from custom applications or legacy systems.

- Real-time Processing Requirements: If real-time processing is essential, the ingestion method must support low-latency data transfer. Tools like Filebeat and Fluentd/Fluent Bit are generally well-suited for real-time scenarios. Batch-oriented approaches may be acceptable if real-time processing is not a priority.

- Centralized Logging Platform: The choice of a centralized logging platform (e.g., Elasticsearch, Splunk, Graylog) can influence the selection of the ingestion method. Some platforms offer their own agents, which are optimized for their data ingestion and processing capabilities.

Data Flow Diagram: Web Server Access Log Ingestion

The following steps trace the data flow for ingesting web server access logs using Filebeat, Logstash, and Elasticsearch.

- Web Server (e.g., Apache, Nginx): The web server generates access logs in a specific format (e.g., Common Log Format or Combined Log Format).

- Filebeat: Filebeat runs on the web server and monitors the access log files.

- Filebeat – Shipping: Filebeat reads new log entries from the access log files and ships them to Logstash.

- Logstash: Logstash receives the log data from Filebeat.

- Logstash – Parsing and Filtering: Logstash parses the log data, extracts relevant fields (e.g., IP address, timestamp, request method, URL), and applies any necessary filtering or transformations.

- Logstash – Indexing: Logstash indexes the processed log data into Elasticsearch.

- Elasticsearch: Elasticsearch stores the indexed log data, making it searchable and analyzable.

- Visualization/Analysis Tools (e.g., Kibana): Tools like Kibana connect to Elasticsearch and allow users to visualize and analyze the log data, creating dashboards and reports.
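The shipping step depends on the agent remembering how far it has read in each file, so that only new entries are sent. The minimal Python sketch below illustrates that idea with a saved byte offset. It is not how Filebeat is implemented (Filebeat keeps a registry of file states and also tracks files across renames and rotation), and the function name `read_new_lines` is hypothetical.

```python
import os
from typing import List, Tuple


def read_new_lines(path: str, offset: int) -> Tuple[List[str], int]:
    """Return complete lines appended since `offset`, plus the new offset.

    A trailing partial line (no newline yet) is left for the next call,
    so a half-written entry is never shipped.
    """
    if os.path.getsize(path) < offset:
        # File is smaller than the saved offset: assume it was truncated
        # and start reading from the beginning again.
        offset = 0
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    end = data.rfind(b"\n") + 1  # 0 when no complete line is available
    lines = data[:end].decode("utf-8").splitlines()
    return lines, offset + end
```

Persisting the returned offset (for example, to a small state file) between runs is what lets a restarted shipper resume without resending or skipping entries.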

Log Parsing and Transformation Techniques

Understanding how to effectively manage and analyze event streams hinges on robust log parsing and transformation techniques. These techniques are essential for extracting meaningful insights from raw log data, making it usable for analysis, monitoring, and decision-making. This section will delve into the critical aspects of log parsing and transformation, providing practical examples and a detailed look at common methodologies.

The Importance of Parsing Logs

Parsing logs is the critical first step in extracting valuable information from raw log data. The raw logs themselves are typically unstructured or semi-structured text, making them difficult to analyze directly. Parsing involves breaking down these logs into structured, easily manageable data points. This structured data enables efficient querying, analysis, and reporting. Without effective parsing, the insights contained within the logs remain hidden, limiting the ability to detect issues, understand system behavior, and make informed decisions.

Common Log Formats and Parsing Techniques

Logs are generated in various formats, each requiring specific parsing techniques. The choice of technique depends heavily on the format of the log data.

- JSON (JavaScript Object Notation): JSON is a widely used format because it is both human-readable and machine-parsable.

  - Parsing Technique: JSON logs are relatively straightforward to parse because they are already structured. Tools such as `jq` (a command-line JSON processor), Python’s `json` library, or specialized log management systems can be used.

  - Example: A JSON log entry might look like this:

    `{"timestamp": "2024-10-27T10:00:00Z", "level": "INFO", "message": "User logged in successfully", "user_id": "12345"}`

    Parsing would extract fields like “timestamp”, “level”, “message”, and “user_id” for analysis.

- CSV (Comma-Separated Values): CSV is a simple, text-based format often used for data exchange.

  - Parsing Technique: CSV logs require splitting each line on the comma delimiter and, in some cases, handling quoted fields that themselves contain commas. Libraries such as Python’s `csv` module or dedicated tools can perform this parsing.

  - Example: A CSV log with a header row might look like this:

    `timestamp,level,message,user_id`

    `2024-10-27T10:00:00Z,INFO,User logged in successfully,12345`

    Parsing would split each data line into comma-separated values and map them to the column names in the header.

- Apache Access Logs: Apache access logs follow a specific format, typically the Common Log Format (CLF) or the Combined Log Format, which adds the referrer and user agent.

  - Parsing Technique: Parsing Apache access logs often involves using regular expressions or dedicated log parsing tools like `goaccess`. These tools are designed to handle the specific format of the logs, extracting relevant fields like IP address, timestamp, request method, URL, status code, and user agent.

  - Example: A typical Apache access log entry in Common Log Format might look like this:

    `192.168.1.1 - - [27/Oct/2024:10:00:00 +0000] "GET /index.html HTTP/1.1" 200 1234`

    Parsing would extract fields such as the IP address (192.168.1.1), timestamp, HTTP method (GET), requested URL (/index.html), and HTTP status code (200).
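Because JSON and CSV entries are already structured, Python’s standard library can parse both. The minimal sketch below turns the JSON and CSV examples shown above into the same dictionary shape and converts the timestamp into a timezone-aware `datetime`. The one-JSON-object-per-line layout and the CSV header row are assumptions about how the logs are written.

```python
import csv
import io
import json
from datetime import datetime
from typing import Iterator


def parse_json_lines(text: str) -> Iterator[dict]:
    """Parse one JSON object per line, skipping blank lines."""
    for line in text.splitlines():
        if line.strip():
            yield json.loads(line)


def parse_csv_log(text: str) -> Iterator[dict]:
    """Parse CSV with a header row; DictReader handles quoted fields with commas."""
    yield from csv.DictReader(io.StringIO(text))


def normalize(event: dict) -> dict:
    """Convert the ISO 8601 'timestamp' string into a timezone-aware datetime."""
    # datetime.fromisoformat() rejects a trailing 'Z' before Python 3.11.
    ts = event["timestamp"].replace("Z", "+00:00")
    return {**event, "timestamp": datetime.fromisoformat(ts)}
```

After `normalize`, events from either format can flow through the same downstream filters and aggregations.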

Log Transformation Techniques

Once parsed, log data can be transformed to make it more useful. Various techniques are used to manipulate the parsed data to derive insights.

| Technique | Description | Use Case |
|---|---|---|
| Filtering | Selecting specific log entries based on criteria. This can involve matching values in certain fields (e.g., only including logs with an error level of “ERROR”) or filtering based on time windows. | Identifying critical errors, focusing on specific user activities, or isolating events related to a particular time period. |
| Enrichment | Adding contextual information to log entries. This can involve looking up information from external sources, such as IP address geolocation or user details from a database. | Providing context to log data, such as identifying the geographic location of a user accessing a service or linking a log entry to a specific user profile. For example, enriching an Apache access log with geolocation data to visualize user activity on a map. |
| Aggregation | Summarizing log data to identify trends and patterns. This includes calculating counts, sums, averages, and other statistical measures over a period. | Identifying performance bottlenecks, detecting anomalies, or tracking key metrics, such as the number of errors per minute or the average response time of a service. For instance, aggregating web server access logs to calculate the average response time for a specific URL. |
| Masking/Redaction | Removing or obfuscating sensitive information from logs to protect privacy and comply with regulations. | Protecting Personally Identifiable Information (PII) such as email addresses, credit card numbers, or other sensitive data before storing or sharing logs. |
| Transformation | Modifying existing log fields. This can involve converting data types, changing formats, or creating new fields based on existing ones. | Preparing data for analysis, such as converting timestamps to a standard format, extracting relevant information from complex fields, or calculating new metrics. For instance, converting a timestamp field from a raw string to a datetime object for easier time-series analysis. |
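Several of the techniques in the table can be chained as plain Python functions over parsed events. The sketch below shows transformation (ISO 8601 string to `datetime`), filtering (ERROR entries only), aggregation (errors per minute and mean response time per URL), and masking (e-mail addresses and card-like numbers). The field names `level`, `url`, `response_ms`, and `message` are assumptions, and the regular expressions are deliberately crude: treat them as a starting point, not a compliant redaction scheme.

```python
import re
from collections import defaultdict
from datetime import datetime
from statistics import mean
from typing import Dict, Iterable, List

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Crude: any run of 13-16 digits, optionally separated by spaces or dashes.
# It will also catch other long numbers, such as millisecond timestamps.
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def mask(message: str) -> str:
    """Masking/redaction: replace e-mail addresses and card-like numbers."""
    return CARD.sub("[CARD]", EMAIL.sub("[EMAIL]", message))


def to_datetime(event: dict) -> dict:
    """Transformation: parse the ISO 8601 timestamp string into a datetime."""
    ts = event["timestamp"].replace("Z", "+00:00")
    return {**event, "timestamp": datetime.fromisoformat(ts)}


def only_errors(events: Iterable[dict]) -> List[dict]:
    """Filtering: keep entries whose level is ERROR."""
    return [e for e in events if e["level"] == "ERROR"]


def errors_per_minute(events: Iterable[dict]) -> Dict[datetime, int]:
    """Aggregation: count ERROR entries per one-minute bucket."""
    counts: Dict[datetime, int] = defaultdict(int)
    for e in only_errors(events):
        counts[e["timestamp"].replace(second=0, microsecond=0)] += 1
    return dict(counts)


def avg_response_ms_by_url(events: Iterable[dict]) -> Dict[str, float]:
    """Aggregation: mean response time per URL."""
    samples: Dict[str, List[float]] = defaultdict(list)
    for e in events:
        samples[e["url"]].append(e["response_ms"])
    return {url: mean(values) for url, values in samples.items()}
```

In a streaming setting, masking would typically run as early as possible in the pipeline, so sensitive values never reach storage.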

Stream Processing Technologies and Frameworks

Processing logs as event streams necessitates the utilization of robust stream processing technologies. These technologies enable real-time analysis, transformation, and action based on incoming log data. Several frameworks have emerged as industry leaders, each with its own strengths and weaknesses. This section will delve into three prominent frameworks: Apache Kafka, Apache Flink, and Apache Spark Streaming, comparing and contrasting their features, performance, and suitability for various scenarios.

Popular Stream Processing Frameworks

Understanding the capabilities of different stream processing frameworks is crucial for selecting the right tool for the job. The following frameworks are widely adopted and offer a range of functionalities suitable for diverse log processing requirements.

- Apache Kafka: Initially developed by LinkedIn, Apache Kafka is a distributed streaming platform designed for building real-time data pipelines and streaming applications. It acts as a central nervous system for event streams, providing high throughput, fault tolerance, and scalability. Kafka excels at ingesting, storing, and distributing event streams. It uses a publish-subscribe model, where producers write data to topics, and consumers subscribe to those topics to read the data. Processing on top of Kafka is typically done with the Kafka Streams library or an external engine such as Flink or Spark.

- Apache Flink: Apache Flink is an open-source framework for stateful computations over data streams, and it can also run batch workloads within the same framework. It offers low-latency, high-throughput processing. Flink’s core strengths are complex event processing and exactly-once processing semantics for application state, backed by sophisticated fault tolerance mechanisms based on distributed checkpoints.

- Apache Spark Streaming: Apache Spark Streaming is a component of the Apache Spark ecosystem that enables real-time processing of data streams. It works by dividing the input stream into micro-batches and processing each batch using Spark’s core engine. While Spark Streaming provides a unified framework for batch and stream processing, it generally has higher latency than Flink. It leverages Spark’s in-memory processing capabilities for fast computation and integrates with other Spark components like Spark SQL and MLlib. In current Spark releases, the original DStream-based Spark Streaming API is considered legacy, and Structured Streaming (which also uses micro-batches by default) is the recommended API.
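Kafka’s publish-subscribe model rests on an append-only, ordered log that each consumer group reads at its own offset. The toy class below imitates that idea in memory to show why several consumers can read the same stream independently. `ToyTopic`, `produce`, and `poll` are hypothetical names, not the Kafka client API, and real Kafka adds partitions, replication, retention limits, and configurable offset commits.

```python
from collections import defaultdict
from typing import Dict, List


class ToyTopic:
    """In-memory stand-in for a single Kafka topic partition (illustrative only).

    Records are appended to an ordered log and never modified; each
    consumer group keeps its own offset, so groups read independently.
    """

    def __init__(self) -> None:
        self._log: List[str] = []
        self._offsets: Dict[str, int] = defaultdict(int)

    def produce(self, record: str) -> int:
        """Append a record and return its offset."""
        self._log.append(record)
        return len(self._log) - 1

    def poll(self, group: str, max_records: int = 100) -> List[str]:
        """Return records after the group's offset and advance that offset."""
        start = self._offsets[group]
        batch = self._log[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch
```

For example, an alerting consumer and an archiving consumer can both read every log record, each at its own pace, without interfering with one another.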

Framework Comparison

Choosing the right stream processing framework depends on the specific requirements of the log processing task. A comparative analysis considering features, performance, and ease of use can help in making an informed decision.

| Feature | Apache Kafka | Apache Flink | Apache Spark Streaming |
|---|---|---|---|
| Primary Use Case | Data ingestion and distribution | Complex event processing, low-latency stream processing | Stream processing with batch processing capabilities |
| Processing Model | Publish-subscribe | True stream processing | Micro-batch processing |
| Latency | Low (primarily for data transport) | Low | Medium |
| Throughput | High | High | High |
| Fault Tolerance | Robust (replication, consumer groups) | Excellent (state management, exactly-once processing) | Good (checkpointing) |
| State Management | Limited (primarily at the consumer level) | Advanced (built-in stateful operations) | Basic (checkpointing) |
| Ease of Use | Relatively easy to set up and use for data ingestion | Requires more understanding of stream processing concepts | Easier to use for those familiar with Spark |
| Integration | Excellent integration with other Kafka components (e.g., Kafka Connect) | Seamless integration with various data sources and sinks | Strong integration with the Spark ecosystem |

Scenario Suitability

The optimal framework varies depending on the application. Consider a scenario involving real-time fraud detection in financial transactions.

- Scenario: Real-time fraud detection in financial transactions. The system needs to analyze transaction logs, identify suspicious patterns (e.g., unusual transaction amounts, transactions from unfamiliar locations), and trigger alerts in real time.

- Framework: Apache Flink would be most suitable for this scenario.

- Advantages:

  - Low Latency: Flink’s low-latency processing capabilities are critical for detecting fraud in real time, enabling immediate action to be taken.

  - Stateful Computations: Flink’s ability to maintain state allows the system to track transaction history, user behavior, and other relevant data to identify anomalies and patterns indicative of fraud. For example, the system could track the total amount spent by a user in a day or identify a sudden surge in transactions from a specific IP address.

  - Complex Event Processing (CEP): Flink’s CEP capabilities enable the detection of complex patterns, such as a series of suspicious transactions occurring in a short time frame.

  - Exactly-Once Processing: Flink’s exactly-once semantics ensure that each transaction updates the application’s state exactly once, even after a failure, preventing double-counting and keeping fraud detection accurate. End-to-end exactly-once delivery additionally requires a replayable source (such as Kafka) and a transactional or idempotent sink.
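The stateful checks mentioned in this scenario (a user’s total spend per day, a sudden surge of transactions from one IP address) can be sketched in plain Python as keyed state updated one event at a time. This is not Flink code: the class, field names, and thresholds are hypothetical, and the sketch assumes that events for each IP arrive in timestamp order and keeps daily totals forever. In Flink, the same logic would typically live in a keyed function such as a `KeyedProcessFunction`, with event-time watermarks handling out-of-order data and state TTL expiring old totals.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from datetime import date, datetime, timedelta
from typing import Deque, Dict, List, Tuple


@dataclass
class Transaction:
    user_id: str
    ip: str
    amount: float
    ts: datetime


class FraudDetector:
    """Keyed state per user-day and per IP; thresholds are illustrative only."""

    def __init__(self, daily_limit: float = 5_000.0, ip_burst: int = 5,
                 window: timedelta = timedelta(minutes=1)):
        self.daily_limit = daily_limit
        self.ip_burst = ip_burst
        self.window = window
        self.spent: Dict[Tuple[str, date], float] = defaultdict(float)
        self.recent: Dict[str, Deque[datetime]] = defaultdict(deque)

    def process(self, tx: Transaction) -> List[str]:
        alerts = []
        # State 1: running total spent by this user on this calendar day.
        key = (tx.user_id, tx.ts.date())
        self.spent[key] += tx.amount
        if self.spent[key] > self.daily_limit:
            alerts.append(f"daily limit exceeded for {tx.user_id}")
        # State 2: timestamps of this IP's transactions inside a sliding window.
        times = self.recent[tx.ip]
        times.append(tx.ts)
        while times and tx.ts - times[0] > self.window:
            times.popleft()
        if len(times) >= self.ip_burst:
            alerts.append(f"burst of {len(times)} transactions from {tx.ip}")
        return alerts
```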

Data Enrichment and Contextualization

Data enrichment is a crucial step in turning raw log data into actionable insights. It involves adding supplementary information to log events to provide a richer context, enabling more effective analysis, correlation, and decision-making. By supplementing the original data, we transform isolated events into comprehensive narratives.

Techniques for Enriching Log Data

Several techniques can be employed to enrich log data, enhancing its value and utility. These techniques leverage various data sources to add context and meaning to raw log events.

- Geolocation Enrichment: Enriching IP addresses with geographical information. This involves mapping IP addresses to their corresponding locations (country, city, latitude, longitude). This is typically achieved by using geolocation databases. This enrichment is essential for understanding the geographic distribution of events, identifying potential security threats originating from specific regions, and analyzing user behavior based on location.

- User Profile Enrichment: Integrating log data with user profile information. This involves linking log events to user attributes such as username, department, job title, and access roles. This allows for a more granular analysis of user activity, facilitating the identification of anomalous behavior and enabling better access control management.

- Asset Inventory Enrichment: Adding information about the assets involved in log events. This involves associating IP addresses, hostnames, or other identifiers with detailed information about the corresponding assets, such as operating system, installed software, and hardware specifications. This provides a complete picture of the environment and aids in identifying vulnerabilities and managing IT infrastructure.

- Threat Intelligence Enrichment: Integrating log data with threat intelligence feeds. This involves matching log events against known indicators of compromise (IOCs) and malicious activities. This enables the early detection of security threats, providing actionable alerts for investigation and response.

- Application Context Enrichment: Supplementing log events with application-specific information. This involves adding details about the application that generated the log, such as application version, transaction ID, and error codes. This improves the understanding of application performance, helps in identifying bugs, and streamlines troubleshooting processes.

Data Sources for Enrichment

Various data sources can be leveraged for data enrichment, providing the necessary context to enhance the value of log events.

- Geolocation Databases: Databases that map IP addresses to geographic locations. Examples include MaxMind GeoIP and IP2Location. These databases are essential for enriching log data with location-based information.

- User Profile Databases: Databases that store user-related information, such as user accounts, roles, and permissions. Examples include Active Directory, LDAP, and custom user management systems. These databases are crucial for associating log events with user identities.

- Asset Inventory Systems: Systems that maintain a record of IT assets, including hardware, software, and configurations. Examples include CMDB (Configuration Management Database) systems and network scanners. These systems provide information about the assets involved in log events.

- Threat Intelligence Feeds: Feeds that provide information about known threats, malicious actors, and indicators of compromise (IOCs). Examples include STIX/TAXII feeds, commercial threat intelligence providers (e.g., CrowdStrike, Recorded Future), and open-source intelligence (OSINT) sources. These feeds help identify potential security threats.

- Application Metadata: Data about the applications generating the logs, such as application versions, configuration settings, and error codes. This information can be extracted from application configuration files, databases, or APIs. This is essential for application-specific analysis and troubleshooting.

Enhancing Log Event Value with Enrichment Examples

Data enrichment significantly enhances the value of log events by providing additional context. Consider the following examples, which demonstrate the before and after effects of data enrichment.

Before Enrichment:

203.0.113.10 - - [2023-10-27 10:00:00] "GET /index.html HTTP/1.1" 200 1234

After Geolocation Enrichment:

203.0.113.10 (City: London, Country: UK) - - [2023-10-27 10:00:00] "GET /index.html HTTP/1.1" 200 1234

In this example, the original log event identified the client only by its IP address. After enrichment with geolocation data, the event also includes the city and country, offering immediate context about the user’s location.

Before Enrichment:

user123 accessed file.txt from 10.0.0.5

After User Profile and Asset Inventory Enrichment:

user123 (John Doe, IT Department) accessed file.txt from 10.0.0.5 (Server01)

In this example, the initial log event only identifies the username. After enrichment with user profile data and asset inventory data, the event reveals the user’s full name, department, and the server name, providing much richer context for investigation.

Before Enrichment:

172.16.0.10 - - [2023-10-27 10:00:00] "POST /login.php" 401 -

After Threat Intelligence Enrichment:

172.16.0.10 (Malicious IP, associated with known brute-force attempts) - - [2023-10-27 10:00:00] "POST /login.php" 401 -

In this example, the original log event provides little context beyond the attempted login. After enrichment with threat intelligence, the event now indicates that the IP address is associated with malicious activity, immediately highlighting a potential security threat.
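The enrichment examples above amount to lookups keyed on fields already present in the event. The minimal Python sketch below shows that pattern with hypothetical in-memory tables standing in for a geolocation database, a user directory, an asset inventory, and a threat-intelligence feed. The table contents, the addresses (taken from reserved documentation and private ranges), and the output field names are all assumptions for illustration.

```python
import ipaddress
from typing import Dict, List, Tuple

# Hypothetical lookup tables. In practice these would be loaded from a
# geolocation database, a user directory, a CMDB and a threat-intel feed.
GEO_RANGES: List[Tuple[ipaddress.IPv4Network, Dict[str, str]]] = [
    (ipaddress.ip_network("203.0.113.0/24"), {"city": "London", "country": "UK"}),
]
USERS = {"user123": {"name": "John Doe", "department": "IT"}}
ASSETS = {"10.0.0.5": {"hostname": "Server01"}}
BAD_IPS = {"172.16.0.10": "associated with known brute-force attempts"}


def enrich(event: dict) -> dict:
    """Return a copy of the event with whatever context the lookups provide."""
    out = dict(event)
    ip = event.get("ip")
    if ip:
        addr = ipaddress.ip_address(ip)
        for network, geo in GEO_RANGES:
            if addr in network:  # CIDR range membership test
                out["geo"] = geo
                break
        if ip in ASSETS:
            out["asset"] = ASSETS[ip]
        if ip in BAD_IPS:
            out["threat"] = BAD_IPS[ip]
    user = event.get("user")
    if user in USERS:
        out["user_profile"] = USERS[user]
    return out
```

Returning a new dictionary rather than mutating the input keeps the original event unchanged, in line with treating logged events as immutable.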

Real-time Monitoring and Alerting

Real-time monitoring and alerting are crucial components of event stream analysis, enabling proactive responses to operational issues and performance degradation. By continuously analyzing event streams, organizations can gain immediate insights into system behavior, detect anomalies, and trigger alerts to address problems before they impact users or critical business functions. This proactive approach is essential for maintaining system stability, optimizing performance, and ensuring a positive user experience.

Role of Real-time Monitoring and Alerting in Event Stream Analysis

Real-time monitoring and alerting systems play a vital role in event stream analysis by providing immediate visibility into system health and performance. They allow for the identification of critical issues and the initiation of corrective actions in a timely manner.

- Early Anomaly Detection: By continuously analyzing event streams, real-time monitoring systems can detect anomalies that may indicate underlying problems. These anomalies can include spikes in error rates, unusual request latency, or deviations from expected resource utilization patterns.

- Proactive Problem Resolution: Upon detecting an anomaly, the system can trigger alerts, notifying relevant teams or automated systems to initiate corrective actions. This proactive approach minimizes downtime and reduces the impact of issues on users.

- Performance Optimization: Real-time monitoring provides insights into system performance, allowing for optimization efforts. By identifying bottlenecks, inefficient code, or resource constraints, organizations can improve system efficiency and responsiveness.

- Trend Analysis: Real-time monitoring can be used to identify trends and patterns in event data. This information can be used to predict future issues, plan for capacity upgrades, and optimize system configurations.

- Compliance and Security: Real-time monitoring can be used to monitor security events, such as unauthorized access attempts or suspicious activities. It can also be used to ensure compliance with regulatory requirements.

Examples of Metrics Monitored in Real-time

Several metrics are commonly monitored in real-time to assess system health and performance. These metrics provide insights into various aspects of system behavior and can be used to trigger alerts when thresholds are exceeded.

- Error Rates: Monitoring error rates, such as the percentage of failed requests or the number of exceptions, is critical for identifying problems. A sudden increase in error rates can indicate a bug, a configuration issue, or a resource constraint. For example, if an e-commerce website sees a 5% increase in “500 Internal Server Error” responses within a 5-minute window, an alert should be triggered.

- Request Latency: Request latency, the time it takes to process a request, is a key indicator of performance. High latency can lead to a poor user experience. For instance, if the average response time for a critical API endpoint exceeds 2 seconds for more than 1 minute, an alert can be generated.

- Resource Utilization: Monitoring resource utilization, such as CPU usage, memory consumption, and disk I/O, is essential for identifying resource constraints. High resource utilization can lead to performance degradation or system crashes. An example is when CPU utilization on a database server consistently exceeds 90% for more than 10 minutes.

- Throughput: Monitoring throughput, the rate at which data is processed, helps assess system capacity and performance. A sudden drop in throughput may indicate an issue. For instance, if the number of processed transactions per second falls below a certain threshold, an alert can be activated.

- Availability: Monitoring system availability ensures services are accessible to users. Alerts are triggered when services become unavailable. A practical example is when a critical web application is down for more than 1 minute.

- Queue Lengths: Monitoring queue lengths in message queues or task queues helps identify bottlenecks. A growing queue indicates the system is unable to process tasks quickly enough. An example is when a message queue’s length exceeds 10,000 messages.
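The error-rate example can be sketched as a time-based sliding window over the event stream. This is a minimal sketch. It assumes each event is a `(timestamp_seconds, status_code)` pair arriving in timestamp order, and it treats "error rate" as the share of 5xx responses in the last five minutes. The class name `ErrorRateWindow` and the 5% threshold are illustrative choices and do not come from any particular monitoring tool.

```python
from collections import deque


class ErrorRateWindow:
    """Share of 5xx responses over a trailing time window (hypothetical helper).

    Assumes events arrive in timestamp order.
    """

    def __init__(self, window_seconds: float = 300.0, threshold: float = 0.05):
        self.window_seconds = window_seconds
        self.threshold = threshold
        self.events = deque()  # (timestamp, is_error) pairs still inside the window
        self.errors = 0        # running count of errors inside the window

    def add(self, timestamp: float, status_code: int) -> bool:
        """Record one response; return True if the error rate now exceeds the threshold."""
        is_error = 500 <= status_code <= 599
        self.events.append((timestamp, is_error))
        self.errors += is_error
        # Evict events that have fallen out of the trailing window.
        while self.events and self.events[0][0] <= timestamp - self.window_seconds:
            _, old_is_error = self.events.popleft()
            self.errors -= old_is_error
        return self.rate() > self.threshold

    def rate(self) -> float:
        return self.errors / len(self.events) if self.events else 0.0


monitor = ErrorRateWindow(window_seconds=300, threshold=0.05)
for t in range(95):  # 95 successful requests, one per second
    monitor.add(t, 200)
alerts = [t for t in range(95, 105) if monitor.add(t, 500)]  # then 10 server errors
print(alerts[0], round(monitor.rate(), 3))  # 100 0.095
```

Keeping a running error count makes each update cheap, with amortized constant work per event, so the window never has to be rescanned.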

Procedure for Setting Up Alerts

Setting up alerts based on specific event patterns or thresholds involves several steps. This procedure ensures that alerts are relevant, actionable, and effective.

- Define Objectives and Goals: Clearly define the goals of the monitoring and alerting system. What specific issues or problems are you trying to address? For example, is the objective to minimize downtime, improve user experience, or detect security breaches?

- Identify Key Metrics: Determine the specific metrics that need to be monitored to achieve the defined objectives. This may include error rates, request latency, resource utilization, and throughput.

- Establish Thresholds: Set thresholds for each metric that, when exceeded, will trigger an alert. These thresholds should be based on historical data, performance benchmarks, and business requirements. For example, set an error rate threshold of 1% for a critical API endpoint.

- Define Alert Conditions: Specify the conditions under which an alert should be triggered. This may involve exceeding a threshold for a specific duration, detecting a sudden spike in a metric, or observing a specific event pattern.

- Configure Alert Notifications: Configure the system to send notifications when alerts are triggered. Notifications can be sent via email, SMS, or other communication channels. Ensure that the right people are notified, based on the severity of the alert.

- Specify Alert Severity Levels: Assign severity levels (e.g., critical, warning, informational) to each alert. This helps prioritize responses and ensures that the most critical issues are addressed first.

- Define Escalation Paths: Establish escalation paths for alerts, including who to notify and when. If an alert is not acknowledged or resolved within a certain timeframe, escalate it to the next level of support.

- Test and Validate Alerts: Test the alerting system to ensure that alerts are triggered correctly and notifications are delivered to the appropriate recipients. Simulate various scenarios to validate the alert configurations.

- Document Alerts and Procedures: Document all alerts, their triggers, and the corresponding response procedures. This ensures consistency and facilitates troubleshooting.

- Review and Refine: Regularly review the alerting system and refine the thresholds, conditions, and notification settings based on performance data and changing business requirements.
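Several of these steps can be captured in a small rule evaluator: a threshold, a condition that must hold for a specific duration, and a severity level. The sketch below is hypothetical. `AlertRule` and its fields are illustrative names. A breach is tracked as a "pending" state before the rule fires, and notification and escalation are reduced to returning a message string.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AlertRule:
    """Hypothetical rule: fire once `value > threshold` has held for `for_seconds`."""

    name: str
    threshold: float
    for_seconds: float
    severity: str  # e.g. "critical", "warning", "informational"
    breach_started: Optional[float] = None  # start of the current breach ("pending")
    firing: bool = False

    def evaluate(self, timestamp: float, value: float) -> Optional[str]:
        """Feed one sample; return a notification message only when the alert starts firing."""
        if value <= self.threshold:
            # Condition cleared: the next breach must last the full duration again.
            self.breach_started = None
            self.firing = False
            return None
        if self.breach_started is None:
            self.breach_started = timestamp
        if not self.firing and timestamp - self.breach_started >= self.for_seconds:
            self.firing = True
            return f"[{self.severity.upper()}] {self.name}: {value} > {self.threshold}"
        return None


# CPU example from the metrics list: above 90% for 10 minutes, sampled once a minute.
rule = AlertRule(name="db-cpu-high", threshold=90.0, for_seconds=600, severity="critical")
notifications = []
for minute in range(12):
    message = rule.evaluate(timestamp=60 * minute, value=95.0)
    if message:
        notifications.append(message)
print(notifications)  # ['[CRITICAL] db-cpu-high: 95.0 > 90.0']
```

Requiring the condition to persist suppresses alerts for brief spikes. Resetting on recovery means the rule notifies once per incident rather than on every sample. Routing by severity and escalation timers would sit on top of the returned message.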

Pattern Recognition and Anomaly Detection

Identifying patterns and anomalies within event streams is crucial for extracting valuable insights, detecting unusual behavior, and proactively addressing potential issues. This process transforms raw log data into actionable intelligence, enabling organizations to improve operational efficiency, enhance security posture, and optimize performance. Effective pattern recognition and anomaly detection systems are essential components of any robust log management strategy.

Techniques for Identifying Patterns and Anomalies

Several techniques can be employed to identify patterns and anomalies within event streams. These techniques range from simple rule-based approaches to sophisticated machine learning models. Choosing the right technique depends on the nature of the data, the desired level of accuracy, and the resources available.

- Rule-based Systems: These systems define explicit rules to identify specific patterns or anomalies. Rules can be based on regular expressions, threshold values, or combinations of different criteria. They are often straightforward to implement and understand but can be inflexible and may struggle to adapt to evolving patterns.

- Statistical Analysis: Statistical methods analyze the distribution of data points to identify deviations from the norm. Techniques include calculating moving averages, standard deviations, and percentiles. These methods are useful for detecting unusual fluctuations in metrics like response times or error rates.

- Machine Learning: Machine learning algorithms can automatically learn patterns from data and identify anomalies. Different types of machine learning models are suitable for different scenarios. Unsupervised learning algorithms are often used to detect anomalies without requiring labeled data.

- Sequence Mining: This technique focuses on identifying sequences of events that occur frequently or infrequently. It can be used to detect unusual event sequences that may indicate a security breach or a system malfunction.

- Time-series Analysis: Time-series analysis techniques are specifically designed for analyzing data that changes over time. They can be used to identify trends, seasonality, and anomalies in time-based data, such as server load or network traffic.
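The statistical approach (moving averages and standard deviations) can be sketched as a rolling z-score detector. Each new value is compared with the mean and standard deviation of the preceding values in a fixed-size window. This is a minimal sketch. The window of 30 values, the 3-standard-deviation cutoff, and the 10-value warm-up are common but arbitrary choices assumed here; they are not recommendations from this article.

```python
import statistics
from collections import deque


class RollingZScore:
    """Flags values more than `cutoff` standard deviations from the trailing mean."""

    def __init__(self, window: int = 30, cutoff: float = 3.0, min_history: int = 10):
        self.history = deque(maxlen=window)  # keeps only the most recent `window` values
        self.cutoff = cutoff
        self.min_history = min_history  # no verdicts until this many values are seen

    def observe(self, value: float) -> bool:
        is_anomaly = False
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            if stdev == 0:
                # Perfectly flat history: any change at all counts as anomalous.
                is_anomaly = value != mean
            else:
                is_anomaly = abs(value - mean) / stdev > self.cutoff
        self.history.append(value)  # anomalies also enter the baseline
        return is_anomaly


detector = RollingZScore(window=30, cutoff=3.0, min_history=10)
latencies_ms = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 250]
flags = [detector.observe(x) for x in latencies_ms]
print(flags.index(True))  # 10
```

In this sketch, anomalous values also enter the baseline, so a sustained shift gradually becomes the new normal. If that is undesirable, flagged values can be excluded from the history instead.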

Examples of Pattern Recognition Algorithms

Various algorithms can be applied for pattern recognition, each with its strengths and weaknesses. The choice of algorithm depends on the characteristics of the event streams and the specific patterns or anomalies being sought.

- Regular Expressions: Regular expressions are a powerful tool for pattern matching within text-based log entries. They define search patterns that can identify specific strings, formats, or sequences of characters. For example, a regular expression can be used to extract IP addresses, timestamps, or error codes from log files.

For instance, the regular expression `\b\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}\b` can be used to find candidate IPv4 addresses in log entries.

- Machine Learning Models:

- K-Means Clustering: K-means clustering groups similar data points into k clusters. Anomalies are identified as data points that lie far from their nearest cluster centroid. This algorithm is useful for detecting outliers in data with numerical features.

- Isolation Forest: Isolation Forest is an unsupervised machine learning algorithm specifically designed for anomaly detection. It builds random trees that repeatedly split the data on a randomly chosen feature and split value. Because anomalies are few and different, they are typically isolated after fewer splits (a shorter path from the root) than normal data points.

- One-Class SVM: One-Class SVM (Support Vector Machine) is an unsupervised learning algorithm that learns a boundary around normal data points. Data points outside this boundary are considered anomalies.
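The IP-address regular expression from the Regular Expressions item can be applied to log lines with Python's `re` module. Because `\d{1,3}` also accepts out-of-range octets such as `999`, this sketch validates each candidate with the standard-library `ipaddress` module. The function name and the sample log line are invented for illustration.

```python
import ipaddress
import re

# Four dot-separated groups of 1-3 digits, bounded by word boundaries.
IPV4_CANDIDATE = re.compile(r"\b\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}\b")


def extract_ipv4(line: str) -> list[str]:
    """Return the valid IPv4 addresses found in a log line, in order of appearance."""
    valid = []
    for candidate in IPV4_CANDIDATE.findall(line):
        try:
            ipaddress.IPv4Address(candidate)  # rejects octets above 255
        except ipaddress.AddressValueError:
            continue
        valid.append(candidate)
    return valid


line = "2024-05-01T12:00:03Z WARN login failed user=alice src=203.0.113.7 via=999.1.2.3"
print(extract_ipv4(line))  # ['203.0.113.7']
```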

Simplified Anomaly Detection Process Illustration

The following illustration provides a simplified overview of an anomaly detection process, outlining the key components and their functions.

Illustration: Anomaly Detection Process

The diagram depicts a sequential process for anomaly detection in event streams.

1. Data Source

The starting point of the process is the event stream data source, such as log files, network traffic data, or other event sources.

2. Data Ingestion

The data is ingested from the data source. This step involves collecting and preparing the data for analysis.

3. Data Preprocessing

The data is preprocessed. This involves cleaning the data, handling missing values, and transforming the data into a suitable format for the chosen algorithm.

4. Feature Extraction

Relevant features are extracted from the preprocessed data. This might involve extracting numerical values, creating new features from existing ones, or converting text data into numerical representations.

5. Anomaly Detection Algorithm

An anomaly detection algorithm is applied to the feature data. This could be a rule-based system, a statistical method, or a machine learning model.

6. Anomaly Scoring

The algorithm assigns an anomaly score to each data point. This score reflects the degree to which a data point deviates from the expected pattern.

7. Thresholding

A threshold is applied to the anomaly scores. Data points with scores above the threshold are flagged as anomalies.

8. Alerting and Reporting

Alerts are generated when anomalies are detected. Reports summarizing the detected anomalies are generated and presented to relevant stakeholders.

9. Feedback Loop

A feedback loop allows for continuous improvement. This involves reviewing the results of the anomaly detection process, adjusting the algorithms and thresholds as needed, and incorporating new information.

The diagram illustrates the key steps in anomaly detection, from data ingestion to alerting and reporting, with the feedback loop enabling continuous refinement.
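The stages in the illustration can be chained as plain Python functions. This simplified sketch rests on several assumptions. Log lines are taken to look like `<epoch_seconds> <LEVEL> <message>`, and the only feature is the number of `ERROR` lines per minute. The "algorithm" scores each minute as its ratio to the median of earlier minutes, and alerting is reduced to returning the flagged minutes. All function names and the line format are hypothetical.

```python
import statistics
from collections import Counter
from typing import Iterable, Iterator


def ingest(raw: str) -> Iterator[str]:
    """Data source + ingestion: yield raw lines (here from an in-memory string)."""
    yield from raw.splitlines()


def preprocess(lines: Iterable[str]) -> Iterator[tuple[int, str]]:
    """Preprocessing: skip malformed lines, parse (timestamp, level)."""
    for line in lines:
        parts = line.split(maxsplit=2)
        if len(parts) < 2 or not parts[0].isdigit():
            continue  # drop malformed input instead of failing the stream
        yield int(parts[0]), parts[1].upper()


def extract_features(events: Iterable[tuple[int, str]]) -> dict[int, int]:
    """Feature extraction: number of ERROR events per one-minute bucket."""
    counts = Counter()
    for ts, level in events:
        counts[ts // 60] += level == "ERROR"  # adds 0 or 1; keeps error-free minutes
    return dict(sorted(counts.items()))


def score(counts: dict[int, int], warmup: int = 3) -> Iterator[tuple[int, float]]:
    """Detection + scoring: ratio of each minute's count to the median of earlier minutes."""
    history = []
    for minute, count in counts.items():
        if len(history) >= warmup:
            baseline = max(statistics.median(history), 1)  # avoid division by zero
            yield minute, count / baseline
        history.append(count)


def detect(raw: str, threshold: float = 3.0) -> list[int]:
    """Thresholding: return the minutes whose score exceeds `threshold` (the alert input)."""
    counts = extract_features(preprocess(ingest(raw)))
    return [minute for minute, s in score(counts) if s > threshold]


errors_per_minute = [1, 2, 1, 1, 9]
sample = "\n".join(
    [f"{60 * m + i} ERROR db timeout" for m, n in enumerate(errors_per_minute) for i in range(n)]
    + ["30 INFO request ok", "not a log line"]
)
print(detect(sample))  # [4]
```

The `threshold` parameter is where the feedback loop would act, by reviewing flagged minutes and adjusting it (or the warm-up length) over time. For simplicity the sketch batches per-minute counts. A streaming implementation would score each minute as its window closes and would also account for minutes with no events at all.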

Data Storage and Indexing Strategies

Choosing the right data storage and indexing strategy is crucial for efficiently managing and analyzing event streams. The optimal approach directly impacts the performance, scalability, and cost-effectiveness of your log analysis pipeline. Effective storage and indexing allow for rapid retrieval of relevant events, enabling timely insights and informed decision-making. Without a well-defined strategy, you risk slow query times, data loss, and ultimately, the inability to leverage the full potential of your event data.

Importance of Choosing the Right Strategy

Selecting an appropriate storage and indexing strategy is paramount for several reasons. It dictates how quickly you can access and analyze your data, directly influencing your ability to respond to incidents, identify trends, and make proactive decisions. Consider these key aspects:

- Performance: Indexing optimizes query performance by providing a way to quickly locate relevant data. Without proper indexing, queries can become slow and resource-intensive, especially as the volume of data grows.

- Scalability: The chosen storage solution should be able to scale to accommodate increasing data volumes and query loads. This ensures that your analysis pipeline can handle future growth without performance degradation.

- Cost-Effectiveness: Different storage options have varying costs associated with storage, compute resources, and maintenance. Choosing a cost-effective solution is essential for managing your budget and ensuring a positive return on investment.

- Data Retention and Compliance: Some industries have specific data retention requirements. Your storage strategy must support these requirements while complying with relevant regulations.
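The performance point can be made concrete with an inverted index, the structure used by many search engines, including Lucene-based ones such as Elasticsearch. Rather than scanning every stored event for a term, the index maps each term to the IDs of the events that contain it, so a multi-term query becomes a set intersection. This is a toy sketch with naive lowercase word tokenization. Production engines add analyzers, compression, and relevance scoring.

```python
import re
from collections import defaultdict


class InvertedIndex:
    """Toy inverted index mapping each term to the set of event IDs that contain it."""

    def __init__(self):
        self.postings = defaultdict(set)  # term -> {event_id, ...}
        self.events = []                  # event_id -> original message

    def add(self, message: str) -> int:
        event_id = len(self.events)
        self.events.append(message)
        for term in re.findall(r"\w+", message.lower()):
            self.postings[term].add(event_id)
        return event_id

    def search(self, *terms: str) -> list[str]:
        """AND query: events containing every term, in insertion order."""
        if not terms:
            return []
        matching = set.intersection(*(self.postings.get(t.lower(), set()) for t in terms))
        return [self.events[i] for i in sorted(matching)]


index = InvertedIndex()
index.add("ERROR payment-service timeout contacting db")
index.add("INFO payment-service request ok")
index.add("ERROR auth-service timeout contacting ldap")
print(index.search("error", "timeout"))
```

A query touches only the posting sets for its terms, so its cost depends largely on the size of those sets rather than on the total number of stored events.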

Different Storage Options

Various storage options are available for event streams, each with its own strengths and weaknesses. The best choice depends on your specific needs, including data volume, query patterns, budget, and desired features. Here are some popular choices:

- Elasticsearch: Elasticsearch is a distributed, RESTful search and analytics engine built on Apache Lucene. It’s widely used for log analysis due to its powerful search capabilities, flexible data modeling, and scalability.

- Splunk: Splunk is a commercial platform designed for searching, analyzing, and visualizing machine-generated data. It offers robust features for log management, security analysis, and business intelligence.

- Cloud-Based Solutions: Cloud providers like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure offer managed log management and analytics services. These services often provide scalable storage, powerful indexing, and integrated tools for analysis. Examples include AWS CloudWatch Logs, Google Cloud Logging, and Azure Monitor.

Comparison Table for Different Storage Options

The following table compares the features, scalability, and cost considerations of Elasticsearch, Splunk, and cloud-based solutions. Note that specific pricing and features can vary based on the chosen cloud provider and the configuration of the solution.

| Feature | Elasticsearch | Splunk | Cloud-Based Solutions (Example: AWS CloudWatch Logs) |
|---|---|---|---|
| Data Storage | Distributed, document-oriented store (JSON documents) | Proprietary indexed data format | Cloud-managed storage (e.g., export to S3 for archiving) |
| Indexing | Highly customizable inverted index | Automatic indexing, field extraction | Managed indexing, automated field extraction |
| Search Capabilities | Full-text search, aggregations, and scripting | Splunk Processing Language (SPL) for advanced search and analysis | Search and filtering by time range, metrics, and log fields |
| Scalability | Highly scalable; scales horizontally across nodes | Scalable, but typically requires more upfront investment in hardware or licensing | Highly scalable, managed by the cloud provider |
| Cost | Free to self-host; cost depends mainly on infrastructure (servers, storage), with paid tiers for some features | Commercial; licensing is typically based on ingested data volume or compute workload | Pay-as-you-go, based on data ingestion, storage, and query usage |
| Ease of Use | Requires more setup and configuration; steeper learning curve | User-friendly interface and pre-built dashboards; easier to get started | Easy to set up and integrate with other cloud services |
| Features | Flexible data modeling, rich plugin ecosystem, advanced analytics capabilities | Real-time monitoring, security analysis, business intelligence features | Integration with other cloud services, automated alerting, monitoring dashboards |

Note: The table above is a general comparison and specific features, scalability, and costs can vary. Always consult the latest documentation and pricing information from the respective vendors.

Security Considerations and Best Practices

Treating logs as event streams introduces significant security considerations. The sensitive nature of log data, which often includes personally identifiable information (PII), financial data, and system activity details, necessitates robust security measures. Failing to adequately secure these event streams can lead to data breaches, compliance violations, and reputational damage. This section outlines the security implications and best practices for securing log data.

Security Implications of Treating Logs as Event Streams

The shift from static log files to real-time event streams significantly amplifies the security risks. The continuous flow of data and the increased accessibility for processing can create vulnerabilities if not properly managed.

- Data Breaches: Real-time event streams, if compromised, can expose sensitive information immediately. Attackers can leverage access to these streams to identify vulnerabilities, exfiltrate data, or gain unauthorized access to systems.

- Compliance Violations: Regulations such as GDPR, HIPAA, and PCI DSS mandate the protection of sensitive data. Inadequate security measures can lead to non-compliance, resulting in hefty fines and legal repercussions.

- Insider Threats: Improperly secured event streams can be exploited by malicious insiders. Unauthorized access to or modification of log data can be used to conceal malicious activities, manipulate audit trails, or steal sensitive information.

- Denial-of-Service (DoS) Attacks: Attackers might target the event stream infrastructure to disrupt monitoring and alerting capabilities. Overloading the stream processing pipeline or the data storage backend can render security monitoring ineffective.

- Data Integrity Issues: Tampering with log data can compromise the accuracy and reliability of security investigations and incident response efforts.
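One common way to make tampering detectable is to chain log records with a keyed hash. This technique is not prescribed by this article and is shown only as an illustration. Each record's HMAC covers both its own content and the previous record's MAC, so modifying, deleting, or reordering a record breaks verification from that point on. The minimal sketch below uses the standard-library `hmac` and `hashlib` modules. The key is a hypothetical placeholder that would normally come from a secrets manager.

```python
import hashlib
import hmac

KEY = b"placeholder-key"  # hypothetical; load from a secrets manager in practice
GENESIS = b"\x00" * 32    # stands in for the "previous MAC" of the first record


def _mac(key: bytes, prev: bytes, record: str) -> bytes:
    # prev is always 32 bytes, so prev + record cannot be split ambiguously.
    return hmac.new(key, prev + record.encode("utf-8"), hashlib.sha256).digest()


def seal(records: list[str], key: bytes = KEY) -> list[tuple[str, str]]:
    """Return (record, mac_hex) pairs where each MAC also covers the previous MAC."""
    sealed, prev = [], GENESIS
    for record in records:
        mac = _mac(key, prev, record)
        sealed.append((record, mac.hex()))
        prev = mac
    return sealed


def verify(sealed: list[tuple[str, str]], key: bytes = KEY) -> int:
    """Return the index of the first record that fails verification, or -1 if all pass."""
    prev = GENESIS
    for i, (record, mac_hex) in enumerate(sealed):
        expected = _mac(key, prev, record)
        if not hmac.compare_digest(expected.hex(), mac_hex):
            return i
        prev = expected
    return -1


chain = seal(["user=alice action=login", "user=alice action=export", "user=alice action=logout"])
print(verify(chain))  # -1
chain[1] = ("user=alice action=view", chain[1][1])  # tamper with the middle record
print(verify(chain))  # 1
```

The chain alone does not detect records dropped from the end. Storing the latest MAC separately, for example in a different system, closes that gap.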

Best Practices for Securing Log Data

Implementing robust security measures is crucial to protect log data throughout its lifecycle. This includes access control, encryption, data masking, and continuous monitoring.

- Access Control: Implement strict access controls to limit who can access the event streams and the data within them. This involves using role-based access control (RBAC) to grant permissions based on job responsibilities.

- Encryption: Encrypt log data both in transit and at rest. This ensures that even if the data is intercepted, it remains unreadable. Use strong encryption algorithms like AES-256.

Encryption in transit typically involves using TLS (the successor to the now-deprecated SSL protocols) to secure the communication channels between log sources, collectors, and processing systems. Encryption at rest involves encrypting the data stored in databases, data lakes, or other storage solutions.

- Data Masking and Anonymization: Mask or anonymize sensitive data within the logs. This involves replacing sensitive information like PII, credit card numbers, and other confidential details with masked or anonymized values.

- Authentication and Authorization: Enforce strong authentication mechanisms to verify the identity of users and systems accessing the event streams. Implement authorization policies to control what resources authenticated users can access.

- Network Segmentation: Segment the network to isolate the event stream infrastructure from other parts of the network. This limits the impact of a security breach by preventing attackers from easily moving laterally across the network.

- Regular Auditing and Monitoring: Regularly audit access to the event streams and monitor for suspicious activities. Implement intrusion detection and prevention systems to identify and respond to potential threats.

- Secure Configuration: Securely configure all components of the event stream pipeline, including log sources, collectors, processors, and storage systems. Regularly review and update configurations to address security vulnerabilities.

- Data Retention Policies: Establish and enforce data retention policies to limit the storage duration of log data. This helps to minimize the risk of data breaches and comply with regulatory requirements.

- Security Information and Event Management (SIEM): Integrate the event stream with a SIEM system to centralize log data, correlate events, and provide real-time security monitoring and alerting.

- Vulnerability Management: Regularly scan the event stream infrastructure for vulnerabilities and apply security patches promptly.
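Data masking can run as a transformation step before events leave the collector. This minimal sketch assumes regex-based detection. Card-number-like digit runs are masked down to their last four digits when they pass a Luhn checksum. Email addresses are replaced with a keyed-hash token, so the same user can still be correlated across events; this is pseudonymization rather than full anonymization. The patterns and key are illustrative assumptions and would need tuning against real log formats.

```python
import hashlib
import hmac
import re

PSEUDONYM_KEY = b"hypothetical-key"  # placeholder; load from a secrets store in practice

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")  # 13-19 digits, optional space/hyphen separators


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used to avoid masking long numbers that are not card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d = d * 2 - 9 if d > 4 else d * 2
        total += d
    return total % 10 == 0


def _mask_card(match: re.Match) -> str:
    digits = re.sub(r"\D", "", match.group())
    if not luhn_valid(digits):
        return match.group()  # leave IDs, timestamps, etc. untouched
    return "*" * (len(digits) - 4) + digits[-4:]


def _pseudonymize(match: re.Match) -> str:
    # Keyed hash: the same (case-insensitive) address always maps to the same token.
    digest = hmac.new(PSEUDONYM_KEY, match.group().lower().encode(), hashlib.sha256)
    return "user_" + digest.hexdigest()[:12]


def mask(line: str) -> str:
    """Mask Luhn-valid card numbers and replace email addresses with pseudonyms."""
    return EMAIL.sub(_pseudonymize, CARD.sub(_mask_card, line))


print(mask("checkout email=Bob@example.com card=4111 1111 1111 1111 order=1234567890123"))
```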

Checklist for Implementing Security Measures

The following checklist summarizes key steps to secure event streams:

- [ ] Define access control policies based on the principle of least privilege.

- [ ] Implement encryption for data in transit and at rest.

- [ ] Mask or anonymize sensitive data within the logs.

- [ ] Enforce strong authentication and authorization mechanisms.

- [ ] Segment the network to isolate the event stream infrastructure.

- [ ] Implement intrusion detection and prevention systems.

- [ ] Regularly audit and monitor access to event streams.

- [ ] Securely configure all components of the event stream pipeline.

- [ ] Establish and enforce data retention policies.

- [ ] Integrate the event stream with a SIEM system.

- [ ] Regularly scan for vulnerabilities and apply security patches.

- [ ] Conduct regular security audits and penetration testing.

Use Cases and Practical Applications

Treating logs as event streams unlocks a wealth of opportunities for real-time analysis and proactive decision-making. This approach moves beyond simple log aggregation and enables organizations to gain deep insights, respond rapidly to critical events, and optimize their operations. The following sections detail specific use cases and provide practical examples of how event stream analysis delivers tangible benefits.

Fraud Detection

Fraud detection is a prime example of where event stream analysis excels. By analyzing real-time data, organizations can identify and prevent fraudulent activities as they occur.

- Real-time Transaction Monitoring: Event streams can analyze transaction data as it arrives, flagging suspicious activities such as unusual transaction amounts, transactions from unfamiliar locations, or rapid sequences of transactions.

- Behavioral Analysis: Analyzing user behavior patterns within event streams can detect anomalies indicative of fraud. For example, a sudden change in spending habits, login attempts from multiple locations, or attempts to access sensitive data outside of normal hours can trigger alerts.

- Example: A credit card company uses event stream processing to monitor transactions in real-time. When a transaction exceeds a pre-defined threshold or originates from a high-risk country, the system immediately flags it for review. This real-time analysis significantly reduces fraudulent charges and minimizes financial losses.
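The rules described above can be sketched as a stateful check that keeps a short per-card history: an amount threshold, a high-risk origin, and rapid sequences of transactions. All thresholds, the `HIGH_RISK_COUNTRIES` placeholder set, and the `Txn` fields are hypothetical. Real fraud systems combine many more signals and usually produce a risk score rather than hard flags.

```python
from collections import defaultdict, deque
from dataclasses import dataclass

AMOUNT_LIMIT = 5_000.00       # hypothetical per-transaction threshold
HIGH_RISK_COUNTRIES = {"XX"}  # placeholder country codes
VELOCITY_WINDOW_S = 60        # look-back window for "rapid sequences"
VELOCITY_MAX = 3              # more transactions than this in the window are flagged


@dataclass
class Txn:
    card_id: str
    timestamp: float
    amount: float
    country: str


class FraudRules:
    def __init__(self):
        self.recent = defaultdict(deque)  # card_id -> timestamps within the window

    def check(self, txn: Txn) -> list[str]:
        """Return the names of the rules this transaction trips (empty list = clean)."""
        reasons = []
        if txn.amount > AMOUNT_LIMIT:
            reasons.append("amount_over_limit")
        if txn.country in HIGH_RISK_COUNTRIES:
            reasons.append("high_risk_country")
        window = self.recent[txn.card_id]
        window.append(txn.timestamp)
        while window and window[0] <= txn.timestamp - VELOCITY_WINDOW_S:
            window.popleft()  # forget transactions older than the window
        if len(window) > VELOCITY_MAX:
            reasons.append("velocity")
        return reasons


rules = FraudRules()
stream = [Txn("c1", t, 20.0, "US") for t in (0, 10, 20, 30)] + [Txn("c2", 40, 9_000.0, "XX")]
results = [rules.check(txn) for txn in stream]
print(results)
# [[], [], [], ['velocity'], ['amount_over_limit', 'high_risk_country']]
```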

Performance Monitoring

Event stream analysis is invaluable for monitoring the performance of systems and applications, enabling proactive identification and resolution of performance bottlenecks.

- Application Performance Monitoring (APM): Event streams ingest application logs, metrics, and traces to provide a real-time view of application health. This allows for the identification of slow transactions, error rates, and resource utilization issues.

- Infrastructure Monitoring: Event stream processing can analyze infrastructure logs (e.g., server logs, network logs) to identify performance degradation, resource exhaustion, and other infrastructure-related problems.

- Example: An e-commerce company uses event stream processing to monitor website performance. By analyzing server logs, application logs, and user clickstream data in real-time, they can identify slow-loading pages, high error rates, and other performance issues. This enables them to quickly address these problems and maintain a positive user experience.
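Identifying slow-loading pages usually means tracking latency percentiles per endpoint rather than a single average, because a small fraction of very slow requests can hide behind a healthy mean. This minimal sketch assumes access-log lines of the hypothetical form `<path> <status> <latency_ms>` and an assumed 500 ms objective. It uses `statistics.quantiles` with `method="inclusive"`, which keeps estimates within the observed range; other percentile conventions give slightly different values.

```python
import statistics
from collections import defaultdict


def p95_by_endpoint(lines, slo_ms: float = 500.0) -> dict[str, tuple[float, bool]]:
    """Return {path: (p95_latency_ms, exceeds_slo)} for paths with at least two samples."""
    latencies = defaultdict(list)
    for line in lines:
        try:
            path, _status, latency_ms = line.split()
            value = float(latency_ms)
        except ValueError:
            continue  # skip lines that do not match the assumed format
        latencies[path].append(value)
    report = {}
    for path, values in latencies.items():
        if len(values) < 2:
            continue  # too few samples for a meaningful percentile
        # n=20 yields 19 cut points; index 18 is the 95th percentile.
        p95 = statistics.quantiles(values, n=20, method="inclusive")[18]
        report[path] = (p95, p95 > slo_ms)
    return report


access_log = (
    ["/home 200 100"] * 18 + ["/home 200 2000"] * 2
    + ["/search 200 120", "/search 200 130", "/login 200 90", "malformed line"]
)
print(p95_by_endpoint(access_log))
# {'/home': (2000.0, True), '/search': (129.5, False)}
```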

Security Incident Response

Event stream analysis is a powerful tool for security incident response, providing real-time visibility into security threats and enabling rapid containment and remediation.

- Threat Detection: By analyzing security logs (e.g., firewall logs, intrusion detection system logs, authentication logs) in real-time, organizations can identify malicious activities such as unauthorized access attempts, malware infections, and data breaches.

- Incident Investigation: Event stream processing enables security analysts to quickly investigate security incidents by correlating data from multiple sources. This provides a comprehensive view of the incident and helps to determine the scope and impact of the attack.

- Example: A financial institution uses event stream processing to monitor security logs. When a suspicious login attempt is detected, the system triggers an alert and correlates the login attempt with other relevant data, such as firewall logs and network traffic logs. This enables security analysts to quickly investigate the incident and take appropriate action, such as blocking the IP address or isolating the affected system.
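The correlation step in this example can be sketched as a join on source IP within a time window. An IP is flagged once it accumulates several failed logins in a short period, and firewall events from the same IP around that burst are then attached. The event tuples, the five-failures-in-60-seconds rule, and the function name are hypothetical. Authentication events are assumed to arrive sorted by timestamp.

```python
from collections import defaultdict, deque

FAILED_LOGIN_LIMIT = 5  # hypothetical: alert on the 5th failure...
WINDOW_S = 60           # ...from the same IP within 60 seconds


def correlate(auth_events, firewall_events):
    """auth_events: time-sorted (ts, ip, success) tuples; firewall_events: (ts, ip, action).

    Returns one alert per IP that reaches the failed-login limit, with the firewall
    events from that IP between one window before the first counted failure and the alert.
    """
    failures = defaultdict(deque)  # ip -> timestamps of recent failed logins
    alerted = set()
    alerts = []
    for ts, ip, success in auth_events:
        if success or ip in alerted:
            continue
        window = failures[ip]
        window.append(ts)
        while window and window[0] <= ts - WINDOW_S:
            window.popleft()
        if len(window) >= FAILED_LOGIN_LIMIT:
            alerted.add(ip)
            start = window[0]
            related = [e for e in firewall_events if e[1] == ip and start - WINDOW_S <= e[0] <= ts]
            alerts.append({"ip": ip, "first_failure": start, "last_failure": ts, "firewall": related})
    return alerts


auth = [(t, "198.51.100.9", False) for t in range(0, 50, 10)] + [(55, "192.0.2.1", True)]
firewall = [(-200, "198.51.100.9", "allow"), (-30, "198.51.100.9", "port_scan"), (20, "192.0.2.1", "allow")]
for alert in correlate(auth, firewall):
    print(alert)
```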

Industry-Specific Scenario: Healthcare

In the healthcare industry, the ability to treat logs as event streams can significantly improve patient care and operational efficiency.

- Use Case: Monitoring patient data in real-time to detect anomalies.

- Industry: Healthcare.

- Benefits:

- Early Detection of Critical Events: Real-time analysis of patient data streams, including vital signs, medication administration logs, and lab results, can identify early warning signs of critical events such as sepsis, cardiac arrest, or adverse drug reactions.

- Improved Patient Outcomes: By detecting and responding to critical events quickly, healthcare providers can improve patient outcomes and reduce mortality rates.

- Reduced Costs: Early detection and intervention can reduce the need for costly emergency room visits and hospitalizations.

- Example: A hospital uses event stream processing to monitor patient vital signs in real-time. If a patient’s heart rate suddenly spikes and their oxygen saturation drops, the system immediately alerts the nursing staff, enabling them to intervene quickly and potentially save the patient’s life.
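The vital-signs example is a small complex-event pattern: two abnormal readings from different signals must occur close together in time. This is a minimal sketch. The thresholds (heart rate above 120 bpm, oxygen saturation below 90%) and the 60-second pairing window are hypothetical placeholders for illustration, not clinical criteria. Readings are assumed to arrive in timestamp order.

```python
from dataclasses import dataclass
from typing import Optional

HR_HIGH_BPM = 120    # hypothetical threshold
SPO2_LOW_PCT = 90    # hypothetical threshold
PAIR_WINDOW_S = 60   # both abnormalities must fall within this many seconds


@dataclass
class VitalsMonitor:
    """Remembers the latest abnormal reading of each signal for one patient."""

    last_high_hr: Optional[float] = None
    last_low_spo2: Optional[float] = None

    def observe(self, ts: float, signal: str, value: float) -> bool:
        """Feed one reading ("hr" or "spo2"); return True if it completes the pattern."""
        if signal == "hr" and value > HR_HIGH_BPM:
            self.last_high_hr = ts
            other = self.last_low_spo2
        elif signal == "spo2" and value < SPO2_LOW_PCT:
            self.last_low_spo2 = ts
            other = self.last_high_hr
        else:
            return False  # a normal reading cannot complete the pattern
        return other is not None and ts - other <= PAIR_WINDOW_S


patient_monitor = VitalsMonitor()
readings = [(0, "hr", 85), (10, "spo2", 97), (20, "hr", 135), (45, "spo2", 86), (300, "spo2", 85)]
alert_times = [ts for ts, signal, value in readings if patient_monitor.observe(ts, signal, value)]
print(alert_times)  # [45]
```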

Final Review

In conclusion, treating logs as event streams represents a significant advancement in data analysis and operational efficiency. By embracing the methodologies outlined, organizations can unlock valuable insights, proactively address issues, and fortify their security posture. This journey, starting with understanding the fundamentals and culminating in advanced analytics, offers a transformative approach to data management. Implementing these strategies will allow you to turn your logs from static records into a dynamic, intelligent source of business value.

Common Queries

What are the primary benefits of treating logs as event streams?

Viewing logs as event streams enables real-time monitoring, faster anomaly detection, proactive issue resolution, improved operational efficiency, and data-driven decision-making.

Which stream processing framework is best for handling high-volume log data?

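Apache Kafka is often favored for its durable, partitioned architecture and its ability to handle massive data volumes at high throughput, making it suitable for large-scale log processing. Strictly speaking, Kafka is an event streaming platform that stores and transports events; the processing itself is usually done with Kafka Streams or a framework such as Apache Flink that consumes from Kafka.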

How does data enrichment enhance the value of log events?

Data enrichment adds context to log events by incorporating external data sources like geolocation databases or user profiles, providing a more comprehensive understanding of the events.
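Enrichment is often implemented as a lookup performed on each event as it passes through the pipeline. This minimal sketch uses in-memory tables. `USER_PROFILES` and `GEO_PREFIXES` are hypothetical stand-ins for a user directory and a geolocation database, and the networks used are reserved documentation ranges.

```python
import ipaddress

# Hypothetical stand-ins for a user directory and a geolocation database.
USER_PROFILES = {"alice": {"department": "finance", "role": "analyst"}}
GEO_PREFIXES = {
    ipaddress.ip_network("203.0.113.0/24"): "NL",
    ipaddress.ip_network("198.51.100.0/24"): "US",
}


def enrich(event: dict) -> dict:
    """Return a copy of the event with profile and geolocation fields added when known."""
    enriched = dict(event)  # leave the original event unmodified
    profile = USER_PROFILES.get(event.get("user"))
    if profile:
        enriched.update({f"user_{k}": v for k, v in profile.items()})
    ip = event.get("src_ip")
    if ip:
        addr = ipaddress.ip_address(ip)
        enriched["geo_country"] = next(
            (country for net, country in GEO_PREFIXES.items() if addr in net), "unknown"
        )
    return enriched


print(enrich({"user": "alice", "src_ip": "203.0.113.7", "action": "export"}))
```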

What are the key security considerations when processing logs as event streams?

Security considerations include access control, data encryption, data masking, and implementing robust authentication and authorization mechanisms to protect sensitive log data.