Embarking on the journey of establishing a private Docker registry unlocks a world of enhanced security, control, and efficiency for your containerized applications. This guide delves into the intricacies of setting up your own private registry, offering a step-by-step approach to help you navigate the process with confidence. From understanding the fundamental benefits to mastering advanced configurations, we’ll equip you with the knowledge to securely store, manage, and distribute your Docker images.

We’ll explore the advantages of private registries over public ones, emphasizing security scenarios where they become indispensable. You’ll learn about various implementation options, from the straightforward Docker Registry v2 to more sophisticated solutions like Harbor and Nexus Repository Manager. Our exploration includes practical setup instructions, security configurations, and strategies for seamless integration with your CI/CD pipelines, ensuring a robust and streamlined workflow.

Introduction to Private Docker Registries

A private Docker registry serves as a secure and controlled repository for storing and managing Docker images. It provides a centralized location for teams to share, version, and deploy containerized applications, offering greater control over image distribution and security. Understanding the benefits and use cases of a private registry is crucial for organizations leveraging Docker in their development and deployment pipelines.

Core Benefits of Using a Private Docker Registry

Utilizing a private Docker registry offers several advantages over relying solely on public registries. These benefits contribute to improved security, control, and efficiency within the software development lifecycle.

- Enhanced Security: Private registries allow organizations to control who can access and pull images. This is particularly important for sensitive applications or those containing proprietary code. Access can be restricted based on user roles, project affiliations, or network locations. This controlled access significantly reduces the risk of unauthorized image access and potential vulnerabilities.

- Improved Control and Compliance: Organizations can enforce image scanning and vulnerability analysis before images are deployed. This ensures that only approved and secure images are used. This level of control is critical for meeting regulatory requirements and internal security policies.

- Increased Efficiency: Private registries improve build and deployment times by reducing reliance on external network connections to pull images from public registries. Caching images locally also speeds up deployments, especially in environments with limited bandwidth or high latency. This leads to faster iteration cycles and improved developer productivity.

- Image Versioning and Management: Private registries facilitate better image versioning and management. Organizations can track image changes, roll back to previous versions, and easily manage image dependencies. This is crucial for maintaining a stable and reliable deployment environment.

- Offline Availability: Private registries can be configured to operate within a local network, making images available even when there’s no internet connection. This is particularly beneficial for environments with limited or unreliable internet access, such as air-gapped networks.

Comparison of Private vs. Public Docker Registries

Understanding the key differences between private and public Docker registries helps in making informed decisions about which option best suits an organization’s needs. The following table summarizes the key differences:

| Feature | Public Docker Registry (e.g., Docker Hub) | Private Docker Registry |
|---|---|---|
| Accessibility | Publicly accessible | Restricted access (authentication required) |
| Security | Less control over image access | Enhanced security through access control and image scanning |
| Control | Limited control over image content and lifecycle | Full control over image content, versioning, and lifecycle |
| Cost | Free (with limitations) or paid plans | Self-hosted (free) or a paid service |
| Use Cases | Sharing open-source images, general-purpose images | Storing proprietary images, managing internal applications, meeting compliance requirements |

Scenarios Where a Private Registry is Essential for Security

In certain situations, using a private Docker registry is not just recommended, but a critical security requirement. These scenarios highlight the importance of a private registry for protecting sensitive information and maintaining a secure environment.

- Storing Proprietary Code: When an application contains proprietary code or intellectual property, a private registry is essential. This prevents unauthorized access to the source code and protects against reverse engineering or intellectual property theft. For example, a financial services company developing a trading platform would need a private registry to safeguard its algorithms and financial models.

- Handling Sensitive Data: Applications that process sensitive data, such as Personally Identifiable Information (PII) or financial data, must be stored in a secure environment. A private registry allows organizations to implement strict access controls, image scanning, and vulnerability management, ensuring that sensitive data is protected from unauthorized access and breaches. Consider a healthcare provider storing patient records in a containerized application; a private registry is crucial for HIPAA compliance.

- Meeting Compliance Requirements: Many industries have strict compliance requirements for data security and access control. A private registry provides the necessary infrastructure to meet these requirements. For example, organizations dealing with government data must adhere to regulations such as FedRAMP or similar standards, which mandate the use of secure and controlled environments for storing and managing data.

- Air-Gapped Environments: In environments with no internet access, such as those used by government agencies or defense contractors, a private registry is the only option for distributing and managing Docker images. This ensures that images can be deployed and updated without relying on external network connections.

- Protecting Against Supply Chain Attacks: A private registry allows organizations to control the origin and integrity of their Docker images, reducing the risk of supply chain attacks. By verifying the source of images and scanning them for vulnerabilities, organizations can prevent malicious code from entering their environment. This is particularly important for applications that rely on third-party libraries or dependencies.

Choosing a Docker Registry Implementation

Choosing the right Docker registry implementation is crucial for the success of your private registry. The selection process should be based on your specific needs, team size, security requirements, and budget. Several options are available, each with its own strengths and weaknesses. Careful consideration of these factors will ensure you choose a solution that meets your current and future requirements.

Different Types of Private Docker Registry Implementations

Several options are available when choosing a private Docker registry, each with its own set of features and capabilities. These implementations range from the simple and straightforward to the complex and feature-rich. Understanding the different options available allows you to make an informed decision.

| Implementation | Pros | Cons |
|---|---|---|
| Docker Registry v2 | | |
| Harbor | | |
| Nexus Repository Manager | | |

Key Features to Consider When Selecting a Registry Solution

Selecting a Docker registry solution involves considering several key features that align with your specific requirements. These features significantly impact the registry’s usability, security, and overall performance.

- Security: Security is paramount when choosing a registry solution. This includes authentication, authorization, image signing, and vulnerability scanning. Ensure the registry supports the necessary security features to protect your images.

- Scalability: Consider the potential growth of your organization and the number of images you will store. The registry should be able to scale to handle increased traffic and storage demands. Horizontal scaling, allowing for the addition of more nodes to the cluster, is a key feature for high availability.

- User Interface (UI): A user-friendly UI simplifies image management, especially for teams that are new to Docker. A well-designed UI can improve efficiency and reduce the learning curve.

- Integration with CI/CD Pipelines: The registry should integrate seamlessly with your existing CI/CD pipelines. This integration streamlines the build, test, and deployment processes. Ensure compatibility with your preferred CI/CD tools.

- Storage Backend: The storage backend determines where your images are stored. Consider the performance, scalability, and cost of different storage options, such as local disk, cloud object storage (e.g., AWS S3, Google Cloud Storage), or self-hosted object storage.

- High Availability: High availability ensures that your registry remains accessible even in the event of hardware failures. Implement features like replication and load balancing to achieve high availability.

- Vulnerability Scanning: Built-in vulnerability scanning identifies potential security risks in your images. This allows you to address vulnerabilities before they are deployed to production. Tools like Clair or Trivy can be integrated to perform these scans.

- Role-Based Access Control (RBAC): RBAC allows you to define user roles and permissions, controlling who can access and modify images. This enhances security and helps to prevent unauthorized access.

- Image Signing: Image signing verifies the integrity of your images and ensures that they have not been tampered with. This is a critical security feature, particularly in production environments.

- Replication: Image replication enables you to copy images between registries, improving availability and performance in geographically distributed environments.

Setting up a Basic Docker Registry using Docker Registry v2

Now that we’ve explored the rationale behind private Docker registries and considered different implementation options, let’s dive into setting up a fundamental Docker Registry v2 using Docker Compose. This setup provides a straightforward, self-contained environment suitable for initial testing and development. It’s a valuable starting point before moving on to more complex, production-ready deployments. This section will guide you through the process, covering persistent storage configuration and providing a complete Docker Compose file for immediate use.

Setting up a Basic Docker Registry v2 using Docker Compose

Setting up a basic Docker Registry v2 with Docker Compose involves defining the registry service within a `docker-compose.yml` file. This file specifies the image to use, the ports to expose, and any volumes for persistent storage. Docker Compose simplifies the process of managing the registry’s dependencies and configuration. To begin, we need to create a `docker-compose.yml` file. The following steps outline the necessary configurations:

- Create the `docker-compose.yml` file: Start by creating a file named `docker-compose.yml` in a suitable directory. This file will contain the definition of our registry service.

- Define the `registry` service: Within the `docker-compose.yml` file, define a service named `registry`. This service will use the official Docker Registry v2 image from Docker Hub. Specify the image using the `image` key: `image: registry:2`.

- Expose the registry’s port: The Docker Registry listens on port 5000 by default. Use the `ports` key to map the host’s port 5000 to the container’s port 5000: `ports: ["5000:5000"]`. This allows you to access the registry from your host machine.

- Configure persistent storage: To ensure that the images stored in the registry persist even after the container is stopped or restarted, we’ll configure persistent storage. This is accomplished using Docker volumes. Add a `volumes` section to the `registry` service: `volumes: ["registry-data:/var/lib/registry"]`. This mounts a named volume called `registry-data` inside the container at `/var/lib/registry`, which is where the registry stores the image data.

- Define the named volume: At the top level of the `docker-compose.yml` file, define the `registry-data` volume using the `volumes` key. This ensures the volume is created and managed by Docker Compose.

Creating the Docker Compose file for a simple registry setup

The following is the complete `docker-compose.yml` file that implements the steps outlined above. This file creates a functional Docker Registry v2 that persists image data across container restarts.

```yaml
version: "3.9"
services:
  registry:
    image: registry:2
    ports:
      - "5000:5000"
    volumes:
      - registry-data:/var/lib/registry
volumes:
  registry-data:
```

To use this file:

- Save the file: Save the above content into a file named `docker-compose.yml`.

- Navigate to the directory: Open a terminal or command prompt and navigate to the directory where you saved the `docker-compose.yml` file.

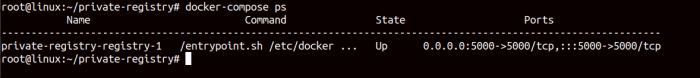

- Start the registry: Run the command `docker-compose up -d`. The `-d` flag runs the containers in detached mode, meaning they will run in the background. Docker Compose will download the registry image (if it’s not already present), create the named volume, and start the registry container.

- Verify the registry is running: Use the command `docker ps` to list running containers. You should see a container named after the project and service, such as `your-project-name-registry-1` with Compose v2 or `your-project-name_registry_1` with the older standalone `docker-compose` (where `your-project-name` is based on the directory name), with port 5000 published.

After completing these steps, your basic Docker Registry v2 setup is ready for use. You can now push and pull images from your private registry. Remember to tag your images with the correct registry address (e.g., `localhost:5000/my-image:latest`) before pushing them. This simple setup provides a solid foundation for exploring the capabilities of private Docker registries.
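Before relying on the registry, it can help to run a quick end-to-end check. The sketch below assumes the Compose setup above is listening on `localhost:5000` over plain HTTP with no authentication, and that `curl` is installed; the function names are made up for this example. It pushes the small public `hello-world` image and then asks the registry's `/v2/_catalog` endpoint, which returns the repository list as JSON, whether the push arrived.

```shell
#!/bin/sh
# Smoke test for the basic registry. Assumes plain HTTP on localhost:5000
# and no authentication. Function names are illustrative only.
set -eu

# Succeed if the catalog JSON contains the quoted repository name.
# This is a plain substring match, good enough for a smoke test.
catalog_has_repo() {
  local catalog_json="$1" repo="$2"
  case "$catalog_json" in
    *"\"$repo\""*) return 0 ;;
    *) return 1 ;;
  esac
}

# Push a tiny image, then confirm /v2/_catalog lists it.
smoke_test_registry() {
  local registry="${1:-localhost:5000}" catalog
  docker pull hello-world:latest
  docker tag hello-world:latest "$registry/hello-world:latest"
  docker push "$registry/hello-world:latest"
  catalog="$(curl -fsS "http://$registry/v2/_catalog")"
  if catalog_has_repo "$catalog" hello-world; then
    echo "OK: $registry serves hello-world"
  else
    echo "FAIL: hello-world missing from catalog: $catalog" >&2
    return 1
  fi
}
```

After sourcing the script, run `smoke_test_registry localhost:5000`. The catalog check is a substring match rather than a JSON parser, which keeps the script dependency-free.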

Configuring Security for Your Registry

Securing your private Docker registry is paramount to protect your container images and the sensitive information they may contain. Without proper security measures, your registry becomes a potential target for unauthorized access, image tampering, and data breaches. Implementing robust security practices is essential for maintaining the integrity, confidentiality, and availability of your containerized applications and infrastructure.

Importance of Securing Your Private Registry

Securing a private Docker registry protects your intellectual property, prevents malicious image deployment, and ensures compliance with security regulations. The images stored in your registry often contain proprietary code, configuration data, and sensitive dependencies.

- Protecting Intellectual Property: Unauthorized access could expose the proprietary code packaged in your images to theft. Securing your registry helps prevent such intellectual property theft.

- Preventing Malicious Image Deployment: Attackers could potentially inject malicious code into your images if the registry is not secure. Secure registry configuration ensures the integrity of images.

- Ensuring Compliance: Security regulations and industry best practices often mandate secure storage and access controls for sensitive data. Properly securing your registry helps you meet these requirements.

- Maintaining Image Integrity: Secure registries ensure images are not tampered with during storage or transfer. This is crucial for maintaining the reliability and stability of your applications.

Enabling TLS/SSL for Secure Communication

Enabling TLS/SSL (Transport Layer Security/Secure Sockets Layer) encrypts the communication between your Docker client and the registry. This prevents eavesdropping and protects the data transmitted, including image layers and metadata. To enable TLS/SSL, you’ll need a valid SSL/TLS certificate. You can obtain a certificate from a Certificate Authority (CA) or generate a self-signed certificate for testing purposes. Here’s how to configure a Docker registry using a self-signed certificate:

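- Generate a Self-Signed Certificate: You can use OpenSSL to generate a self-signed certificate and private key. This example creates a certificate valid for one year. Recent Docker versions reject certificates that name the host only in the Common Name (CN) field, so the command also adds a Subject Alternative Name (`-addext` requires OpenSSL 1.1.1 or later).

openssl req -newkey rsa:4096 -nodes -sha256 -days 365 -subj '/CN=your-registry.example.com' -addext 'subjectAltName=DNS:your-registry.example.com' -x509 -out cert.pem -keyout key.pem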

- Configure the Docker Registry: Modify your Docker registry’s configuration to use the generated certificate and key. This usually involves specifying the paths to the certificate and key files in your registry’s configuration file (e.g., `config.yml` for Docker Registry v2). The configuration varies depending on the registry implementation. For example, when running a Docker Registry v2 container, you might use environment variables:

docker run -d -p 5000:5000 --name registry -v /path/to/certs:/certs -e REGISTRY_HTTP_TLS_CERTIFICATE=/certs/cert.pem -e REGISTRY_HTTP_TLS_KEY=/certs/key.pem registry:2

- Trust the Certificate on the Docker Client: Because you are using a self-signed certificate, your Docker client will not inherently trust it. You must configure your Docker client to trust the certificate. This typically involves copying the `cert.pem` file to a trusted location on your Docker client’s host machine (e.g., `/etc/docker/certs.d/your-registry.example.com:5000/ca.crt`). Docker does not normally need to be restarted to pick up certificates placed there.

Important Considerations: Self-signed certificates are suitable for testing and development environments, but are not recommended for production use. In production, use certificates issued by a trusted Certificate Authority (CA) to ensure proper trust and avoid security warnings.
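Installing the certificate on each client depends on one detail that is easy to get wrong: the directory under `/etc/docker/certs.d/` must match the exact `host:port` used in image references (the port is omitted only for the default port 443). The sketch below assumes a Linux Docker host with `sudo` and `openssl` available; the helper names are hypothetical. The last helper prints the certificate's Subject Alternative Name entries, which is useful when the client reports an x509 hostname error.

```shell
#!/bin/sh
# Trust a self-signed registry certificate on a Linux Docker client.
# Helper names are hypothetical; adjust paths to your environment.
set -eu

# Docker looks for per-registry CAs in /etc/docker/certs.d/<host>[:<port>]/.
certs_d_dir() {
  local host="$1" port="${2:-}"
  if [ -n "$port" ]; then
    printf '/etc/docker/certs.d/%s:%s\n' "$host" "$port"
  else
    printf '/etc/docker/certs.d/%s\n' "$host"
  fi
}

# Copy the certificate into place as ca.crt (requires root).
install_registry_ca() {
  local cert_file="$1" host="$2" port="${3:-}" dir
  dir="$(certs_d_dir "$host" "$port")"
  sudo mkdir -p "$dir"
  sudo cp "$cert_file" "$dir/ca.crt"
}

# Print the SAN entries so you can confirm the registry hostname is listed.
show_cert_san() {
  openssl x509 -in "$1" -noout -text | grep -A1 'Subject Alternative Name' || {
    echo "No subjectAltName found in $1" >&2
    return 1
  }
}
```

For the example above, run `install_registry_ca cert.pem your-registry.example.com 5000` on every client host that needs to reach the registry.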

Implementing User Authentication and Authorization

User authentication and authorization are critical for controlling access to your private registry. Authentication verifies the identity of the user, while authorization determines what actions that user is permitted to perform. There are several methods to implement authentication and authorization. The choice of method depends on your specific requirements and infrastructure.

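- Using Basic Authentication: Docker Registry v2 supports basic authentication through an htpasswd file that stores usernames and bcrypt-hashed passwords (the registry accepts only bcrypt hashes). This method is simple to implement but offers less control than token-based approaches. Because basic authentication sends credentials with every request, the registry documentation requires TLS to be configured before authentication will work.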

docker run -d -p 5000:5000 --name registry -e REGISTRY_AUTH=htpasswd -e REGISTRY_AUTH_HTPASSWD_PATH=/auth/htpasswd -e REGISTRY_AUTH_HTPASSWD_REALM="Registry Realm" -v /path/to/auth:/auth registry:2

You can then use the `htpasswd` utility to create and manage the password file.

- Using OAuth/OpenID Connect: Integrating with an OAuth/OpenID Connect provider (e.g., Google, GitHub, Active Directory) lets users authenticate with their existing accounts, which provides a more secure and user-friendly experience. Docker Registry v2 does not speak OpenID Connect directly; this is usually achieved through an external token service or a registry such as Harbor that has built-in OIDC support.

- Using Token-Based Authentication: An external authorization service issues short-lived tokens to authenticated users, and the registry validates those tokens on every request. This is a common and secure approach. Docker Registry v2 supports it through its `token` auth configuration, but it only validates tokens; issuing them is the job of the separate token server.

- Implementing Role-Based Access Control (RBAC): RBAC allows you to define roles with specific permissions. Users are then assigned to these roles, which controls their access to registry resources (e.g., push images, pull images, delete images). This provides fine-grained control over access. You can define roles such as ‘administrator’, ‘developer’, and ‘read-only’. Docker Registry v2 has no built-in RBAC; it comes from the token service or from a full-featured registry such as Harbor or Nexus Repository Manager.
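For the basic authentication option, the registry expects an htpasswd file containing bcrypt hashes, and the registry documentation generates entries with the `htpasswd` binary shipped in the `httpd` image. The sketch below wraps that approach and adds a check that every entry is bcrypt; the function names and the default `auth/htpasswd` path are assumptions chosen to line up with the `-v /path/to/auth:/auth` mount used earlier.

```shell
#!/bin/sh
# Create and sanity-check an htpasswd file for the registry's htpasswd auth.
# Function names and file paths are illustrative.
set -eu

# Append a bcrypt entry for USER to FILE using htpasswd from the httpd:2 image.
# -B selects bcrypt, -b takes the password from the command line,
# -n prints the entry to stdout instead of editing a file.
add_registry_user() {
  local user="$1" password="$2" file="${3:-auth/htpasswd}"
  mkdir -p "$(dirname "$file")"
  docker run --rm --entrypoint htpasswd httpd:2 -Bbn "$user" "$password" >> "$file"
}

# Succeed only if a line looks like "user:$2y$NN$..." (a bcrypt hash).
is_bcrypt_entry() {
  case "$1" in
    [!:]*:\$2[aby]\$[0-9][0-9]\$*) return 0 ;;
    *) return 1 ;;
  esac
}

# Check every non-empty line of an htpasswd file (htpasswd -n adds a blank line).
check_htpasswd_file() {
  local line
  while IFS= read -r line; do
    if [ -z "$line" ]; then
      continue
    fi
    is_bcrypt_entry "$line" || { echo "Not bcrypt: ${line%%:*}" >&2; return 1; }
  done < "$1"
  return 0
}
```

`add_registry_user alice 's3cret' auth/htpasswd` appends one user. Passing the password as an argument exposes it in the process list and shell history, so run this on a trusted machine.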

Pushing and Pulling Images from Your Registry

Now that your private Docker registry is set up and potentially secured, the next crucial step is to interact with it by pushing and pulling Docker images. This process allows you to store and retrieve your custom-built images, making them accessible across your infrastructure. This section details the steps involved in these operations and provides guidance for troubleshooting common issues.

Tagging and Pushing Docker Images

Before pushing images, they must be tagged appropriately to reflect the location of your private registry. The tag includes the registry’s address, the repository name, and the image tag (e.g., version). This tagging process is essential for Docker to correctly identify and store the image within your registry. Here’s how to tag and push images:

- Tagging an Image: Use the `docker tag` command. The general format is:

docker tag <image_name>:<tag> <registry_address>/<repository_name>/<image_name>:<tag>

For example, if your registry is running at `registry.example.com:5000` and you want to push an image named `my-app` with the tag `1.0`, the command would be:

docker tag my-app:1.0 registry.example.com:5000/my-project/my-app:1.0

- Pushing the Image: Once tagged, push the image to your registry using the `docker push` command:

docker push <registry_address>/<repository_name>/<image_name>:<tag>

Using the previous example, the command would be:

docker push registry.example.com:5000/my-project/my-app:1.0

Docker will then upload the image layers to your registry.
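The tag and push commands share the same fully qualified reference, so a small helper keeps them consistent. This is a sketch; `image_ref` and `tag_and_push` are illustrative names, and defaulting to `latest` when no tag is given is an assumption made for this example.

```shell
#!/bin/sh
# Build a fully qualified image reference and push a local image to it.
# Function names are illustrative.
set -eu

# Print <registry_address>/<repository_name>/<image_name>:<tag>.
image_ref() {
  local registry="$1" repo="$2" image="$3" tag="${4:-latest}"
  printf '%s/%s/%s:%s\n' "$registry" "$repo" "$image" "$tag"
}

# Tag the local image <image>:<tag> for the private registry and push it.
tag_and_push() {
  local registry="$1" repo="$2" image="$3" tag="$4" target
  target="$(image_ref "$registry" "$repo" "$image" "$tag")"
  docker tag "$image:$tag" "$target"
  docker push "$target"
}
```

`tag_and_push registry.example.com:5000 my-project my-app 1.0` performs the same tag and push as the example commands.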

Pulling Images from Your Registry

Pulling images from your private registry is straightforward once the images have been pushed. The Docker client needs to be configured to trust your registry, especially if it uses self-signed certificates or is not publicly accessible. To pull an image:

- Pulling the Image: Use the `docker pull` command, specifying the full image name, including the registry address and tag.

docker pull <registry_address>/<repository_name>/<image_name>:<tag>

For example:

docker pull registry.example.com:5000/my-project/my-app:1.0

- Docker Client Configuration: If your registry uses a self-signed certificate, you may need to configure the Docker client to trust the certificate. This usually involves copying the certificate to a specific location on the Docker host. The exact location depends on your operating system. For instance, on Linux you might place the certificate in `/etc/docker/certs.d/registry.example.com:5000/ca.crt`.
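A tag such as `1.0` can later be pointed at a different image. When a deployment must use exactly the image that was tested, you can pull by content digest (`name@sha256:...`) instead. The helpers below are a hypothetical sketch: one prints the repository digest Docker recorded after a pull, and the others turn a tagged reference plus a digest (for example, the one `docker push` prints) into a digest reference without mistaking the registry port for a tag.

```shell
#!/bin/sh
# Helpers for pinning images by digest. Function names are hypothetical.
set -eu

# Drop a trailing ":tag" without touching a registry port such as ":5000".
# Only a colon in the last path component is treated as a tag separator.
strip_tag() {
  local ref="$1" last
  last="${ref##*/}"
  case "$last" in
    *:*) printf '%s\n' "${ref%:*}" ;;
    *) printf '%s\n' "$ref" ;;
  esac
}

# Combine a tagged reference and a digest into an immutable reference.
digest_ref() {
  printf '%s@%s\n' "$(strip_tag "$1")" "$2"
}

# Pull a tagged image and print the first repository digest Docker recorded.
pull_and_print_digest() {
  docker pull "$1" >/dev/null
  docker image inspect --format '{{index .RepoDigests 0}}' "$1"
}
```

`docker image inspect` lists one `RepoDigests` entry per registry the image came from, so taking the first entry assumes the image was pulled from a single registry.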

Troubleshooting Common Push/Pull Errors

Several issues can arise during the push and pull processes. Understanding these errors and their solutions is crucial for maintaining a functional Docker registry. Here are some common errors and their troubleshooting steps:

- Connection Refused or Timeout Errors: These errors often indicate that the Docker client cannot reach the registry.

- Verify Registry Availability: Ensure the registry is running and accessible from the Docker client host. Check network connectivity using tools like `ping` or `telnet`.

- Firewall Rules: Check firewall rules on both the Docker client and the registry server to ensure traffic on the registry’s port (e.g., 5000) is allowed.

- DNS Resolution: Confirm the Docker client can resolve the registry’s hostname.

- Unauthorized or Authentication Errors: These errors usually mean that the Docker client is not authenticated to the registry.

- Docker Login: If your registry requires authentication, use the `docker login` command:

docker login <registry_address>

Then, enter your username and password when prompted.

- Registry Configuration: Ensure the registry is configured correctly to accept the authentication method used by the Docker client (e.g., basic authentication, token-based authentication).

- Credentials: Verify the username and password are correct.

- Certificate Errors: These errors occur when the Docker client does not trust the registry’s certificate.

- Trusting the Certificate: As described earlier, copy the registry’s CA certificate to the appropriate location on the Docker client host.

- Certificate Verification: Ensure the certificate is valid and not expired.

- Image Not Found Errors: These errors usually occur if the image name or tag is incorrect.

- Verify the Image Name and Tag: Double-check the image name and tag used in the `docker push` and `docker pull` commands. Ensure they match the image’s tag in the registry.

- Repository Path: Confirm that the repository path (e.g., `my-project/my-app`) matches what was pushed. The registry’s `/v2/_catalog` endpoint lists the repositories it holds.

- Layer Upload Errors: These errors can happen during the push process if there are issues with the image layers.

- Docker Daemon Status: Ensure the Docker daemon is running correctly on both the client and server.

- Disk Space: Check for sufficient disk space on both the client and the server.

- Network Stability: Ensure a stable network connection during the push operation. Large images can take a long time to upload, and a dropped connection can cause issues.
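Many of the errors above can be told apart by probing the registry's API version check endpoint, `GET /v2/`, which answers `200` when no authentication is needed and `401` when it is. The sketch below assumes the registry serves HTTPS and that `curl` is installed; the function names and messages are illustrative. `curl` reports status `000` when it gets no HTTP response at all, which points at DNS, firewall, or certificate problems rather than the registry itself.

```shell
#!/bin/sh
# Quick registry diagnostics. Function names and messages are illustrative.
set -eu

# Translate the HTTP status of GET /v2/ into a likely diagnosis.
explain_v2_status() {
  case "$1" in
    200) echo "reachable; no authentication required" ;;
    401) echo "reachable; authentication required (run docker login)" ;;
    000) echo "no HTTP response: check DNS, firewall, port, or TLS trust" ;;
    404) echo "HTTP server answered but /v2/ is missing: is this a registry?" ;;
    *)   echo "unexpected status $1: check the registry logs" ;;
  esac
}

# Probe https://HOST:PORT/v2/ and print the diagnosis.
# An optional second argument is a CA file for self-signed certificates.
probe_registry() {
  local addr="$1" ca="${2:-}" code
  if [ -n "$ca" ]; then
    code="$(curl -s -o /dev/null -w '%{http_code}' --cacert "$ca" "https://$addr/v2/" || true)"
  else
    code="$(curl -s -o /dev/null -w '%{http_code}' "https://$addr/v2/" || true)"
  fi
  echo "$addr: $(explain_v2_status "$code")"
}
```

Use `probe_registry registry.example.com:5000` for a CA-signed certificate, or `probe_registry registry.example.com:5000 cert.pem` to trust a self-signed one during the check.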

Advanced Registry Configuration: Storage Backends

Docker registries, by default, utilize a local filesystem for storing image layers and metadata. However, for production environments, particularly those needing scalability, high availability, and durability, this default storage mechanism is often insufficient. Leveraging cloud-based or network-attached storage solutions offers significant advantages. This section explores various storage backends compatible with Docker Registry v2, focusing on their configuration and benefits.

Different Storage Backends Available for Docker Registries

Choosing the right storage backend is crucial for the performance, reliability, and cost-effectiveness of your Docker registry. Several options are available, each with its own strengths and weaknesses.

- Local Filesystem: The default storage backend, suitable for testing and development. It’s simple to set up but lacks scalability and redundancy. Data is stored directly on the host’s filesystem.

- Amazon S3: A popular choice for cloud-based storage. S3 provides excellent scalability, durability, and cost-effectiveness. Images are stored as objects in an S3 bucket.

- Google Cloud Storage (GCS): Similar to S3, GCS offers scalable and reliable object storage. It’s a good option for users within the Google Cloud ecosystem. Images are stored as objects in a GCS bucket.

- Microsoft Azure Blob Storage: Azure Blob Storage provides a cost-effective and scalable object storage solution within the Microsoft Azure cloud. Images are stored as blobs within an Azure storage account.

- OpenStack Swift: An open-source object storage system that provides a scalable and redundant storage solution. It’s often used in private cloud environments.

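- Ceph: A distributed storage system that offers high performance and scalability. It’s suitable for large-scale deployments and is typically used with the registry through its S3-compatible RADOS Gateway and the `s3` storage driver.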

- Other Storage Backends: Other storage can be used through custom configurations, for example by mounting a NAS share into the registry container and using the filesystem driver, or by pointing the `s3` driver at another provider’s S3-compatible API.

The selection of a storage backend should be based on factors such as cost, performance requirements, geographic distribution needs, and existing infrastructure. For instance, a small team might find local filesystem adequate initially, but as the team and image repository grow, migrating to a cloud-based solution like S3 becomes increasingly practical. Similarly, organizations already using Google Cloud might find GCS the most natural choice.

Configuration Steps for a Specific Storage Backend (Amazon S3)

Configuring an S3 backend for your Docker registry involves several steps. These steps ensure that the registry can securely store and retrieve image data from your S3 bucket. The specific configuration often involves setting environment variables.

- Create an S3 Bucket: Within your AWS account, create an S3 bucket. Choose a region appropriate for your users and ensure the bucket has the correct permissions. The bucket name must be globally unique.

- Create an IAM User with S3 Access: Create an IAM user with the necessary permissions to access the S3 bucket. This user will be used by the Docker registry to interact with the bucket. The user needs permissions to read, write, and delete objects within the bucket.

- Obtain AWS Credentials: Obtain the Access Key ID and Secret Access Key for the IAM user. These credentials will be used to configure the Docker registry.

- Configure the Docker Registry: Configure the Docker registry to use the S3 backend. This is typically done by setting environment variables when running the registry container.

- Test the Configuration: Push and pull images to verify that the registry is correctly using the S3 backend.

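The IAM user’s permissions are critical. Besides object-level actions such as `GetObject`, `PutObject`, and `DeleteObject`, the registry’s S3 driver documentation also lists bucket-level actions (`ListBucket`, `GetBucketLocation`, `ListBucketMultipartUploads`) and multipart-upload actions (`ListMultipartUploadParts`, `AbortMultipartUpload`). Incorrect permissions will prevent the registry from functioning correctly.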
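Writing the IAM policy by hand is error-prone. The function below is a sketch that prints a policy scoped to a single bucket; its action list follows the example in the registry's S3 storage driver documentation, so verify it against the documentation for the registry version you deploy. The IAM user name `registry-user` and policy name in the usage line are hypothetical.

```shell
#!/bin/sh
# Print an IAM policy that lets the registry use a single S3 bucket.
# Verify the action list against your registry version's S3 driver docs.
set -eu

s3_registry_policy() {
  local bucket="$1"
  # Bucket-level actions apply to the bucket ARN; object actions to bucket/*.
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:ListBucket",
        "s3:GetBucketLocation",
        "s3:ListBucketMultipartUploads"
      ],
      "Resource": "arn:aws:s3:::${bucket}"
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:GetObject",
        "s3:DeleteObject",
        "s3:ListMultipartUploadParts",
        "s3:AbortMultipartUpload"
      ],
      "Resource": "arn:aws:s3:::${bucket}/*"
    }
  ]
}
EOF
}
```

For example, `s3_registry_policy your-docker-registry-bucket > registry-policy.json`, then `aws iam put-user-policy --user-name registry-user --policy-name docker-registry-s3 --policy-document file://registry-policy.json`.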

Configuration Example for an S3 Backend Using Environment Variables

The following is an example Docker Compose configuration illustrating how to configure a Docker Registry v2 to use Amazon S3 as its storage backend. This configuration uses environment variables to provide the necessary S3 credentials and bucket information.

```yaml
version: "3.9"
services:
  registry:
    image: registry:2
    ports:
      - "5000:5000"
    environment:
      REGISTRY_STORAGE: s3
      REGISTRY_STORAGE_S3_REGION: us-east-1  # Replace with your S3 region
      REGISTRY_STORAGE_S3_BUCKET: your-docker-registry-bucket  # Replace with your bucket name
      REGISTRY_STORAGE_S3_ACCESSKEY: YOUR_AWS_ACCESS_KEY_ID  # Replace with your Access Key ID
      REGISTRY_STORAGE_S3_SECRETKEY: YOUR_AWS_SECRET_ACCESS_KEY  # Replace with your Secret Access Key
      # Optionally, set the endpoint if you're using a non-AWS, S3-compatible service.
      # Leave this out for AWS S3.
      # REGISTRY_STORAGE_S3_REGIONENDPOINT: https://s3.example.com
    restart: always
```

In this example:

- `REGISTRY_STORAGE: s3` specifies that the S3 storage driver should be used.

- `REGISTRY_STORAGE_S3_REGION` specifies the AWS region where your S3 bucket resides.

- `REGISTRY_STORAGE_S3_BUCKET` specifies the name of your S3 bucket.

- `REGISTRY_STORAGE_S3_REGIONENDPOINT` is optional and is only needed for an S3-compatible service (e.g., MinIO).

- `REGISTRY_STORAGE_S3_ACCESSKEY` and `REGISTRY_STORAGE_S3_SECRETKEY` are the AWS credentials.

Important: Never hardcode credentials directly in a production configuration file. Consider using secrets management tools or environment variable injection methods provided by your orchestration platform.

To deploy this configuration, you would save it as a `docker-compose.yml` file and then run `docker-compose up -d`. After the container starts, you should be able to push and pull images to and from your registry, and the image data will be stored in your S3 bucket.
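One way to keep the keys out of the file is Compose variable interpolation: Compose replaces `${VAR}` references in `docker-compose.yml` with values from the shell environment or a `.env` file, so the credential lines can read `REGISTRY_STORAGE_S3_ACCESSKEY: ${AWS_ACCESS_KEY_ID}` and `REGISTRY_STORAGE_S3_SECRETKEY: ${AWS_SECRET_ACCESS_KEY}`. Because Compose substitutes an empty string for unset variables, the wrapper below is a sketch that refuses to start the stack when those variables are empty. It checks the shell environment only, not a `.env` file, and the variable names are assumptions matching that substitution. On AWS you can also omit both keys and let the S3 driver use IAM role credentials.

```shell
#!/bin/sh
# Refuse to start the S3-backed registry without credentials in the environment.
# Variable and function names are assumptions for this example.
set -eu

# Fail with a message if any named environment variable is unset or empty.
require_env() {
  local name value missing=0
  for name in "$@"; do
    case "$name" in
      ''|[0-9]*|*[!A-Za-z0-9_]*) echo "invalid variable name: $name" >&2; return 2 ;;
    esac
    # Indirect lookup; the name was validated above, so eval is safe here.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing required variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Start the stack only when both AWS credentials are present.
start_s3_registry() {
  require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY
  docker compose up -d   # or docker-compose up -d with the standalone binary
}
```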

Automating Registry Operations

Automating operations is crucial for maintaining a healthy and efficient private Docker registry. It minimizes manual intervention, reduces the potential for human error, and ensures consistent performance. This section focuses on automating image pushing and pulling, garbage collection, and registry backups, providing a comprehensive approach to streamlining registry management.

Automating Image Pushing and Pulling

Automating image pushing and pulling streamlines the deployment process and ensures that the correct images are readily available. This automation is particularly beneficial in continuous integration and continuous deployment (CI/CD) pipelines. To automate these processes, a scripting approach is commonly used. Here’s an example using a simple Bash script:

```bash
#!/bin/bash
set -euo pipefail

# Configuration
REGISTRY_URL="your-registry.example.com:5000"
IMAGE_NAME="your-image-name"
IMAGE_TAG="latest"
# Credentials come from the environment (e.g., CI secrets), not the script.
USERNAME="${REGISTRY_USERNAME:?REGISTRY_USERNAME is not set}"
PASSWORD="${REGISTRY_PASSWORD:?REGISTRY_PASSWORD is not set}"

# Docker login (the password is read from stdin, not the command line)
echo "$PASSWORD" | docker login -u "$USERNAME" --password-stdin "$REGISTRY_URL"

# Build and push the image
docker build -t "$REGISTRY_URL/$IMAGE_NAME:$IMAGE_TAG" .
docker push "$REGISTRY_URL/$IMAGE_NAME:$IMAGE_TAG"

# Docker logout (optional, for security)
docker logout "$REGISTRY_URL"
echo "Image pushed successfully!"
```

This script performs the following actions:

- Configuration: Defines the registry URL, image name, and tag, and reads the username and password from the `REGISTRY_USERNAME` and `REGISTRY_PASSWORD` environment variables (names chosen for this example). It’s crucial to store sensitive information like passwords securely (e.g., using environment variables or a secrets management system) rather than in the script itself.

- Docker Login: Logs in to the private registry using the provided credentials. The `--password-stdin` option keeps the password out of the command line and shell history.

- Build and Push: Builds a Docker image, tags it with the registry URL and image name, and pushes it to the registry.

- Docker Logout (Optional): Logs out of the registry for added security after the push operation is complete.

This script can be integrated into CI/CD pipelines, such as those provided by Jenkins, GitLab CI, or GitHub Actions. The pipeline would trigger the script automatically upon code changes, building, tagging, and pushing the updated images to the registry. The process would typically involve the following steps:

- Code changes are committed and pushed to the repository.

- The CI/CD system detects the change and initiates a build process.

- The build process runs the Docker build script.

- The Docker image is built, tagged, and pushed to the private registry using the automated script.

- The updated image is then deployed to the target environment.

Organizing Automatic Garbage Collection

Garbage collection is essential for maintaining a clean and efficient Docker registry. It removes image layers and manifests that are no longer referenced, reclaiming storage space. Docker Registry v2 supports garbage collection, but it does not run on its own: you must trigger it manually or schedule it.

The process of automating garbage collection involves the following steps:

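- Configuration: Allow deletions in the registry configuration (`storage.delete.enabled: true`, or the `REGISTRY_STORAGE_DELETE_ENABLED=true` environment variable) so that image manifests can be deleted through the registry API; garbage collection then reclaims the layers that no remaining manifest references. Garbage collection works with the registry's storage drivers, including the local `filesystem` and `s3` backends.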

- Scheduling: Schedule the garbage collection process to run periodically. This can be done using tools like `cron` or systemd timers.

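- Execution: Run the registry's `garbage-collect` command against the registry's configuration file. The registry must be stopped or in read-only mode during the run; otherwise, layers uploaded while the collector is working can be deleted.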

Here's an example of a Bash script that can be used to trigger garbage collection:

```bash
#!/bin/bash
set -euo pipefail

# Configuration
REGISTRY_CONTAINER="registry"          # Name of the running registry container
REGISTRY_DATA_DIR="/var/lib/registry"  # Example, adjust based on your setup

# Stop the registry so nothing is pushed while garbage collection runs
docker stop "$REGISTRY_CONTAINER"

# Restart the registry when the script exits, even if garbage collection fails
trap 'docker start "$REGISTRY_CONTAINER"' EXIT

# Run garbage collection in a temporary container that mounts the same data
docker run --rm -v "$REGISTRY_DATA_DIR:/var/lib/registry" \
  registry:2 garbage-collect /etc/docker/registry/config.yml

echo "Garbage collection completed."
```

This script does the following:

- Configuration: Defines the name of the registry container and the host directory where the registry stores its data.

- Stop the Registry: Halts the registry service so that no images are pushed during the garbage collection process.

- Run Garbage Collection: Executes the `garbage-collect` command in a temporary Docker container. This command analyzes the registry's data and removes unused layers and manifests. The volume mount gives the container access to the registry's data, and `/etc/docker/registry/config.yml` is the default configuration file inside the `registry:2` image, which stores data under `/var/lib/registry`. If your registry uses a custom configuration (for example, S3 storage), mount that file or pass the same `REGISTRY_*` environment variables to this container instead. Adding `--dry-run` lists what would be deleted without deleting anything, and `--delete-untagged` also removes manifests that no tag references.

- Start the Registry: The `trap` restarts the registry when the script exits, whether or not garbage collection succeeded.

To schedule this script, you can use `cron`. For example, to run the script weekly on Sundays at 2:00 AM, add the following line to your crontab (using `crontab -e`):

```
0 2 * * 0 /path/to/your/garbage_collection_script.sh
```

The frequency of garbage collection depends on how often images are pushed and tags are deleted. For active registries, weekly or bi-weekly runs are often sufficient. Monitor the registry's storage usage and adjust the schedule as needed.

Strategies for Automated Registry Backups

Regular backups are critical for disaster recovery and data protection in a Docker registry. Backups ensure that you can restore your images and registry data in case of data loss, hardware failure, or other unforeseen events.

The backup strategy depends on the storage backend used by the registry. For example, with an S3-compatible storage backend, the backend's own features can simplify the backup process.

Here are some common backup strategies:

- Full Backups: Regularly create complete copies of the registry’s data. This ensures that you have a comprehensive snapshot of all images and metadata. This is typically the most straightforward approach for initial setup.

- Incremental Backups: Only back up the changes that have occurred since the last backup. This reduces the time and resources required for backups, especially for large registries. This requires a mechanism to track changes.

- Storage Backend Features: Leverage the backup and replication features provided by the storage backend itself. For example, if using an S3-compatible backend, use its object versioning and replication capabilities.

Here's a general example using `rsync` for backing up a registry that stores its data in a local directory:

```bash
#!/bin/bash
set -euo pipefail

# Configuration
REGISTRY_DATA_DIR="/var/lib/registry"  # Example, adjust based on your setup
BACKUP_DIR="/path/to/your/backup/directory"

# Create the backup directory if it doesn't exist
mkdir -p "$BACKUP_DIR"

# Run rsync to mirror the registry data into the backup directory
rsync -avz --delete "$REGISTRY_DATA_DIR/" "$BACKUP_DIR"

echo "Backup completed."
```

This script performs the following:

- Configuration: Defines variables for the registry's data directory and the backup directory.

- Create Backup Directory: Ensures the backup directory exists.

- Run rsync: Uses `rsync` to synchronize the contents of the registry's data directory with the backup directory. The trailing slash on the source copies the directory's contents rather than the directory itself. The `--delete` option removes files from the backup that no longer exist in the registry, so the backup mirrors the current state rather than keeping a history of past states. The `-avz` options enable archive mode (preserving permissions, timestamps, and symbolic links), verbose output, and compression, which mainly helps when the backup target is a remote host.

The script can be scheduled using `cron`. For example, to run the script daily at 3:00 AM, add the following line to your crontab:

```
0 3 * * * /path/to/your/backup_script.sh
```

When restoring from a backup, the process involves stopping the registry, restoring the data from the backup to the registry's data directory, and then restarting the registry. The exact steps will vary depending on the chosen storage backend and backup method.
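For the local-directory case, the restore procedure can be sketched as a script that reverses the backup. It assumes the registry runs as a Docker container whose data lives in a bind-mounted host directory. The function name `restore_registry` and its argument order are hypothetical. The script refuses to run against a missing or empty backup so that it never wipes live data with nothing.

```bash
#!/bin/bash
# Restore a filesystem-backed registry from an rsync backup (a sketch).
# Assumptions: the registry runs as a Docker container and keeps its data in a
# host directory (bind mount); all paths are examples to adjust.
set -euo pipefail

restore_registry() {
  local container="$1"   # e.g. registry
  local backup_dir="$2"  # e.g. /path/to/your/backup/directory
  local data_dir="$3"    # e.g. /var/lib/registry

  # Refuse to continue if the backup is missing or empty, so live data is never wiped.
  if [ ! -d "$backup_dir" ] || [ -z "$(ls -A "$backup_dir")" ]; then
    echo "Backup directory $backup_dir is missing or empty" >&2
    return 1
  fi

  docker stop "$container"                        # no writes while files are replaced
  mkdir -p "$data_dir"
  rsync -a --delete "$backup_dir/" "$data_dir/"   # trailing slashes: copy contents, not the directory
  docker start "$container"
  echo "Restored $data_dir from $backup_dir"
}
```

After `restore_registry registry /path/to/your/backup/directory /var/lib/registry` completes, push and pull a test image to confirm the restored data is usable.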

Integrating with CI/CD Pipelines

Integrating your private Docker registry with a CI/CD (Continuous Integration/Continuous Delivery) pipeline is crucial for automating the build, testing, and deployment of your containerized applications. This integration streamlines the development lifecycle, reduces manual intervention, and ensures consistent deployments. Properly configured, the CI/CD pipeline will automatically build, tag, and push Docker images to your private registry and then deploy them to your production environment.

Integrating with CI/CD Systems

CI/CD systems, such as Jenkins, GitLab CI, CircleCI, and Azure DevOps, provide robust features for automating software development workflows. Integrating with a private Docker registry typically involves configuring the CI/CD system to: authenticate with the registry, build Docker images, tag the images appropriately, and push the images to the registry. Each CI/CD system has its specific configuration, but the underlying principles remain consistent.

Example Configurations for Pushing Images

The following examples demonstrate how to configure a CI/CD pipeline to push Docker images to a private registry using Jenkins and GitLab CI.

Jenkins Example

Jenkins uses a declarative pipeline defined in a `Jenkinsfile`. This file describes the build, test, and deployment stages. The example below shows how to push a Docker image to a private registry.

```groovy
pipeline {
    agent {
        docker {
            image 'docker:latest' // Use a Docker agent to run Docker commands
            // Share the host's Docker daemon and network. Adjust as needed for your registry access.
            args '-v /var/run/docker.sock:/var/run/docker.sock --network=host'
        }
    }
    environment {
        REGISTRY_URL = 'your-private-registry.example.com:5000'
        // 'registry-credentials' is an example ID of a "Username with password" credential
        // stored in Jenkins; this binding sets REGISTRY_CREDS_USR and REGISTRY_CREDS_PSW
        REGISTRY_CREDS = credentials('registry-credentials')
        IMAGE_NAME = 'your-image-name'
        IMAGE_TAG = "${env.BUILD_NUMBER}" // Use the build number as the tag
    }
    stages {
        stage('Build and Push') {
            steps {
                // Single quotes: the shell, not Groovy, expands the secret variables
                sh 'echo "$REGISTRY_CREDS_PSW" | docker login -u "$REGISTRY_CREDS_USR" --password-stdin "$REGISTRY_URL"'
                sh 'docker build -t "$REGISTRY_URL/$IMAGE_NAME:$IMAGE_TAG" .'
                sh 'docker push "$REGISTRY_URL/$IMAGE_NAME:$IMAGE_TAG"'
            }
        }
    }
}
```

In this example:

- The `docker` agent runs the pipeline steps inside a `docker:latest` container, so the Docker CLI is available; mounting the host's Docker socket lets that CLI reach a Docker daemon.

- The `credentials()` helper injects the registry username and password from the Jenkins credentials store (as `REGISTRY_CREDS_USR` and `REGISTRY_CREDS_PSW`), so the secrets never appear in the `Jenkinsfile` and are masked in the build log.

- The `docker login` command authenticates with the private registry, reading the password from standard input.

- `docker build` creates the Docker image.

- `docker push` pushes the image to the private registry with a tag based on the build number.

GitLab CI Example

GitLab CI uses a `.gitlab-ci.yml` file to define the CI/CD pipeline. The following example shows how to push a Docker image to a private registry.

```yaml
stages:
  - build
  - deploy

variables:
  REGISTRY_URL: your-private-registry.example.com:5000
  IMAGE_NAME: your-image-name
  IMAGE_TAG: "$CI_COMMIT_SHORT_SHA" # Use the short commit SHA as the tag

build_job:
  stage: build
  image: docker:latest # Use the official Docker image
  services:
    - docker:dind # Use Docker-in-Docker for running Docker commands (needs a privileged runner)
  before_script:
    # REGISTRY_USER and REGISTRY_PASSWORD are example names for masked CI/CD
    # variables defined in the project settings
    - echo "$REGISTRY_PASSWORD" | docker login -u "$REGISTRY_USER" --password-stdin "$REGISTRY_URL"
  script:
    - docker build -t "$REGISTRY_URL/$IMAGE_NAME:$IMAGE_TAG" .
    - docker push "$REGISTRY_URL/$IMAGE_NAME:$IMAGE_TAG"

deploy_job:
  stage: deploy
  image: alpine/git # Or your deployment image
  dependencies:
    - build_job # Download artifacts only from build_job; stage order already runs this job after it
  script:
    - echo "Deploying image $REGISTRY_URL/$IMAGE_NAME:$IMAGE_TAG"
    # Deployment steps here (e.g., update Kubernetes deployment)
```

In this example:

- `image: docker:latest` specifies the Docker image for the build job.

- `services: - docker:dind` enables Docker-in-Docker, allowing Docker commands within the build job. The runner must allow privileged containers for this service to start.

- The `before_script` section logs in to the private registry using project CI/CD variables (here named `REGISTRY_USER` and `REGISTRY_PASSWORD`). GitLab's predefined `CI_REGISTRY_USER` and `CI_REGISTRY_PASSWORD` variables only authenticate against GitLab's own Container Registry (`$CI_REGISTRY`), so an external private registry needs its own credentials, stored as masked variables in the project settings.

- `docker build` and `docker push` perform the build and push operations.

- The `IMAGE_TAG` is dynamically generated using the commit SHA, which is a good practice for versioning.
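The deploy job above leaves the actual deployment as a placeholder comment. When the target is Kubernetes, one common approach is to point an existing Deployment at the freshly pushed tag and wait for the rollout. The sketch below makes several assumptions: `kubectl` is installed and authenticated against the target cluster, and the cluster can pull from the private registry (for example, through an image pull secret). The function name `deploy_image` and the Deployment and container names are hypothetical.

```bash
#!/bin/bash
# Roll a Kubernetes Deployment to a newly pushed image (a sketch for the deploy job).
# Assumptions: kubectl is configured for the target cluster, and the cluster can
# pull from the private registry (e.g. via an imagePullSecret).
set -euo pipefail

deploy_image() {
  local deployment="$1"  # hypothetical Deployment name, e.g. your-app
  local container="$2"   # container name inside the Deployment's pod template
  local image="$3"       # full reference, e.g. $REGISTRY_URL/$IMAGE_NAME:$IMAGE_TAG

  # Update the container image; Kubernetes then performs a rolling update.
  kubectl set image "deployment/$deployment" "$container=$image"
  # Wait for the rollout to finish (or time out) so the CI job reports the real result.
  kubectl rollout status "deployment/$deployment" --timeout=300s
}
```

The deploy job's `script` could then call `deploy_image your-app your-app "$REGISTRY_URL/$IMAGE_NAME:$IMAGE_TAG"`. The job image would need `kubectl` instead of `alpine/git`.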

Managing Image Versions in CI/CD

Managing image versions effectively is crucial for maintaining a stable and reproducible deployment process. Several strategies can be employed to manage image versions within a CI/CD pipeline.

- Using Semantic Versioning: Implement a semantic versioning scheme (e.g., `major.minor.patch`) to clearly communicate changes and dependencies. This allows for better control over deployments and rollbacks.

- Tagging with Build Numbers: Use the CI/CD build number as the image tag (e.g., `image-name:123`). This provides a unique identifier for each build.

- Tagging with Git Commit Hashes: Utilize the Git commit hash (or a shortened version) as the image tag (e.g., `image-name:abcdefg`). This ties the image directly to a specific code commit.

- Using Latest Tag Cautiously: The `:latest` tag should be used with caution. It can be convenient for development but can lead to unpredictable behavior in production environments if the image is updated without proper testing. Consider using `:latest` only in specific environments.

- Immutable Tags: Create tags that, once applied, are never changed. This ensures that a specific tag always refers to the same image. This is highly recommended for production environments.

- Image Lifecycle Management: Implement a strategy for cleaning up old images in your registry to save storage space. Some registries offer built-in tools for this, or you can automate this with scripts.

Choosing the appropriate versioning strategy depends on your project’s specific needs and the complexity of your deployment process.
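You can combine several of these strategies by pushing one image under more than one tag. The sketch below assumes your CI system supplies the semantic version, build number, and commit SHA. The function names `image_tags` and `push_all_tags` and their argument order are hypothetical. It validates the version before producing any tags, so a malformed version never reaches the registry.

```bash
#!/bin/bash
# Compute and push several tags for one build (a sketch combining the strategies above).
set -euo pipefail

# Usage: image_tags VERSION BUILD_NUMBER COMMIT_SHA
# Prints one tag per line: the semantic version, the build number, and a 7-character commit hash.
image_tags() {
  local version="$1" build="$2" sha="$3"

  # Accept only plain MAJOR.MINOR.PATCH versions.
  if [[ ! "$version" =~ ^[0-9]+\.[0-9]+\.[0-9]+$ ]]; then
    echo "invalid semantic version: $version" >&2
    return 1
  fi

  echo "$version"    # e.g. 1.4.2, readable and useful for rollbacks
  echo "$build"      # unique per CI run
  echo "${sha:0:7}"  # ties the image to an exact commit
}

# Usage: push_all_tags LOCAL_IMAGE REPOSITORY TAG...
# Tags LOCAL_IMAGE as REPOSITORY:TAG for every TAG, then pushes each one.
push_all_tags() {
  local local_image="$1" repository="$2"
  shift 2
  local tag
  for tag in "$@"; do
    docker tag "$local_image" "$repository:$tag"
    docker push "$repository:$tag"
  done
}
```

In a job running with `set -e`, capture the tags first with `tags=$(image_tags 1.4.2 "$BUILD_NUMBER" "$GIT_COMMIT")`. A rejected version then stops the job before anything is pushed. Afterwards, run `push_all_tags your-image-name:local "$REGISTRY_URL/your-image-name" $tags`. Here `BUILD_NUMBER` and `GIT_COMMIT` stand for whatever variables your CI system provides.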

Monitoring and Logging

Monitoring and logging are crucial for maintaining a healthy and efficient private Docker registry. They provide insights into the registry’s performance, identify potential issues, and enable proactive troubleshooting. Effective monitoring and logging practices help ensure the availability, reliability, and security of your container image storage and distribution.

Importance of Monitoring Your Registry

Monitoring your Docker registry provides critical visibility into its operations, allowing you to maintain its performance and identify potential problems before they escalate. This proactive approach ensures that your registry remains reliable and accessible for your development and deployment workflows.

- Performance Tracking: Monitoring helps track key performance indicators (KPIs) such as response times for image pushes and pulls, storage usage, and the number of concurrent requests. This data enables you to identify bottlenecks and optimize the registry’s performance.

- Resource Utilization: Monitoring resource utilization, including CPU, memory, and disk I/O, is essential for ensuring that the registry has sufficient resources to handle the workload. This prevents performance degradation and potential service outages.

- Error Detection: Monitoring allows for the detection of errors, such as failed image pushes or pulls, authentication failures, and storage errors. Early detection of these errors enables you to take corrective action before they impact users.

- Security Auditing: Monitoring logs provide valuable information for security auditing, including tracking user activity, detecting suspicious behavior, and identifying potential security breaches. This helps to maintain the integrity and confidentiality of your container images.

- Capacity Planning: By analyzing historical data on storage usage and image traffic, you can forecast future resource needs and plan for capacity upgrades. This ensures that your registry can scale to meet growing demands.

Setting Up Monitoring with Prometheus and Grafana

Prometheus and Grafana are popular open-source tools that can be used together to monitor your Docker registry effectively. Prometheus collects metrics from the registry, and Grafana visualizes these metrics through dashboards.

Setting up Prometheus and Grafana involves the following steps:

- Deploy Prometheus: Deploy Prometheus, configured to scrape metrics from your Docker registry. This typically involves creating a configuration file (e.g., `prometheus.yml`) that specifies the registry’s endpoint for metrics.

- Configure the Docker Registry to Expose Metrics: Ensure that your Docker registry is configured to expose metrics in a format that Prometheus can understand, usually through a dedicated metrics endpoint (e.g., `/metrics`).

- Deploy Grafana: Deploy Grafana and configure it to connect to your Prometheus instance as a data source.

- Create Dashboards: Create dashboards in Grafana to visualize key metrics from your Docker registry. Common metrics to monitor include:

- Number of image pushes and pulls

- Storage usage

- HTTP request success and error rates

- Response times

- CPU and memory usage

For example, a Grafana dashboard might display a graph showing the number of image pulls over time, allowing you to quickly identify trends and potential performance issues.
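With Docker Registry v2, the registry's debug server provides the metrics endpoint once Prometheus support is enabled. You can turn it on in the configuration file (`http.debug.addr` and `http.debug.prometheus.enabled: true`) or through the equivalent `REGISTRY_HTTP_DEBUG_ADDR` and `REGISTRY_HTTP_DEBUG_PROMETHEUS_ENABLED` environment variables. It serves `/metrics` by default. The sketch below writes a matching minimal `prometheus.yml`. It assumes the debug server listens on port 5001 and that Prometheus can reach the registry under the hostname `registry`. The function name, job name, and scrape interval are illustrative choices.

```bash
#!/bin/bash
# Generate a minimal Prometheus scrape config for a Docker Registry v2 instance (a sketch).
# Assumptions: the registry runs with REGISTRY_HTTP_DEBUG_ADDR=:5001 and
# REGISTRY_HTTP_DEBUG_PROMETHEUS_ENABLED=true. Keep the debug port off public networks.
set -euo pipefail

write_prometheus_config() {
  local target="$1"  # host:port of the registry debug server, e.g. registry:5001
  local out="$2"     # destination file, e.g. ./prometheus.yml

  cat > "$out" <<EOF
global:
  scrape_interval: 15s

scrape_configs:
  - job_name: docker-registry
    metrics_path: /metrics
    static_configs:
      - targets: ['${target}']
EOF
}
```

After running `write_prometheus_config registry:5001 ./prometheus.yml`, mount the file into the Prometheus container at `/etc/prometheus/prometheus.yml`. Then add that Prometheus instance as a data source in Grafana.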

Analyzing Registry Logs for Troubleshooting and Performance Optimization

Analyzing Docker registry logs is a critical step in troubleshooting issues and optimizing performance. Logs provide detailed information about registry operations, including errors, warnings, and informational messages.

Key aspects of log analysis include:

- Log Levels: Understanding the different log levels (e.g., debug, info, warn, error) helps you filter and focus on relevant information. Errors and warnings often indicate problems that require immediate attention.

- Log Format: Familiarize yourself with the log format used by your registry implementation. Common formats include JSON and text-based formats. Understanding the structure of the logs allows you to extract relevant data.

- Error Analysis: Identify and analyze error messages to diagnose and resolve issues. Error messages often provide clues about the cause of the problem, such as authentication failures, storage errors, or network connectivity issues.

- Performance Analysis: Analyze logs to identify performance bottlenecks, such as slow image pushes or pulls. Look for patterns in the logs that indicate performance issues, such as long response times or high resource utilization.

- Log Aggregation: Consider using a log aggregation tool, such as the ELK stack (Elasticsearch, Logstash, and Kibana) or Splunk, to centralize and analyze logs from multiple sources. This makes it easier to search, filter, and visualize log data.

For instance, a log entry indicating a “disk full” error would prompt you to investigate storage capacity and potentially increase the storage allocated to your registry. Analyzing logs in this way allows you to identify and resolve issues proactively, ensuring the continued smooth operation of your private Docker registry.
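Before you set up a full log stack, counting entries by level shows at a glance whether errors are spiking. This sketch assumes the registry's default text log format, in which each entry carries a `level=...` field (for example, `level=error`). The helper name `count_log_levels` is hypothetical.

```bash
#!/bin/bash
# Count registry log entries per level from stdin (a sketch).
# Assumes the default text formatter, where each entry contains a field such as level=error.
set -euo pipefail

count_log_levels() {
  # Extract each level=... value (no matches is not an error), then tally per level.
  { grep -o 'level=[a-z]*' || true; } | cut -d= -f2 | sort | uniq -c | awk '{print $2, $1}'
}
```

For a registry running in a container named `registry`, `docker logs registry 2>&1 | count_log_levels` prints one `level count` pair per line. The `2>&1` is there because the registry writes its logs to standard error.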

Scaling Your Private Registry

As your private Docker registry gains traction and usage grows, scaling becomes crucial to maintain performance, availability, and reliability. This section explores strategies and techniques to ensure your registry can handle increased load and demands. Effective scaling prevents bottlenecks, ensures fast image pushes and pulls, and provides a seamless experience for your users.

Strategies for Scaling Your Registry

To accommodate growth, several scaling strategies can be employed. These strategies address different aspects of the registry’s architecture, from the front-end access to the back-end storage.

- Horizontal Scaling: This involves adding more instances of the registry to handle increased traffic. This is a common and effective approach, especially when dealing with unpredictable load.

- Vertical Scaling: Increasing the resources (CPU, memory, storage) of existing registry instances. While simpler initially, this approach has limitations and can eventually hit hardware ceilings.

- Caching: Implementing caching mechanisms to store frequently accessed images closer to the users. This reduces the load on the registry and improves image retrieval times.

- Optimized Storage: Selecting and configuring a storage backend that can handle the expected load and performance requirements. Choosing the right storage solution is critical for scalability.

- Load Balancing: Distributing incoming requests across multiple registry instances to ensure even distribution of the workload and high availability.

- Content Delivery Network (CDN): Utilizing a CDN to cache and distribute images globally, improving download speeds for users in different geographical locations.

Comparing Different Scaling Options

Each scaling option presents its own advantages and disadvantages. The best approach often involves a combination of strategies tailored to the specific needs of your environment.

| Scaling Option | Advantages | Disadvantages |
|---|---|---|
| Horizontal Scaling | Highly scalable, fault-tolerant, can handle unpredictable load, relatively easy to implement. | Requires careful planning of infrastructure, potential complexity in managing multiple instances. |
| Vertical Scaling | Simple to implement initially, requires no architectural changes. | Limited by hardware constraints, can become expensive as resources are added, single point of failure. |
| Caching | Improves image retrieval times, reduces load on the registry, can be cost-effective. | Requires careful configuration and maintenance, cache invalidation strategies are critical. |
| Load Balancing | Distributes traffic, improves availability, can handle high traffic volumes. | Adds complexity, requires proper configuration and monitoring. |
| CDN | Improves download speeds globally, reduces load on the registry, offloads content delivery. | Can be expensive, requires integration and configuration. |

Descriptive Illustration of a Horizontally Scaled Registry Architecture

A horizontally scaled registry architecture typically involves multiple instances of the registry service, fronted by a load balancer. This design ensures high availability and the ability to handle significant traffic increases.

The following description outlines the components, traffic flow, and benefits of a horizontally scaled Docker registry.

Components:

- Users/Clients: These represent the Docker clients that push and pull images from the registry.

- Load Balancer: This is the entry point for all requests to the registry. It distributes the traffic across multiple registry instances. Common load balancers include HAProxy, Nginx, or cloud-based load balancers provided by AWS, Azure, or Google Cloud.

- Registry Instances: These are the Docker registry services running on separate servers or containers. They are responsible for storing and serving the Docker images. In this example, there are three registry instances, but the number can be scaled up or down as needed.

- Storage Backend: This is where the Docker images are stored. It could be an object storage service like Amazon S3, Google Cloud Storage, Azure Blob Storage, or a distributed file system. The storage backend is shared by all registry instances.

- Database (Optional): Some registry implementations use a database to store metadata about the images. This database is also shared across all registry instances.

Traffic Flow:

- Users initiate a push or pull request to the registry.

- The load balancer receives the request and distributes it to one of the available registry instances based on a configured load balancing algorithm (e.g., round robin, least connections).

- The selected registry instance interacts with the storage backend to store or retrieve the image data.

- If the registry uses a database, the registry instance also interacts with the database to update or retrieve metadata.

Benefits:

- High Availability: If one registry instance fails, the load balancer automatically redirects traffic to the remaining instances.

- Scalability: New registry instances can be added to handle increased traffic.

- Performance: Distributing the load across multiple instances improves response times.
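Docker Registry v2 instances behind a load balancer must share the storage backend. They must also share the `http.secret` value (`REGISTRY_HTTP_SECRET`), which the registry uses to sign upload state. Otherwise, a push whose requests land on different instances can fail. The sketch below starts several instances on one host to illustrate this. It assumes the `REGISTRY_STORAGE_S3_*` settings used for the S3 backend are exported in the current shell and that a load balancer (not shown) forwards traffic to the published ports. The function name, container names, and port scheme are hypothetical.

```bash
#!/bin/bash
# Start N registry instances that share one S3 bucket and one HTTP secret (a sketch).
# Assumptions: REGISTRY_STORAGE_S3_REGION, _BUCKET, _ACCESSKEY and _SECRETKEY are exported
# in this shell, and a load balancer (not shown) forwards to host ports 5101..5100+N.
set -euo pipefail

start_registry_cluster() {
  local count="$1"
  local secret="$2"  # must be identical on every instance, e.g. from: openssl rand -hex 32
  local i
  for ((i = 1; i <= count; i++)); do
    # "-e NAME" without "=value" copies NAME from the current environment into the container.
    docker run -d --name "registry-$i" -p "$((5100 + i)):5000" \
      -e REGISTRY_HTTP_SECRET="$secret" \
      -e REGISTRY_STORAGE=s3 \
      -e REGISTRY_STORAGE_S3_REGION \
      -e REGISTRY_STORAGE_S3_BUCKET \
      -e REGISTRY_STORAGE_S3_ACCESSKEY \
      -e REGISTRY_STORAGE_S3_SECRETKEY \
      registry:2
  done
}
```

In production, the instances would normally run on separate hosts or as replicas managed by an orchestrator. The two invariants still apply: shared storage and a shared secret.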

Example:

Imagine a company, “ExampleCorp,” using a private Docker registry. Initially, they have a single registry instance. As the number of developers and projects grows, the registry experiences performance issues. To address this, ExampleCorp implements a horizontally scaled architecture with three registry instances and a load balancer. The load balancer distributes the traffic, ensuring that no single instance is overloaded.

If one instance fails, the load balancer automatically directs traffic to the remaining instances, maintaining high availability. As ExampleCorp’s usage continues to grow, they can easily add more registry instances to handle the increased load. This approach allows ExampleCorp to support its expanding development teams and projects without performance degradation or downtime.

Registry Maintenance and Updates

Regular maintenance and timely updates are critical for the health, security, and performance of your private Docker registry. Neglecting these aspects can lead to vulnerabilities, data loss, and service disruptions. This section outlines essential maintenance practices and procedures to ensure your registry operates smoothly and securely.

Importance of Regular Registry Maintenance

Regular maintenance is paramount for several reasons, including security, performance, and data integrity. A well-maintained registry reduces attack surfaces, optimizes resource utilization, and safeguards your valuable image data. Ignoring maintenance can lead to significant problems down the line.

- Security: Regularly updating your registry software is crucial to patch security vulnerabilities. Docker registry software, like any software, is subject to security flaws. Attackers can exploit these flaws to gain unauthorized access, steal images, or disrupt service.

- Performance: Over time, storage fills up with layers that no image references any longer, and registries that keep metadata in a database (such as Harbor) accumulate stale records. Regular maintenance tasks, such as garbage collection, reclaim this space and keep the registry responsive. A poorly maintained registry can experience slow image pushes and pulls, leading to frustrated users and reduced productivity.

- Data Integrity: Data corruption can occur due to hardware failures, software bugs, or other unforeseen events. Regular backups and data validation procedures protect against data loss. Without backups, a catastrophic failure could lead to the permanent loss of all your images.

- Compliance: Many organizations are subject to regulatory compliance requirements. Maintaining a secure and up-to-date registry helps meet these requirements.

Process for Updating Your Registry Software

Updating your registry software involves several steps, including backing up your data, downloading the latest version, and restarting the registry. The exact steps may vary depending on your registry implementation (e.g., Docker Registry v2, Harbor, etc.) and deployment method (e.g., Docker Compose, Kubernetes). However, the general process remains consistent.

- Backup Your Registry Data: Before updating, always create a backup of your registry data, including images and configuration files. This is a critical step to prevent data loss in case of a failed update. Detailed steps for backing up your data are provided later in this section.

- Download the Latest Version: Obtain the latest version of the registry software from the official source. This might involve pulling a new Docker image, downloading a package, or accessing a software repository, depending on the implementation.

- Stop the Running Registry: Stop the currently running registry instance. This ensures that no new data is written during the update process. The method for stopping the registry depends on how it was deployed (e.g., `docker stop` followed by the container name if running in a Docker container, or a `kubectl` command if deployed on Kubernetes).

- Update the Registry: Replace the existing registry software with the new version. If you're using Docker, this typically involves pulling the new image and recreating the container from it, since restarting the old container keeps the old image. If using a package manager, you might run an update command.

- Configure the Updated Registry: Apply any necessary configuration changes required by the new version. This may involve updating configuration files or environment variables. Review the release notes for the new version to understand any configuration changes.

- Start the Updated Registry: Start the updated registry instance and verify that it is running correctly. Check the logs for any errors or warnings.

- Test the Registry: Push and pull a test image to verify that the registry is functioning correctly. This confirms that images can be stored and retrieved successfully.

- Monitor the Registry: Monitor the registry’s performance and logs after the update to identify and resolve any issues. Use monitoring tools to track metrics like storage usage, network traffic, and error rates.
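For a registry that runs as a single container, the update steps above can be sketched as one function. The sketch makes several assumptions: a Docker Registry v2 image, data in a host directory mounted at `/var/lib/registry`, the registry published over plain HTTP on port 5000, and a backup already taken. The function name and arguments are hypothetical. If your original `docker run` command included TLS, authentication, or a custom config file, reuse those flags here. The final check treats HTTP 200 or 401 from `/v2/` as "API up", because 401 is what an authenticated registry returns to anonymous requests.

```bash
#!/bin/bash
# Update a single-container Docker Registry v2 deployment to a new image (a sketch).
# Assumptions: data lives in a host directory mounted at /var/lib/registry, the
# registry is served over plain HTTP on localhost:5000, and a backup already exists.
set -euo pipefail

update_registry() {
  local name="$1"      # container name, e.g. registry
  local image="$2"     # new image, pinned to a version tag rather than :latest
  local data_dir="$3"  # host directory holding the registry data

  docker pull "$image"  # download first, so downtime covers only the swap
  docker stop "$name"
  docker rm "$name"     # the data survives because it lives in $data_dir
  docker run -d --name "$name" --restart=always -p 5000:5000 \
    -v "$data_dir:/var/lib/registry" "$image"

  # Poll /v2/ for up to ~10 seconds: 200 (no auth) or 401 (auth enabled) means the API is up.
  local status="" i
  for ((i = 0; i < 10; i++)); do
    status="$(curl -s -o /dev/null -w '%{http_code}' http://localhost:5000/v2/ || true)"
    case "$status" in
      200|401) echo "Registry $name is up on $image"; return 0 ;;
    esac
    sleep 1
  done
  echo "Registry did not respond as expected (last HTTP status: $status)" >&2
  return 1
}
```

A non-zero exit is a signal to check `docker logs` for the container and, if needed, to roll back by running the same function with the previous image tag.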

Steps for Backing Up and Restoring Your Registry Data

Backing up and restoring your registry data are essential components of a robust maintenance strategy. Regular backups protect against data loss caused by hardware failures, software bugs, or human error. The specific backup and restore methods depend on the storage backend you are using for your registry.

- Identify Your Storage Backend: Determine the storage backend used by your registry. Common backends include local filesystem, Amazon S3, Google Cloud Storage, and Azure Blob Storage. The backup and restore procedures will vary based on the backend.

- Backing Up the Registry Data:

- Local File System: If you are using the local file system, back up the directory where the registry stores its images with standard tools such as `rsync`, `tar`, or `cp`. For example: `rsync -avz /path/to/registry/data/ /path/to/backup/location/` (the trailing slashes copy the directory's contents instead of nesting the directory inside the destination).

- Cloud Storage (e.g., S3, GCS, Azure Blob Storage): If you are using cloud storage, use the cloud provider's tools (e.g., AWS CLI, `gsutil`, `azcopy`) to create a backup of the storage bucket, and configure the backup to run regularly. For example, with the AWS CLI: `aws s3 sync s3://your-registry-bucket s3://your-backup-bucket`

- Database (if applicable): If your registry uses a database (e.g., for metadata), back up the database regularly using the database's native backup tools.

- Restoring the Registry Data:

- Local File System: Restore the data to the original location. If the registry is running, stop it before restoring the data. For example: `rsync -avz /path/to/backup/location/ /path/to/registry/data/`

- Cloud Storage: Restore the data from the backup bucket to the registry's storage bucket using the cloud provider's tools. For example: `aws s3 sync s3://your-backup-bucket s3://your-registry-bucket`

- Database (if applicable): Restore the database from the backup using the database's native restore tools.

- Test the Restore: After restoring the data, test the registry by pushing and pulling images to ensure that the restore was successful. This step is crucial to verify the integrity of the restored data.

- Automate Backups: Automate the backup process using scripting and scheduling tools like `cron` or a task scheduler. Automating backups ensures that they are performed regularly and consistently.

- Implement Backup Retention Policies: Define and implement backup retention policies to manage storage space and comply with data retention requirements.
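Automation, incremental backups, and retention can be combined in a single script. The sketch below assumes a filesystem-backed registry and a backup root used only for these snapshots. It creates a dated snapshot with `rsync --link-dest`, so files unchanged since the previous snapshot become hard links and each run stores only what changed. It then deletes all but the newest snapshots. The directory naming scheme, the `latest` symlink, and the function name `snapshot_backup` are hypothetical choices. Schedule it with `cron` as shown earlier.

```bash
#!/bin/bash
# Dated, hard-linked snapshot backups with count-based retention (a sketch).
# Assumptions: a filesystem-backed registry, and a backup root that holds only
# snapshot directories named YYYYmmdd-HHMMSS plus a "latest" symlink.
set -euo pipefail

snapshot_backup() {
  local data_dir="$1"     # e.g. /var/lib/registry
  local backup_root="$2"  # e.g. /backups/registry
  local keep="$3"         # how many snapshots to retain (at least 1)
  if [ "$keep" -lt 1 ]; then
    echo "keep must be at least 1" >&2
    return 1
  fi

  mkdir -p "$backup_root"
  backup_root="$(cd "$backup_root" && pwd)"  # absolute path, needed for --link-dest
  local stamp
  stamp="$(date +%Y%m%d-%H%M%S)"

  if [ -d "$backup_root/latest" ]; then
    # Files unchanged since the previous snapshot become hard links instead of new copies.
    rsync -a --delete --link-dest="$backup_root/latest/" "$data_dir/" "$backup_root/$stamp/"
  else
    rsync -a --delete "$data_dir/" "$backup_root/$stamp/"
  fi
  ln -sfn "$stamp" "$backup_root/latest"  # relative symlink to the newest snapshot

  # Retention: glob results are sorted and the names sort chronologically,
  # so the oldest snapshots come first in the array.
  local pattern='[0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9]-[0-9][0-9][0-9][0-9][0-9][0-9]'
  local snapshots=( "$backup_root"/$pattern )
  local i
  for ((i = 0; i < ${#snapshots[@]} - keep; i++)); do
    rm -rf -- "${snapshots[$i]}"
  done
}
```

For example, `snapshot_backup /var/lib/registry /backups/registry 7` run daily keeps one week of snapshots. To restore, copy the contents of `/backups/registry/latest/` (or an older snapshot) back into the data directory while the registry is stopped.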

Final Review

In conclusion, setting up a private Docker registry is a valuable investment for any organization serious about containerization. By following the guidance provided, you can create a secure, efficient, and scalable environment for managing your Docker images. Remember to prioritize security, automate your operations, and continually monitor your registry for optimal performance. Embrace the power of a private registry and unlock the full potential of your containerized applications, leading to a more secure and streamlined development and deployment lifecycle.

Common Queries

What are the primary benefits of using a private Docker registry?

Private registries offer enhanced security by controlling access to your images, improved performance by storing images closer to your infrastructure, and greater control over image versions and lifecycle management.

How does a private registry differ from a public Docker registry?

Public registries, like Docker Hub, are open to everyone. Private registries restrict access to authorized users, providing greater security and control over who can pull and push images. They also allow for customization and integration with internal systems.

Is it possible to integrate a private Docker registry with existing authentication systems?

Yes, most private registry implementations support integration with various authentication systems, such as LDAP, Active Directory, or custom solutions, to manage user access and permissions securely.

How do I handle image storage and backups within a private registry?

You can configure storage backends like local filesystems, cloud storage (e.g., Amazon S3, Google Cloud Storage), or network-attached storage. Backups should be performed regularly to protect your images, using tools and strategies tailored to your chosen storage backend.