Migrating to the cloud promises scalability and cost efficiency, but achieving these benefits hinges on a critical step: right-sizing cloud resources during migration planning. This process, often overlooked, involves meticulously matching your existing workloads with the appropriate cloud instance types and sizes, preventing both performance bottlenecks and unnecessary spending. Inefficient resource allocation can lead to significant financial losses and hinder the full potential of cloud adoption.

This comprehensive guide delves into the essential aspects of right-sizing, from assessing your current infrastructure and selecting the optimal migration strategy to leveraging cloud provider tools and implementing post-migration monitoring. We will explore techniques for analyzing workload resource demands, choosing appropriate instance types, and optimizing costs. By understanding and implementing these strategies, organizations can ensure a smooth and cost-effective transition to the cloud, maximizing the return on their investment.

Understanding the Need for Cloud Resource Right-Sizing

Cloud resource right-sizing is a critical practice in cloud migration and management. It involves optimizing the allocation of cloud resources to match actual workload demands, avoiding both over-provisioning and under-provisioning. This strategic approach directly impacts cost efficiency, performance, and overall cloud infrastructure effectiveness.

Challenges of Over-Provisioned and Under-Provisioned Cloud Resources

Inefficient resource allocation presents significant challenges. Over-provisioning leads to wasted expenditure, while under-provisioning can cripple application performance and availability.

Over-provisioning involves allocating more resources than are necessary for a workload. This typically results in:

- Increased Cloud Costs: Paying for unused compute, storage, and network resources. This can be a substantial financial drain, especially in environments with numerous virtual machines or large data storage needs. For example, a company that consistently over-provisions its virtual machines by 30% could see a similar percentage increase in its monthly cloud bill.

- Wasted Energy Consumption: Cloud resources consume energy, and over-provisioned resources contribute to unnecessary energy use, indirectly increasing costs and impacting environmental sustainability.

- Complex Management: Managing resources that are not being fully utilized can complicate infrastructure management, leading to increased operational overhead.

Under-provisioning, conversely, involves allocating insufficient resources to meet workload demands. This leads to:

- Performance Degradation: Applications run slower, leading to poor user experience and potentially lost business opportunities. For instance, an e-commerce website that experiences slow page load times during peak hours due to under-provisioned compute resources could see a decrease in sales.

- Service Outages: Insufficient resources can lead to system crashes and outages, disrupting business operations and damaging a company’s reputation.

- Reduced Scalability: Difficulty scaling resources to meet sudden demand spikes, such as during a marketing campaign or a major product launch.

Impact of Inefficient Resource Allocation on Cloud Costs and Performance

Inefficient resource allocation has a direct and measurable impact on both cloud costs and application performance. These impacts can be quantified and understood through careful monitoring and analysis. Consider the following examples:

- Example 1: Compute Instance Oversizing. A web application initially provisioned on a large compute instance (e.g., a 16-core, 64GB RAM instance) experiences average CPU utilization of only 15% and RAM utilization of 20%, meaning it actually uses about 2.4 cores and 12.8GB of RAM. Right-sizing to a smaller instance (e.g., a 4-core, 16GB RAM instance) would raise average utilization to roughly 60% CPU and 80% RAM. If price scales linearly with size within the instance family, this cuts compute costs by about 75% without affecting application performance, provided peak utilization (not only the average) also fits the smaller instance. The actual savings are the hourly or monthly cost difference between the two instance types.

- Example 2: Storage Over-Provisioning. A database server provisioned with 1TB of storage uses only 200GB, so the unused 800GB incurs unnecessary storage costs. Right-sizing would mean provisioning approximately 300GB instead (allowing 50% headroom for future growth). During migration this is easiest to do when creating the target volume, because many block-storage services (Amazon EBS volumes, for example) can be enlarged but not shrunk in place. The saving is the difference between the cost of the 1TB volume and the cost of the 300GB volume at the applicable price per GB.

- Example 3: Performance Degradation Due to Under-Provisioning. A batch processing job requires 4 hours to complete when provisioned with 2 CPU cores and 8GB RAM. Increasing the resources to 8 CPU cores and 32GB RAM reduces the processing time to 1 hour. This faster processing time translates to improved business agility and responsiveness. The benefits can be measured in terms of time savings and potentially increased output.

These examples demonstrate that optimizing resource allocation is not merely a cost-saving exercise; it is also a critical factor in ensuring optimal application performance and overall business efficiency.
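The arithmetic behind Examples 1 through 3 can be checked with a few lines of Python. This is a minimal sketch: the function names are hypothetical, and it assumes that the absolute amount of CPU and RAM in use does not change when an instance is resized, that price scales linearly with instance size, that Example 2 uses 50% growth headroom, and that the batch job in Example 3 parallelizes perfectly across cores, which real jobs rarely do fully.

```python
import math


def projected_utilization(util_pct: float, old_capacity: float, new_capacity: float) -> float:
    """Re-express an observed utilization % against a different capacity.

    Assumes the absolute amount in use (cores, GB) does not change after resizing.
    """
    in_use = util_pct / 100 * old_capacity
    return in_use / new_capacity * 100


def linear_savings(old_size: float, new_size: float) -> float:
    """Fractional cost saving, assuming price scales linearly with instance size."""
    return 1 - new_size / old_size


def target_volume_gb(used_gb: float, headroom: float) -> int:
    """Whole-GB volume size covering current data plus a growth headroom fraction."""
    return math.ceil(used_gb * (1 + headroom))


def core_hours(cores: int, runtime_hours: float) -> float:
    """Compute consumed by a job; this drives cost under per-core linear pricing."""
    return cores * runtime_hours


if __name__ == "__main__":
    # Example 1: 16 cores / 64GB at 15% CPU and 20% RAM, resized to 4 cores / 16GB
    print(f"CPU after resize: {projected_utilization(15, 16, 4):.0f}%")   # 60%
    print(f"RAM after resize: {projected_utilization(20, 64, 16):.0f}%")  # 80%
    print(f"Savings under linear pricing: {linear_savings(16, 4):.0%}")   # 75%

    # Example 2: 200GB used, 50% headroom
    print(f"Target volume: {target_volume_gb(200, 0.5)} GB")  # 300 GB

    # Example 3: 2 cores for 4h vs. 8 cores for 1h, assuming perfect parallel scaling
    print(core_hours(2, 4), core_hours(8, 1))  # 8 8
```

Under these assumptions, the 2-core and 8-core runs in Example 3 both consume 8 core-hours, so the faster configuration need not cost more; in practice, imperfect scaling makes the larger run somewhat more expensive than this estimate.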

Benefits of Right-Sizing Cloud Resources Before, During, and After Migration

Implementing right-sizing strategies at different stages of the cloud migration lifecycle yields significant benefits.

Before Migration:

- Accurate Cost Estimation: Right-sizing existing on-premises resources provides a more accurate basis for estimating cloud costs, preventing overspending.

- Informed Cloud Architecture Design: Understanding resource requirements helps design a cloud architecture that is optimized for cost and performance.

- Reduced Migration Risk: Identifying resource bottlenecks before migration allows for proactive planning and mitigation strategies, reducing the risk of performance issues after the migration.

During Migration:

- Seamless Transition: Right-sizing ensures that migrated applications perform optimally from the start, minimizing disruption.

- Cost Control: Continuous monitoring and adjustment of resource allocation during migration help control cloud spending.

- Optimized Performance: By monitoring resource utilization during migration, organizations can quickly identify and address any performance issues, ensuring a smooth transition.

After Migration:

- Continuous Optimization: Ongoing monitoring and right-sizing ensure that resources are continuously optimized for cost and performance.

- Improved Resource Utilization: Maximizing resource utilization leads to cost savings and improved efficiency.

- Enhanced Scalability: Right-sizing provides a foundation for effective scaling to meet changing business needs.

Assessing Current Infrastructure and Workloads

The accurate assessment of existing on-premises infrastructure and workloads is a critical first step in cloud resource right-sizing during migration planning. This phase provides the foundational data necessary to make informed decisions about the appropriate cloud resources needed, minimizing both over-provisioning (leading to wasted costs) and under-provisioning (resulting in performance bottlenecks and user dissatisfaction). A comprehensive understanding of current resource utilization patterns allows for a smoother, more efficient, and cost-effective transition to the cloud.

Identifying Methods for Evaluating On-Premises Infrastructure Utilization

Evaluating on-premises infrastructure utilization requires a multi-faceted approach, combining the collection of historical data with real-time monitoring. This allows for a comprehensive understanding of resource consumption, revealing trends and identifying peak demands. Several methods are employed to gather the necessary data, each providing a different perspective on resource usage.

- Performance Monitoring Tools: Performance monitoring tools, such as Nagios, Zabbix, or SolarWinds, are essential for collecting real-time and historical data on CPU utilization, memory usage, disk I/O, and network traffic. These tools typically utilize agents installed on servers or network devices to gather metrics, which are then stored and visualized for analysis. They often provide alerting capabilities to notify administrators of performance anomalies.

- Operating System (OS) Level Monitoring: Operating systems provide built-in tools for monitoring resource utilization. For example, `top`, `vmstat`, and `iostat` on Linux, and Performance Monitor (PerfMon) on Windows, offer real-time insights into CPU, memory, disk, and network performance. Analyzing data from these tools provides granular details about individual processes and their resource consumption.

- Virtualization Platform Monitoring: If the infrastructure is virtualized (e.g., VMware vSphere, Microsoft Hyper-V), the virtualization platform provides detailed monitoring capabilities. These tools offer metrics at the virtual machine (VM) level, allowing administrators to understand the resource consumption of individual VMs and identify potential bottlenecks. Historical data can also be used to analyze resource trends over time.

- Network Monitoring Tools: Network monitoring tools, such as Wireshark or tcpdump, can be used to analyze network traffic patterns, identify bandwidth usage, and detect potential network bottlenecks. These tools capture network packets and provide detailed information about network communication, including the source and destination of traffic, the amount of data transferred, and the protocols used.

- Storage Performance Monitoring: Understanding storage performance is crucial. Tools such as `iostat` (Linux) and Performance Monitor (Windows) can monitor disk I/O, providing insights into read/write operations, latency, and throughput. These metrics help identify storage-related bottlenecks. Monitoring tools should also track storage capacity utilization to predict future needs.

Detailing Procedures for Profiling Existing Workloads to Understand Their Resource Consumption Patterns

Profiling existing workloads is a crucial process for understanding how applications consume resources. This involves analyzing historical data, observing real-time performance, and identifying patterns of resource usage. A thorough workload profile enables accurate right-sizing decisions and minimizes the risk of performance degradation after migration.

- Data Collection Period: Establish a defined data collection period, typically ranging from several weeks to several months, to capture a representative sample of workload behavior. This period should encompass different operational cycles, including peak and off-peak times, to provide a comprehensive view of resource demands. Consider seasonal variations and business cycles.

- Baseline Establishment: Establish a baseline of resource consumption during the data collection period. This baseline serves as a reference point for comparing future performance and identifying anomalies. The baseline should include metrics such as CPU utilization, memory usage, disk I/O, and network traffic.

- Identifying Peak Resource Consumption: Analyze the collected data to identify peak resource consumption periods. Determine the highest levels of CPU utilization, memory usage, and other relevant metrics. This information is crucial for right-sizing cloud resources to handle peak loads effectively. Consider factors such as batch jobs, end-of-month processing, or seasonal events.

- Workload Categorization: Categorize workloads based on their resource consumption patterns. Grouping similar workloads together simplifies the analysis and allows for the identification of common characteristics. For example, categorize workloads based on their CPU-intensive, memory-intensive, or I/O-intensive nature.

- Application-Level Profiling: Analyze resource consumption at the application level. Identify which applications consume the most resources and determine their resource requirements. This information is crucial for optimizing application performance and ensuring that the migrated applications perform efficiently in the cloud. Use application performance monitoring (APM) tools to gain detailed insights.

- Dependency Mapping: Map dependencies between applications and services. Understanding the dependencies between workloads is critical for ensuring that the cloud environment is properly configured to support the migrated applications. Consider the impact of dependencies on resource consumption and performance.
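The baseline, peak, and categorization steps above can be sketched over a series of utilization samples. This is an illustration rather than a prescribed method: using the 95th percentile as the sizing figure, the 70% threshold, treating disk load as a busy percentage, and the names `summarize` and `categorize` are all assumptions chosen for the example.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Profile:
    mean: float  # baseline (average) utilization, %
    p95: float   # 95th percentile, used here as the sizing figure
    peak: float  # single highest sample, %


def summarize(samples: list[float]) -> Profile:
    """Summarize utilization samples (%) gathered over the data collection period."""
    # n=20 yields 19 cut points; index 18 is the 95th percentile.
    # "inclusive" keeps estimates within the observed min/max.
    p95 = statistics.quantiles(samples, n=20, method="inclusive")[18]
    return Profile(mean=statistics.fmean(samples), p95=p95, peak=max(samples))


def categorize(cpu: Profile, mem: Profile, disk: Profile, threshold: float = 70.0) -> str:
    """Label a workload by its most heavily used resource at p95, if any exceeds the threshold."""
    candidates = {"CPU-intensive": cpu.p95, "memory-intensive": mem.p95, "I/O-intensive": disk.p95}
    label, value = max(candidates.items(), key=lambda item: item[1])
    return label if value >= threshold else "balanced"


if __name__ == "__main__":
    # Hypothetical hourly samples: a quiet day with a four-hour batch window
    cpu = summarize([20.0] * 20 + [85.0, 90.0, 95.0, 88.0])
    mem = summarize([40.0] * 24)
    disk = summarize([15.0] * 24)  # disk busy %
    print(cpu)
    print(categorize(cpu, mem, disk))  # CPU-intensive
```

Sizing on a high percentile rather than the mean keeps short batch windows or month-end spikes from being averaged away while ignoring the single most extreme sample; whether p95, p99, or the absolute peak is appropriate depends on how much risk of saturation a given workload can tolerate.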

Summarizing Key Metrics to Track During the Assessment Phase

The assessment phase requires the collection and analysis of a wide range of metrics to understand infrastructure utilization and workload behavior. These metrics provide the data necessary to make informed decisions about cloud resource right-sizing. The table below summarizes the key metrics to track during the assessment phase, along with descriptions of their importance.

| Metric | Description | Importance | Measurement Unit |
|---|---|---|---|
| CPU Utilization | Percentage of available CPU capacity in use on the server or VM. | Indicates CPU capacity usage and potential bottlenecks. | Percentage (%) |
| Memory Usage | Amount of RAM used by the server or VM. | Identifies memory pressure and potential performance issues. | Gigabytes (GB) or Percentage (%) |
| Disk I/O | Rate of read and write operations to the storage devices. | Indicates storage performance and potential bottlenecks. | Operations per second (IOPS) or Megabytes per second (MB/s) |
| Network I/O | Rate of data transfer over the network. | Identifies network bandwidth usage and potential bottlenecks. | Megabits per second (Mbps), or Gigabytes (GB) transferred per period |
| Disk Space Utilization | Percentage of disk space used by the server or VM. | Indicates storage capacity usage and potential capacity constraints. | Percentage (%) or Gigabytes (GB) |
| Network Latency | Time taken for data packets to travel between two points. | Indicates network performance and potential latency issues. | Milliseconds (ms) |
| Application Response Time | Time taken for an application to respond to user requests. | Measures application performance and user experience. | Milliseconds (ms) |

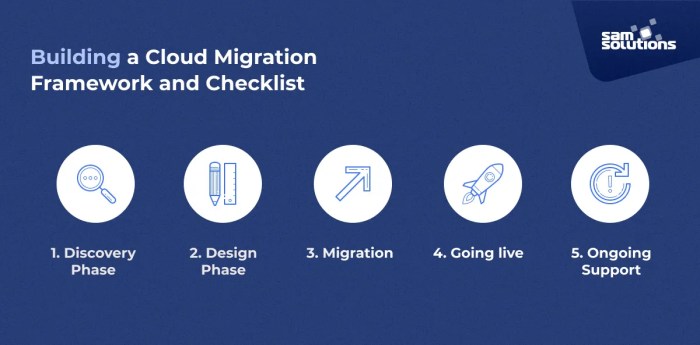

Choosing the Right Migration Strategy and Tools

Selecting the appropriate migration strategy and tools is critical for successful cloud resource right-sizing. The chosen approach significantly impacts the effort required, the potential for optimization, and the overall cost-effectiveness of the migration. Careful consideration of workload characteristics, business requirements, and available tools is essential for making informed decisions.

Cloud Migration Strategies and Their Impact on Right-Sizing

Different cloud migration strategies offer varying degrees of control over resource allocation and optimization, directly influencing the right-sizing process. The choice of strategy dictates the extent to which existing resources can be re-evaluated and adapted to the cloud environment.

- Rehosting (Lift and Shift): This strategy involves moving applications and their associated infrastructure to the cloud with minimal changes. It is often the fastest and least disruptive approach, suitable for quickly migrating workloads. However, right-sizing opportunities are limited, as the focus is on replicating the existing infrastructure. While initial right-sizing can be performed by matching existing on-premises resource utilization to cloud instances, deeper optimization is usually deferred until after the migration. This approach often results in initial over-provisioning, necessitating subsequent right-sizing efforts. For example, a company migrating a legacy application using rehosting might initially provision virtual machines in the cloud that are equivalent to the on-premises servers. Post-migration analysis of CPU and memory utilization would then inform subsequent right-sizing, potentially leading to the downsizing of instances and cost savings.

- Replatforming (Lift, Tinker, and Shift): Replatforming involves making some modifications to the application to leverage cloud-native features without fundamentally changing its architecture. This approach offers opportunities for moderate right-sizing. By adapting the application to utilize cloud services like managed databases or containerization, resource utilization can be improved. For instance, migrating a database to a cloud-managed service can free up resources previously allocated to database server management, enabling right-sizing of compute instances. This often requires a more in-depth understanding of the workload’s resource consumption patterns.

- Refactoring (Re-architecting): Refactoring involves redesigning and rewriting the application to fully utilize cloud-native services and architectures. This strategy provides the most significant opportunities for right-sizing. By breaking down the application into microservices, scaling individual components becomes more efficient, leading to optimized resource allocation. Consider an e-commerce platform that refactors its monolithic application into microservices. Each microservice, such as product catalog, shopping cart, and payment processing, can be scaled independently based on its resource demands. This allows for precise right-sizing, avoiding over-provisioning and reducing costs.

- Repurchasing: This involves replacing an existing application with a Software-as-a-Service (SaaS) solution. Right-sizing in this context is primarily about selecting the appropriate SaaS tier based on usage requirements. This is often the easiest and fastest approach, but it offers the least control over resource allocation and optimization. The vendor manages the underlying infrastructure, and the customer pays for the service based on consumption or a subscription model.

- Retiring: This strategy involves decommissioning applications that are no longer needed. It is the simplest and most effective way to right-size by eliminating the associated resource consumption. This can be a significant cost-saving measure, especially for unused or underutilized applications.

Decision Tree for Selecting the Appropriate Migration Strategy

A decision tree can guide the selection of the optimal migration strategy based on workload characteristics and business priorities. The tree considers factors such as application complexity, technical debt, business agility requirements, and the desired level of cloud optimization.

The decision tree begins with an initial assessment of the application’s complexity.

- High Complexity: If the application is complex with high technical debt, refactoring or repurchasing might be the most suitable options, assuming business requirements align with these strategies.

- Moderate Complexity: For applications with moderate complexity, replatforming can be a viable option, providing a balance between effort and cloud optimization.

- Low Complexity: Applications with low complexity can be rehosted, offering a quick migration path.

Following the complexity assessment, the decision tree considers business agility requirements, meaning how quickly the migration must be completed.

- High Agility Requirements: If the business requires high agility and speed, rehosting or repurchasing may be favored.

- Moderate Agility Requirements: Replatforming can be a good fit for moderate agility needs.

- Low Agility Requirements: Refactoring provides the most flexibility but requires a longer timeframe.

Finally, the decision tree considers the desired level of cloud optimization.

- High Optimization Desired: Refactoring offers the greatest potential for optimization.

- Moderate Optimization Desired: Replatforming provides moderate optimization opportunities.

- Low Optimization Desired: Rehosting offers the least optimization, but is a faster migration approach.

By following this decision tree, organizations can systematically evaluate their workloads and select the migration strategy that best aligns with their specific needs and objectives, directly impacting the right-sizing efforts.
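The three decision points can be combined into a simple recommendation function. The text does not say how to weigh criteria that point in different directions, so this sketch assumes an equal-weight vote across complexity, agility, and optimization, with ties broken by a hypothetical order of increasing migration effort. Retiring is left out because it is decided earlier, when asking whether the application is still needed at all.

```python
from collections import Counter

# Preferred strategies at each level, transcribed from the decision points above.
PREFERENCES = {
    "complexity": {"high": ["refactor", "repurchase"], "moderate": ["replatform"], "low": ["rehost"]},
    "agility": {"high": ["rehost", "repurchase"], "moderate": ["replatform"], "low": ["refactor"]},
    "optimization": {"high": ["refactor"], "moderate": ["replatform"], "low": ["rehost"]},
}

# Hypothetical tie-break order, from least to most migration effort.
EFFORT_ORDER = ["rehost", "repurchase", "replatform", "refactor"]


def recommend(complexity: str, agility: str, optimization: str) -> str:
    """Equal-weight vote across the three criteria (an assumption, not from the text)."""
    levels = {"complexity": complexity, "agility": agility, "optimization": optimization}
    votes = Counter()
    for criterion, level in levels.items():
        votes.update(PREFERENCES[criterion][level])
    best = max(votes.values())
    # Among the strategies with the most votes, prefer the lowest-effort one.
    return next(s for s in EFFORT_ORDER if votes[s] == best)


if __name__ == "__main__":
    print(recommend("low", "high", "low"))                # rehost
    print(recommend("high", "low", "high"))               # refactor
    print(recommend("moderate", "moderate", "moderate"))  # replatform
```

An organization that cares more about one criterion could replace the equal vote with weights; the point of the sketch is only that the three assessments can be recorded and combined consistently across a large application portfolio.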

Comparison of Cloud Migration Tools and Their Right-Sizing Capabilities

Various cloud migration tools offer different features and capabilities that support right-sizing efforts. The choice of tool should align with the selected migration strategy and the specific needs of the organization.

The following table compares several popular cloud migration tools, evaluating their right-sizing capabilities.

| Tool | Migration Strategy Support | Right-Sizing Features | Automation Capabilities | Cost Analysis |
|---|---|---|---|---|
| AWS Migration Hub | Rehosting, Replatforming, Refactoring | Instance recommendations based on utilization metrics, cost optimization suggestions. | Automated assessment, planning, and tracking of migrations. | Detailed cost reporting and optimization recommendations. |
| Azure Migrate | Rehosting, Replatforming | VM sizing recommendations, cost estimation based on on-premises resource utilization. | Automated assessment, migration, and post-migration optimization. | Cost analysis and comparison between on-premises and Azure environments. |
| Google Cloud Migrate for Compute Engine | Rehosting | VM sizing based on on-premises resource utilization, automated instance selection. | Automated migration of VMs to Google Cloud. | Cost estimation and optimization recommendations based on Google Cloud pricing. |
| CloudEndure Migration | Rehosting | Limited right-sizing capabilities; focuses on replicating existing infrastructure. | Automated replication and cutover of workloads. | Basic cost estimation based on target cloud instance selection. |
| Carbonite Migrate | Rehosting | Focuses on data migration and replication, limited right-sizing features. | Automated data replication and migration. | Provides cost estimates based on the target cloud platform. |

The table highlights the strengths and weaknesses of each tool concerning right-sizing. AWS Migration Hub and Azure Migrate offer more comprehensive right-sizing features, including instance recommendations and cost optimization suggestions. Tools like CloudEndure Migration and Carbonite Migrate primarily focus on data migration and replication, with limited right-sizing capabilities. The selection of the appropriate tool should align with the chosen migration strategy and the specific right-sizing needs of the organization.

For example, an organization that wants sizing recommendations as part of a rehosting migration would likely find AWS Migration Hub or Azure Migrate more suitable, while an organization whose priority is replicating workloads as-is might opt for CloudEndure Migration or Carbonite Migrate and right-size after cutover.

Analyzing Workload Resource Requirements

Understanding workload resource requirements is a critical step in cloud migration planning. Accurate analysis enables the effective allocation of resources, ensuring optimal performance and cost efficiency within the cloud environment. This process involves a detailed examination of existing infrastructure and application behavior to determine the necessary compute, storage, and network resources for each workload.

Techniques for Analyzing Workload Resource Demands

Several techniques can be employed to analyze workload resource demands in a cloud environment. These methods provide a comprehensive understanding of resource consumption patterns and help in making informed decisions about resource allocation.

- Baseline Measurement: Establish a baseline of resource usage for each workload before migration. This involves monitoring CPU utilization, memory consumption, disk I/O, and network traffic over a defined period. This baseline serves as a reference point for comparing resource demands after migration and identifying potential bottlenecks.

- Performance Testing: Conduct performance testing using realistic workloads and simulated user traffic. This helps assess how the application performs under various load conditions and identify resource limitations. Performance tests can include load testing, stress testing, and endurance testing. For example, a web application can be subjected to a load test simulating thousands of concurrent users to determine its CPU and memory requirements.

- Profiling and Code Analysis: Profile application code to identify performance bottlenecks and resource-intensive operations. This can involve using profiling tools to analyze CPU usage, memory allocation, and database queries. Code analysis helps optimize the application and reduce its resource demands.

- Dependency Mapping: Map dependencies between different components of the application and its underlying infrastructure. Understanding these dependencies is crucial for determining the impact of resource changes on overall performance. For instance, if a database server is a critical dependency, ensure sufficient resources are allocated to support its performance.

- Scenario Planning: Develop scenario plans to anticipate future resource demands. This involves considering factors like projected user growth, seasonal variations in traffic, and the introduction of new features. Scenario planning allows for proactive resource allocation and prevents performance degradation during peak periods.
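Scenario planning often reduces to projecting a future peak and converting it into capacity. The sketch below assumes compound monthly growth, a single seasonal multiplier, and a 70% target utilization at peak; the growth rate, seasonal factor, and per-instance capacity in the usage lines are hypothetical placeholders for figures from your own baseline measurements and load tests.

```python
import math


def projected_peak(current_peak: float, monthly_growth: float, months: int,
                   seasonal_factor: float = 1.0) -> float:
    """Peak demand after `months` of compound growth, scaled by a seasonal multiplier."""
    return current_peak * (1 + monthly_growth) ** months * seasonal_factor


def instances_needed(peak_demand: float, capacity_per_instance: float,
                     target_utilization: float = 0.7) -> int:
    """Instances required so each runs at or below the target utilization at peak."""
    return math.ceil(peak_demand / (capacity_per_instance * target_utilization))


if __name__ == "__main__":
    # Hypothetical: 1,000 req/s peak today, 5% monthly growth over 12 months,
    # a 1.5x holiday season, and 500 req/s per instance measured in load testing.
    peak = projected_peak(1000, 0.05, 12, seasonal_factor=1.5)
    print(round(peak), instances_needed(peak, 500))  # 2694 8
```

Running several scenarios (for example, low, expected, and high growth) through the same two functions gives a range of capacity estimates rather than a single number, which is usually a better basis for planning.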

Using Monitoring Tools to Track Resource Usage in Real-Time

Real-time monitoring is essential for tracking resource usage and identifying performance issues in the cloud environment. Several monitoring tools provide valuable insights into resource consumption patterns, allowing for proactive adjustments and optimization.

- Cloud Provider’s Native Monitoring Tools: Leverage the native monitoring tools provided by the cloud provider. These tools offer detailed metrics on resource utilization, such as CPU utilization, memory usage, disk I/O, and network traffic. They often provide dashboards and alerts for specific thresholds. For example, AWS CloudWatch, Azure Monitor, and Google Cloud Monitoring provide comprehensive monitoring capabilities.

- Third-Party Monitoring Solutions: Utilize third-party monitoring solutions for advanced features and integrations. These tools often provide more granular monitoring, customizable dashboards, and integration with other IT systems. Popular choices include Datadog, New Relic, and Dynatrace.

- Setting up Alerts and Notifications: Configure alerts and notifications based on predefined thresholds. When resource utilization exceeds these thresholds, alerts are triggered, notifying administrators of potential issues. This enables proactive intervention and prevents performance degradation.

- Analyzing Historical Data: Review historical data to identify trends and patterns in resource usage. This analysis helps in forecasting future resource demands and optimizing resource allocation. For example, analyzing historical CPU usage data can reveal peak usage periods and inform scaling decisions.

- Implementing Custom Dashboards: Create custom dashboards to visualize key performance indicators (KPIs) and resource metrics. This allows for easy monitoring and quick identification of performance bottlenecks. Dashboards can be tailored to specific workloads and applications.
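Alerting on a single sample tends to fire on brief, harmless spikes. A common refinement, similar in spirit to the evaluation-period settings offered by native tools such as CloudWatch alarms, is to alert only after several consecutive breaches. The function below is a hypothetical illustration of that logic, not any tool's API; it fires once per sustained run of breaches.

```python
from typing import Iterable, List


def alert_indices(samples: Iterable[float], threshold: float, window: int) -> List[int]:
    """Return the sample indices at which an alert fires.

    An alert fires when `window` consecutive samples exceed `threshold`, and fires
    only once per sustained run; a sample at or below the threshold resets the run.
    """
    alerts = []
    run = 0
    for i, value in enumerate(samples):
        run = run + 1 if value > threshold else 0
        if run == window:
            alerts.append(i)
    return alerts


if __name__ == "__main__":
    # Hypothetical CPU % samples taken once per minute
    cpu = [50, 95, 96, 40, 91, 92, 93, 94, 30]
    print(alert_indices(cpu, threshold=90, window=3))  # [6]: the two-sample spike is ignored
```

A longer window reduces noise but delays the alert, so the window is best chosen from how long the workload can run saturated before users notice.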

Best Practices for Optimizing Resource Allocation in the Cloud

Optimizing resource allocation is crucial for achieving cost efficiency and ensuring optimal performance in the cloud. Implementing best practices helps in effectively managing resources and minimizing waste.

- Right-Sizing Instances: Choose instance sizes that match the actual resource requirements of the workload. Avoid over-provisioning resources, which leads to unnecessary costs. Regularly review instance sizes and adjust them based on real-time resource usage.

- Automated Scaling: Implement automated scaling to dynamically adjust resources based on demand. Scaling can be based on CPU utilization, memory usage, or other performance metrics. Automated scaling ensures that resources are available when needed and minimizes costs during periods of low demand.

- Storage Optimization: Select appropriate storage types based on the performance and cost requirements of the workload. Consider using SSDs for performance-intensive applications and object storage for archival data. Regularly review storage usage and optimize data placement.

- Network Optimization: Optimize network configuration and bandwidth allocation to ensure efficient data transfer. Consider using content delivery networks (CDNs) for distributing content and reducing latency. Monitor network traffic and identify potential bottlenecks.

- Cost Management Tools: Utilize cost management tools to monitor and control cloud spending. These tools provide insights into resource consumption and help identify cost optimization opportunities. Implement budgeting and forecasting to manage cloud costs effectively.

- Regular Performance Reviews: Conduct regular performance reviews to assess resource utilization and identify areas for optimization. These reviews should involve analyzing monitoring data, reviewing instance sizes, and evaluating application performance.

- Leveraging Reserved Instances or Committed Use Discounts: Consider using reserved instances or committed use discounts to reduce cloud costs. These pricing models offer significant discounts for long-term resource commitments. Evaluate resource usage patterns and choose the appropriate pricing model.

- Implementing Resource Tags: Use resource tags to categorize and organize cloud resources. Tags allow for better tracking of resource usage and facilitate cost allocation. They can also be used for automating tasks and implementing resource policies.
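Automated scaling policies frequently use target tracking: keep a metric such as average CPU utilization near a target by adjusting the instance or replica count proportionally. The sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current * currentMetric / targetMetric), clamped to hypothetical minimum and maximum bounds; real autoscalers also apply tolerances, cooldowns, and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Proportional target-tracking rule, clamped to [min_replicas, max_replicas].

    Example: 4 replicas averaging 90% CPU against a 60% target -> ceil(4 * 90 / 60) = 6.
    """
    desired = math.ceil(current * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, 90, 60))  # scale out to 6
    print(desired_replicas(4, 30, 60))  # scale in to 2
```

Rounding up biases the rule toward spare capacity, and the upper bound doubles as a cost guardrail if a metric misbehaves.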

Selecting Cloud Instance Types and Sizes

Choosing the appropriate cloud instance types and sizes is a critical step in cloud migration, directly impacting performance, cost, and scalability. A well-informed selection process ensures optimal resource utilization, avoids over-provisioning (leading to unnecessary expenses), and prevents under-provisioning (which can degrade application performance). This section details the factors involved in selecting cloud instance types and sizes and provides a practical mapping guide for common workload types.

Factors in Choosing Cloud Instance Types

The selection of cloud instance types involves a careful evaluation of several key factors. Understanding these factors allows for a more informed decision-making process, leading to a more efficient and cost-effective cloud infrastructure.

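- CPU (Central Processing Unit): The CPU is responsible for executing instructions and processing data. The number of vCPUs (virtual CPUs) and their clock speed are crucial. Consider the CPU utilization of on-premises servers to determine the necessary vCPU count in the cloud. For example, a workload consistently utilizing 80% of a 4-core CPU might benefit from migrating to a cloud instance with 4 vCPUs. Note that on many cloud instance types a vCPU corresponds to a single hardware thread rather than a full physical core, so 4 vCPUs can deliver noticeably less capacity than 4 physical cores with simultaneous multithreading enabled; compare thread counts and processor generations, and validate the choice with load testing.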

- Memory (RAM – Random Access Memory): Memory is used to store data and instructions that the CPU actively uses. Adequate memory prevents performance bottlenecks. Analyze the memory consumption of the existing infrastructure. A server consistently using 16GB of RAM on-premises would likely require at least 16GB of RAM in the cloud.

- Storage: Storage requirements include the size, type (e.g., SSD, HDD), and performance characteristics (e.g., IOPS – Input/Output Operations Per Second) of the storage. Choose storage options based on the application’s I/O demands. For databases requiring high I/O, SSD-backed storage is often preferred. Consider the total storage capacity needed and the expected growth rate.

- Network: Network performance is crucial for applications that transfer large amounts of data. Consider network bandwidth (measured in Gbps) and latency. Applications with high network demands, such as video streaming services, will require instances with higher network capabilities. Network performance impacts the speed of data transfer between the instance and the internet or other cloud resources.

- Instance Type Specifics: Cloud providers offer various instance families optimized for different workloads. General-purpose instances provide a balance of CPU, memory, and network resources. Compute-optimized instances prioritize CPU performance, while memory-optimized instances focus on RAM capacity. Consider the specific requirements of the application when selecting the instance family.

- Cost: Different instance types and sizes have varying costs. Consider the pricing models offered by the cloud provider (e.g., on-demand, reserved instances, spot instances). Optimizing for cost involves balancing performance needs with budgetary constraints. Using reserved instances for stable workloads can lead to significant cost savings.
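The CPU and memory guidance above reduces to simple arithmetic. The following is a minimal Python sketch under stated assumptions: utilization was measured against physical cores, each cloud vCPU is one hardware thread (two per core), and the cloud instance should run at no more than 70% CPU with 20% memory headroom. The function names and default values are illustrative, not provider rules.

```python
import math


def required_vcpus(physical_cores: int, avg_utilization: float,
                   vcpus_per_core: int = 2,
                   target_utilization: float = 0.7) -> int:
    """Estimate cloud vCPUs for a workload measured on physical cores.

    Assumes utilization was measured against physical cores, that each
    cloud vCPU is one hardware thread (vcpus_per_core per core), and that
    the cloud instance should run at or below target_utilization.
    """
    busy_cores = physical_cores * avg_utilization
    return math.ceil(busy_cores * vcpus_per_core / target_utilization)


def required_memory_gib(peak_used_gib: float, headroom: float = 0.2) -> float:
    """Peak observed memory plus a safety margin (20% by default)."""
    return peak_used_gib * (1 + headroom)


# The CPU example from the text: 4 physical cores at 80% average utilization.
print(required_vcpus(4, 0.80))                    # 10
print(required_vcpus(4, 0.80, vcpus_per_core=1))  # 5 if a vCPU were a full core
print(required_memory_gib(16))                    # about 19.2 GiB for 16 GB used
```

The estimate is then rounded up to a size the provider actually offers (commonly 2, 4, 8, or 16 vCPUs), so the 10-vCPU figure would land on a 16-vCPU instance unless load testing shows that a smaller size suffices.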

Mapping On-Premises Resources to Cloud Instance Sizes

Mapping on-premises resources to cloud instance sizes requires a methodical approach, taking into account the utilization patterns and performance characteristics of the existing infrastructure. This process involves analyzing resource consumption metrics, identifying bottlenecks, and selecting cloud instances that provide comparable or improved performance.

- Assess Resource Utilization: Gather data on CPU utilization, memory usage, disk I/O, and network traffic from on-premises servers. Use monitoring tools to collect metrics over a representative period (e.g., a week or a month) to understand peak and average resource demands.

- Identify Bottlenecks: Analyze the collected data to identify resource bottlenecks, that is, the resources that limit application performance. For example, if the CPU is consistently at 100% utilization, the application is likely CPU-bound.

- Choose Cloud Instance Type and Size: Based on the resource utilization and identified bottlenecks, select a cloud instance type and size that meets the application’s needs. Consider the instance’s vCPU count, memory capacity, storage options, and network capabilities.

- Test and Optimize: After migrating to the cloud, monitor the application’s performance and resource utilization. Fine-tune the instance size as needed. Over time, the application’s performance and resource consumption can be optimized to reduce costs and improve efficiency.
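The mapping steps above can be sketched in Python. This is a minimal illustration, assuming a hypothetical instance catalog (names and prices are invented), a nearest-rank 95th percentile as the "peak" figure, a 70% CPU target, and 20% memory headroom. Real catalogs also differ in storage, network, and processor generation, which this sketch ignores.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class InstanceType:
    name: str           # hypothetical instance name
    vcpus: int
    memory_gib: float
    hourly_usd: float   # hypothetical on-demand price


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty sample."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def pick_instance(catalog: Sequence[InstanceType], need_vcpus: float,
                  need_memory_gib: float) -> Optional[InstanceType]:
    """Cheapest instance that satisfies both CPU and memory requirements."""
    fits = [i for i in catalog
            if i.vcpus >= need_vcpus and i.memory_gib >= need_memory_gib]
    return min(fits, key=lambda i: i.hourly_usd, default=None)


CATALOG = [
    InstanceType("gp-small", 2, 8, 0.10),
    InstanceType("gp-medium", 4, 16, 0.19),
    InstanceType("mem-medium", 4, 32, 0.25),
    InstanceType("gp-large", 8, 32, 0.38),
]

# Busy vCPUs and used memory (GiB) sampled over the assessment period.
cpu_busy = [1.1, 1.4, 2.0, 1.8, 2.6, 1.2, 1.5, 2.2, 1.9, 3.0]
mem_used = [10.5, 11.0, 12.2, 11.8, 13.0, 10.9, 11.4, 12.7, 12.0, 13.4]

need_cpu = percentile(cpu_busy, 95) / 0.7   # keep p95 at or below 70% CPU
need_mem = percentile(mem_used, 95) * 1.2   # 20% memory headroom
choice = pick_instance(CATALOG, need_cpu, need_mem)
print(choice.name if choice else "no fit")  # gp-large
```

Because the p95 CPU demand (about 4.3 vCPUs at the 70% target) slightly exceeds 4, the sketch skips both 4-vCPU sizes and picks the 8-vCPU type. Boundary cases like this are exactly what the Test and Optimize step should revisit.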

Mapping Process for Common Workload Types

The following table illustrates a sample mapping process for common workload types. This is a guideline, and the specific instance sizes may vary depending on the cloud provider and the application’s specific requirements.

| Workload Type | On-Premises Resource (Example) | Cloud Instance Recommendation (Example) | Considerations |
|---|---|---|---|
| Web Server | 4 vCPU, 8 GB RAM, 100 GB SSD | Cloud Provider A: General Purpose Instance (e.g., m5.large) 2 vCPUs, 8 GB RAM, plus 100 GB SSD block storage; Cloud Provider B: Standard Instance (e.g., B2s) | Consider traffic volume and peak load. The smaller cloud size assumes utilization data shows the on-premises server is underused. Horizontal scaling (adding more instances) may be preferred over vertical scaling (increasing instance size) for high-traffic websites. |
| Database Server (OLTP) | 8 vCPU, 32 GB RAM, 500 GB SSD, 10,000 IOPS | Cloud Provider A: Memory Optimized Instance (e.g., r5.2xlarge) 8 vCPUs, 64 GB RAM, plus 500 GB SSD block storage; Cloud Provider B: Memory Optimized Instance (e.g., E8s v5) | Prioritize memory and storage I/O. Choose an instance with sufficient RAM to cache frequently accessed data and SSD-backed storage provisioned for the required IOPS. Database-specific instance types often provide optimized configurations. |
| Application Server | 4 vCPU, 16 GB RAM, 200 GB SSD | Cloud Provider A: General Purpose Instance (e.g., m5.xlarge) 4 vCPUs, 16 GB RAM, plus 200 GB SSD block storage; Cloud Provider B: Compute Optimized Instance (e.g., F4s v2) | Consider the application's CPU and memory requirements. Monitor CPU utilization and memory usage to identify bottlenecks. The choice of the instance family depends on the application's needs; a compute-optimized size such as F4s v2 offers less RAM per vCPU (8 GB at 4 vCPUs), so confirm memory needs first. |
| File Server | 2 vCPU, 4 GB RAM, 1 TB HDD | Cloud Provider A: General Purpose Instance (e.g., t3.medium) 2 vCPUs, 4 GB RAM, plus 1 TB HDD block storage; Cloud Provider B: General Purpose Instance (e.g., B2ms) | Prioritize storage capacity. Consider the expected file transfer rate and the number of concurrent users. Choose the appropriate storage type based on the performance requirements. |

Right-Sizing Strategies for Different Workload Types

Effective cloud resource right-sizing requires tailored approaches depending on the specific workload characteristics. Different application architectures, resource demands, and scaling patterns necessitate customized strategies to optimize performance and cost. This section explores right-sizing methodologies for virtual machines, containerized applications, and database instances, providing actionable insights for cloud migration planning.

Right-Sizing Approaches for Virtual Machines (VMs)

VMs represent a fundamental building block in cloud infrastructure, and their right-sizing is crucial for overall efficiency. The approach involves a thorough analysis of resource utilization, performance metrics, and workload patterns. This ensures that VMs are provisioned with the appropriate CPU, memory, storage, and network resources to meet their operational requirements without unnecessary over-provisioning. To effectively right-size VMs, consider the following strategies:

- Performance Monitoring and Analysis: Continuously monitor key performance indicators (KPIs) such as CPU utilization, memory usage, disk I/O, and network throughput. Utilize cloud provider monitoring tools (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring) or third-party monitoring solutions to collect and analyze these metrics over time. Identify resource bottlenecks and underutilized resources. For example, if a VM consistently shows low CPU utilization (e.g., below 20%) and ample available memory, it might be over-provisioned and could be downsized.

- Workload Profiling: Understand the nature of the workload running on the VM. Is it CPU-bound, memory-bound, or I/O-bound? Workload profiling involves analyzing application behavior to identify resource-intensive operations and their impact on performance. This understanding informs the selection of appropriate instance types and sizes.

- Instance Type Selection: Choose instance types that align with the workload’s resource requirements. Cloud providers offer a variety of instance families optimized for different use cases (e.g., compute-optimized, memory-optimized, storage-optimized). Select the instance family and size that best matches the workload’s profile. For instance, a database server might benefit from a memory-optimized instance with a high memory-to-CPU ratio.

- Right-Sizing Tools: Employ right-sizing tools from cloud providers or third-party vendors to automate the process. These tools analyze historical performance data and recommend instance size adjustments. AWS Compute Optimizer, for example, provides recommendations for EC2 instances based on performance metrics.

- Automation and Scripting: Automate the right-sizing process using scripting or infrastructure-as-code (IaC) tools. This allows for dynamic adjustments based on changing workload demands. For example, you can use auto-scaling groups to automatically scale the number of VMs based on CPU utilization or other metrics, and you can use scripts to change instance sizes based on the load. Because changing a VM's size usually requires stopping or restarting it, schedule such resizes during maintenance windows.

- Testing and Validation: Before making any changes to VM sizes, test the impact on application performance and stability. Conduct performance tests under realistic load conditions to validate that the right-sized VMs meet the required service-level agreements (SLAs).
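The monitoring and analysis strategy above can be reduced to a simple rule. The Python sketch below assumes utilization samples in percent exported from a monitoring tool, uses the 95th percentile rather than the average so that short peaks are not ignored, and combines the text's 20% CPU example with hypothetical thresholds of 50% for memory and 80% as the upper limit. It only flags candidates; the Testing and Validation step still applies before any resize.

```python
import math
from typing import Sequence


def p95(samples: Sequence[float]) -> float:
    """Nearest-rank 95th percentile of a non-empty sample."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.95 * len(ordered)) - 1]


def classify_vm(cpu_pct: Sequence[float], mem_pct: Sequence[float],
                cpu_low: float = 20.0, mem_low: float = 50.0,
                high: float = 80.0) -> str:
    """Label a VM from CPU and memory utilization samples (in percent).

    The thresholds are illustrative assumptions, not provider defaults.
    """
    cpu, mem = p95(cpu_pct), p95(mem_pct)
    if cpu > high or mem > high:
        return "under-provisioned"   # candidate for a larger size or family
    if cpu < cpu_low and mem < mem_low:
        return "over-provisioned"    # candidate for downsizing
    return "right-sized"


print(classify_vm([10, 12, 15, 11], [30, 35, 40, 32]))  # over-provisioned
print(classify_vm([50, 95, 60, 70], [40, 40, 40, 40]))  # under-provisioned
```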

Strategies for Right-Sizing Containerized Applications

Containerized applications, orchestrated by platforms like Kubernetes, offer flexibility and scalability. Right-sizing containerized workloads involves optimizing resource requests and limits, ensuring efficient resource utilization and preventing resource contention. The goal is to allocate resources effectively to each container, maximizing the utilization of the underlying infrastructure. The following strategies are essential for right-sizing containerized applications:

- Resource Requests and Limits: Define resource requests and limits for each container in the deployment configuration (e.g., Kubernetes Pod definition).

  - Requests specify the amount of CPU and memory reserved for a container; the scheduler places a Pod only on a node with enough unreserved capacity to satisfy them.

  - Limits define the maximum amount of resources a container can consume. A container that exceeds its CPU limit is throttled, while one that exceeds its memory limit can be terminated (OOM-killed).

Properly setting these values is crucial for resource allocation and preventing resource exhaustion. For example, if a container consistently consumes 500MB of memory, set the memory request to 500MB and the memory limit to a slightly higher value (e.g., 600MB) to allow for bursts in memory usage.

- Monitoring Container Resource Usage: Monitor the resource consumption of each container using tools like the Kubernetes Metrics Server (a cluster add-on that supplies the resource metrics used by kubectl top and the Horizontal Pod Autoscaler) or third-party monitoring solutions (e.g., Prometheus, Grafana). Analyze CPU utilization, memory usage, and other relevant metrics to identify resource bottlenecks and underutilized resources.

- Horizontal Pod Autoscaling (HPA): Implement HPA to automatically scale the number of container instances (Pods) based on resource utilization metrics. HPA allows you to dynamically adjust the capacity of your application to meet changing demand. For example, you can configure HPA to scale the number of Pods based on CPU utilization, increasing the number of Pods when CPU utilization exceeds a threshold and decreasing the number of Pods when utilization drops below a threshold.

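- Vertical Pod Autoscaling (VPA): Use VPA, which is installed separately from core Kubernetes, to automatically adjust the resource requests and limits of individual containers based on their resource usage. VPA analyzes historical resource usage and modifies the requests (scaling limits proportionally) to optimize resource allocation. This can help prevent resource over-provisioning and ensure that containers have the resources they need. Avoid combining VPA with an HPA that scales on the same CPU or memory metrics, because the two controllers can work against each other.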

- Container Image Optimization: Optimize container images to reduce their size and improve startup time. Smaller images consume fewer resources and can be deployed more quickly. Use techniques like multi-stage builds and image layering to minimize the size of the image.

- Resource Quotas: Enforce resource quotas at the namespace level to limit the total amount of resources that can be consumed by containers within that namespace. This helps prevent resource exhaustion and ensures that all applications have access to the resources they need.
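Two of these strategies are small enough to sketch in Python. The first function turns observed container usage into request and limit values, following the text's example of a limit slightly above typical usage; basing requests on the 95th percentile and adding 20% headroom for limits are assumptions. The second mirrors the core replica calculation that the Kubernetes documentation gives for HPA, desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), including the default 10% tolerance. The real controller also handles stabilization windows, Pod readiness, and missing metrics, which this sketch omits.

```python
import math
from typing import Dict, Sequence


def p95(samples: Sequence[int]) -> int:
    """Nearest-rank 95th percentile of a non-empty sample."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.95 * len(ordered)) - 1]


def container_resources(cpu_millicores: Sequence[int], memory_mib: Sequence[int],
                        headroom_pct: int = 20) -> Dict[str, Dict[str, str]]:
    """Requests at observed p95 usage, limits headroom_pct above the requests."""
    cpu_req, mem_req = p95(cpu_millicores), p95(memory_mib)
    return {
        "requests": {"cpu": f"{cpu_req}m", "memory": f"{mem_req}Mi"},
        "limits": {
            "cpu": f"{cpu_req + cpu_req * headroom_pct // 100}m",
            "memory": f"{mem_req + mem_req * headroom_pct // 100}Mi",
        },
    }


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, min_replicas: int = 1,
                         max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Core HPA rule: ceil(current * current_metric / target_metric).

    Ratios within `tolerance` of 1.0 leave the replica count unchanged;
    the result is clamped to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# requests 250m CPU / 500Mi memory, limits 300m CPU / 600Mi memory
print(container_resources([180, 220, 250, 210], [480, 495, 500, 470]))
print(hpa_desired_replicas(4, current_metric=90, target_metric=60))  # 6
```

The returned dictionary maps directly onto the resources.requests and resources.limits fields of a container spec.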

Approaches for Right-Sizing Database Instances

Database instances often represent a critical component of cloud-based applications, and right-sizing them is vital for performance and cost optimization. Database right-sizing involves analyzing database performance, workload patterns, and resource utilization to determine the optimal instance size and configuration. This ensures that the database can handle the workload efficiently while minimizing unnecessary costs. Effective right-sizing of database instances involves these key approaches:

- Performance Monitoring and Analysis: Implement comprehensive performance monitoring for the database instance. Monitor key metrics such as CPU utilization, memory usage, disk I/O, query response times, and connection pool utilization. Use database-specific monitoring tools (e.g., MySQL Enterprise Monitor, PostgreSQL’s pg_stat_statements) or cloud provider monitoring services (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring) to collect and analyze these metrics.

- Workload Characterization: Analyze the database workload to understand its characteristics. Is the workload read-heavy, write-heavy, or a mix of both? Are there specific queries or operations that are resource-intensive? Understanding the workload helps determine the optimal instance type and configuration. For example, a read-heavy workload might benefit from a larger instance with more memory and a high-performance storage configuration.

- Instance Type Selection: Choose the appropriate database instance type based on the workload’s requirements. Cloud providers offer a variety of instance types optimized for different database workloads. Consider factors such as CPU, memory, storage I/O, and network performance. For example, a database that performs many write operations might benefit from an instance with high-performance storage (e.g., SSD-backed storage).

- Database Configuration Optimization: Optimize the database configuration to improve performance. This includes tuning database parameters such as buffer pool size, connection pool size, query optimization settings, and indexing strategies. For example, increasing the buffer pool size can improve performance by reducing disk I/O.

- Scaling Strategies: Implement scaling strategies to handle fluctuations in database workload.

  - Vertical Scaling involves increasing the resources (CPU, memory, storage) of the existing database instance.

  - Horizontal Scaling involves adding more database instances to the cluster.

The choice of scaling strategy depends on the database technology and workload characteristics.

- Database Replication and Sharding: Utilize database replication and sharding to improve performance and scalability. Replication allows you to distribute read operations across multiple replicas, reducing the load on the primary database instance. Sharding involves partitioning the database across multiple instances, allowing you to scale horizontally.

- Cost Optimization: Implement cost optimization strategies such as reserved instances or committed use discounts to reduce the cost of database instances. Regularly review database instance usage and identify opportunities for cost savings.
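Replication and sharding both come down to deciding which instance handles a request. The Python sketch below is illustrative only: the hostnames are hypothetical, and production systems usually delegate this to a database driver, proxy, or managed endpoint. Reads sent to replicas may return slightly stale data because of replication lag, and the simple modulo scheme moves most keys when the shard count changes, which is why consistent hashing is often used instead.

```python
import hashlib
import itertools
from typing import Optional, Sequence


class ReadWriteRouter:
    """Send writes to the primary; spread reads round-robin across replicas."""

    def __init__(self, primary: str, replicas: Sequence[str]):
        self.primary = primary
        self._replicas: Optional[itertools.cycle] = (
            itertools.cycle(replicas) if replicas else None)

    def route(self, is_write: bool) -> str:
        if is_write or self._replicas is None:
            return self.primary
        return next(self._replicas)


def shard_for(key: str, shard_count: int) -> int:
    """Map a shard key to a shard index using a stable hash.

    Python's built-in hash() is randomized per process for strings, so a
    cryptographic digest is used to get the same answer on every host.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


router = ReadWriteRouter("db-primary", ["db-replica-1", "db-replica-2"])
print(router.route(is_write=True))    # db-primary
print(router.route(is_write=False))   # db-replica-1
print(router.route(is_write=False))   # db-replica-2
print(shard_for("customer-42", 4))    # same index in every process
```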

Utilizing Cloud Provider Tools for Right-Sizing

Cloud providers offer a suite of native tools and services designed to facilitate resource optimization, enabling businesses to reduce costs and improve performance. These tools provide valuable insights into resource utilization patterns, allowing for informed decisions regarding instance sizing and configuration. Leveraging these capabilities is crucial for achieving efficient cloud resource management.

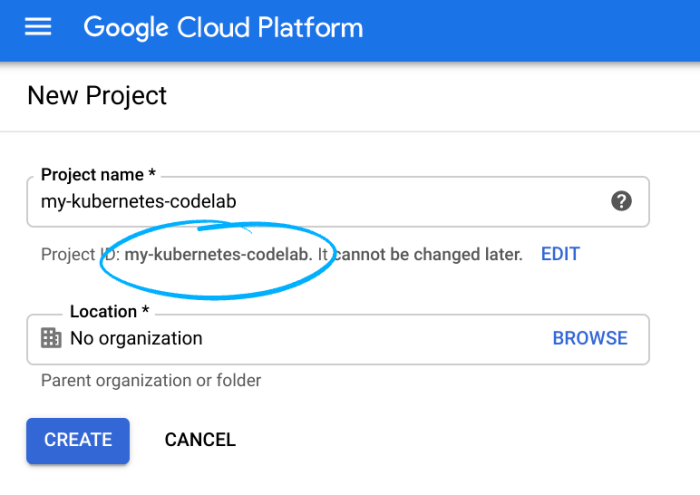

Identifying Built-in Tools and Services for Resource Optimization

Major cloud providers – Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) – each provide comprehensive tools for resource optimization. These tools analyze resource usage, identify inefficiencies, and recommend appropriate adjustments. Understanding the functionalities of these tools is the first step toward effective right-sizing.

- AWS: AWS offers a robust set of tools, including AWS Cost Explorer for cost analysis, AWS Compute Optimizer for instance recommendations, and Amazon CloudWatch for monitoring and alerting.

- Azure: Azure provides Azure Advisor for personalized recommendations, Azure Monitor for comprehensive monitoring, and Azure Cost Management + Billing for detailed cost analysis and optimization.

- GCP: GCP features Cloud Monitoring for performance monitoring, Cloud Cost Management for cost optimization, and the Recommender service, which surfaces machine-type (rightsizing) recommendations in the Google Cloud console based on resource utilization data.

Demonstrating the Use of Tools to Identify Underutilized Resources

Cloud provider tools employ various metrics and analysis techniques to identify underutilized resources. This typically involves monitoring CPU utilization, memory usage, network I/O, and disk I/O over a defined period. Tools then flag instances that consistently exhibit low utilization levels, suggesting potential right-sizing opportunities.

For instance, consider a scenario using AWS Compute Optimizer. The tool analyzes historical utilization data of EC2 instances and provides recommendations. If an instance consistently uses less than 20% CPU and has ample memory available, Compute Optimizer might recommend downsizing to a smaller instance type or a less expensive instance family. Note that Compute Optimizer can account for memory utilization only if the Amazon CloudWatch agent is installed on the instance, because EC2 does not report memory metrics by default. The recommendation is derived from measured utilization rather than assumptions.
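Compute Optimizer's findings can also be pulled programmatically. The Python sketch below is a starting point rather than a complete tool: it assumes boto3's compute-optimizer client and the response field names (instanceRecommendations, finding, recommendationOptions, rank, instanceType) as given in the boto3 documentation for get_ec2_instance_recommendations, which should be verified against the current reference. As a precaution, the finding value is matched loosely so that differences in casing or underscores do not silently drop results.

```python
from typing import Any, Dict, List, Optional, Tuple


def overprovisioned(response: Dict[str, Any]) -> List[Tuple[str, str, Optional[str]]]:
    """Return (instance ARN, current type, top-ranked option) for each
    over-provisioned instance in a GetEC2InstanceRecommendations response."""
    results = []
    for rec in response.get("instanceRecommendations", []):
        # Normalize so both "Overprovisioned" and "OVER_PROVISIONED" match.
        finding = rec.get("finding", "").replace("_", "").lower()
        if finding != "overprovisioned":
            continue
        options = sorted(rec.get("recommendationOptions", []),
                         key=lambda o: o.get("rank", 0))
        top = options[0].get("instanceType") if options else None
        results.append((rec["instanceArn"], rec["currentInstanceType"], top))
    return results


def fetch_recommendations() -> Dict[str, Any]:
    """Collect all pages of EC2 recommendations (needs boto3, AWS
    credentials, and an account opted in to Compute Optimizer)."""
    import boto3  # imported lazily so the parser above runs without it

    client = boto3.client("compute-optimizer")
    recs, token = [], None
    while True:
        page = (client.get_ec2_instance_recommendations(nextToken=token)
                if token else client.get_ec2_instance_recommendations())
        recs.extend(page.get("instanceRecommendations", []))
        token = page.get("nextToken")
        if not token:
            return {"instanceRecommendations": recs}


# Example (makes AWS API calls):
# for arn, current, suggested in overprovisioned(fetch_recommendations()):
#     print(f"{arn}: {current} -> {suggested}")
```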

Azure Advisor works similarly, assessing resource usage and offering specific recommendations. If a virtual machine in Azure is consistently underutilized, Azure Advisor will suggest a smaller VM size. The advisor’s recommendations are usually categorized by their impact (High, Medium, Low) and provide an estimated cost savings if the recommendation is implemented. This enables users to prioritize optimization efforts based on potential financial benefits.

Google Cloud’s recommendation engine within the GCP console uses a similar approach. It monitors various resource metrics, including CPU utilization, memory consumption, and network traffic. If a virtual machine is consistently underutilized, GCP will suggest a smaller instance size. The console presents the current instance’s utilization, the recommended instance size, and the potential cost savings. This clear presentation allows users to easily understand and act on the recommendations.

Elaborating on Cost-Saving Features Offered by Cloud Providers

Cloud providers offer various cost-saving features that can be leveraged to minimize resource consumption and reduce overall cloud spending. These features often complement the right-sizing tools, enabling users to further optimize their cloud infrastructure.

- Reserved Instances/Committed Use Discounts: AWS Reserved Instances, Azure Reserved Instances, and GCP Committed Use Discounts offer significant discounts on compute resources in exchange for a commitment to use those resources for a specified period (typically one or three years). These are particularly effective for workloads with predictable resource needs.

- Spot Instances/Preemptible VMs: AWS Spot Instances, Azure Spot VMs, and GCP Spot and Preemptible VMs run on spare compute capacity at a significant discount. The provider can reclaim these instances with short notice (for example, two minutes on AWS), making them suitable for fault-tolerant and non-critical workloads.

- Auto Scaling: All major cloud providers offer auto-scaling capabilities. Auto scaling automatically adjusts the number of running instances based on demand, scaling up during peak periods and scaling down during off-peak periods. This ensures that resources are only used when needed, minimizing costs.

- Rightsizing Recommendations: As discussed previously, the built-in tools provide right-sizing recommendations. These recommendations suggest resizing instances based on utilization data, which can lead to significant cost savings.

- Cost Management Tools: AWS Cost Explorer, Azure Cost Management + Billing, and GCP Cloud Cost Management provide detailed insights into cloud spending, allowing users to identify areas where costs can be reduced. These tools enable the tracking of spending by resource, project, and other criteria, providing a granular view of cloud costs.

For example, consider a company using AWS. By implementing Reserved Instances for their consistently running production servers, they can save up to 72% compared to on-demand pricing. For their development and testing environments, they can use Spot Instances, saving up to 90% compared to on-demand pricing. Additionally, using auto-scaling groups for their web servers ensures that they only pay for the resources they actually need.

Through a combination of these features, they can significantly reduce their overall cloud spending.
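The combined effect can be estimated with simple arithmetic. The Python sketch below uses a hypothetical on-demand price of $0.20 per instance-hour and assumed discounts of 40% for reserved capacity and 70% for Spot, deliberately below the "up to" figures quoted above; actual rates vary by provider, region, instance type, and term. The fleet sizes are also invented for illustration.

```python
from dataclasses import dataclass
from typing import Sequence

HOURS_PER_MONTH = 730          # common approximation: 8,760 hours / 12
ON_DEMAND_HOURLY = 0.20        # hypothetical on-demand price per instance-hour


@dataclass(frozen=True)
class Workload:
    name: str
    avg_instances: float       # average running instances over the month
    discount: float            # assumed fraction off the on-demand price


def monthly_cost(workloads: Sequence[Workload],
                 hourly: float = ON_DEMAND_HOURLY) -> float:
    return sum(w.avg_instances * HOURS_PER_MONTH * hourly * (1 - w.discount)
               for w in workloads)


baseline = [
    Workload("production servers, on-demand", 10, 0.0),
    Workload("dev/test, on-demand", 6, 0.0),
    Workload("web tier fixed at peak size", 12, 0.0),
]
optimized = [
    Workload("production servers, reserved", 10, 0.40),
    Workload("dev/test, Spot", 6, 0.70),
    Workload("web tier auto-scaled, averaging 5", 5, 0.0),
]

before, after = monthly_cost(baseline), monthly_cost(optimized)
print(f"before ${before:,.2f}, after ${after:,.2f}, "
      f"saving {100 * (before - after) / before:.1f}%")
# before $4,088.00, after $1,868.80, saving 54.3%
```

Spot savings only hold if the dev/test tooling tolerates interruptions; otherwise that line item should stay on-demand.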

Monitoring and Performance Tuning Post-Migration

Ongoing monitoring and performance tuning are critical phases in the cloud migration lifecycle. They ensure that migrated workloads operate optimally, maintain their performance levels, and remain cost-efficient. Neglecting these activities can lead to performance degradation, increased operational costs, and, potentially, a failure to realize the full benefits of cloud adoption. Proactive monitoring and tuning are essential to identify and address issues before they impact users or business operations.

Importance of Ongoing Monitoring and Performance Tuning

The post-migration phase requires a continuous cycle of monitoring, analysis, and optimization. This process allows organizations to adapt to changing workloads, identify bottlenecks, and fine-tune resource allocation. Monitoring provides real-time insights into the performance of applications and infrastructure, enabling rapid response to issues. Performance tuning involves making adjustments to resource configurations, code, or application architecture to improve efficiency and reduce costs.

Effective monitoring and tuning directly contribute to the following benefits: improved application performance, reduced operational costs, enhanced resource utilization, proactive identification and resolution of issues, and continuous optimization for cloud environments.

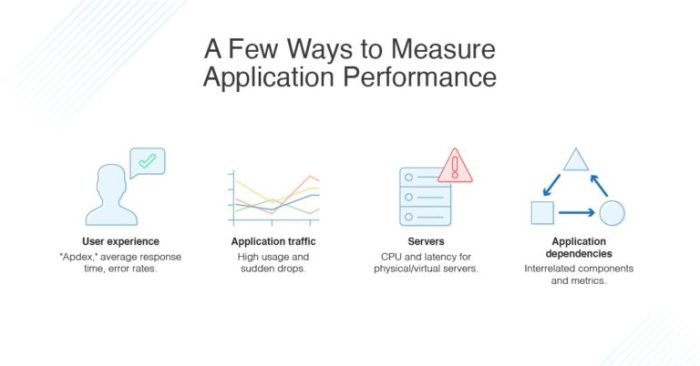

Key Performance Indicators (KPIs) to Track

Establishing a robust set of KPIs is fundamental for effective monitoring. These metrics provide a quantifiable basis for assessing the performance of migrated workloads and identifying areas for improvement. The selection of KPIs should align with the specific characteristics of each workload and the business objectives.

- Application Response Time: Measures the time it takes for an application to respond to user requests. This metric is crucial for assessing the user experience. For example, if a web application’s average response time increases from 500ms to 2 seconds after migration, it indicates a potential performance issue.

- Error Rate: Indicates the percentage of requests that result in errors. High error rates can signal application problems, infrastructure issues, or configuration errors. Tracking error rates allows for prompt identification and resolution of issues impacting user experience. For instance, monitoring an e-commerce platform's error rate might reveal a spike in HTTP 500 (Internal Server Error) responses following a database upgrade.

- CPU Utilization: Reflects the percentage of CPU resources being used by the virtual machines or containers. High CPU utilization can indicate a need for scaling up resources or optimizing code. If a server consistently shows CPU utilization above 80%, it might benefit from a larger instance type.

- Memory Utilization: Measures the amount of memory being used by the system. High memory utilization can lead to performance degradation, especially if the system starts using swap space. Monitoring memory utilization is critical to ensure adequate memory allocation for workloads. A database server experiencing frequent swapping could benefit from increased memory.

- Disk I/O: Measures the read and write operations performed on storage devices. High disk I/O can indicate storage bottlenecks. Analyzing disk I/O helps identify whether the storage infrastructure is performing optimally. For example, a web server with high disk I/O might benefit from SSD storage.

- Network Throughput: Indicates the amount of data transferred over the network. Monitoring network throughput is essential for ensuring sufficient bandwidth for applications. A sudden drop in network throughput might indicate a network issue or a performance bottleneck.

- Cost: Tracks the costs associated with cloud resources. Monitoring costs allows organizations to identify areas for optimization and ensure they are meeting their budget. For example, a gradual increase in cloud spending could indicate inefficient resource allocation.
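The response-time and error-rate KPIs can be computed from request logs and compared against a pre-migration baseline. In the Python sketch below, the record format is hypothetical, only HTTP 5xx responses count as errors (an assumption; some teams also track 4xx), and a KPI counts as regressed if it worsens by more than 20%.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class Request:
    latency_ms: float
    status: int          # HTTP status code


def kpis(requests: Sequence[Request]) -> Dict[str, float]:
    """Average and p95 response time plus server error rate (non-empty input)."""
    latencies = sorted(r.latency_ms for r in requests)
    errors = sum(1 for r in requests if r.status >= 500)
    p95_index = math.ceil(0.95 * len(latencies)) - 1
    return {
        "avg_latency_ms": sum(latencies) / len(latencies),
        "p95_latency_ms": latencies[p95_index],
        "error_rate_pct": 100.0 * errors / len(requests),
    }


def regressions(before: Dict[str, float], after: Dict[str, float],
                tolerance: float = 0.2) -> List[str]:
    """KPIs that worsened by more than `tolerance` (higher is worse for all)."""
    return [k for k in before if after[k] > before[k] * (1 + tolerance)]


# The text's example: average response time rising from 500 ms to 2 s.
print(regressions({"avg_latency_ms": 500.0}, {"avg_latency_ms": 2000.0}))
# ['avg_latency_ms']
```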

Design of a Dashboard Illustrating Metrics to Monitor

A well-designed dashboard is essential for visualizing and interpreting the monitored KPIs. The dashboard should provide a consolidated view of the system’s health, enabling quick identification of issues and trends. It should be customizable to reflect specific application and infrastructure needs.

The dashboard design includes several key components, such as:

- Overall Status Section: This section provides a high-level overview of the system’s health, including the overall application status (e.g., operational, degraded, or down), average response time, and error rate. A color-coded system (e.g., green for good, yellow for warning, red for critical) can be used to indicate the status of each metric.

- Performance Metrics Section: This section presents detailed performance metrics, such as CPU utilization, memory utilization, disk I/O, and network throughput. Each metric should be displayed with a time-series graph to visualize trends over time.

- Cost Analysis Section: This section displays the cost associated with cloud resources, including a breakdown by resource type (e.g., compute, storage, networking). Graphs can illustrate cost trends and provide insights into spending patterns.

- Alerting and Notifications Section: This section provides information on alerts triggered by predefined thresholds for critical metrics. It also includes details on the alert’s severity, the affected resource, and the time the alert was triggered.

- Customizable Views: The dashboard should allow users to customize the view to focus on specific metrics or applications. Users can filter the data based on various criteria, such as time range, application, or resource type.
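The color-coded status indicator can be driven by per-metric warning and critical thresholds, with the overall status taking the worst individual status. The Python sketch below uses hypothetical metric names and thresholds. Most monitoring tools offer this through built-in threshold or alarm settings, so the code only illustrates the logic.

```python
from enum import IntEnum
from typing import Dict, Tuple


class Status(IntEnum):
    GREEN = 0    # good
    YELLOW = 1   # warning
    RED = 2      # critical


def metric_status(value: float, warn: float, crit: float) -> Status:
    """Higher values are worse; crit is checked before warn."""
    if value >= crit:
        return Status.RED
    if value >= warn:
        return Status.YELLOW
    return Status.GREEN


def overall_status(metrics: Dict[str, float],
                   thresholds: Dict[str, Tuple[float, float]]) -> Status:
    """The overview panel shows the worst status of any monitored metric."""
    return max((metric_status(metrics[name], warn, crit)
                for name, (warn, crit) in thresholds.items()),
               default=Status.GREEN)


THRESHOLDS = {                    # hypothetical (warning, critical) levels
    "cpu_pct": (70, 90),
    "error_rate_pct": (1, 5),
    "p95_latency_ms": (800, 2000),
}
current = {"cpu_pct": 55, "error_rate_pct": 2.0, "p95_latency_ms": 600}
print(overall_status(current, THRESHOLDS).name)   # YELLOW
```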

An example dashboard layout might include the following:

Top Section (Overview):

- Application Status: (Green, Yellow, Red Indicator)

- Average Response Time: (Numeric Value, Trend Graph)

- Error Rate: (Percentage, Trend Graph)

Middle Section (Performance Metrics):

- CPU Utilization: (Trend Graph for each server/instance)

- Memory Utilization: (Trend Graph for each server/instance)

- Disk I/O: (Trend Graph for each server/instance)

- Network Throughput: (Trend Graph for each server/instance)

Bottom Section (Cost and Alerts):

- Cost: (Total Cost, Cost Breakdown by Service, Trend Graph)

- Alerts: (List of Active Alerts with Severity, Resource, and Timestamp)

This dashboard design enables administrators and operations teams to quickly assess the performance of the migrated workloads, identify potential issues, and take corrective actions. The dashboard should be continuously updated and refined based on the evolving needs of the application and infrastructure.

Cost Optimization Strategies

Migrating to the cloud offers significant opportunities for cost optimization. However, realizing these benefits requires a proactive approach to manage and control cloud spending. This section details strategies for optimizing costs across compute, storage, and network resources, along with methods for leveraging reserved and spot instances to further reduce expenditures.

Optimizing Compute Costs

Compute resources often represent a significant portion of cloud spending. Effective cost optimization requires a multifaceted approach that includes right-sizing instances, utilizing auto-scaling, and employing efficient instance purchasing models.

- Right-Sizing: As discussed previously, ensuring that compute instances are appropriately sized for their workloads is fundamental. Over-provisioned instances lead to wasted resources and higher costs. Regularly monitor CPU utilization, memory usage, and network I/O to identify opportunities for downsizing or consolidation.

- Auto-Scaling: Implement auto-scaling to automatically adjust the number of instances based on demand. This ensures that resources are available when needed and minimizes idle capacity during periods of low activity. Auto-scaling can be configured based on various metrics, such as CPU utilization, memory usage, and request queue length.

- Instance Purchasing Models: Leverage different instance purchasing models to optimize costs. This includes reserved instances for predictable workloads, spot instances for fault-tolerant workloads, and savings plans for flexible workloads.

- Instance Families and Generations: Choose the most cost-effective instance families and generations for specific workloads. For example, compute-optimized instances are suitable for CPU-intensive tasks, while memory-optimized instances are better for memory-intensive applications. Consider newer instance generations, as they often offer improved performance and cost efficiency.

- Containerization: Containerizing applications allows for more efficient resource utilization. Containers package applications and their dependencies, making them portable and easier to deploy across different environments. This can lead to higher instance density and reduced costs.

Optimizing Storage Costs

Storage costs can accumulate rapidly, especially with large datasets. Optimizing storage costs involves selecting the appropriate storage tiers, managing data lifecycle, and leveraging storage-specific features.

- Storage Tiers: Choose the appropriate storage tier based on data access frequency. For example, frequently accessed data should be stored in a standard or performance tier, while infrequently accessed data can be stored in a cheaper archive tier.

- Data Lifecycle Management: Implement data lifecycle management policies to automatically move data between different storage tiers based on its age and access patterns. This ensures that data is stored in the most cost-effective tier.

- Data Compression and Deduplication: Utilize data compression and deduplication techniques to reduce storage space and costs. Compression reduces the size of data files, while deduplication identifies and eliminates redundant data.

- Object Storage: Consider using object storage for storing large amounts of unstructured data, such as images, videos, and backups. Object storage is typically more cost-effective than block storage for these types of workloads.

- Storage Capacity Planning: Regularly monitor storage usage and plan for future capacity needs. Proactively manage storage resources to avoid unnecessary costs.
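A lifecycle policy is essentially a rule that maps data age to a storage tier. The Python sketch below uses hypothetical tier names and cut-offs (30 and 180 days) and keys on time since last access. Many provider lifecycle rules key on object age since creation instead, and archive tiers often carry retrieval fees and minimum storage durations, so real cut-offs should reflect measured access patterns.

```python
from datetime import date
from typing import List, Tuple

# Hypothetical policy, checked from the oldest cut-off down:
# (minimum days since last access, tier name).
POLICY: List[Tuple[int, str]] = [
    (180, "archive"),
    (30, "infrequent-access"),
    (0, "standard"),
]


def storage_tier(last_accessed: date, today: date) -> str:
    """Pick the tier for one object based on days since it was last read."""
    age_days = (today - last_accessed).days
    for min_days, tier in POLICY:
        if age_days >= min_days:
            return tier
    return "standard"   # timestamps in the future: treat as hot data


today = date(2024, 6, 30)
print(storage_tier(date(2024, 6, 20), today))   # standard (10 days)
print(storage_tier(date(2024, 5, 1), today))    # infrequent-access (60 days)
print(storage_tier(date(2023, 6, 1), today))    # archive (395 days)
```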

Optimizing Network Costs

Network costs, including data transfer charges and network bandwidth, can also be significant. Optimizing network costs involves minimizing data transfer, using content delivery networks (CDNs), and selecting cost-effective network configurations.

- Data Transfer Optimization: Minimize data transfer between regions and between the cloud and on-premises environments. This can be achieved by caching data closer to users, using compression, and optimizing data transfer patterns.

- Content Delivery Networks (CDNs): Utilize CDNs to cache content at edge locations, reducing latency and data transfer costs. CDNs distribute content across a global network of servers, providing faster access to users.

- Network Bandwidth Planning: Plan for network bandwidth needs and select the appropriate network configurations. Consider using private network connections for high-volume data transfers to reduce costs.

- Network Monitoring: Implement network monitoring to identify and address network bottlenecks and optimize network performance. Monitor data transfer rates and network usage patterns to identify areas for cost optimization.

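- Virtual Private Cloud (VPC) Design: Design VPCs efficiently to minimize data transfer charges. This includes placing resources that communicate heavily in the same availability zone when availability requirements allow (cross-zone traffic is often billed, but a single zone is also a single point of failure) and using private IP addresses for internal communication.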
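Whether a CDN lowers the bill depends on the cache hit ratio and on the provider's rate card. The Python sketch below compares serving traffic directly from the origin with serving it through a CDN, where only cache misses are fetched from the origin. All per-GB prices and traffic volumes are hypothetical placeholders, not published rates.

```python
def egress_without_cdn(total_gb: float, origin_internet_per_gb: float) -> float:
    """Every byte leaves the origin directly to users."""
    return total_gb * origin_internet_per_gb


def egress_with_cdn(total_gb: float, hit_ratio: float, cdn_per_gb: float,
                    origin_to_cdn_per_gb: float) -> float:
    """Users are served by the CDN; only cache misses are pulled from the origin."""
    miss_gb = total_gb * (1 - hit_ratio)
    return total_gb * cdn_per_gb + miss_gb * origin_to_cdn_per_gb


# Hypothetical month: 10 TB delivered, 90% cache hit ratio.
direct = egress_without_cdn(10_000, origin_internet_per_gb=0.09)
via_cdn = egress_with_cdn(10_000, hit_ratio=0.90, cdn_per_gb=0.06,
                          origin_to_cdn_per_gb=0.02)
print(f"direct ${direct:,.2f} vs CDN ${via_cdn:,.2f}")  # direct $900.00 vs CDN $620.00
```

With a lower hit ratio, or a CDN rate close to the origin rate, the advantage shrinks or reverses, so measure the hit ratio before assuming savings.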

Leveraging Reserved Instances and Spot Instances

Cloud providers offer various purchasing options beyond on-demand instances, including reserved instances and spot instances, to help reduce costs.

- Reserved Instances: Reserved instances provide significant cost savings compared to on-demand instances, in exchange for a commitment to use a specific instance type for a defined period (typically one or three years). They are ideal for workloads with predictable resource requirements, such as databases and application servers. The discount offered by reserved instances can be substantial, often ranging from 30% to 70% compared to on-demand pricing.

- Spot Instances: Spot instances offer even lower prices than reserved instances, but they are subject to availability. Spot instances utilize spare compute capacity and can be reclaimed by the cloud provider with little notice when that capacity is needed (or, if you have set a maximum price, when the Spot price rises above it). Spot instances are suitable for fault-tolerant workloads, such as batch processing jobs, that can handle interruptions.

- Savings Plans: Savings Plans are a flexible pricing model. They offer discounts on compute usage in exchange for a commitment to a consistent amount of usage (measured in dollars per hour) over a one- or three-year period. They allow more flexibility in instance types and sizes than reserved instances (on AWS, Compute Savings Plans apply across instance families, sizes, and regions), which makes them suitable for workloads with changing resource requirements.

Cost Benefit Comparison of Instance Purchasing Options

The following table provides a comparison of the cost benefits associated with different instance purchasing options. This comparison is a generalization, and actual costs will vary depending on the cloud provider, instance type, region, and specific terms of each offering. The table illustrates the potential cost savings and trade-offs associated with each option.

| Purchasing Option | Cost | Flexibility | Commitment | Use Cases |
|---|---|---|---|---|
| On-Demand Instances | Highest | Highest | None | Development, testing, and workloads with unpredictable resource needs. |
| Reserved Instances | Lower than On-Demand | Lower | 1 or 3 years | Stable workloads, predictable resource needs (e.g., databases, application servers). |
| Savings Plans | Lower than On-Demand, similar to Reserved Instances | High | 1 or 3 years | Workloads with variable resource needs, flexibility across instance families. |
| Spot Instances | Lowest | Lowest (subject to interruption) | None (but instance may be terminated) | Fault-tolerant workloads, batch processing, and tasks where interruptions are acceptable. |
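The trade-offs in the table can be made concrete with a small Python cost model. The rate and discounts below are assumptions chosen to fall inside the ranges discussed above. They are not quoted prices. The model captures one key difference. A reservation is billed for every hour of the term whether or not the instance runs, and a Savings Plan commitment behaves the same way. On-demand and spot capacity, by contrast, are billed only for the hours used.

```python
# Hypothetical inputs for illustration only; real prices vary by provider,
# instance type, region, and commitment terms.
ON_DEMAND_RATE = 0.10     # assumed USD per instance-hour
RESERVED_DISCOUNT = 0.40  # assumed, within the 30-70% range cited above
SPOT_DISCOUNT = 0.70      # assumed; spot prices fluctuate
HOURS_PER_MONTH = 730


def monthly_costs(hours_used: float) -> dict[str, float]:
    """Estimate one instance's monthly cost under each purchasing option."""
    return {
        # On-demand: billed only for the hours the instance runs.
        "on_demand": ON_DEMAND_RATE * hours_used,
        # Reserved: the commitment is billed for every hour, used or not.
        "reserved": ON_DEMAND_RATE * (1 - RESERVED_DISCOUNT) * HOURS_PER_MONTH,
        # Spot: billed only for hours used, but the instance may be interrupted.
        "spot": ON_DEMAND_RATE * (1 - SPOT_DISCOUNT) * hours_used,
    }


def reserved_break_even_utilization() -> float:
    """Fraction of the month an instance must run for the reservation to pay off."""
    return 1 - RESERVED_DISCOUNT


for hours in (200, 438, 730):
    print(hours, {option: round(cost, 2) for option, cost in monthly_costs(hours).items()})
print(f"break-even utilization: {reserved_break_even_utilization():.0%}")
```

Under these assumptions, the reservation and on-demand pricing cost the same at 438 hours a month (60% of 730). An instance that runs fewer hours than that is cheaper on demand, and one that runs continuously is cheaper reserved.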

Automation and Infrastructure as Code (IaC) for Right-Sizing

Automating cloud resource right-sizing is crucial for achieving efficiency, scalability, and cost optimization. Infrastructure as Code (IaC) tools enable the programmatic definition and management of cloud infrastructure, including resource sizing, configuration, and deployment. This approach promotes consistency and repeatability and reduces the potential for human error, leading to more effective right-sizing strategies.

Automating Right-Sizing Processes with IaC Tools

IaC tools facilitate automated right-sizing by allowing resources to be defined and managed through code. This code can then be version-controlled, tested, and deployed consistently across different environments.

- Provisioning and Configuration: IaC tools can automatically provision and configure cloud resources based on predefined specifications. This includes selecting instance types, adjusting storage sizes, and configuring network settings. For example, using Terraform, a developer can define the desired state of an EC2 instance, including its size (e.g., `t3.medium`), storage volume, and security group rules.

- Dynamic Resource Adjustment: IaC can define policies that adjust resources based on real-time performance metrics and workload demands. Auto-scaling groups, typically declared through IaC tools, automatically add or remove instances to meet fluctuating traffic loads, keeping utilization and performance close to their targets.

- Automated Deployment and Updates: IaC simplifies the deployment and update processes. When right-sizing adjustments are needed, such as changing the instance type or storage size, the IaC code can be modified, and the changes deployed automatically, minimizing downtime and manual intervention.
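The declarative model behind these tools can be sketched in a few lines of Python. The desired state is data, and a plan is the difference between that data and what is currently deployed. This is a simplified illustration, not how Terraform or CloudFormation are implemented. `InstanceSpec` and `plan` are hypothetical names, and real tools also handle dependencies, provider APIs, and resource replacement rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceSpec:
    """Hypothetical minimal description of one compute resource."""
    instance_type: str
    volume_gb: int


def plan(desired: dict[str, InstanceSpec], actual: dict[str, InstanceSpec]) -> list[str]:
    """List the actions needed to move deployed resources to the declared state."""
    actions = []
    for name, spec in desired.items():
        current = actual.get(name)
        if current is None:
            actions.append(f"create {name} as {spec.instance_type}, {spec.volume_gb} GB")
            continue
        if current.instance_type != spec.instance_type:
            actions.append(f"resize {name}: {current.instance_type} -> {spec.instance_type}")
        if current.volume_gb != spec.volume_gb:
            actions.append(f"change {name} volume: {current.volume_gb} GB -> {spec.volume_gb} GB")
    # Anything deployed but no longer declared is removed.
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(f"destroy {name}")
    return actions


# Right-sizing "web" is a one-line change to the declared state.
actual = {"web": InstanceSpec("t3.large", 50), "old-batch": InstanceSpec("m5.xlarge", 100)}
desired = {"web": InstanceSpec("t3.medium", 50), "api": InstanceSpec("t3.small", 20)}
for action in plan(desired, actual):
    print(action)
```

Because the plan is computed from version-controlled data, the same right-sizing change can be reviewed and then applied identically in every environment.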

Examples of IaC Scripts for Provisioning and Managing Cloud Resources

IaC scripts, such as those written in Terraform or CloudFormation, provide the blueprints for cloud infrastructure. These scripts define the desired state of the infrastructure, and the IaC tool then provisions and manages the resources accordingly.

- Terraform Example (AWS EC2 Instance): A simplified Terraform script for provisioning an AWS EC2 instance.

```hcl
resource "aws_instance" "example" {
  ami           = "ami-0c55b84e545e63665" # Example AMI ID (replace with a valid one)
  instance_type = "t3.medium"

  tags = {
    Name = "example-instance"
  }
}
```

- This script defines an EC2 instance using a specific AMI, instance type (`t3.medium`), and tags.

- CloudFormation Example (AWS Auto Scaling Group): A CloudFormation template that defines an auto-scaling group.

```yaml
Resources:
  MyLaunchTemplate:
    Type: AWS::EC2::LaunchTemplate
    Properties:
      LaunchTemplateData:
        InstanceType: t3.medium
        ImageId: ami-0c55b84e545e63665 # Example AMI ID (replace with a valid one)
  MyAutoScalingGroup:
    Type: AWS::AutoScaling::AutoScalingGroup
    Properties:
      LaunchTemplate:
        LaunchTemplateId: !Ref MyLaunchTemplate
        Version: !GetAtt MyLaunchTemplate.LatestVersionNumber
      # An Auto Scaling group needs AvailabilityZones or VPCZoneIdentifier (subnets);
      # this uses every Availability Zone in the stack's region.
      AvailabilityZones: !GetAZs ""
      MinSize: 1
      MaxSize: 3
```

- This template defines a launch template with an instance type and AMI, and an auto-scaling group that uses the launch template. The group keeps between 1 and 3 instances. To scale within that range based on metrics such as CPU utilization, attach a scaling policy, for example an `AWS::AutoScaling::ScalingPolicy` resource of type `TargetTrackingScaling`.

- Dynamic Right-Sizing Example: Integrating IaC with monitoring tools allows for dynamic adjustments. For example, a script could monitor CPU utilization. If an instance's CPU utilization consistently exceeds 80%, the script could trigger an IaC update (e.g., changing the instance type from `t3.medium` to `t3.large`) to provide more resources. Vertical changes like this are often paired with auto-scaling groups, which handle horizontal scaling (adding or removing instances) automatically.
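The threshold logic in the dynamic right-sizing example can be sketched in Python, under a few assumptions:

- `recommend_size` is a hypothetical helper.
- "Consistently" is read as at least 90% of samples above 80% CPU.
- The matching downsizing rule (90% of samples below 20%) is an added assumption.

The returned size would then be written into the IaC definition, such as the `instance_type` argument in the Terraform example, and applied through the normal deployment pipeline.

```python
# Sizes in the t3 family, ordered smallest to largest.
T3_SIZES = ["t3.nano", "t3.micro", "t3.small", "t3.medium", "t3.large", "t3.xlarge", "t3.2xlarge"]


def recommend_size(current: str, cpu_samples: list[float],
                   high: float = 80.0, low: float = 20.0,
                   sustained_fraction: float = 0.9) -> str:
    """Suggest one size up or down when CPU is consistently high or low.

    "Consistently" means at least `sustained_fraction` of the samples cross
    the threshold; all three thresholds are assumptions to tune per workload.
    """
    if not cpu_samples:
        return current  # no data, no change
    idx = T3_SIZES.index(current)
    n = len(cpu_samples)
    over = sum(1 for s in cpu_samples if s > high) / n
    under = sum(1 for s in cpu_samples if s < low) / n
    if over >= sustained_fraction and idx + 1 < len(T3_SIZES):
        return T3_SIZES[idx + 1]  # step up one size
    if under >= sustained_fraction and idx > 0:
        return T3_SIZES[idx - 1]  # step down one size
    return current


# Hypothetical CPU utilization samples (percent).
busy = [85, 91, 88, 95, 83, 87, 90, 92, 86, 89]
idle = [5, 8, 12, 6, 9, 7, 4, 10, 11, 3]
print(recommend_size("t3.medium", busy), recommend_size("t3.medium", idle))
```

Requiring most samples to cross the threshold, rather than reacting to a single reading, keeps short spikes from triggering a resize.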

Benefits of Automating Right-Sizing to Improve Efficiency and Reduce Manual Effort

Automating right-sizing provides numerous advantages, significantly improving operational efficiency and reducing the burden of manual intervention. These benefits translate into tangible cost savings and enhanced system performance.

- Increased Efficiency: Automation streamlines the right-sizing process, eliminating the need for manual resource allocation and configuration. This saves time and reduces the potential for errors.

- Reduced Manual Effort: IaC scripts automate the deployment and management of cloud resources, minimizing the need for manual intervention. This frees up IT staff to focus on more strategic initiatives.

- Improved Consistency: IaC ensures that resource configurations are consistent across all environments. This reduces the risk of configuration drift and improves the reliability of applications.

- Enhanced Scalability: Automated right-sizing, especially when combined with auto-scaling, allows cloud resources to scale up or down dynamically in response to workload demands. This ensures optimal resource utilization and performance.

- Cost Optimization: Automated right-sizing, including selecting the appropriate instance sizes and automatically scaling resources, helps to reduce cloud costs by ensuring that resources are utilized efficiently and only when needed. For example, if a workload only needs extra resources during peak hours, an auto-scaling group can automatically scale up during these times and scale down when the demand decreases, avoiding the cost of over-provisioning.

- Faster Deployment and Updates: With IaC, changes to resource configurations can be deployed quickly and reliably. This accelerates the deployment of new applications and updates to existing ones.
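The peak-hours example under Cost Optimization can be quantified with a short Python sketch. It compares a fleet sized for the busiest hour with one that follows demand hour by hour. All numbers are assumptions: the demand curve, 100 requests per second per instance, the 2 to 10 instance bounds, and the hourly rate. The model also ignores the delay while new instances launch, which in practice calls for some headroom.

```python
import math

# Hypothetical hourly demand (requests per second) over one 24-hour day.
HOURLY_DEMAND = [120] * 8 + [400] * 4 + [900] * 4 + [400] * 4 + [150] * 4
CAPACITY_PER_INSTANCE = 100    # assumed requests/second one instance can serve
MIN_INSTANCES, MAX_INSTANCES = 2, 10
HOURLY_RATE = 0.0416           # assumed USD per instance-hour


def instances_needed(demand: float) -> int:
    """Instances required for one hour's demand, clamped to the group's bounds."""
    return min(MAX_INSTANCES, max(MIN_INSTANCES, math.ceil(demand / CAPACITY_PER_INSTANCE)))


# Static sizing: run enough instances for the peak hour, all day long.
static_hours = instances_needed(max(HOURLY_DEMAND)) * len(HOURLY_DEMAND)
# Auto-scaling: run only what each hour needs.
scaled_hours = sum(instances_needed(d) for d in HOURLY_DEMAND)

print(f"static: {static_hours} instance-hours, ${static_hours * HOURLY_RATE:.2f}/day")
print(f"auto-scaled: {scaled_hours} instance-hours, ${scaled_hours * HOURLY_RATE:.2f}/day")
```

With these assumed inputs, the static fleet uses 216 instance-hours per day and the auto-scaled fleet uses 92, roughly 57% fewer.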

Case Studies: Real-World Examples of Successful Right-Sizing

Successful cloud resource right-sizing requires strategic planning and execution. Examining real-world case studies provides invaluable insights into the challenges, solutions, and benefits associated with this process. These examples demonstrate how organizations have optimized their cloud infrastructure, leading to significant cost savings and improved performance.

Company A: E-commerce Platform

Company A, a large e-commerce platform, experienced fluctuating traffic patterns throughout the day and year, leading to over-provisioning during off-peak times and performance bottlenecks during peak periods. This resulted in wasted resources and a poor customer experience.

The company undertook a comprehensive right-sizing initiative that included the following steps:

- Analyzing historical traffic data to identify peak and off-peak demand patterns.

- Implementing auto-scaling rules to dynamically adjust compute resources based on real-time demand.

- Optimizing database instance sizes to match actual workload requirements.

- Migrating to more cost-effective storage solutions for infrequently accessed data.

These efforts led to:

- A 35% reduction in monthly cloud infrastructure costs.

- A 20% improvement in website loading times during peak hours.

- Enhanced scalability and resilience to handle sudden traffic spikes.

Company B: Software-as-a-Service (SaaS) Provider

Company B, a SaaS provider, initially provisioned its cloud resources based on estimated peak loads, resulting in significant over-provisioning and high operational costs. Their initial approach lacked granular monitoring and resource allocation strategies.

The company addressed this by:

- Implementing detailed monitoring of CPU utilization, memory usage, and network I/O across all application components.

- Using performance testing tools to benchmark application performance under various load conditions.

- Employing containerization and orchestration tools (e.g., Kubernetes) to efficiently manage and scale application instances.

- Leveraging cloud provider recommendations for instance type selection based on observed workload patterns.

The outcomes included:

- A 40% reduction in cloud spending.

- Improved application performance and responsiveness.

- Increased agility in deploying and scaling new features.

Company C: Financial Services Firm

Company C, a financial services firm, had a complex on-premises infrastructure that they migrated to the cloud. They initially lifted and shifted their existing infrastructure, leading to inefficient resource utilization and increased cloud costs.

Their right-sizing strategy involved:

- Conducting a thorough assessment of all workloads, including their resource requirements and dependencies.

- Refactoring applications to leverage cloud-native services and architectures.

- Implementing serverless computing for event-driven workloads.

- Optimizing database configurations and scaling strategies.

The results were:

- A 50% reduction in infrastructure costs compared to the initial lift-and-shift approach.

- Significant improvements in application performance and scalability.

- Enhanced security and compliance posture.

Key Learnings from the Case Studies:

- Data-Driven Decision Making: Success hinges on detailed analysis of resource utilization and workload patterns.

- Strategic Use of Automation: Automation tools, such as auto-scaling and Infrastructure as Code (IaC), are crucial for dynamic resource management.

- Cloud-Native Architecture: Embracing cloud-native services and architectures leads to improved efficiency and cost savings.

- Continuous Monitoring and Optimization: Right-sizing is an ongoing process that requires continuous monitoring, performance tuning, and adjustments.

End of Discussion

In conclusion, right-sizing cloud resources is not a one-time task but an ongoing process vital for maximizing cloud performance and minimizing costs. By thoroughly assessing your infrastructure, selecting the right migration strategies, and continuously monitoring your resource utilization, you can unlock the full potential of the cloud. Implementing automation, leveraging cloud provider tools, and optimizing costs are key to a successful and efficient cloud migration.

The strategies discussed provide a roadmap for organizations seeking to optimize their cloud environment and achieve their business objectives.

Expert Answers

What are the primary risks of over-provisioning cloud resources?

Over-provisioning leads to unnecessary cloud spending, as you pay for resources you don’t fully utilize. This can significantly increase your monthly cloud bill without providing any tangible performance benefits.

How can I determine if my current on-premises resources are being underutilized?

Utilize monitoring tools to track CPU, memory, and storage usage. Analyze these metrics over time to identify consistent periods of low utilization, indicating underutilized resources. Historical data is crucial for accurate assessment.
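One way to turn this into a repeatable check is sketched below in Python, using only the standard library. It summarizes each metric by its 95th percentile. Unlike the maximum, the 95th percentile is not distorted by one-off spikes. Unlike the mean, it does not let long idle periods mask regular busy ones. The two weeks of sample data and the 40% threshold are assumptions. The same functions apply equally to memory or storage samples.

```python
import statistics


def utilization_summary(samples: list[float]) -> dict[str, float]:
    """Summarize utilization samples (in percent) collected over time."""
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    p95 = statistics.quantiles(samples, n=100, method="inclusive")[94]
    return {"mean": statistics.fmean(samples), "p95": p95, "max": max(samples)}


def is_underutilized(samples: list[float], p95_threshold: float = 40.0) -> bool:
    """Flag a resource whose 95th-percentile utilization stays below the threshold."""
    return utilization_summary(samples)["p95"] < p95_threshold


# Hypothetical two weeks of hourly CPU readings: quiet nights, modest days,
# and one brief spike that would mislead a max-based assessment.
cpu = [8.0 if hour % 24 < 8 else 25.0 for hour in range(14 * 24)]
cpu[200] = 70.0

summary = utilization_summary(cpu)
print({k: round(v, 1) for k, v in summary.items()}, is_underutilized(cpu))
```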

What are the key differences between rehosting, replatforming, and refactoring migration strategies concerning right-sizing?

Rehosting (lift and shift) often requires right-sizing to match on-premises resources to cloud equivalents. Replatforming involves some code modifications, allowing for better optimization. Refactoring, the most complex, offers the greatest opportunity for right-sizing by redesigning applications for the cloud.

How frequently should I review and adjust my cloud resource allocation after migration?

Regular monitoring is essential. Aim to review resource allocation at least monthly, or more frequently if you experience significant changes in workload demand. Implement automated alerts to detect anomalies and trigger adjustments.

What are the benefits of using Infrastructure as Code (IaC) for right-sizing?

IaC enables automation of resource provisioning and scaling, ensuring consistency and repeatability. It allows for easier adjustments to resource allocation, version control, and simplified management, ultimately improving efficiency and reducing human error.