Performing a Proof of Concept (POC) for migration is a critical step in assessing the feasibility and effectiveness of transitioning systems to a new environment. This process allows for a controlled evaluation, minimizing risks and providing valuable insights before a full-scale migration. A well-executed POC can identify potential challenges, optimize the migration strategy, and ensure a smooth transition, saving time and resources and preventing costly errors.

This guide delves into the essential steps involved in conducting a successful migration POC. From defining the scope and objectives to selecting the appropriate tools and methods, each stage is meticulously examined. Furthermore, the emphasis is on rigorous testing, validation, and comprehensive documentation, enabling informed decision-making and a robust migration plan. The presented information is designed to be a comprehensive and informative resource for anyone embarking on a migration project.

Define the Scope of the POC

A crucial element in executing a successful Proof of Concept (POC) for a migration project is establishing a well-defined scope. This scope serves as the foundational document, outlining the specific objectives, boundaries, and deliverables of the POC. A clear scope prevents scope creep, manages expectations, and ensures that the POC remains focused on its intended goals, ultimately leading to more accurate assessments and informed decision-making regarding the full migration.

Importance of Defining the Scope

Defining the scope meticulously is paramount for the POC’s efficacy. A poorly defined scope can lead to several detrimental outcomes, including wasted resources, inaccurate evaluations, and ultimately, a failed migration project. A well-defined scope provides a clear understanding of what the POC will and will not address. This understanding ensures that the effort and resources invested are targeted and efficient.

It also serves as a reference point throughout the POC, allowing stakeholders to evaluate progress, identify deviations, and make necessary adjustments. A clearly articulated scope facilitates communication among all involved parties, including project managers, technical teams, and business stakeholders, fostering a shared understanding of the POC’s objectives and success criteria. This shared understanding minimizes misunderstandings and ensures that everyone is working towards the same goals.

Checklist for Scope Definition

A comprehensive scope definition should encompass several key elements. These elements, when meticulously documented, provide a solid framework for the POC and guide its execution.

- Objectives: Clearly state the specific goals of the POC. What questions are you trying to answer? What are you trying to prove or disprove? Objectives should be SMART (Specific, Measurable, Achievable, Relevant, Time-bound). For example, “Validate the feasibility of migrating 10% of the application’s users to a cloud-based platform within two weeks, with a maximum downtime of 1 hour.”

- In-Scope Components: Specify the systems, applications, data, and processes that are included within the POC. Be precise. For example, “The POC will focus on migrating the customer database, including user authentication and profile data.”

- Out-of-Scope Components: Clearly define what is excluded from the POC. This is just as important as defining what is included. For example, “The POC will not include the migration of legacy reporting systems or the integration with third-party payment gateways.”

- Success Criteria: Define the metrics that will be used to determine the success of the POC. These should be measurable and directly related to the objectives. Examples include performance benchmarks (e.g., transaction processing time), cost savings, security posture, and user experience.

- Migration Approach: Outline the planned approach for the migration. This includes the migration strategy (e.g., lift-and-shift, re-platforming, re-architecting), the tools and technologies to be used, and the high-level migration steps.

- Timeline: Establish a realistic timeline for the POC, including start and end dates, and key milestones. A Gantt chart or similar visual representation can be helpful.

- Resources: Identify the resources required for the POC, including personnel, infrastructure, software, and budget.

- Assumptions: Document any assumptions made during the scope definition. These are factors that are believed to be true but are not definitively confirmed. This transparency helps manage risks. For example, “Assumption: Network bandwidth will be sufficient for data transfer during the migration.”

- Risks and Mitigation: Identify potential risks that could impact the POC and outline mitigation strategies. This proactive approach helps minimize the impact of unforeseen challenges. For example, “Risk: Data corruption during migration. Mitigation: Implement data validation and backup procedures.”

- Deliverables: Define the expected outputs of the POC, such as reports, documentation, and presentations.

Examples of Well-Defined and Poorly-Defined Scopes

The contrast between a well-defined and a poorly defined scope is stark, significantly influencing the POC’s success. Consider these examples.

Well-Defined Scope:

- Objective: Evaluate the performance of migrating the existing on-premise web application to AWS using a lift-and-shift approach, focusing on user experience and cost optimization.

- In-Scope: The web application’s core functionality, including user authentication, data storage (relational database), and reporting.

- Out-of-Scope: Integration with external APIs, legacy reporting systems, and the mobile application.

- Success Criteria: Maintain existing application performance (measured by average response time and error rates), achieve a 15% reduction in infrastructure costs, and minimize user downtime during the migration.

- Timeline: 4 weeks.

This scope provides a clear understanding of the POC’s objectives, boundaries, and success criteria.

Poorly-Defined Scope:

- Objective: Test the migration to the cloud.

- In-Scope: The application.

- Out-of-Scope: Everything else.

- Success Criteria: See if it works.

- Timeline: As needed.

This scope is vague and lacks crucial details, leaving significant room for misinterpretation and potential failure. The lack of specifics makes it difficult to measure success, manage resources, and ensure the POC stays on track.
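A scope can also be recorded in a structured form so that missing items are caught before the POC starts. The following is a minimal Python sketch rather than a prescribed format: the `PocScope` class, its field names, and the `missing_fields` helper are hypothetical, and the values are abbreviated from the two example scopes above.

```python
from dataclasses import dataclass, field, fields
from typing import List, Optional


@dataclass
class PocScope:
    """Hypothetical container for a few of the scope checklist items."""
    objective: str = ""
    in_scope: List[str] = field(default_factory=list)
    out_of_scope: List[str] = field(default_factory=list)
    success_criteria: List[str] = field(default_factory=list)
    timeline_weeks: Optional[int] = None  # None when no fixed duration is given


def missing_fields(scope: PocScope) -> List[str]:
    """Return the names of checklist items that are empty or unset."""
    return [f.name for f in fields(scope) if not getattr(scope, f.name)]


well_defined = PocScope(
    objective="Evaluate lift-and-shift of the on-premise web application to AWS",
    in_scope=["user authentication", "relational database", "reporting"],
    out_of_scope=["external APIs", "legacy reporting systems", "mobile application"],
    success_criteria=["maintain response time and error rates",
                      "15% lower infrastructure cost"],
    timeline_weeks=4,
)
poorly_defined = PocScope(
    objective="Test the migration to the cloud.",
    in_scope=["The application."],
    out_of_scope=["Everything else."],
    success_criteria=["See if it works."],
    # "As needed" is not a duration, so timeline_weeks stays None.
)

print(missing_fields(well_defined))
print(missing_fields(poorly_defined))
```

A completeness check like this only catches absent items. It will not flag an entry such as “See if it works”, which is present but not measurable, so a human review of the wording is still needed.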

Identify Migration Objectives

Establishing clear and measurable objectives is paramount when designing a Proof of Concept (POC) for a migration project. These objectives provide a framework for evaluating the success of the POC, ensuring that the migration strategy aligns with the overall business goals. A well-defined set of objectives allows for a systematic assessment of risks, challenges, and potential benefits, ultimately informing the decision to proceed with a full-scale migration.

Critical Objectives of a Migration POC

The primary aim of a migration POC is to validate the technical feasibility and business viability of a migration strategy. This encompasses several critical objectives that must be addressed. The following points outline these core areas.

- Technical Validation: Confirming that the proposed migration architecture can successfully transfer data, applications, and infrastructure components from the source environment to the target environment. This includes verifying compatibility, performance, and functionality.

- Performance Assessment: Evaluating the performance characteristics of the migrated system, including response times, throughput, and resource utilization. This ensures that the target environment can handle the workload and meet the required service levels.

- Risk Mitigation: Identifying and mitigating potential risks associated with the migration process, such as data loss, downtime, security vulnerabilities, and compatibility issues. The POC should provide insights into these risks and allow for the development of mitigation strategies.

- Cost Estimation: Providing a realistic estimate of the total cost of the migration, including hardware, software, labor, and ongoing operational expenses. This helps in making informed decisions about the financial viability of the migration.

- Process Optimization: Refining the migration process to improve efficiency, reduce downtime, and minimize the risk of errors. This includes streamlining data transfer, application deployment, and testing procedures.

Common Migration Goals

Migration projects often target specific business goals to justify the investment and effort. These goals should be clearly defined and measurable within the POC. The following list highlights common migration goals and provides examples.

- Cost Reduction: Migrating to a more cost-effective infrastructure, such as cloud-based services, to reduce operational expenses.

- Measurement: Track infrastructure costs (e.g., compute, storage, network) before and after migration within the POC. Analyze pricing models of both environments and compare the total cost of ownership (TCO).

- Improved Performance: Enhancing application performance by migrating to a platform with better resource allocation, scalability, and availability.

- Measurement: Measure key performance indicators (KPIs) such as transaction processing time, latency, and throughput. Utilize monitoring tools to compare performance metrics between the source and target environments. As an illustration, consider a financial institution migrating its trading platform to a cloud environment. Before the migration, the average transaction processing time was 500 milliseconds. After the migration, the POC demonstrated a reduction to 250 milliseconds, a 50% reduction in processing time.

- Enhanced Security: Strengthening security posture by migrating to a platform with advanced security features and compliance capabilities.

- Measurement: Conduct vulnerability assessments and penetration testing on both environments. Compare the security configurations, encryption methods, and access controls. Verify compliance with relevant industry regulations. For example, a healthcare provider migrates patient data to a HIPAA-compliant cloud environment. The POC demonstrates that the new environment offers robust encryption at rest and in transit, along with automated security patching and compliance reporting, meeting the necessary regulatory requirements.

- Increased Scalability: Providing the ability to scale resources up or down rapidly to meet fluctuating demands.

- Measurement: Simulate load testing to assess the system’s ability to handle increased traffic and data volumes. Monitor resource utilization during peak loads and evaluate the responsiveness of the system. For example, a retail company migrates its e-commerce platform to a cloud environment and conducts a POC to simulate a surge in traffic during a holiday season. The POC shows that the cloud platform can automatically scale compute resources to handle a 5x increase in concurrent users without impacting performance.

- Improved Disaster Recovery: Enhancing business continuity and disaster recovery capabilities by migrating to a platform with robust backup, recovery, and failover mechanisms.

- Measurement: Test the recovery time objective (RTO) and recovery point objective (RPO) of the target environment. Simulate disaster scenarios and verify the ability to restore data and applications quickly. For instance, a manufacturing company migrates its critical production systems to a geographically diverse cloud environment. The POC includes a simulated failure of the primary data center, demonstrating the ability to failover to a secondary data center within 15 minutes with minimal data loss, thus validating a strong disaster recovery strategy.
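The before-and-after comparisons in these measurements reduce to a relative change against a baseline. A minimal Python sketch, using the figures from the trading-platform example (the function name `percent_change` is illustrative, not part of any tool):

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, as a percentage.

    Negative values mean the metric decreased.
    """
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100.0


# Average transaction processing time from the example: 500 ms -> 250 ms.
change = percent_change(500, 250)
print(f"Processing time changed by {change:.0f}%")  # prints "Processing time changed by -50%"
```

Whether a negative change is good depends on the metric: a drop in processing time or cost is an improvement, while a drop in throughput is a regression.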

Measuring Success of Each Objective

To accurately assess the success of the POC, specific metrics and measurement techniques must be employed for each objective. This section outlines the key metrics and methods.

- Technical Validation:

- Metrics: Successful data transfer, application compatibility, functional equivalence.

- Methods: Data integrity checks, application testing (functional, performance, security), system integration testing. The POC includes the migration of a database from an on-premises environment to a cloud database service. After migration, a data integrity check verifies that all data is transferred correctly, and functional testing confirms that all application features work as expected.

- Performance Assessment:

- Metrics: Response time, throughput (transactions per second), resource utilization (CPU, memory, disk I/O).

- Methods: Performance testing, load testing, monitoring tools (e.g., Prometheus, Grafana), and application performance monitoring (APM) tools. A typical POC involves migrating a web application to a new server environment. Load tests are conducted to measure the average response time under different user loads. If the response time increases by more than 20% under peak load, the performance objective may not be met.

- Risk Mitigation:

- Metrics: Number of identified risks, severity of risks, effectiveness of mitigation strategies.

- Methods: Risk assessments, security audits, vulnerability scanning, penetration testing, data loss prevention (DLP) testing. The POC includes a security audit and penetration testing of a migrated application. The results identify several vulnerabilities, such as SQL injection flaws. The POC then demonstrates the implementation of security patches and other mitigation strategies, such as Web Application Firewalls (WAF), to address these vulnerabilities, reducing the risk of exploitation.

- Cost Estimation:

- Metrics: Infrastructure costs, labor costs, software licensing costs, and total cost of ownership (TCO).

- Methods: Cost analysis, vendor quotes, resource utilization analysis. The POC involves migrating a set of virtual machines to a cloud environment. The POC tracks the cost of compute, storage, and network resources used by the migrated VMs over a specific period (e.g., one month). By comparing these costs with the costs in the on-premises environment, the POC provides a clear estimate of the potential cost savings or increases.

- Process Optimization:

- Metrics: Migration time, downtime, number of errors, and efficiency gains.

- Methods: Process mapping, workflow analysis, and time tracking. The POC focuses on streamlining the data migration process. Before the POC, the data migration took 24 hours and involved several manual steps. During the POC, the migration process is automated, reducing the time to 8 hours and minimizing the number of errors, demonstrating a significant improvement in efficiency.
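Once measurements are collected, thresholds such as the 20% response-time limit from the performance example can be checked mechanically. The sketch below is illustrative only: the function name and the sample response times are hypothetical, and the 20% tolerance is simply the figure used in the web-application example.

```python
from statistics import mean


def check_response_time(baseline_ms, peak_ms, max_increase_pct=20.0):
    """Compare mean peak-load response time against the mean baseline.

    Returns (objective_met, increase_pct), where objective_met is True when
    the increase does not exceed max_increase_pct.
    """
    baseline = mean(baseline_ms)
    peak = mean(peak_ms)
    increase_pct = (peak - baseline) / baseline * 100.0
    return increase_pct <= max_increase_pct, increase_pct


# Hypothetical samples in milliseconds, not real measurements.
baseline_samples = [200, 210, 190, 200]   # mean 200 ms
peak_samples = [220, 230, 225, 225]       # mean 225 ms

met, increase = check_response_time(baseline_samples, peak_samples)
print(f"increase={increase:.1f}% objective_met={met}")
```

Comparing means is the simplest choice. Percentiles (for example the 95th) are often a better fit for response-time objectives, since a mean can hide a slow tail.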

Select the Target Environment

The selection of the target environment is a critical step in a proof of concept (POC) for migration. The choice directly impacts the feasibility, cost, and overall success of the POC. Careful consideration of various factors is necessary to ensure the chosen environment aligns with the migration objectives and provides a realistic representation of the final migrated state. This decision significantly influences the resources required, the performance observed, and the insights gained during the POC phase.

Factors to Consider When Choosing the Target Environment for the POC

The selection of the target environment is influenced by several critical factors. These factors must be thoroughly evaluated to determine the most suitable platform for the POC.

- Compatibility with Source Environment: The target environment must be compatible with the applications, data formats, and infrastructure components of the source environment. Incompatibility can lead to significant rework, delays, and inaccurate POC results. For example, migrating a legacy application reliant on specific operating system versions or database types necessitates a target environment that supports these dependencies.

- Scalability and Performance: The target environment’s ability to scale and its performance characteristics should align with the anticipated needs of the migrated workloads. If the production environment requires high availability and scalability, the POC environment should simulate these capabilities. This might involve selecting a cloud provider with auto-scaling features or configuring on-premise infrastructure with redundancy.

- Security Requirements: The target environment must meet the organization’s security requirements, including data protection, access control, and compliance regulations. The POC should evaluate the security posture of the target environment and ensure it aligns with the organization’s security policies. For instance, if the organization must comply with HIPAA regulations, the target environment must offer the necessary security controls and certifications.

- Cost Considerations: The cost of the target environment, including infrastructure, services, and operational expenses, should be a significant factor. A cost analysis should be performed to compare different environments and identify the most cost-effective option for the POC. Cloud environments often offer pay-as-you-go pricing, which can be beneficial for a POC, while on-premise infrastructure requires upfront capital expenditure and ongoing maintenance costs.

- Operational Complexity: The operational complexity of the target environment should be considered, including the skills required to manage and maintain the infrastructure. Some environments, such as managed cloud services, offer simpler management compared to self-managed on-premise infrastructure. This can impact the resources required to conduct and evaluate the POC.

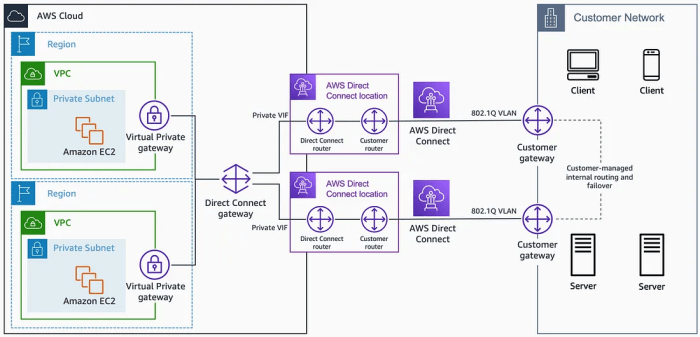

- Data Transfer Capabilities: The ability to efficiently transfer data from the source to the target environment is crucial. The target environment should support the necessary data transfer methods, such as network bandwidth and data migration tools. For example, migrating large datasets to a cloud environment may require using specialized data transfer services to minimize downtime and costs.

- Vendor Lock-in: Consider the potential for vendor lock-in when choosing a target environment, particularly when selecting cloud providers. Assess the ease of migrating away from the target environment if necessary. Evaluate the portability of applications and data to other platforms.

Comparison Table of Target Environments

A comparison table facilitates the evaluation of different target environments. The table provides a structured overview, enabling a direct comparison of key features, advantages, and disadvantages.

| Feature | Cloud Provider (e.g., AWS, Azure, GCP) | On-Premise Infrastructure | Hybrid Cloud |
|---|---|---|---|
| Infrastructure Management | Managed by provider. Reduced operational overhead. | Managed internally. Requires dedicated IT staff and resources. | Combination of cloud and on-premise. Requires careful orchestration. |
| Scalability | Highly scalable with auto-scaling capabilities. | Limited scalability based on hardware capacity. Requires capacity planning. | Scalability depends on the components in each environment. |
| Cost Model | Pay-as-you-go, variable costs. | Fixed capital expenditure, plus ongoing operational costs. | Combination of cloud and on-premise cost models. |
| Security | Shared responsibility model. Provider manages infrastructure security; customer manages application security. | Full responsibility for security. Requires robust security practices and tools. | Security posture depends on the security practices in both environments. |
| Performance | Performance varies based on chosen services and configurations. | Performance depends on hardware specifications and resource allocation. | Performance is determined by the specific workload and environment. |
| Data Transfer | Data transfer services available. Network bandwidth is a factor. | Data transfer via network or physical media. | Data transfer between environments can be complex. |
| Compliance | Compliance certifications available. | Requires meeting compliance requirements internally. | Compliance depends on the specific environment and workload. |

Impact of Environment Selection on POC Outcomes

The selection of the target environment has a direct impact on the outcomes of the POC. The environment’s characteristics influence the accuracy, reliability, and overall value of the POC results.

- Realistic Representation: A well-chosen environment provides a more realistic representation of the final migrated state. If the target environment significantly differs from the planned production environment, the POC may not accurately reflect the challenges and benefits of the migration. For example, if the production environment will use a specific database technology, the POC should also use that technology to ensure compatibility and performance testing.

- Performance Metrics: The target environment affects the performance metrics gathered during the POC. Factors such as network latency, storage performance, and processing power influence the observed application performance. Inaccurate performance data can lead to incorrect sizing and resource allocation decisions for the production environment.

- Cost Estimates: The cost of the target environment directly impacts the accuracy of the cost estimates for the migration. Using a cost-effective environment for the POC can help ensure the overall project remains within budget. If the POC uses a cloud environment with pay-as-you-go pricing, the actual cost of running the POC can provide a baseline for estimating the cost of running the migrated application in the production environment.

- Risk Assessment: The target environment’s security posture and operational complexity influence the risk assessment performed during the POC. Choosing a target environment with robust security features can help mitigate security risks, while a complex environment may increase the operational risks.

- Learning and Knowledge Transfer: The POC environment can also serve as a learning platform for the migration team. Working with the target environment allows the team to gain experience with new technologies, tools, and processes. This knowledge transfer is crucial for successful migration. For example, if the organization is migrating to a cloud environment, the POC allows the team to learn about cloud services, configuration management, and monitoring tools.

Choose the Data for Migration

The selection of appropriate data for a Proof of Concept (POC) migration is a critical determinant of its success. This stage directly impacts the accuracy of the POC’s findings and the overall feasibility assessment of the migration strategy. A well-defined data selection process ensures that the POC effectively simulates the target environment and identifies potential challenges before a full-scale migration.

The goal is to choose a data subset that is representative of the entire dataset, reflecting its volume, complexity, and characteristics, allowing for realistic testing and validation.

Representative Data Selection Process

The process of selecting representative data for the POC involves several key steps. Initially, understanding the characteristics of the entire dataset is crucial. This involves analyzing data types, volumes, access patterns, and dependencies. Next, define the criteria for data selection, which should align with the migration objectives and the specific aspects being tested. These criteria might include data volume, data complexity (e.g., the presence of various data types, relationships, and formats), and data diversity (e.g., representing different user groups, applications, or business processes).

The chosen data subset must accurately reflect the characteristics of the production environment. Finally, validate the selected data to ensure its representativeness. This validation can involve comparing key metrics and statistics between the selected subset and the original dataset.
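One simple way to perform this validation is to compare the share of each category in the subset against its share in the full dataset. The sketch below assumes records are dictionaries with a hypothetical `region` field; the function names and the sample data are made up for illustration.

```python
from collections import Counter


def category_shares(records, key):
    """Fraction of records per category value."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no records to analyze")
    return {category: n / total for category, n in counts.items()}


def max_share_gap(full, subset, key):
    """Largest absolute difference in category share between full and subset."""
    full_shares = category_shares(full, key)
    subset_shares = category_shares(subset, key)
    categories = set(full_shares) | set(subset_shares)
    return max(
        abs(full_shares.get(c, 0.0) - subset_shares.get(c, 0.0))
        for c in categories
    )


# Hypothetical dataset: 60% EU, 30% US, 10% APAC.
full = [{"region": "EU"}] * 60 + [{"region": "US"}] * 30 + [{"region": "APAC"}] * 10
balanced = [{"region": "EU"}] * 6 + [{"region": "US"}] * 3 + [{"region": "APAC"}] * 1
skewed = [{"region": "EU"}] * 10

print(max_share_gap(full, balanced, "region"))
print(max_share_gap(full, skewed, "region"))
```

A small gap suggests the subset mirrors the category mix of the original. The acceptable tolerance is a project decision, and the same comparison can be repeated for other fields such as data type or record size.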

Data Selection Methodologies

Several methodologies can be employed for data selection, each with its advantages and disadvantages. The choice of methodology depends on the specific requirements of the POC and the characteristics of the data.

- Full Dataset Migration: In some cases, migrating the entire dataset might be feasible, especially for smaller datasets or when the objective is to test the end-to-end migration process. This approach provides the most comprehensive test environment, but it can be time-consuming and resource-intensive. It’s appropriate for verifying the migration of all data and assessing performance across the full scope.

- Sampled Data Migration: This involves selecting a representative sample of the data.

- Random Sampling: Involves selecting data points randomly from the entire dataset. This approach is simple to implement and provides a statistically representative sample if the dataset is homogeneous.

- Stratified Sampling: The dataset is divided into strata (subgroups) based on specific characteristics (e.g., data types, business units), and a random sample is taken from each stratum. This ensures representation from all key data categories, even if some categories are smaller than others.

- Cluster Sampling: The dataset is divided into clusters (groups of data points), and a random selection of clusters is chosen for migration. This is useful when data is naturally grouped.

- Filtered Data Migration: This involves selecting data based on specific criteria or filters. For example, selecting data related to a particular business unit or a specific time period. This is useful when testing a specific aspect of the migration, such as the performance of a particular application or a specific data type.
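To make the difference between random and stratified sampling concrete, here is a minimal Python sketch using only the standard library. The `stratified_sample` function, the `business_unit` field, and the sample records are hypothetical; a real POC would typically sample inside the database or with a data tool rather than in memory.

```python
import random
from collections import defaultdict


def stratified_sample(records, key, fraction, seed=0):
    """Draw the same fraction from every stratum, keeping at least one record each."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    rng = random.Random(seed)  # fixed seed so the selection is repeatable
    strata = defaultdict(list)
    for record in records:
        strata[record[key]].append(record)
    sample = []
    for group in strata.values():
        k = max(1, round(len(group) * fraction))
        sample.extend(rng.sample(group, k))
    return sample


# Hypothetical dataset: one large and one small business unit.
records = (
    [{"id": i, "business_unit": "retail"} for i in range(90)]
    + [{"id": i, "business_unit": "wholesale"} for i in range(90, 100)]
)

sample = stratified_sample(records, "business_unit", fraction=0.1)
units = sorted(r["business_unit"] for r in sample)
print(len(sample), units.count("retail"), units.count("wholesale"))
```

With a 10% fraction, the stratified draw always includes the small "wholesale" group. A plain `random.sample(records, 10)` over the whole list could miss it entirely, which is the weakness of simple random sampling on non-homogeneous data.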

Data Volume and Complexity Considerations

The volume and complexity of the data significantly impact the data selection process and the overall POC.

- Data Volume: The volume of data directly influences the time and resources required for the POC. For instance, migrating terabytes of data requires more time and resources than migrating gigabytes. It is essential to consider the storage capacity of the target environment and the bandwidth available for data transfer. In cases of large datasets, techniques like data compression and incremental migration might be considered.

- Data Complexity: Data complexity refers to the structure, relationships, and variety of data types within the dataset. Complex data often involves intricate relationships between different data elements. For example, data with multiple tables, foreign key constraints, and complex queries represents a higher degree of complexity. This complexity can impact the migration process. The chosen data subset should reflect the complexity of the original dataset. This means including various data types, relationships, and formats.
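The effect of data volume on transfer time can be estimated with simple arithmetic before any data moves. The sketch below is a rough planning aid under stated assumptions: decimal units (1 GB = 8,000 megabits), a constant link speed, and an `efficiency` factor of 0.8 that is a placeholder for protocol overhead and contention, not a measured value.

```python
def estimate_transfer_hours(data_gb: float, bandwidth_mbps: float,
                            efficiency: float = 0.8) -> float:
    """Estimate hours needed to move `data_gb` gigabytes over a link.

    bandwidth_mbps: nominal link speed in megabits per second.
    efficiency: assumed usable fraction of the nominal bandwidth (0 < e <= 1).
    """
    if data_gb < 0 or bandwidth_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid input")
    megabits = data_gb * 1000 * 8  # GB -> MB -> megabits (decimal units)
    seconds = megabits / (bandwidth_mbps * efficiency)
    return seconds / 3600


# Gigabytes versus terabytes on the same hypothetical 1 Gbps link.
for size_gb in (10, 1000, 10000):
    hours = estimate_transfer_hours(size_gb, bandwidth_mbps=1000)
    print(f"{size_gb:>6} GB: {hours:.2f} h")
```

Real transfers also depend on source read speed, target write speed, and any validation passes, so an estimate like this is best treated as a lower bound to be checked against the POC’s measured throughput.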

Select Migration Tools and Methods

The selection of appropriate migration tools and methods is a critical juncture in the Proof of Concept (POC) phase. The choices made here directly impact the success of the migration, influencing factors such as downtime, data integrity, and the overall effort required. A thorough evaluation process, coupled with a clear understanding of available options, is essential for a successful POC.

Evaluating and Selecting Migration Tools

The process of selecting migration tools requires a structured evaluation based on several key criteria. This evaluation should be objective, comparing the features and capabilities of different tools against the specific requirements of the POC. The selection process should consider factors such as the source and target environments, the volume and type of data, the desired level of automation, and the available budget. To evaluate and select migration tools, the following aspects should be considered:

- Compatibility: Ensure the tool supports both the source and target environments, including operating systems, databases, and application platforms. The tool should be compatible with the versions of the software in use.

- Data Migration Capabilities: Assess the tool’s ability to handle the specific data types and structures involved in the migration. Evaluate its support for different data formats, schemas, and any specific data transformations required.

- Performance: Consider the tool’s migration speed, including the data transfer rate and the time required for data validation. Performance is especially important for large datasets or time-sensitive migrations.

- Automation: Evaluate the level of automation the tool offers, including automated data mapping, pre-migration checks, and post-migration validation. Automation can significantly reduce manual effort and the risk of errors.

- Data Integrity and Security: Assess the tool’s capabilities for ensuring data integrity during migration, including data validation and error handling. Also, evaluate the security features, such as data encryption and access controls.

- Monitoring and Reporting: Examine the tool’s monitoring and reporting features, including the ability to track progress, identify issues, and generate detailed reports. This is essential for understanding the migration process and identifying any bottlenecks.

- Cost: Consider the tool’s licensing costs, including any ongoing maintenance fees. The cost should be weighed against the tool’s features and benefits. Consider if the tool is open-source, free, or requires a paid license.

- Support and Documentation: Evaluate the availability of vendor support, including documentation, training, and technical assistance. Comprehensive documentation and responsive support are crucial for resolving any issues during the migration.
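One way to keep this comparison objective is a weighted scoring matrix, in which each tool is rated per criterion and the ratings are combined using agreed weights. This is one possible technique, not something the criteria above prescribe. In the sketch below, the weights, the 1 to 5 ratings, and the names "Tool A" and "Tool B" are all hypothetical placeholders, and only a subset of the criteria is shown.

```python
# Hypothetical weights for a subset of the criteria; they should sum to 1.
WEIGHTS = {
    "compatibility": 0.3,
    "performance": 0.2,
    "automation": 0.2,
    "security": 0.2,
    "cost": 0.1,
}


def weighted_score(ratings, weights=WEIGHTS):
    """Combine per-criterion ratings (e.g. 1-5) into one weighted score."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(weights[c] * ratings[c] for c in weights)


# Placeholder ratings, not an assessment of any real product.
candidates = {
    "Tool A": {"compatibility": 5, "performance": 3, "automation": 4, "security": 4, "cost": 2},
    "Tool B": {"compatibility": 4, "performance": 4, "automation": 3, "security": 5, "cost": 4},
}

ranking = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
for name in ranking:
    print(f"{name}: {weighted_score(candidates[name]):.2f}")
```

The weights encode the POC’s priorities, so they are worth agreeing on with stakeholders before any tool is rated; otherwise the scoring can be tuned after the fact to favor a preferred tool.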

Common Migration Methods

Several migration methods are commonly employed, each with its own set of advantages and disadvantages. The selection of the appropriate method depends on the specific requirements of the migration, including the desired level of change, the acceptable downtime, and the complexity of the application and data. Here are common migration methods:

- Lift-and-Shift (Rehosting): This method involves migrating applications and data to a new environment with minimal changes. It’s a relatively quick and straightforward approach, suitable for scenarios where the application is largely compatible with the target environment. The focus is on moving the existing infrastructure to a new location.

- Re-platforming: Re-platforming involves making some modifications to the application to run it on a different platform, while still preserving its core functionality. This might include changing the operating system or database. The aim is to optimize the application for the new environment without major architectural changes.

- Re-factoring: Re-factoring involves making more significant changes to the application’s code to improve its performance, scalability, or maintainability. This method often involves rewriting parts of the application while preserving its functionality. It is often done to take advantage of new features or capabilities in the target environment.

- Re-architecting: This method involves a complete redesign of the application, often to take advantage of cloud-native services and architectures. It’s the most comprehensive approach, suitable for scenarios where the application needs significant changes to meet new requirements or leverage the benefits of the target environment.

- Rebuilding: Rebuilding involves rewriting the application from scratch, often to take advantage of modern technologies and architectures. This method is used when the existing application is outdated or difficult to migrate. It is the most time-consuming and resource-intensive approach.

- Replace: This method involves replacing the existing application with a new, off-the-shelf solution or a custom-built application that provides similar functionality. This is often used when the existing application is no longer supported or no longer meets the organization’s needs.

Demonstrating a Specific Migration Tool within the POC

To demonstrate a specific migration tool within the POC, consider migrating a small, on-premises database to a cloud-based database using AWS Database Migration Service (DMS). The following steps outline the demonstration:

- Setup the Source and Target Databases: Create a sample on-premises database (e.g., MySQL) and set up a target database in the cloud (e.g., Amazon RDS for MySQL).

- Configure AWS DMS: Create an AWS DMS replication instance, which is the server that will perform the migration.

- Create Endpoints: Configure endpoints for both the source and target databases, specifying connection details such as the database server address, port, username, and password.

- Create a Database Migration Task: Define a migration task, specifying the source and target endpoints, the migration type (e.g., full load and CDC – Change Data Capture), and any necessary table mappings or transformations.

- Run the Migration Task: Start the migration task, which will begin transferring data from the source database to the target database.

- Monitor the Migration: Use the AWS DMS console to monitor the progress of the migration, including the number of tables migrated, the amount of data transferred, and any errors encountered.

- Validate the Data: After the migration is complete, validate the data in the target database to ensure that it matches the data in the source database. This can be done by comparing the number of rows, running checksums, or performing other data validation checks.

This demonstration illustrates how to use a specific tool, AWS DMS, to perform a database migration within the POC. The choice of AWS DMS allows for a clear, demonstrable example that is relatively simple to set up and execute. The process involves configuring endpoints, creating a migration task, running the task, monitoring progress, and validating the data. This allows for an evaluation of the tool’s capabilities and provides a tangible demonstration of its functionality.
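As a hedged sketch of the task-definition step, the snippet below builds the kind of table-mapping document that a DMS task accepts as JSON, using one selection rule per table. The schema name `poc_db`, the table names, and the helper `build_table_mappings` are assumptions made for illustration; they do not come from a real environment.

```python
import json


def build_table_mappings(schema: str, tables: list[str]) -> str:
    """Return a table-mapping JSON string with one 'include' selection rule per table."""
    rules = []
    for index, table in enumerate(tables, start=1):
        rules.append(
            {
                "rule-type": "selection",
                "rule-id": str(index),
                "rule-name": str(index),
                "object-locator": {"schema-name": schema, "table-name": table},
                "rule-action": "include",
            }
        )
    return json.dumps({"rules": rules}, indent=2)


# Hypothetical POC scope: two tables from a sample schema.
mappings = build_table_mappings("poc_db", ["customers", "orders"])
print(mappings)
```

Keeping the POC scope in a small generated document like this makes it easy to record exactly which tables were migrated and to widen the selection later for the full-scale run.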

Design the Migration Process

Designing the migration process is a critical step in the Proof of Concept (POC). A well-defined process ensures a controlled and measurable migration, minimizing risks and providing valuable insights for the full-scale migration. This stage translates the previously defined objectives, scope, and tool selections into a concrete plan of action. It involves meticulous planning, including identifying dependencies, defining the order of operations, and establishing rollback strategies.

Steps in Designing the Migration Process

The design phase necessitates a systematic approach. The following steps are crucial in establishing a robust migration process for the POC.

- Assess the Source Environment: This involves a comprehensive understanding of the existing infrastructure, including applications, databases, network configurations, and dependencies. It’s crucial to identify potential compatibility issues and data formats that might need transformation during migration. Data discovery tools can be used to automatically scan and analyze the source environment.

- Define the Migration Timeline: Develop a detailed timeline, including start and end dates for each migration phase, and critical milestones. This timeline should account for potential delays, such as unexpected issues or resource constraints. Gantt charts are often employed to visually represent the project schedule, tasks, and dependencies.

- Establish Data Migration Strategy: Determine the specific approach for data migration. This may involve a “big bang” migration, where all data is migrated at once, or a phased approach, migrating data in smaller batches. The choice depends on factors like data volume, downtime tolerance, and application dependencies.

- Develop a Testing and Validation Plan: Create a thorough testing strategy to validate the migrated data and applications. This includes pre-migration testing (testing the migration tools and processes in a controlled environment), post-migration testing (verifying data integrity and application functionality in the target environment), and performance testing (measuring the performance of applications after migration).

- Design a Rollback Strategy: A robust rollback plan is essential to mitigate risks. This plan should outline the steps to revert to the original source environment if the migration fails or if significant issues arise in the target environment. This involves backing up the source environment and creating procedures to restore it.

- Document the Process: Document every step of the migration process, including configurations, procedures, and troubleshooting guides. This documentation is invaluable for the POC and serves as a foundation for the full-scale migration.
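Identifying dependencies and defining the order of operations can be sketched with a topological sort. The example below is a minimal illustration, assuming a hypothetical dependency map in which each component lists the components it depends on; the component names are invented for the example. It uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later).

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each key depends on the components in its set.
dependencies = {
    "customers_db": set(),
    "orders_db": {"customers_db"},
    "billing_service": {"orders_db"},
    "web_frontend": {"billing_service", "customers_db"},
}


def migration_order(deps: dict[str, set[str]]) -> list[str]:
    """Return components ordered so each one follows everything it depends on."""
    # static_order() raises graphlib.CycleError if the dependencies are circular.
    return list(TopologicalSorter(deps).static_order())


order = migration_order(dependencies)
print(order)
```

A circular dependency surfaces as an error at design time, which is usually a cheaper place to discover it than during execution.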

Migration Workflow Flowchart

A visual representation of the migration process aids in understanding the sequential steps and interdependencies. The flowchart below provides a generalized overview of the workflow.

Start -> Assess Source Environment -> Define Migration Scope -> Select Migration Tools -> Prepare Target Environment -> Data Extraction & Transformation -> Data Migration -> Data Validation & Testing -> Application Migration -> Application Testing -> Rollback Required? (Yes/No) -> (If Yes) Rollback to Source Environment -> (If No) Migration Complete -> End

The flowchart illustrates the key stages, starting with an assessment of the source environment and concluding with either successful migration or rollback. Each stage has specific activities, such as data extraction, transformation, migration, and validation. The rollback plan acts as a safety net.

Examples of Successful and Unsuccessful Migration Process Designs

Analyzing real-world examples provides valuable insights into effective and ineffective migration strategies.

- Successful Example: A large financial institution migrated its core banking system to a cloud environment. The successful design involved a phased approach, starting with a pilot migration of a small subset of data and applications. The team meticulously documented the process, created a comprehensive testing plan, and established a robust rollback strategy. The phased approach allowed for continuous monitoring and adjustments, leading to a smooth and successful migration. They used tools like AWS Database Migration Service (DMS) for data migration, minimizing downtime and ensuring data consistency. The meticulous planning and testing, with clearly defined rollback procedures, were key factors in their success.

- Unsuccessful Example: A manufacturing company attempted a “big bang” migration of its enterprise resource planning (ERP) system without adequate testing or a rollback plan. The migration encountered numerous issues, including data corruption, application incompatibility, and prolonged downtime. The lack of pre-migration testing and the absence of a viable rollback strategy resulted in significant business disruption. The company underestimated the complexity of the migration and failed to adequately prepare the target environment. This led to operational inefficiencies and financial losses. The absence of clear communication and insufficient resource allocation further compounded the problems.

The successful example highlights the importance of a phased approach, rigorous testing, and a well-defined rollback strategy. The unsuccessful example underscores the critical need for thorough planning, adequate testing, and a fallback plan to minimize risks.

Execute the Migration

The execution phase of a Proof of Concept (POC) migration is a critical juncture where the theoretical design and planning converge with practical implementation. Meticulous execution, comprehensive logging, and proactive monitoring are essential to ensure a successful migration and to gather valuable insights for the full-scale migration. This section will detail the specific steps involved, the necessary monitoring processes, and the potential challenges that may arise during the execution phase.

Migration Execution Steps

The execution of the migration involves a structured sequence of steps designed to transfer data and applications from the source environment to the target environment. Careful adherence to these steps minimizes risks and facilitates efficient problem-solving.

- Pre-migration Preparation: This stage ensures the target environment is adequately provisioned and configured to receive the migrated data and applications. This includes verifying network connectivity, security settings, and the availability of necessary resources like storage and compute power. Any pre-requisite software or configurations must be installed and validated.

- Data Backup: A complete and verifiable backup of the source data is paramount. This backup serves as a safety net, enabling rollback to the original state in case of migration failures or data corruption. The backup process should be documented, including the methods, tools, and the verification steps undertaken to confirm data integrity.

- Migration Initiation: The migration process is initiated using the selected migration tools and methods. This involves configuring the tools with the appropriate source and target environment details, selecting the data to be migrated, and defining the migration schedule (if applicable).

- Data Transfer: The selected data is transferred from the source to the target environment. The speed and method of transfer depend on factors such as data volume, network bandwidth, and the chosen migration tools. Data transfer may involve techniques like bulk transfer, incremental synchronization, or a combination of both.

- Data Transformation (if applicable): If data transformation is required, it is performed during or after the data transfer. This might involve data cleansing, formatting, or schema mapping to ensure compatibility with the target environment. The transformation process must be thoroughly tested to ensure data integrity and accuracy.

- Application Deployment and Configuration: Once the data is migrated, the applications are deployed and configured in the target environment. This involves installing application components, configuring application settings, and integrating with the migrated data.

- Testing and Validation: Rigorous testing and validation are performed to ensure the migrated data and applications function correctly in the target environment. This includes functional testing, performance testing, and security testing. Data integrity checks are also crucial to confirm data accuracy and completeness.

- Cutover and Verification: The cutover involves switching users and systems to the target environment. Post-cutover verification confirms that all applications and data are operational and accessible. This includes verifying system performance, data accuracy, and user experience.

- Rollback Plan Execution (if needed): In case of migration failure, the rollback plan is executed to revert to the original state. This involves restoring the data from the backup and reverting any configuration changes made during the migration. The rollback process must be tested and documented.
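The relationship between the ordered steps and the rollback plan can be illustrated with a small runner that undoes completed steps in reverse order when a later step fails. This is a sketch under stated assumptions: the step names, the `run_with_rollback` helper, and the simulated failure are all hypothetical, and real steps would call the chosen migration tooling instead of mutating a list.

```python
from typing import Callable

# Each step is (name, action, undo); both callables take no arguments.
Step = tuple[str, Callable[[], None], Callable[[], None]]


def run_with_rollback(steps: list[Step]) -> tuple[bool, list[str]]:
    """Run steps in order; on failure, undo the completed steps in reverse."""
    completed: list[Step] = []
    log: list[str] = []
    for step in steps:
        name, action, _undo = step
        try:
            action()
        except Exception as exc:
            log.append(f"FAILED {name}: {exc}")
            for done_name, _action, undo in reversed(completed):
                undo()
                log.append(f"ROLLED BACK {done_name}")
            return False, log
        completed.append(step)
        log.append(f"OK {name}")
    return True, log


def fail_load() -> None:
    """Stand-in for a data load that breaks part-way through."""
    raise RuntimeError("simulated network drop")


state: list[str] = []  # Tracks what currently exists in the pretend target.
steps: list[Step] = [
    ("provision_target", lambda: state.append("target"), lambda: state.remove("target")),
    ("load_data", fail_load, lambda: None),
]
ok, log = run_with_rollback(steps)
print(ok, log, state)
```

Pairing every action with its undo at design time forces the rollback procedure to be written, and therefore testable, before the migration starts.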

Logging and Monitoring Processes

Robust logging and monitoring are essential for tracking the progress of the migration, identifying potential issues, and ensuring data integrity. Implementing these processes provides valuable insights into the migration process, allowing for real-time adjustments and informed decision-making.

- Comprehensive Logging: Detailed logs should be generated at every stage of the migration process. These logs should capture events such as data transfer progress, errors, warnings, and system performance metrics. Logging should include timestamps, user context, and relevant configuration details.

- Real-time Monitoring: Real-time monitoring tools should be employed to track key performance indicators (KPIs) such as data transfer rates, resource utilization, and application performance. Monitoring dashboards should provide a consolidated view of the migration status, allowing for quick identification of bottlenecks or anomalies.

- Alerting and Notifications: Automated alerting mechanisms should be configured to notify the migration team of critical events, such as migration failures, performance degradation, or security breaches. These alerts should be triggered based on predefined thresholds and should provide actionable information for rapid response.

- Performance Metrics: Track metrics such as data transfer speed (GB/hour), latency, resource utilization (CPU, memory, disk I/O), and application response times. Analyzing these metrics over time allows for identifying performance trends and optimizing the migration process.

- Error Handling and Reporting: Establish clear procedures for handling errors and reporting issues. Errors should be categorized and prioritized based on their severity. Reporting should include detailed information about the error, its impact, and the steps taken to resolve it.
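A minimal sketch of timestamped logging combined with a threshold-based alert on transfer speed might look like the following. The logger name `poc.migration`, the threshold `MIN_GB_PER_HOUR`, and both helper functions are assumptions for illustration; a real POC would normally forward the warning to its own alerting channel.

```python
import logging

logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(name)s %(message)s",
)
logger = logging.getLogger("poc.migration")

MIN_GB_PER_HOUR = 50.0  # Hypothetical alert threshold; tune per POC.


def throughput_gb_per_hour(bytes_transferred: int, elapsed_seconds: float) -> float:
    """Convert a byte count and elapsed time into GB/hour (1 GB = 10**9 bytes)."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return (bytes_transferred / 1e9) / (elapsed_seconds / 3600)


def check_throughput(bytes_transferred: int, elapsed_seconds: float) -> bool:
    """Log the current rate and return True when it is below the alert threshold."""
    rate = throughput_gb_per_hour(bytes_transferred, elapsed_seconds)
    if rate < MIN_GB_PER_HOUR:
        logger.warning("throughput %.1f GB/h below threshold %.1f", rate, MIN_GB_PER_HOUR)
        return True
    logger.info("throughput %.1f GB/h", rate)
    return False


# 10 GB moved in one hour is below the assumed threshold, so this logs a warning.
check_throughput(10 * 10**9, 3600)
```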

Potential Challenges and Mitigation Strategies

Migration projects often encounter unforeseen challenges. Proactive planning and the implementation of mitigation strategies are crucial for minimizing risks and ensuring a successful outcome.

- Network Connectivity Issues: Network connectivity problems can disrupt data transfer and slow down the migration process.

- Mitigation: Implement robust network monitoring, ensure sufficient bandwidth, and utilize network optimization techniques. Pre-test network performance between the source and target environments. Consider using a dedicated network for the migration process.

- Data Inconsistencies: Data inconsistencies can occur during data transfer or transformation, leading to data corruption or loss.

- Mitigation: Implement data validation checks, data cleansing routines, and data transformation mapping. Perform thorough testing and validation of the migrated data. Use checksums and data integrity checks.

- Application Compatibility Issues: Applications may not function correctly in the target environment due to compatibility issues or configuration problems.

- Mitigation: Conduct thorough application compatibility testing before migration. Ensure the target environment meets the application’s requirements. Develop detailed deployment and configuration procedures.

- Performance Bottlenecks: Performance bottlenecks can slow down the migration process and impact application performance in the target environment.

- Mitigation: Monitor resource utilization and identify bottlenecks. Optimize data transfer and transformation processes. Scale up resources in the target environment if necessary. Optimize the database schema and queries.

- Security Vulnerabilities: Security vulnerabilities can arise during the migration process, exposing data to unauthorized access or breaches.

- Mitigation: Implement robust security measures, including encryption, access controls, and network segmentation. Conduct security audits and vulnerability assessments. Adhere to security best practices throughout the migration process.

- Data Volume and Transfer Time: Large data volumes can lead to extended migration times, impacting project timelines.

- Mitigation: Optimize data transfer methods, such as using parallel transfers or data compression. Implement incremental migration strategies. Consider using a data staging area.
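Two of the mitigations above, compression with parallel processing and checksum verification, can be combined in a short sketch. The network hop is only simulated here: chunks are compressed in a thread pool and then verified and reassembled in the same process. The function names and the chunk size are assumptions for illustration.

```python
import hashlib
import zlib
from concurrent.futures import ThreadPoolExecutor


def pack_chunk(chunk: bytes) -> tuple[bytes, str]:
    """Compress one chunk and return it with the SHA-256 of the original bytes."""
    return zlib.compress(chunk), hashlib.sha256(chunk).hexdigest()


def unpack_chunk(packed: bytes, expected_sha256: str) -> bytes:
    """Decompress a chunk and fail loudly if its checksum does not match."""
    chunk = zlib.decompress(packed)
    if hashlib.sha256(chunk).hexdigest() != expected_sha256:
        raise ValueError("checksum mismatch after transfer")
    return chunk


def transfer(data: bytes, chunk_size: int = 1024, workers: int = 4) -> bytes:
    """Split data into chunks, pack them in parallel, then verify and reassemble."""
    chunks = [data[i : i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        packed = list(pool.map(pack_chunk, chunks))  # map preserves input order
    return b"".join(unpack_chunk(body, digest) for body, digest in packed)


payload = b"row-data;" * 1000
assert transfer(payload) == payload
```

Checking each chunk against a checksum of the uncompressed bytes means a corrupted chunk can be retried on its own rather than restarting the whole transfer.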

Test and Validate the Migrated System

Thorough testing and validation are critical phases following a migration Proof of Concept (POC). They ensure the migrated system functions correctly, meets performance requirements, and maintains data integrity. This stage provides the necessary evidence to validate the migration strategy and identify any issues before a full-scale migration. Rigorous testing minimizes the risk of operational disruptions and data loss.

Importance of Post-Migration Testing and Validation

Post-migration testing and validation are essential to confirm the success of the migration process. This process verifies that the migrated system operates as expected and that the business objectives are achieved. Failure to adequately test can lead to serious consequences, including data corruption, system downtime, and user dissatisfaction. The testing phase also allows for the identification of any performance bottlenecks or compatibility issues that may not have been apparent during the initial planning stages.

Test Cases for Post-Migration Validation

A comprehensive testing strategy includes various test cases to cover different aspects of the migrated system. This ensures that all functionalities are properly migrated and operational.

- Functional Testing: This verifies that all system functionalities operate as expected after the migration. Test cases cover core business processes, user interfaces, and integrations with other systems. For example, testing the ability to create, read, update, and delete (CRUD) operations on data within the migrated database.

- Performance Testing: This evaluates the performance of the migrated system under various load conditions. It assesses response times, throughput, and resource utilization. Performance testing may involve simulating a high volume of user requests to identify potential performance bottlenecks. A common example is stress testing, where the system is subjected to extreme loads to determine its breaking point.

- Data Integrity Testing: This ensures that the data has been migrated accurately and consistently. It includes checking data completeness, accuracy, and consistency across different systems. Test cases might involve comparing data sets between the source and target systems using checksums or other validation methods.

- Security Testing: This verifies that the migrated system maintains the same level of security as the original system. It includes testing access controls, data encryption, and vulnerability scanning. Penetration testing can be performed to simulate attacks and identify security weaknesses.

- Compatibility Testing: This verifies that the migrated system is compatible with existing hardware, software, and network infrastructure. This may involve testing the system with different operating systems, web browsers, and mobile devices.

- Disaster Recovery Testing: This validates the disaster recovery plan by simulating failures and verifying the ability to restore the system and data. It assesses the recovery time objective (RTO) and recovery point objective (RPO) to ensure business continuity. This could involve simulating a server outage and confirming the ability to failover to a backup system.

- User Acceptance Testing (UAT): This involves end-users testing the migrated system to ensure it meets their business requirements. It includes user-defined test cases and scenarios to validate the functionality and usability of the system from the end-user’s perspective.
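The data integrity test case can be sketched as a comparison of row counts and checksums between source and target. The example below uses two in-memory SQLite databases as stand-ins for the real systems; the `customers` table, its columns, and the helper names are assumptions for illustration. Hashing `repr(row)` is only valid when both sides return identical Python types, so a cross-engine comparison would need values normalized first.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> tuple[int, str]:
    """Return (row_count, sha256) over all rows of a table, ordered by its key column."""
    digest = hashlib.sha256()
    count = 0
    # Table and column names come from trusted POC configuration, not user input.
    for row in conn.execute(f'SELECT * FROM "{table}" ORDER BY "{key}"'):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def make_db(rows: list[tuple[int, str]]) -> sqlite3.Connection:
    """Build an in-memory stand-in database with a small customers table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)
    return conn


source = make_db([(1, "Ada"), (2, "Grace")])
target_ok = make_db([(2, "Grace"), (1, "Ada")])   # same rows, different insert order
target_bad = make_db([(1, "Ada"), (2, "Grase")])  # one corrupted value

print(table_fingerprint(source, "customers", "id") == table_fingerprint(target_ok, "customers", "id"))
print(table_fingerprint(source, "customers", "id") == table_fingerprint(target_bad, "customers", "id"))
```

Ordering by the key before hashing keeps the fingerprint independent of insertion order, so only genuine content differences cause a mismatch.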

Examples of Validation Reports

Validation reports provide a documented record of the testing process and its outcomes. These reports should include detailed information about the tests performed, the results obtained, and any identified issues.

Here are some examples of what validation reports might include:

- Functional Testing Report: This report documents the functional tests performed, including test cases, expected results, actual results, and any deviations. It provides a summary of the system’s functionality and identifies any functional defects.

- Performance Testing Report: This report provides the results of performance tests, including response times, throughput, resource utilization, and error rates. It includes graphs and charts to visualize performance metrics and identify performance bottlenecks.

- Data Integrity Report: This report documents the data validation process, including data comparisons, checksums, and data consistency checks. It identifies any data discrepancies and ensures data integrity across systems.

- Security Testing Report: This report documents the results of security testing, including vulnerability scans, penetration tests, and access control audits. It identifies security vulnerabilities and provides recommendations for remediation.

- User Acceptance Testing (UAT) Report: This report documents the results of UAT, including user feedback, test case results, and any issues or concerns raised by end-users. It provides a summary of user acceptance and identifies any areas that require further refinement.

Measure and Analyze Results

Measuring and analyzing the results of the Proof of Concept (POC) migration is critical for determining its success and informing the full-scale migration strategy. This phase involves collecting data, performing rigorous analysis, and identifying areas for improvement. The insights gained here directly influence the refinement of the migration plan and the allocation of resources.

Methods for Measuring and Analyzing Results

To effectively evaluate the POC migration, several methods are employed to gather and interpret relevant data. This multi-faceted approach ensures a comprehensive understanding of the system’s performance and identifies potential issues.

- Performance Metrics Collection: Gathering data on key performance indicators (KPIs) is fundamental. These metrics provide quantifiable evidence of the migrated system’s performance compared to the original system. The data collected should encompass a range of aspects, including response times, throughput, resource utilization (CPU, memory, disk I/O), and error rates. Tools like performance monitoring software, system logs, and application-specific monitoring dashboards are employed to collect this data continuously during the POC.

- Functional Testing: Verifying the functional correctness of the migrated system is crucial. This involves executing a series of tests designed to validate that the system’s functionalities operate as expected. Test cases should cover various scenarios, including normal operations, edge cases, and error conditions. The results of these tests are documented, and any discrepancies between the expected and actual outcomes are meticulously recorded. Test management tools and test automation frameworks are often used to streamline this process.

- User Acceptance Testing (UAT): Involving end-users in the testing process provides valuable feedback on the usability and performance of the migrated system from a user’s perspective. This is particularly important for assessing the impact of the migration on user workflows and productivity. UAT typically involves providing users with access to the migrated system and asking them to perform specific tasks or scenarios. Their feedback, including observations on the system’s responsiveness, ease of use, and any encountered issues, is carefully collected and analyzed.

- Data Integrity Verification: Ensuring the integrity of the migrated data is paramount. This involves verifying that data has been accurately and completely transferred from the source system to the target system. Data integrity checks should cover various aspects, including data consistency, completeness, and accuracy. Techniques such as data validation rules, checksums, and data reconciliation reports are used to identify and address any data discrepancies.

- Cost Analysis: Evaluating the costs associated with the POC migration is essential for determining the overall feasibility and economic viability of the full-scale migration. This involves analyzing the costs of resources, including infrastructure, personnel, and tools. Cost-benefit analysis is often performed to compare the costs of the migration with the potential benefits, such as reduced operational expenses, improved performance, and enhanced scalability.

Comparison Table of Performance

A structured comparison is vital for assessing the performance differences between the original and migrated systems. The following table provides a framework for comparing key performance indicators, enabling a clear understanding of the migration’s impact.

| Metric | Original System | Migrated System | Difference/Impact |
|---|---|---|---|
| Average Response Time (ms) | 250 | 280 | Increased by 30 ms (12% increase) |
| Transaction Throughput (transactions/second) | 1000 | 950 | Decreased by 50 transactions/second (5% decrease) |
| CPU Utilization (%) | 60 | 70 | Increased by 10 percentage points |
| Memory Utilization (%) | 75 | 78 | Increased by 3 percentage points |
| Error Rate (%) | 0.5 | 1.2 | Increased by 0.7 percentage points |

Note: These values are illustrative examples. Actual results will vary based on the specific systems and migration process.
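The differences in the table can be reproduced with a few lines of arithmetic. The sketch below uses the illustrative values from the table; the `compare` helper is a hypothetical name. For metrics that are already percentages, the absolute change is in percentage points, which is not the same as the relative change: CPU utilization moving from 60 to 70 is 10 points but roughly a 16.7% relative increase.

```python
def compare(original: float, migrated: float) -> tuple[float, float]:
    """Return (absolute change, relative change in percent) from original to migrated."""
    delta = migrated - original
    return delta, delta * 100 / original


# Illustrative values copied from the comparison table: (original, migrated).
metrics = {
    "Average Response Time (ms)": (250, 280),
    "Transaction Throughput (tx/s)": (1000, 950),
    "CPU Utilization (%)": (60, 70),
    "Memory Utilization (%)": (75, 78),
    "Error Rate (%)": (0.5, 1.2),
}

for name, (original, migrated) in metrics.items():
    delta, pct = compare(original, migrated)
    print(f"{name}: {delta:+.1f} absolute, {pct:+.1f}% relative")
```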

Identifying and Addressing Issues

The analysis of the POC results often reveals issues that require attention. A systematic approach to identifying and addressing these issues is crucial for the successful migration.

- Issue Identification: The first step involves identifying the issues discovered during the analysis phase. This includes reviewing the performance metrics, test results, user feedback, and any reported errors or anomalies. The issues should be categorized based on their nature (e.g., performance, functional, data integrity) and severity.

- Root Cause Analysis: Determining the root cause of each issue is critical. This may involve examining system logs, monitoring resource utilization, and conducting further testing. Techniques like the “5 Whys” method can be employed to drill down to the underlying cause of the problem. For example, if the average response time has increased, the “5 Whys” might lead to the identification of a database bottleneck.

- Remediation Planning: Developing a plan to address each identified issue is essential. The plan should include specific actions, timelines, and resource allocation. The remediation strategies may vary depending on the nature of the issue. For example, performance issues might be addressed by optimizing code, upgrading hardware, or adjusting system configurations. Data integrity issues may require data cleansing, data transformation, or re-migration of the affected data.

- Implementation and Testing: Implementing the remediation plan and testing the solutions is a critical step. This involves making the necessary changes to the migrated system and then conducting thorough testing to verify that the issue has been resolved and that the changes have not introduced any new problems. The testing should include both functional testing and performance testing.

- Documentation and Lessons Learned: Documenting the issues, the root causes, the remediation steps, and the outcomes is crucial. This documentation serves as a valuable resource for future migrations and helps to avoid repeating the same mistakes. Lessons learned from the POC should be incorporated into the full-scale migration plan to improve its effectiveness.

Document the POC Findings

Documenting the findings of a Proof of Concept (POC) is crucial for several reasons. It provides a comprehensive record of the entire process, from initial objectives to final outcomes. This documentation serves as a vital decision-making tool, enabling stakeholders to assess the viability and feasibility of the proposed migration strategy. Furthermore, it establishes a baseline for future reference, allowing for the identification of lessons learned, best practices, and potential pitfalls.

Accurate and detailed documentation ensures transparency, accountability, and facilitates effective communication among project teams and stakeholders. It also serves as a critical component in building a strong business case for the full-scale migration project.

Importance of Documenting POC Findings

The documentation of a migration POC offers multiple benefits, encompassing strategic, operational, and financial aspects. These benefits contribute to the overall success of the migration project.

- Decision-Making Support: Detailed documentation provides the data and insights necessary for informed decision-making. This allows stakeholders to assess the technical and business viability of the proposed migration.

- Risk Mitigation: Thorough documentation enables the early identification of potential risks and challenges associated with the migration process. This allows for proactive mitigation strategies to be developed.

- Knowledge Sharing: The POC report serves as a central repository of knowledge, facilitating the sharing of information and expertise across project teams and stakeholders.

- Process Improvement: Documenting the entire process allows for the identification of areas for improvement in the migration strategy, tools, and methods.

- Cost Optimization: By analyzing the results of the POC, organizations can refine their migration plans to optimize costs and resource allocation.

- Compliance and Auditability: A well-documented POC provides a clear audit trail, ensuring compliance with regulatory requirements and industry standards.

POC Report Template

A structured POC report is essential for presenting findings in a clear and concise manner. The following template provides a framework for documenting the key aspects of the POC.

- Executive Summary: This section provides a high-level overview of the POC, including its objectives, methodology, key findings, and recommendations. It should be concise and easily understandable by non-technical stakeholders.

- Introduction: The introduction provides context for the POC, including the rationale for the migration, the scope of the project, and the target audience.

- Objectives: Clearly defined objectives are the foundation of the POC. This section should outline the specific goals the POC aimed to achieve.

- Methodology: This section details the approach taken to conduct the POC. It should include:

- Environment Setup: Description of the source and target environments, including hardware, software, and network configurations.

- Data Selection: Explanation of the data chosen for migration, including the rationale for its selection.

- Tools and Methods: Description of the migration tools and methods used, including their configuration and usage.

- Migration Process: Step-by-step description of the migration process, including any customizations or modifications.

- Results: This section presents the findings of the POC. It should include:

  - Migration Success Rate: Percentage of data successfully migrated.

  - Performance Metrics: Key performance indicators (KPIs) such as migration speed, downtime, and resource utilization.

  - Data Integrity: Verification of data integrity after migration.

  - Security Assessment: Evaluation of the security posture of the migrated data.

  - Cost Analysis: Analysis of the costs associated with the POC, including labor, tools, and infrastructure.

- Analysis: The analysis section provides an interpretation of the results, identifying key trends, patterns, and insights.

- Challenges and Lessons Learned: This section highlights the challenges encountered during the POC and the lessons learned from the experience.

- Recommendations: Based on the findings, the recommendations section provides specific actions to be taken for the full-scale migration.

- Conclusion: The conclusion summarizes the key findings and their implications.

- Appendices: The appendices may include supporting documentation such as detailed test results, configuration files, and screenshots.
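The success rate and data integrity figures in the Results section can be produced by comparing source and target rows directly. The following is a minimal Python sketch, not a prescribed method: it assumes both sides can be loaded as dictionaries keyed by primary key, and the names `row_checksum` and `summarize_migration` as well as the sample data are hypothetical.

```python
import hashlib


def row_checksum(row):
    """Return a SHA-256 digest of a row (a tuple of column values).

    repr() keeps None, "" and 0 distinct, so type drift is also flagged.
    """
    return hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest()


def summarize_migration(source_rows, target_rows):
    """Compare rows by primary key; both arguments map key -> tuple of values."""
    missing = [k for k in source_rows if k not in target_rows]
    migrated = [k for k in source_rows if k in target_rows]
    mismatched = [
        k for k in migrated
        if row_checksum(source_rows[k]) != row_checksum(target_rows[k])
    ]
    total = len(source_rows)
    intact = len(migrated) - len(mismatched)
    return {
        # Share of source rows that arrived in the target unchanged.
        "success_rate_pct": round(100.0 * intact / total, 2) if total else 0.0,
        "missing_keys": missing,
        "mismatched_keys": mismatched,
    }


# Hypothetical sample data: row 2 was altered in transit, row 4 never arrived.
source = {1: ("alice", 100), 2: ("bob", 250), 3: ("carol", None), 4: ("dave", 75)}
target = {1: ("alice", 100), 2: ("bob", 205), 3: ("carol", None)}

report = summarize_migration(source, target)
print(report)
```

For large tables, the same comparison would usually be pushed into the databases themselves or run per batch; the in-memory version here is only meant to show which numbers feed the report.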

Examples of Key Takeaways

Real-world examples illustrate the value of documented POC findings in both successful and unsuccessful migration efforts.

- Successful Migration POC: A financial services company conducted a POC to migrate its core banking system to a cloud environment. The POC report documented the following key takeaways:

  - Objective: Validate the feasibility of migrating a critical application to the cloud.

  - Methodology: Used a phased approach, migrating a subset of data and testing various migration tools.

  - Results: Demonstrated a 98% success rate in data migration, with minimal downtime and improved performance. Cost analysis revealed a 20% reduction in infrastructure costs.

  - Recommendations: Proceed with the full-scale migration, leveraging the selected tools and methods.

This successful POC provided the necessary confidence and justification to proceed with the full migration, resulting in significant cost savings and performance improvements.

- Unsuccessful Migration POC: A retail company conducted a POC to migrate its e-commerce system to a new platform. The POC report highlighted several critical issues:

  - Objective: Assess the performance and scalability of the new platform.

  - Methodology: Selected a small dataset and tested the platform’s ability to handle a limited number of transactions.

  - Results: The platform experienced significant performance degradation during peak load testing, with a high error rate. Data integrity issues were also identified. Cost analysis showed that the new platform would be more expensive to maintain.

  - Recommendations: Re-evaluate the platform selection and address the identified performance and data integrity issues.

This unsuccessful POC allowed the company to avoid a costly and potentially damaging full-scale migration. It highlighted the importance of thorough testing and careful platform selection. The detailed documentation provided insights into the shortcomings of the new platform, preventing a larger failure.

Closing Summary

In conclusion, a well-planned and executed Proof of Concept (POC) is indispensable for a successful migration. By methodically following the outlined steps, organizations can mitigate risks, optimize their migration strategy, and ensure a smooth transition to the target environment. The insights gained from the POC provide a solid foundation for a full-scale migration, leading to improved performance, cost savings, and enhanced operational efficiency.

The care taken over data selection, tool evaluation, and process design reflects the value of a systematic approach to migration projects.

Clarifying Questions

What is the primary benefit of conducting a migration POC?

The primary benefit is risk mitigation. A POC allows for the identification and resolution of potential issues before a full-scale migration, reducing the likelihood of costly errors and downtime.

How much data should be included in the POC?

The data volume should be representative of the full dataset, typically a subset that includes a variety of data types and complexities. The goal is to simulate the real-world migration scenario accurately without overwhelming the POC environment.
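One way to obtain such a representative subset is stratified sampling: take a fixed fraction from each category of data, but never fewer than one item, so that rare types still appear in the POC. The sketch below is illustrative only; the function name `stratified_sample`, the record layout, and the 10% fraction are assumptions, not recommendations from this guide.

```python
import random
from collections import defaultdict


def stratified_sample(records, key, fraction, seed=0):
    """Pick roughly `fraction` of the records from each group, at least one per group."""
    rng = random.Random(seed)  # fixed seed so the POC dataset is reproducible
    groups = defaultdict(list)
    for rec in records:
        groups[key(rec)].append(rec)

    sample = []
    for name in sorted(groups):
        members = groups[name]
        n = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, n))
    return sample


# Hypothetical inventory: many orders, fewer invoices, very few attachments.
records = (
    [{"id": i, "type": "order"} for i in range(100)]
    + [{"id": i, "type": "invoice"} for i in range(100, 120)]
    + [{"id": i, "type": "attachment"} for i in range(120, 123)]
)

subset = stratified_sample(records, key=lambda r: r["type"], fraction=0.1)
print(len(subset), sorted({r["type"] for r in subset}))
```

Grouping by data type is only one possible choice of stratum; table, size bucket, or owning application may be more relevant depending on where the migration risk lies.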

What are the key performance indicators (KPIs) to measure during a migration POC?

Key KPIs include migration speed, data integrity, application performance in the target environment, resource utilization (CPU, memory, storage), and cost effectiveness.
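Migration speed is the easiest of these KPIs to capture automatically. The following is a rough sketch that times a batch-wise migration and reports rows per second; `measure_throughput` and the `migrate_batch` callback are hypothetical stand-ins for whatever tool or script actually moves the data.

```python
import time


def measure_throughput(migrate_batch, batches):
    """Run migrate_batch on each batch and report rows, elapsed time and rows/second."""
    start = time.perf_counter()
    rows = 0
    for batch in batches:
        migrate_batch(batch)
        rows += len(batch)
    elapsed = time.perf_counter() - start
    return {
        "rows": rows,
        "seconds": elapsed,
        "rows_per_second": rows / elapsed if elapsed > 0 else float("inf"),
    }


# Stand-in for a real migration step: copies rows into an in-memory list.
target_store = []
batches = [list(range(i, i + 500)) for i in range(0, 2000, 500)]

stats = measure_throughput(target_store.extend, batches)
print(stats["rows"], "rows migrated")
```

Resource utilization and application performance in the target environment generally need monitoring tools on the systems involved and are not covered by this sketch.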

How long should a migration POC take?

The duration of a POC depends on the complexity of the migration and the size of the data. It typically ranges from a few days to a few weeks, allowing sufficient time for execution, testing, and analysis.

What happens if the POC fails?

A POC failure provides valuable insights. The results should be analyzed to identify the root causes of the failure, and the migration strategy should be adjusted accordingly. Treated as a learning experience, it helps prevent the same issues from occurring during the full migration.