Data migration is a critical undertaking for modern businesses, and it combines technological change with operational risk. The process is essential for upgrading infrastructure, adopting new technologies, or consolidating data, yet it can inadvertently disrupt business operations. Meticulous planning and execution of migration strategies are therefore paramount to ensure business continuity, minimize downtime, and safeguard data integrity throughout the transition.

This guide covers the core principles and practical steps required to navigate a migration while keeping the business running. From pre-migration assessment and strategic planning to post-migration support and optimization, it explores proven methodologies and best practices for a smooth, secure transition, so that businesses can realize the benefits of migration without compromising day-to-day operations.

Understanding Business Continuity and Migration

Business continuity and migration are critical aspects of modern business operations, demanding careful planning and execution. Successfully navigating these processes ensures operational resilience and minimizes disruption, allowing organizations to adapt and thrive in a dynamic environment. This section will explore the fundamental concepts underpinning business continuity and the intricacies of migration within a business context.

Core Principles of Business Continuity Planning

Business continuity planning (BCP) is a proactive approach to ensure an organization can continue its critical operations despite disruptive events. It encompasses a set of strategies, plans, and procedures designed to mitigate risks and maintain essential business functions.

- Risk Assessment and Impact Analysis: This involves identifying potential threats (e.g., natural disasters, cyberattacks, system failures) and assessing their likelihood and impact on business processes. The impact analysis quantifies the potential financial, operational, and reputational damage.

- Business Impact Analysis (BIA): A BIA is a comprehensive assessment of the potential consequences of disruptions to critical business functions. It identifies the time-sensitive processes, resources, and dependencies required for business survival. The BIA typically determines Recovery Time Objectives (RTO) and Recovery Point Objectives (RPO).

  - Recovery Time Objective (RTO): The maximum acceptable downtime for a business process. For example, a financial trading platform might have an RTO of minutes.

  - Recovery Point Objective (RPO): The maximum acceptable data loss in the event of a disruption, expressed as a period of time. For example, a customer database might have an RPO of one hour.

- Strategy Development: Based on the risk assessment and BIA, organizations develop specific strategies to address potential disruptions. These strategies may include:

  - Data Backup and Recovery: Implementing robust data backup and recovery procedures, including offsite backups, to ensure data availability.

  - Failover and Redundancy: Establishing redundant systems and failover mechanisms to automatically switch to backup systems in case of primary system failures.

  - Alternate Worksite and Telecommuting: Planning for alternate work locations and enabling remote work capabilities to maintain operations.

  - Communication Plans: Developing clear communication protocols to keep stakeholders informed during a disruption.

- Plan Development and Implementation: Creating detailed BCP documents that outline the procedures, responsibilities, and resources required to respond to various disruptions. Implementing the plans involves training staff, testing the plans, and ensuring the availability of necessary resources.

- Testing and Maintenance: Regularly testing and updating the BCP to ensure its effectiveness. This includes conducting simulations, drills, and exercises to validate the plan and identify areas for improvement. The BCP must be reviewed and updated periodically to reflect changes in the business environment, technology, and risks.
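To make the RTO and RPO definitions concrete, the sketch below checks a backup-and-restore setup against both objectives. It is a simplification under stated assumptions: backups run at a fixed interval, so the worst-case data loss equals that interval, and the restore duration measured in a recovery drill stands in for total downtime (ignoring detection and decision time). The function name `meets_objectives` and all figures are hypothetical.

```python
from datetime import timedelta


def meets_objectives(backup_interval: timedelta,
                     restore_duration: timedelta,
                     rto: timedelta,
                     rpo: timedelta) -> dict[str, bool]:
    """Check a backup/restore setup against RTO and RPO (illustrative only).

    Assumes periodic backups: a failure just before the next backup loses
    up to one full interval of data, so the worst-case loss is the interval.
    Restore duration is used as a stand-in for total downtime.
    """
    worst_case_data_loss = backup_interval
    return {
        "rpo_met": worst_case_data_loss <= rpo,
        "rto_met": restore_duration <= rto,
    }


# Hypothetical customer database: RPO of one hour, RTO of four hours.
result = meets_objectives(
    backup_interval=timedelta(hours=2),
    restore_duration=timedelta(hours=3),
    rto=timedelta(hours=4),
    rpo=timedelta(hours=1),
)
print(result)  # {'rpo_met': False, 'rto_met': True}
```

In this example the two-hour backup interval violates the one-hour RPO, so backups would need to run at least hourly, while the three-hour restore fits within the four-hour RTO.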

Definition of “Migration” in a Business Context

In a business context, “migration” refers to the process of transferring data, applications, and infrastructure from one environment to another. This could involve moving from on-premises servers to the cloud, upgrading to a new software version, or consolidating data centers.

Common Types of Data Migrations Businesses Undertake

Data migrations are diverse and tailored to specific business needs and technology strategies.

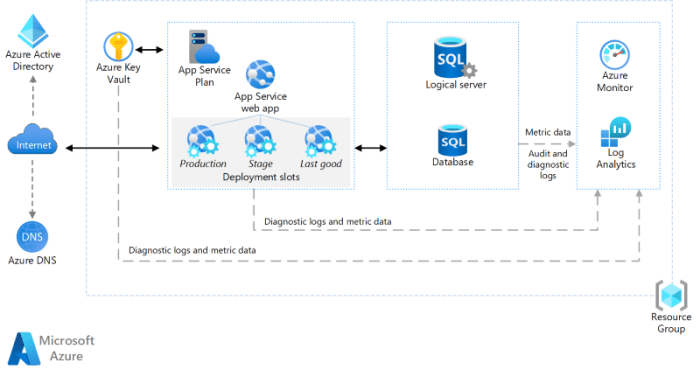

- Cloud Migration: Moving data and applications from on-premises infrastructure to a cloud environment (e.g., AWS, Azure, Google Cloud). This is often driven by cost savings, scalability, and improved agility. For example, a retail company might migrate its e-commerce platform to the cloud to handle peak traffic during holiday seasons.

- Database Migration: Transferring data from one database system to another. This can involve migrating from an older database to a newer version, switching to a different database platform (e.g., from Oracle to PostgreSQL), or consolidating multiple databases.

- Application Migration: Moving applications from one platform or environment to another. This can involve re-platforming applications to run on new operating systems or migrating to a new software architecture.

- Data Center Migration: Moving data and applications from one physical data center to another, often as part of a consolidation or relocation effort. This may involve moving to a new, more efficient data center or outsourcing data center operations.

- Storage Migration: Transferring data from one storage system to another, such as upgrading to a new storage array or moving data to a different storage tier (e.g., from on-premises to cloud storage).

Potential Risks to Business Operations During a Migration Process

Migration processes inherently carry risks that can impact business operations if not managed carefully.

- Downtime: Unplanned downtime during migration can disrupt business operations, leading to lost revenue, productivity losses, and reputational damage. The duration and impact of downtime depend on the complexity of the migration and the effectiveness of the mitigation strategies.

- Data Loss or Corruption: Data loss or corruption can occur during the migration process due to technical errors, human mistakes, or unforeseen events. This can result in data integrity issues, regulatory compliance problems, and business disruptions.

- Security Vulnerabilities: Migration projects can introduce security vulnerabilities if security measures are not adequately implemented and maintained. This can lead to data breaches, unauthorized access, and other security incidents.

- Performance Degradation: Performance degradation can occur if the target environment is not properly configured or if there are compatibility issues between the source and target systems. This can result in slow application response times, reduced productivity, and customer dissatisfaction.

- Cost Overruns: Migration projects can be expensive, and cost overruns are a common risk. This can be due to unforeseen issues, project delays, or inadequate planning.

- Compliance Issues: Migrations must adhere to regulatory compliance requirements, such as GDPR or HIPAA. Non-compliance can result in fines, legal penalties, and reputational damage.

- Application Compatibility Issues: Applications may not be fully compatible with the new environment, requiring code modifications or other adjustments. This can lead to delays, increased costs, and performance issues.

Pre-Migration Planning and Assessment

Effective pre-migration planning and assessment are paramount to ensuring business continuity during any IT infrastructure migration. This phase meticulously prepares the organization for the transition, minimizing disruption and mitigating potential risks. A comprehensive approach involves identifying critical functions, assessing the current IT landscape, evaluating potential challenges, and establishing robust communication channels. This proactive stance significantly increases the likelihood of a successful and seamless migration process.

Identifying Critical Business Functions and Their Dependencies

Identifying critical business functions is the cornerstone of a successful migration strategy. It involves a detailed analysis to determine which functions are essential for the organization’s ongoing operations and survival. This process necessitates a clear understanding of each function’s role, impact, and interdependencies. Failure to prioritize these functions can lead to significant operational downtime and financial losses.

- Business Impact Analysis (BIA): A BIA systematically evaluates the potential impact of disruptions to business functions. It considers factors such as financial loss, reputational damage, and regulatory non-compliance. This analysis helps prioritize functions based on their criticality.

- Function Mapping: Function mapping involves documenting all business functions and their interdependencies. This process includes identifying the systems, applications, data, and personnel required for each function. For example, consider an e-commerce business; order processing, payment gateway integration, and inventory management are critical functions, all interdependent.

- Dependency Analysis: Dependency analysis identifies the resources that each critical function relies on. These resources include hardware, software, network infrastructure, and external services. For instance, the order processing function depends on the availability of the e-commerce platform, the database server, and the payment gateway.

- Recovery Time Objective (RTO) and Recovery Point Objective (RPO) Definition: Defining RTO and RPO for each critical function establishes acceptable limits on downtime and data loss. RTO defines the maximum acceptable time a function can be down, while RPO defines the maximum acceptable data loss. For example, a payment processing system might require an RTO of minutes and an RPO of seconds.
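Function mapping and dependency analysis can be captured as a directed graph. The following sketch, assuming Python 3.9+ for the standard-library `graphlib` module, encodes the e-commerce example above (the component names, and any edges beyond those listed in the text, are hypothetical) and derives two things: everything a critical function relies on, directly or indirectly, and one possible order in which components could be migrated so that each moves only after the components it depends on.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map based on the e-commerce example:
# each key depends on the components listed in its set.
dependencies: dict[str, set[str]] = {
    "order_processing": {"ecommerce_platform", "database_server", "payment_gateway"},
    "inventory_management": {"database_server"},
    "ecommerce_platform": {"database_server"},
    "payment_gateway": set(),
    "database_server": set(),
}


def transitive_dependencies(component: str, graph: dict[str, set[str]]) -> set[str]:
    """Return everything `component` relies on, directly or indirectly."""
    seen: set[str] = set()
    stack = list(graph.get(component, ()))
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            stack.extend(graph.get(dep, ()))
    return seen


# TopologicalSorter treats each value as the node's predecessors, so every
# component appears after everything it depends on.
order = list(TopologicalSorter(dependencies).static_order())

print(sorted(transitive_dependencies("order_processing", dependencies)))
# ['database_server', 'ecommerce_platform', 'payment_gateway']
print(order)
```

If the map contains a circular dependency, `static_order()` raises `graphlib.CycleError`. That is itself a useful finding, because components in a cycle usually have to be migrated together.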

Creating a Checklist for Assessing the Current IT Infrastructure

A thorough assessment of the existing IT infrastructure is essential to identify potential compatibility issues, performance bottlenecks, and other challenges that may impact the migration. A well-structured checklist ensures that all relevant aspects of the infrastructure are examined systematically. This process facilitates the creation of a comprehensive migration plan.

- Hardware Inventory: Compile a detailed inventory of all hardware components, including servers, storage devices, network devices, and end-user devices. Document their specifications, age, and current utilization rates. For example, a server inventory might include the model, CPU, RAM, storage capacity, and operating system.

- Software Inventory: Document all installed software, including operating systems, applications, databases, and middleware. Note version numbers, licensing information, and dependencies. Consider the licensing implications of migrating software to a new environment.

- Network Assessment: Evaluate the network infrastructure, including network topology, bandwidth capacity, latency, and security configurations. Identify potential bottlenecks and compatibility issues. For example, assess the network’s ability to handle increased traffic during and after the migration.

- Data Assessment: Analyze the data stored on the existing infrastructure, including data volume, data types, and data storage locations. Assess data integrity and compliance requirements. Consider the need for data migration tools and strategies.

- Security Assessment: Evaluate the current security posture of the IT infrastructure, including firewalls, intrusion detection systems, and access controls. Identify potential security vulnerabilities and develop a plan to address them during the migration.

- Performance Analysis: Monitor the performance of critical systems and applications to establish baseline performance metrics. Identify potential performance issues that may impact the migration. For instance, analyze CPU utilization, memory usage, and disk I/O.
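A baseline is only useful if it can be compared with measurements taken after the migration. The sketch below summarizes utilization samples into a mean, a 95th percentile, and a maximum using Python's `statistics` module, then flags metrics that grew by more than a tolerance. How the samples are collected is out of scope; the readings and the 10% tolerance are hypothetical.

```python
import statistics


def baseline(samples: list[float]) -> dict[str, float]:
    """Summarize utilization samples (e.g. CPU %) into baseline metrics."""
    if len(samples) < 2:
        raise ValueError("need at least two samples for a baseline")
    # quantiles(n=20) returns 19 cut points; index 18 is the 95th percentile.
    # "inclusive" keeps the estimate within the observed min/max range.
    p95 = statistics.quantiles(samples, n=20, method="inclusive")[18]
    return {
        "mean": statistics.fmean(samples),
        "p95": p95,
        "max": max(samples),
    }


def regressed(before: dict[str, float], after: dict[str, float],
              tolerance: float = 0.10) -> list[str]:
    """Return the metrics that grew by more than `tolerance` (10% by default)."""
    return [name for name, value in before.items()
            if after[name] > value * (1 + tolerance)]


# Hypothetical CPU utilization readings (%) before and after migration.
pre = baseline([35, 40, 42, 38, 55, 61, 47, 44, 39, 41])
post = baseline([36, 40, 42, 38, 66, 75, 47, 44, 39, 41])
print(regressed(pre, post))  # ['p95', 'max']
```

Here the mean stays within tolerance while the 95th percentile and the maximum do not, which is why a baseline should record more than an average.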

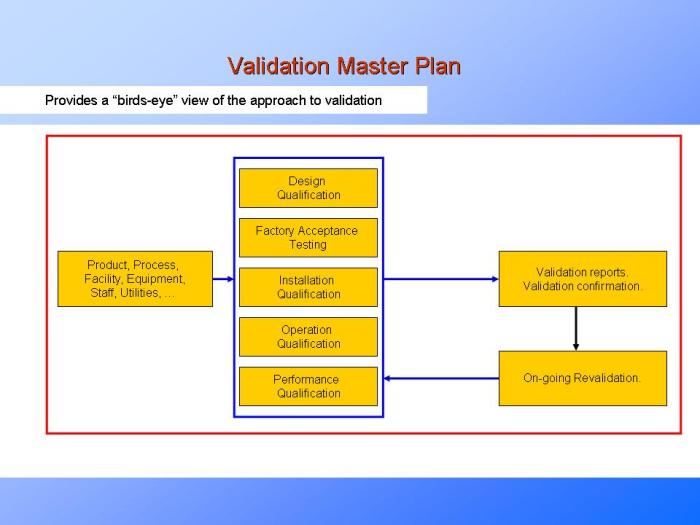

Designing a Risk Assessment Matrix to Evaluate Potential Migration Challenges

A risk assessment matrix is a valuable tool for identifying, evaluating, and mitigating potential risks associated with the migration. This matrix provides a structured approach to analyzing the likelihood and impact of various risks, allowing organizations to prioritize mitigation efforts effectively. The matrix facilitates informed decision-making and proactive risk management.

- Risk Identification: Identify potential risks associated with the migration. These risks can include data loss, downtime, security breaches, performance degradation, and compatibility issues. Examples include application incompatibility, data corruption during migration, and network outages.

- Risk Analysis: Analyze each identified risk by assessing its likelihood and impact. Use a qualitative or quantitative approach to estimate the probability of occurrence and the potential consequences.

- Risk Prioritization: Prioritize risks based on their combined likelihood and impact. Use a risk matrix to visualize the risks and determine which require the most attention. Risks with high likelihood and high impact should be prioritized.

- Risk Mitigation Planning: Develop mitigation strategies for each identified risk. These strategies may include implementing backup and recovery procedures, testing migration processes, and establishing communication protocols. For example, a data loss risk might be mitigated by creating multiple data backups.

- Risk Monitoring and Review: Continuously monitor and review the effectiveness of mitigation strategies. Update the risk assessment matrix as the migration progresses and new risks emerge.
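A risk assessment matrix commonly scores each risk as likelihood multiplied by impact, each on a scale of 1 to 5. The sketch below follows that convention; the scales, the band thresholds (15 and above is high, 6 to 14 medium, below 6 low), and the example ratings are assumptions for illustration, not values from any standard.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        for value in (self.likelihood, self.impact):
            if not 1 <= value <= 5:
                raise ValueError("likelihood and impact must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

    @property
    def rating(self) -> str:
        # Illustrative bands: 15-25 high, 6-14 medium, 1-5 low.
        if self.score >= 15:
            return "high"
        if self.score >= 6:
            return "medium"
        return "low"


# Hypothetical ratings for risks named in this section.
risks = [
    Risk("Application incompatibility", likelihood=3, impact=4),
    Risk("Data corruption during migration", likelihood=2, impact=5),
    Risk("Network outage during cutover", likelihood=2, impact=3),
    Risk("Extended downtime", likelihood=4, impact=4),
]

# Highest combined likelihood x impact first.
prioritized = sorted(risks, key=lambda r: r.score, reverse=True)
for risk in prioritized:
    print(f"{risk.score:>2}  {risk.rating:<6}  {risk.name}")
```

Re-running the scoring as ratings change supports the monitoring and review step: a risk whose likelihood rises mid-migration moves up the list automatically.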

Organizing a Communication Plan for Stakeholders Before the Migration

A well-defined communication plan is essential to keep stakeholders informed throughout the migration process. Effective communication helps manage expectations, minimize confusion, and ensure that all parties are aware of the progress, potential disruptions, and mitigation strategies. Transparency and proactive communication are key to building trust and ensuring a successful migration.

- Identify Stakeholders: Identify all stakeholders who need to be informed about the migration. These stakeholders may include internal teams, external vendors, customers, and regulatory bodies.

- Define Communication Channels: Determine the appropriate communication channels for each stakeholder group. These channels may include email, newsletters, meetings, and online portals.

- Develop Communication Content: Create clear, concise, and timely communication content. This content should include the migration timeline, potential disruptions, and mitigation plans.

- Establish Communication Frequency: Determine the frequency of communication for each stakeholder group. Regular updates are essential to keep stakeholders informed and address any concerns.

- Test Communication Plan: Test the communication plan before the migration to ensure that all channels are functioning correctly and that the content is clear and understandable.

- Monitor and Adapt: Monitor the effectiveness of the communication plan and adapt it as needed. Gather feedback from stakeholders and make adjustments to improve communication effectiveness.

Developing a Migration Strategy

A well-defined migration strategy is crucial for minimizing disruption and ensuring a successful transition during a business migration. This involves careful planning, selection of the appropriate migration approach, and a robust rollback plan. The choice of strategy depends on various factors, including business requirements, risk tolerance, and technical capabilities. A comprehensive strategy ensures a smooth transition while preserving business continuity.

Big Bang Versus Phased Migration Approaches

The choice between a “big bang” and a phased migration significantly impacts the complexity and risk associated with a business migration. Each approach has its advantages and disadvantages, making the selection dependent on the specific circumstances of the migration project.

The “big bang” migration involves a complete switchover to the new system or environment at a specific point in time. This approach minimizes the duration of the migration process, potentially reducing overall costs. However, it carries a higher risk, as any issues encountered during the cutover impact the entire business operation simultaneously. The big bang approach is often suitable for smaller businesses or those with simpler IT infrastructures.

Phased migrations, also known as incremental migrations, involve migrating components, departments, or applications in stages. This approach allows for testing and validation at each stage, mitigating risks and enabling adjustments based on real-world performance. Phased migrations are generally more complex to manage but offer greater flexibility and reduced business disruption. This approach is well-suited for large organizations with complex IT environments.

- Big Bang Migration Advantages:

  - Faster completion time, leading to quicker realization of benefits.

  - Potentially lower overall cost due to the shorter duration.

  - Simpler project management, as the entire migration is executed at once.

- Big Bang Migration Disadvantages:

  - Higher risk of downtime and disruption if the cutover fails.

  - Difficult to roll back if significant issues are encountered.

  - Requires extensive pre-migration testing and planning.

- Phased Migration Advantages:

  - Reduced risk, as issues are isolated to specific phases.

  - Allows for testing and validation at each stage.

  - Greater flexibility to adapt to unforeseen challenges.

  - Easier rollback, as only a portion of the system is affected.

- Phased Migration Disadvantages:

  - Longer overall duration, potentially increasing costs.

  - More complex project management.

  - Requires careful coordination between different phases.
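The control flow of a phased migration, in which each stage is validated before the next begins and a failure is rolled back without touching completed phases, can be sketched as follows. The phase names and the `migrate`, `validate`, and `rollback` callables are hypothetical placeholders for real migration tooling.

```python
from typing import Callable


def run_phased_migration(
    phases: list[str],
    migrate: Callable[[str], None],
    validate: Callable[[str], bool],
    rollback: Callable[[str], None],
) -> list[str]:
    """Migrate phases one at a time, stopping at the first failed validation.

    Returns the phases that completed successfully.
    """
    completed: list[str] = []
    for phase in phases:
        migrate(phase)
        if not validate(phase):
            # Only the failing phase is reverted; completed phases stay in
            # place and later phases are never started.
            rollback(phase)
            print(f"Validation failed for {phase!r}; rolled back and halted")
            break
        completed.append(phase)
    return completed


# Hypothetical phases, with list.append standing in for real tooling.
migrated: list[str] = []
rolled_back: list[str] = []
done = run_phased_migration(
    ["hr", "sales", "finance", "support"],
    migrate=migrated.append,
    validate=lambda phase: phase != "finance",  # simulate a failed check
    rollback=rolled_back.append,
)
print(done)         # ['hr', 'sales']
print(rolled_back)  # ['finance']
```

A big bang migration corresponds to a single phase containing everything, which is why one failed validation there affects the entire business at once.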

Selecting the Appropriate Migration Method

Choosing the correct migration method is crucial for a successful migration. The selection process involves analyzing business needs, assessing the current IT infrastructure, and evaluating the potential risks and benefits of each approach. This decision is often based on a combination of factors, including the size and complexity of the business, the criticality of the applications, and the tolerance for downtime.

The selection process starts with a thorough assessment of business requirements. This involves identifying the critical business functions, the applications that support them, and the acceptable levels of downtime. For example, a financial institution might prioritize minimal downtime for its core banking systems, while a marketing department might be more tolerant of downtime for its internal CRM system.

The next step is to evaluate the existing IT infrastructure. This includes assessing the current hardware, software, and network configurations. Understanding the existing environment is crucial for determining the compatibility of the new environment and identifying potential challenges. For instance, migrating from an older operating system to a newer one requires compatibility testing and careful planning.

Based on the business needs and infrastructure assessment, the appropriate migration method can be selected. Consider the following examples:

- Rehosting (Lift and Shift): Moving applications and data to a new infrastructure without significant changes. This approach is suitable for organizations looking for a quick migration with minimal application changes. An example is moving virtual machines from an on-premises environment to a cloud platform like AWS or Azure.

- Replatforming: Making changes to the underlying platform while keeping the application functionality intact. This might involve migrating a database to a new version or changing the operating system. An example is migrating a database from Oracle to PostgreSQL.

- Refactoring: Redesigning and rewriting the application to improve its functionality, performance, and scalability. This is a more complex approach, but it can lead to significant improvements in the long run. An example is rewriting a monolithic application into microservices.

- Repurchasing: Replacing the existing application with a new, commercially available software package. This is often a good option when the existing application is outdated or no longer supported. An example is replacing an on-premises CRM system with a cloud-based solution like Salesforce.

- Retiring: Discontinuing an application that is no longer needed. This is a simple but effective migration strategy for reducing IT costs and complexity.

Rollback Strategy in Case of Migration Failures

A comprehensive rollback strategy is an essential component of any migration plan. This strategy outlines the steps to take if the migration fails or significant issues arise during the cutover. The rollback plan ensures that the business can revert to the previous state quickly and efficiently, minimizing downtime and data loss. The effectiveness of a rollback strategy depends on thorough planning, comprehensive testing, and clear communication.

The rollback strategy should include detailed procedures for reverting to the pre-migration state. This involves restoring data, applications, and configurations to their original versions. The specific steps will vary depending on the migration method and the nature of the issues encountered. For instance, if a database migration fails, the rollback might involve restoring the database from a backup. If an application migration fails, the rollback might involve reverting to the previous version of the application.

A critical aspect of the rollback strategy is the identification of trigger points. These are specific conditions or events that will initiate the rollback process. Trigger points should be defined based on pre-defined performance metrics and thresholds. Examples of trigger points include: exceeding acceptable downtime, data corruption, critical application failures, and significant performance degradation.
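Trigger points are easiest to act on when they are written down as explicit thresholds and checked automatically. In the sketch below, the metric names and limits are hypothetical; real values would come from the RTO, RPO, and baseline performance figures established during planning.

```python
# Hypothetical thresholds; real values come from RTO, RPO and baseline metrics.
THRESHOLDS: dict[str, float] = {
    "downtime_minutes": 60,        # exceeding acceptable downtime
    "failed_integrity_checks": 0,  # any detected data corruption
    "critical_app_errors": 0,      # any critical application failure
    "p95_latency_ms": 800,         # significant performance degradation
}


def rollback_triggers(metrics: dict[str, float],
                      thresholds: dict[str, float] = THRESHOLDS) -> list[str]:
    """Return the names of metrics that exceed their rollback threshold.

    A missing metric is treated as within limits here; a stricter plan
    might treat missing data as a trigger in its own right.
    """
    return [name for name, limit in thresholds.items()
            if metrics.get(name, 0) > limit]


# Hypothetical readings taken during cutover.
observed = {
    "downtime_minutes": 42,
    "failed_integrity_checks": 0,
    "critical_app_errors": 1,
    "p95_latency_ms": 650,
}
fired = rollback_triggers(observed)
if fired:
    print("Initiate rollback:", ", ".join(fired))  # critical_app_errors
```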

The rollback process should be thoroughly tested before the migration. This involves simulating failure scenarios and verifying that the rollback procedures function correctly. Testing should be conducted in a non-production environment to minimize the risk to the live system, and repeating it regularly keeps the rollback plan effective and up to date. The plan should also include a clear communication plan so that all stakeholders are informed of the situation and the steps being taken; it should define the roles and responsibilities for communication, the channels to be used, and the frequency of updates.

Key elements of a rollback strategy include:

- Data Backups: Regular and verified backups of all data are crucial for a successful rollback. Backups should be stored in a secure and accessible location.

- Version Control: Version control systems should be used to manage application code and configurations. This allows for easy reversion to previous versions.

- Monitoring and Alerting: Implement robust monitoring and alerting systems to detect potential issues during the migration.

- Pre-Migration Testing: Thorough testing of the migration process in a non-production environment before the actual migration.

- Communication Plan: A clear communication plan to inform stakeholders of the rollback process.

Migration Project Timeline Template

A well-defined migration project timeline is crucial for managing the project effectively and ensuring that all tasks are completed on schedule. The timeline should include all the necessary tasks, their dependencies, and the estimated duration for each task. The timeline should be regularly reviewed and updated to reflect the progress of the project.

The following table provides a template for a migration project timeline. This template can be customized to fit the specific requirements of the migration project. The timeline should be detailed and should include specific milestones, deadlines, and resource allocations. The timeline should also include contingency plans to account for potential delays or issues.

| Task | Start Date | End Date | Duration | Dependencies | Resources | Status |
|---|---|---|---|---|---|---|
| Phase 1: Planning and Assessment | | | | | | |
| Assess Current Environment | [Start Date] | [End Date] | [Duration] | None | [Resources] | [Status] |
| Define Migration Scope | [Start Date] | [End Date] | [Duration] | Assess Current Environment | [Resources] | [Status] |
| Develop Migration Strategy | [Start Date] | [End Date] | [Duration] | Define Migration Scope | [Resources] | [Status] |
| Phase 2: Preparation | | | | | | |
| Set up New Environment | [Start Date] | [End Date] | [Duration] | Develop Migration Strategy | [Resources] | [Status] |
| Data Backup | [Start Date] | [End Date] | [Duration] | Set up New Environment | [Resources] | [Status] |
| Application Compatibility Testing | [Start Date] | [End Date] | [Duration] | Set up New Environment | [Resources] | [Status] |
| Phase 3: Migration Execution | | | | | | |
| Data Migration | [Start Date] | [End Date] | [Duration] | Data Backup, Application Compatibility Testing | [Resources] | [Status] |
| Application Migration | [Start Date] | [End Date] | [Duration] | Data Migration | [Resources] | [Status] |
| Testing and Validation | [Start Date] | [End Date] | [Duration] | Application Migration | [Resources] | [Status] |
| Phase 4: Post-Migration Activities | | | | | | |
| Go-Live | [Start Date] | [End Date] | [Duration] | Testing and Validation | [Resources] | [Status] |
| Post-Migration Monitoring | [Start Date] | [End Date] | [Duration] | Go-Live | [Resources] | [Status] |
| Performance Tuning | [Start Date] | [End Date] | [Duration] | Post-Migration Monitoring | [Resources] | [Status] |

This template provides a general framework, and each cell needs to be filled with specific data relevant to the migration project. For instance, under “Resources,” specify the personnel involved, such as “Database Administrator,” “Network Engineer,” or “Project Manager.” In the “Status” column, indicate the current progress, such as “Not Started,” “In Progress,” or “Completed.” The template is adaptable, allowing for the inclusion of additional tasks, dependencies, and resources based on the project’s complexity.
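The Dependencies column determines the earliest point at which each task can start. As a sketch, assuming hypothetical durations in working days and Python 3.9+ for the standard-library `graphlib` module, a forward pass over the template's tasks computes earliest start and finish days.

```python
from graphlib import TopologicalSorter

# Task -> (duration in working days, dependencies), mirroring the template rows.
# Durations are hypothetical placeholders.
tasks: dict[str, tuple[int, list[str]]] = {
    "Assess Current Environment": (10, []),
    "Define Migration Scope": (5, ["Assess Current Environment"]),
    "Develop Migration Strategy": (7, ["Define Migration Scope"]),
    "Set up New Environment": (10, ["Develop Migration Strategy"]),
    "Data Backup": (2, ["Set up New Environment"]),
    "Application Compatibility Testing": (8, ["Set up New Environment"]),
    "Data Migration": (4, ["Data Backup", "Application Compatibility Testing"]),
    "Application Migration": (5, ["Data Migration"]),
    "Testing and Validation": (6, ["Application Migration"]),
    "Go-Live": (1, ["Testing and Validation"]),
    "Post-Migration Monitoring": (10, ["Go-Live"]),
    "Performance Tuning": (5, ["Post-Migration Monitoring"]),
}


def schedule(tasks: dict[str, tuple[int, list[str]]]) -> dict[str, tuple[int, int]]:
    """Forward pass: earliest (start_day, finish_day) for each task, from day 0."""
    graph = {name: deps for name, (_, deps) in tasks.items()}
    times: dict[str, tuple[int, int]] = {}
    # static_order() yields each task only after all of its dependencies.
    for name in TopologicalSorter(graph).static_order():
        duration, deps = tasks[name]
        start = max((times[d][1] for d in deps), default=0)
        times[name] = (start, start + duration)
    return times


plan = schedule(tasks)
for name, (start, finish) in plan.items():
    print(f"{name:<35} day {start:>3} -> day {finish:>3}")
```

With these placeholder durations, Data Backup finishes on day 34 but Data Migration cannot start until compatibility testing ends on day 40. That slack could be used to take the backup closer to cutover so that it is as current as possible.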

Data Backup and Recovery Strategies

Data backup and recovery are fundamental components of a successful migration strategy, acting as a safety net against data loss or corruption during the transition process. A well-defined and rigorously tested backup and recovery plan is crucial for maintaining business continuity and minimizing downtime in the event of unforeseen issues. The implementation of these strategies must be meticulously planned and executed to ensure data integrity and availability throughout the migration lifecycle.

Importance of Data Backup Before Migration

Data backup is essential before any migration project to mitigate the risks associated with data loss, corruption, or accidental deletion. This process creates a duplicate copy of all critical data, allowing for restoration to a pre-migration state if any problems arise during or after the migration. This safeguard protects against various potential issues, including hardware failures, software glitches, human error, and unforeseen technical challenges.

Methods for Ensuring Data Integrity During the Backup Process

Ensuring data integrity during the backup process involves several critical steps and techniques. These methods help ensure that backed-up data is accurate and reliable, minimizing the risk of corrupted data during restoration.

- Choosing the Right Backup Method: Selecting the appropriate backup method is paramount. Full backups create a complete copy of all data, while incremental backups only copy data that has changed since the last backup (either full or incremental), and differential backups copy data that has changed since the last full backup. The optimal choice depends on the volume of data, the frequency of changes, and the recovery time objectives (RTO).

For instance, in a large-scale migration involving terabytes of data, a combination of full backups (performed less frequently) and incremental backups (performed more frequently) might be the most efficient approach.

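- Implementing Data Validation: Employing checksums or cryptographic hash functions (such as MD5 or SHA-256) to verify data integrity is crucial. These algorithms produce a fixed-size fingerprint of a file or data block, and any change to the data almost certainly changes the fingerprint. After the backup, the checksum of the backed-up data is compared with the checksum of the original data; matching values confirm that the copy is intact. MD5 is adequate for catching accidental corruption but is not collision-resistant, so SHA-256 is preferable where deliberate tampering is a concern.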

- Verifying Backup Consistency: For databases and other transactional systems, the backup must capture a consistent state. Techniques include transaction log backups and quiescing the database (temporarily pausing or flushing writes) so that no transaction is captured half-complete. For example, when migrating a large e-commerce database, a consistent backup ensures that every committed transaction is recorded and that the database can be restored to a state that accurately reflects pre-migration business operations.

- Utilizing Redundancy: Storing backups in multiple locations (on-site and off-site) protects against various failure scenarios. This strategy ensures that a copy of the data remains available even if the primary backup location is compromised due to a disaster or other unforeseen events. Cloud-based backup solutions offer a cost-effective and scalable approach to implementing redundancy.

- Encrypting Backup Data: Protecting sensitive data during the backup process requires encryption. Encryption transforms the data into an unreadable format, safeguarding it from unauthorized access. Robust encryption algorithms, such as AES-256, should be used.
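The checksum comparison described under Implementing Data Validation can be sketched with Python's standard `hashlib` module. The file names are hypothetical, and the sketch compares a single file pair; a real backup job would typically record one digest per file in a manifest and compare the manifests.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_backup(original: Path, backup: Path) -> bool:
    """True if the backup copy has the same SHA-256 digest as the original."""
    return sha256_of(original) == sha256_of(backup)


# Usage with hypothetical files in a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp, "orders.csv")
    source.write_text("id,total\n1,19.99\n2,5.00\n")
    backup_file = Path(tmp, "orders.csv.bak")
    shutil.copy2(source, backup_file)
    print(verify_backup(source, backup_file))  # True

    backup_file.write_text("id,total\n1,19.99\n2,5.01\n")  # simulate corruption
    print(verify_backup(source, backup_file))  # False
```

Reading in chunks keeps memory use flat for multi-gigabyte files, which matters when hashing large volumes of backup data.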

Role of a Disaster Recovery Plan in Business Continuity

A disaster recovery (DR) plan is an essential component of business continuity, specifically addressing the actions required to restore IT infrastructure and data in the event of a disruptive event. This plan outlines the procedures for recovering critical systems and data, minimizing downtime, and ensuring business operations can resume as quickly as possible. The DR plan is intrinsically linked to the migration process, as it dictates how data is restored and systems are brought back online if migration-related failures occur.

- Defining Recovery Time Objectives (RTO) and Recovery Point Objectives (RPO): The DR plan starts by defining the RTO, which is the maximum acceptable downtime, and the RPO, which is the maximum acceptable data loss. These objectives determine the backup frequency, the backup method, and the recovery procedures. For example, a financial institution might have a very low RTO and RPO for its core banking systems, requiring frequent backups and rapid recovery capabilities.

- Identifying Critical Systems and Data: The DR plan must identify all critical systems, applications, and data required for business operations. This includes prioritizing these assets based on their impact on business continuity. For instance, customer relationship management (CRM) data and financial transaction records are usually deemed critical.

- Documenting Recovery Procedures: The plan should include detailed, step-by-step procedures for restoring systems and data. These procedures should cover all aspects of the recovery process, from identifying the failure to restoring data from backups and verifying system functionality.

- Testing and Maintenance: Regularly testing the DR plan is crucial to ensure its effectiveness. These tests, such as failover drills and simulated disaster scenarios, validate the recovery procedures and identify any weaknesses. The plan should also be updated regularly to reflect changes in the IT infrastructure, applications, and business requirements.
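To make the link between RPO and backup frequency concrete, the following Python sketch checks a periodic backup schedule against both objectives. It assumes backups start at a fixed interval and each captures the data as of its start time. Under that assumption, a failure just before a backup finishes means the newest usable copy is the previous one, so up to one interval plus one backup duration of changes can be lost. `BackupPolicy` and `check_objectives` are hypothetical names used only for illustration. The RTO check simply compares the objective against a restore time measured during a recovery test.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class BackupPolicy:
    """Hypothetical description of a periodic backup schedule."""
    interval: timedelta          # time between the starts of consecutive backups
    backup_duration: timedelta   # time one backup takes to complete
    measured_restore: timedelta  # restore time observed in the last recovery test


def worst_case_data_loss(policy: BackupPolicy) -> timedelta:
    # A failure just before a backup completes leaves only the previous backup,
    # so up to one interval plus one backup duration of changes is lost.
    return policy.interval + policy.backup_duration


def check_objectives(policy: BackupPolicy, rpo: timedelta, rto: timedelta) -> dict:
    loss = worst_case_data_loss(policy)
    return {
        "worst_case_data_loss": loss,
        "meets_rpo": loss <= rpo,
        "meets_rto": policy.measured_restore <= rto,
    }


if __name__ == "__main__":
    nightly = BackupPolicy(
        interval=timedelta(hours=24),
        backup_duration=timedelta(hours=1),
        measured_restore=timedelta(hours=3),
    )
    # A 4-hour RPO cannot be met by nightly backups; a 4-hour RTO can.
    print(check_objectives(nightly, rpo=timedelta(hours=4), rto=timedelta(hours=4)))
```

With nightly backups, the worst-case loss in this model is 25 hours. This is why systems with a very low RPO, such as the core banking example above, typically need much more frequent backups or continuous replication.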

Procedure for Testing Data Recovery After a Migration

Testing data recovery after a migration is a critical step to validate the effectiveness of the backup and recovery plan and to ensure that the migrated data is fully accessible and functional. This process involves restoring data from backups in a test environment and verifying that all applications and systems function correctly.

- Creating a Test Environment: A test environment should be established that mirrors the production environment as closely as possible. This includes replicating the hardware, software, and network configurations.

- Selecting Data for Restoration: Choose a representative sample of data for restoration. This sample should include critical data, applications, and databases. The selection should be based on the RTO and RPO requirements.

- Restoring Data from Backups: Initiate the data restoration process using the chosen backup method. Follow the documented recovery procedures.

- Verifying Data Integrity: Once the data is restored, verify its integrity using checksums, data validation tools, and application-specific checks.

- Testing Application Functionality: Test the functionality of all applications and systems that rely on the restored data. This includes verifying that users can access the data, that all processes function correctly, and that all reports and dashboards are accurate.

- Documenting Results and Identifying Issues: Document all test results, including any issues or errors encountered. Identify the root causes of any problems and make the necessary adjustments to the backup and recovery procedures.

- Performing Regular Tests: Schedule regular data recovery tests to ensure that the recovery plan remains effective. The frequency of testing should be determined by the criticality of the data and the rate of change.
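For file-based backups, the integrity check in this procedure can be automated. This Python sketch assumes the restored data is available as a directory tree in the test environment. It builds a SHA-256 manifest of the source data and of the restored copy, then reports missing, unexpected, and mismatched files. The function names are illustrative. Database restores would additionally need database-level checks such as record counts.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks (1 MiB by default) so large files never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path, relative to root, to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): sha256_of_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def compare_manifests(expected: dict[str, str], restored: dict[str, str]) -> dict[str, list[str]]:
    """List files that are missing, unexpected, or whose contents differ."""
    common = expected.keys() & restored.keys()
    return {
        "missing": sorted(expected.keys() - restored.keys()),
        "unexpected": sorted(restored.keys() - expected.keys()),
        "mismatched": sorted(n for n in common if expected[n] != restored[n]),
    }


if __name__ == "__main__":
    # Two throwaway directories stand in for the source and the restored copy.
    with tempfile.TemporaryDirectory() as src, tempfile.TemporaryDirectory() as dst:
        Path(src, "orders.csv").write_text("id,total\n1,9.50\n")
        Path(dst, "orders.csv").write_text("id,total\n1,9.05\n")  # silently corrupted
        print(compare_manifests(build_manifest(Path(src)), build_manifest(Path(dst))))
```

In a real test, the source manifest would be captured when the backup is taken and stored alongside it, so the restored copy can be checked even after the source has changed.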

Testing and Validation Procedures

Thorough testing and validation are critical components of a successful migration, ensuring data integrity, application functionality, and minimal disruption to business operations. These procedures are implemented both before and after the migration to identify and rectify any potential issues. A robust testing strategy minimizes the risk of data loss, system downtime, and operational inefficiencies.

Types of Testing Before and After Migration

A comprehensive testing plan involves various testing types performed at different stages of the migration process. The specific types of testing conducted vary depending on the scope and complexity of the migration.

- Pre-Migration Testing: This phase focuses on preparing the environment and identifying potential issues before any data or applications are moved.

  - Environment Validation: Verifies the readiness of the target environment, including hardware, software, and network configurations. For example, checking for sufficient storage capacity, compatible operating systems, and network bandwidth.

  - Compatibility Testing: Assesses the compatibility of applications and data with the new environment. This includes verifying that applications function correctly on the target platform and that data formats are supported.

  - Performance Testing: Evaluates the performance of applications and infrastructure in the target environment under simulated workloads. This helps identify bottlenecks and ensure that the system can handle expected traffic levels. For example, using load testing tools to simulate user activity and measure response times.

  - Data Validation: Involves verifying the integrity and accuracy of data before migration. This includes checking data formats, data types, and data consistency. For example, recording baseline record counts and checksums on the source system so they can be compared with the target system after the migration.

- Post-Migration Testing: This phase confirms that the migration was successful and that the system is functioning as expected in the new environment.

  - Functional Testing: Verifies that all application functionalities are working correctly after the migration. This includes testing user interfaces, business processes, and data interactions. For example, testing the ability to create, read, update, and delete data within an application.

  - Integration Testing: Ensures that all components of the migrated system, including applications, databases, and other services, are working together correctly. This includes testing the interactions between different modules and systems. For example, testing the flow of data between an application and a database.

  - Performance Testing: Re-evaluates the performance of the migrated system under real-world workloads. This ensures that the system can handle the actual traffic and that performance has not degraded. For example, monitoring CPU usage, memory consumption, and network latency.

  - User Acceptance Testing (UAT): Involves end-users testing the migrated system to ensure that it meets their requirements and that they can perform their tasks effectively. This is crucial for ensuring user satisfaction and adoption. For example, users testing specific workflows and reporting any issues.

  - Disaster Recovery Testing: Verifies that the disaster recovery plan is effective and that the system can be restored to a functional state in case of a failure. This includes testing the backup and recovery procedures.
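Post-migration performance testing is most informative when it compares results against a baseline captured on the source system under the same workload. The Python sketch below compares median and 95th-percentile latency samples from both runs. It flags a regression when either statistic grows by more than a tolerance. The 10% default tolerance and the function names are assumptions, not a standard.

```python
import statistics


def p95(samples_ms: list[float]) -> float:
    """95th-percentile latency; requires at least two samples."""
    # quantiles(n=20) returns 19 cut points, the last of which is the 95th percentile.
    return statistics.quantiles(samples_ms, n=20)[-1]


def compare_latency(baseline_ms: list[float], migrated_ms: list[float],
                    tolerance: float = 0.10) -> dict[str, dict]:
    """Compare latency statistics measured before and after the migration."""
    report = {}
    for name, stat in (("median", statistics.median), ("p95", p95)):
        before, after = stat(baseline_ms), stat(migrated_ms)
        change = (after - before) / before  # relative change, e.g. 0.25 = 25% slower
        report[name] = {
            "before": before,
            "after": after,
            "change": change,
            "regressed": change > tolerance,
        }
    return report


if __name__ == "__main__":
    # Response times in milliseconds, e.g. exported from a load-testing tool.
    baseline = [120, 125, 130, 118, 122, 140, 135, 128, 119, 250]
    migrated = [121, 127, 129, 120, 124, 190, 185, 131, 122, 420]
    for stat, result in compare_latency(baseline, migrated).items():
        print(stat, result)
```

Checking the tail (p95) as well as the median matters because a migration can leave typical requests unchanged while making the slowest ones much worse.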

Validation Steps for Migrated Data

Data validation is a crucial step in the migration process to ensure the accuracy and integrity of the migrated data. It involves various techniques to verify that the data has been migrated correctly and that there are no inconsistencies or data loss.

- Data Comparison: Comparing the data in the source and target systems to identify any discrepancies. This can be done using various methods, including:

  - Record Counts: Verifying that the number of records in each table or dataset is the same in both the source and target systems.

  - Checksum Verification: Calculating checksums for data files or databases and comparing them between the source and target systems to confirm that the data has not been corrupted during the migration. Byte-level checksums only match when the data is stored in the same format on both sides. For migrations between different database engines, compute checksums over normalized row values instead.

  - Data Sampling: Selecting a sample of data records and comparing their values between the source and target systems. This can be done manually or using automated tools.

- Data Type Validation: Ensuring that the data types of the migrated data are correct and consistent with the target system’s requirements. For example, verifying that numeric fields are stored as numbers and that date fields are formatted correctly.

- Data Format Validation: Checking the format of the migrated data to ensure that it is consistent and compliant with the target system’s standards. This includes validating date formats, currency formats, and other data formatting rules.

- Data Consistency Checks: Verifying the consistency of data across different tables and datasets. This includes checking for referential integrity, data dependencies, and other data relationships. For example, ensuring that foreign key relationships are maintained after migration.

- Data Transformation Verification: If any data transformations were performed during the migration, verifying that the transformations were accurate and complete. This includes checking that data values have been converted correctly and that no data has been lost or corrupted.

- Data Auditing: Implementing data auditing mechanisms to track any changes to the migrated data and to identify any potential issues. This includes logging data modifications, access attempts, and other relevant events.
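Several of these checks can be scripted. The Python sketch below uses the standard-library `sqlite3` module, with in-memory databases standing in for the source and target. It compares record counts and a SHA-256 content fingerprint computed over rows sorted by primary key. It then runs SQLite's `PRAGMA foreign_key_check` on the target to find orphaned foreign keys. The sketch assumes both sides use the same engine and column order; with different engines, values would need to be normalized before hashing. The table names and helper functions are hypothetical.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> tuple[int, str]:
    """Return (row count, SHA-256 over all rows ordered by `key`)."""
    # Identifiers are interpolated into SQL, so they must come from a trusted list.
    rows = conn.execute(f'SELECT * FROM "{table}" ORDER BY "{key}"').fetchall()
    digest = hashlib.sha256()
    for row in rows:
        # repr() keeps None distinct from the string 'None' and 1 distinct from '1'.
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()


def validate_tables(source: sqlite3.Connection, target: sqlite3.Connection,
                    tables: dict[str, str]) -> dict:
    """Compare counts and content per table, then check target referential integrity."""
    report = {"tables": {}}
    for table, key in tables.items():
        src_count, src_hash = table_fingerprint(source, table, key)
        tgt_count, tgt_hash = table_fingerprint(target, table, key)
        report["tables"][table] = {
            "source_count": src_count,
            "target_count": tgt_count,
            "count_match": src_count == tgt_count,
            "content_match": src_hash == tgt_hash,
        }
    # Each row returned is (table, rowid, parent table, foreign key id) for an orphan.
    report["fk_violations"] = target.execute("PRAGMA foreign_key_check").fetchall()
    return report


def make_db(customers, orders) -> sqlite3.Connection:
    """Build a small in-memory database for the demo."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, "
                 "customer_id INTEGER REFERENCES customers(id), total REAL)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", customers)
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", orders)
    return conn


if __name__ == "__main__":
    source = make_db([(1, "Ada"), (2, "Lin")], [(10, 1, 9.5), (11, 2, 20.0)])
    # The target is missing customer 2, so order 11 becomes an orphan.
    target = make_db([(1, "Ada")], [(10, 1, 9.5), (11, 2, 20.0)])
    print(validate_tables(source, target, {"customers": "id", "orders": "id"}))
```

In this demo, the orders table passes the count and content checks, yet the foreign key check still reports an orphaned order. This shows why record counts alone are not sufficient validation.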

Automated Testing Tools versus Manual Testing

Both automated and manual testing play important roles in the testing and validation process. The choice between automated and manual testing depends on factors such as the complexity of the system, the available resources, and the desired level of test coverage.

| Feature | Automated Testing | Manual Testing |
|---|---|---|
| Execution Speed | Fast | Slow |
| Repeatability | High | Low |
| Cost | Higher upfront cost, lower long-term cost | Lower upfront cost, higher long-term cost |
| Accuracy | High (if tests are well-defined) | Variable (dependent on the tester) |
| Test Coverage | Can achieve high test coverage | May have limited test coverage |
| Human Effort | Lower (once tests are automated) | High |
| Suitable for | Regression testing, performance testing, repetitive tasks | Usability testing, exploratory testing, complex scenarios |

Automated Testing: Involves using specialized software tools to execute tests and validate the results. These tools can automate repetitive tasks, such as running test cases, verifying data, and generating reports. Automated testing is particularly effective for regression testing, performance testing, and other tasks that require repeated execution.

Manual Testing: Involves human testers executing test cases and evaluating the results. Manual testing is often used for exploratory testing, usability testing, and other tasks that require human judgment and creativity. Manual testing can be time-consuming and labor-intensive, but it can be essential for identifying issues that automated testing may miss.

Test Case Scenario for Verifying Application Functionality Post-Migration

A well-defined test case scenario is crucial for verifying application functionality after migration. This example illustrates a test case for verifying the functionality of an e-commerce application after migrating to a new platform.

- Test Case ID: TC001

- Test Case Name: Verify Successful Order Placement

- Objective: To verify that a user can successfully place an order after the migration.

- Pre-conditions:

  - The e-commerce application has been migrated to the new platform.

  - A user account with valid credentials exists.

  - The user has a valid payment method saved.

- Test Steps:

  - Log in to the e-commerce application using valid credentials.

  - Browse the product catalog and select a product.

  - Add the selected product to the shopping cart.

  - Navigate to the shopping cart and verify the product details and quantity.

  - Proceed to checkout.

  - Select the saved payment method.

  - Confirm the shipping address.

  - Review the order summary and click the “Place Order” button.

  - Verify that the order is successfully placed and a confirmation message is displayed.

  - Verify that an order confirmation email is received.

- Expected Results:

  - The user should be able to successfully place an order.

  - A confirmation message should be displayed after the order is placed.

  - An order confirmation email should be received.

  - The order details should be accurate.

- Actual Results: [The actual results of the test execution]

- Pass/Fail: [Based on the comparison of the actual results with the expected results]

- Notes: [Any additional notes or observations]

Communication and Stakeholder Management

Effective communication and proactive stakeholder management are critical components of a successful migration. Maintaining transparency and providing regular updates minimizes disruption, fosters trust, and ensures all parties are informed and aligned throughout the process. Failure to manage these aspects can lead to confusion, resistance, and ultimately, a less successful migration outcome.

Importance of Clear Communication

Clear and consistent communication is paramount during a business migration. It provides stakeholders with the information they need, reduces anxiety, and facilitates a smoother transition. Misinformation or a lack of communication can lead to significant problems, including operational delays, loss of productivity, and damage to stakeholder relationships.

- Reducing Uncertainty: Regular updates and clear explanations of the migration process, timelines, and potential impacts help to mitigate uncertainty and alleviate concerns among employees and other stakeholders.

- Promoting Collaboration: Open communication channels encourage collaboration and feedback, allowing stakeholders to contribute their insights and concerns, which can improve the migration strategy.

- Minimizing Disruption: Proactive communication helps to anticipate and address potential issues before they escalate, reducing disruptions to business operations.

- Building Trust: Transparency and honesty in communication build trust with stakeholders, fostering a positive environment throughout the migration.

Template for Informing Employees About the Migration Process

A well-structured communication plan ensures employees are informed and prepared. The following template provides a framework for informing employees about the migration process. This can be adapted based on the specific requirements of the business and the migration project.

Subject: Important Information About Our Upcoming [System/Application/Platform] Migration

Dear [Employee Name/Team],

We are writing to inform you about an upcoming migration of [System/Application/Platform] to [New System/Application/Platform]. This migration is an important step in [briefly explain the purpose and benefits, e.g., improving efficiency, enhancing security, etc.].

Key Details:

- What is changing: [Detailed explanation of what will be migrated, including specific components and functionalities.]

- Why we are migrating: [Explanation of the reasons for the migration, including benefits for employees and the company.]

- Timeline: [Detailed timeline of the migration, including key dates, phases, and estimated completion date.]

- Impact on you: [How the migration will affect employees, including any necessary training, downtime, or changes in workflow. Be specific about expected changes.]

- Actions required: [Any actions employees need to take before, during, or after the migration. This may include password resets, data backups, or training.]

- Support and resources: [Contact information for IT support, FAQs, training materials, and any other resources available to assist employees.]

We understand that this migration may raise questions or concerns. We are committed to providing you with the support and resources you need to navigate this transition successfully. We will be providing regular updates via [email, intranet, team meetings, etc.].

[Optional: Add a section on frequently asked questions (FAQs) and their answers.]

Thank you for your cooperation and understanding. We are confident that this migration will benefit us all.

Sincerely,

[Your Name/Department]

Methods for Managing Stakeholder Expectations

Managing stakeholder expectations involves proactively communicating, addressing concerns, and providing realistic timelines. This requires a combination of communication strategies and a commitment to transparency.

- Regular Updates: Provide regular updates on the migration’s progress, including milestones achieved, challenges encountered, and any changes to the timeline. Use multiple communication channels (email, meetings, newsletters) to ensure broad reach.

- Proactive Communication: Anticipate potential concerns and address them proactively. For example, if there is a risk of downtime, inform stakeholders well in advance and provide alternative solutions.

- Realistic Timelines: Develop realistic timelines and communicate them clearly. Avoid overpromising and under-delivering. Include buffer time for unexpected delays.

- Feedback Mechanisms: Establish mechanisms for stakeholders to provide feedback and ask questions. This could include dedicated email addresses, feedback forms, or regular Q&A sessions.

- Transparency: Be transparent about the migration’s progress, challenges, and successes. Acknowledge and address any issues openly and honestly.

- Escalation Paths: Define clear escalation paths for stakeholders to raise concerns or issues that need immediate attention.

Frequently Asked Questions (FAQ) Related to the Migration

Anticipating and addressing frequently asked questions (FAQs) is an effective way to manage stakeholder concerns and provide clear information. The following is a sample FAQ that can be adapted based on the specifics of the migration project.

Q: Why are we migrating?

A: [Provide a clear and concise explanation of the reasons for the migration, e.g., to improve performance, enhance security, or reduce costs.]

Q: What will change for me?

A: [Describe the specific changes that will affect employees, including any new systems, processes, or workflows.]

Q: When will the migration take place?

A: [Provide a detailed timeline of the migration, including key dates and phases. Be as specific as possible.]

Q: Will there be any downtime?

A: [Clearly state whether there will be downtime and, if so, how long it is expected to last. Provide details about how downtime will be managed and minimized.]

Q: How will I be trained on the new system?

A: [Describe the training plan, including the methods of training (e.g., online tutorials, in-person sessions) and the schedule.]

Q: What support will be available during and after the migration?

A: [Provide information about the support resources available, such as IT help desks, FAQs, and documentation.]

Q: What if I encounter problems during the migration?

A: [Explain the process for reporting and resolving issues, including contact information for IT support and escalation procedures.]

Q: How will my data be protected during the migration?

A: [Explain the data security measures in place, including data backup, encryption, and access controls.]

Q: Where can I find more information?

A: [Provide links to relevant resources, such as the project website, documentation, and contact information.]

Monitoring and Performance Management

Effective monitoring and performance management are critical throughout the migration process and post-migration. This ensures the system functions optimally and identifies potential issues proactively. Implementing robust monitoring allows for continuous improvement and helps to maintain business continuity.

Identifying Key Performance Indicators (KPIs) to Monitor During Migration

Establishing appropriate KPIs is essential for measuring the success of the migration and identifying areas needing attention. These metrics provide quantifiable data to assess performance against defined goals.

- Downtime: Measure the duration of any service interruptions. This KPI directly reflects the impact on business operations. For example, if a migration is scheduled for a weekend maintenance window, any downtime should fall entirely within that window, so that downtime during business hours is ideally zero.

- Data Transfer Rate: Track the speed at which data is being transferred. This KPI helps to identify bottlenecks and optimize the transfer process. Monitoring the transfer rate is crucial, especially during large-scale migrations. If the transfer rate is consistently low, it may indicate network issues or inefficient transfer methods.

- Error Rate: Monitor the number of errors encountered during data migration. A high error rate may signal issues with data integrity or compatibility. Analyzing the types of errors (e.g., data corruption, schema mismatches) can guide corrective actions.

- Resource Utilization: Assess CPU usage, memory consumption, and network bandwidth utilization. This KPI is crucial for ensuring that the migration process does not overload existing infrastructure. Monitoring resource utilization helps prevent performance degradation and ensures adequate capacity for the migration.

- Transaction Throughput: Measure the number of transactions processed per unit of time. This KPI provides insights into the impact of the migration on application performance. Monitoring transaction throughput is especially critical for online transaction processing (OLTP) systems. A drop in throughput can indicate performance bottlenecks.

- Migration Completion Rate: Track the percentage of data or applications migrated successfully. This fundamental KPI gives an at-a-glance view of the overall progress of the migration.
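Most of these KPIs are simple ratios over counters that a migration job can record as it runs. The Python sketch below derives the data transfer rate, error rate, and completion rate from a hypothetical set of counters. The field names and the use of decimal megabytes are assumptions. A real migration tool would supply these values from its own job logs or metrics.

```python
from dataclasses import dataclass


@dataclass
class MigrationCounters:
    """Hypothetical raw counters recorded by a migration job."""
    bytes_transferred: int
    elapsed_seconds: float
    records_attempted: int
    records_failed: int
    records_in_scope: int  # total records the migration must move


def compute_kpis(c: MigrationCounters) -> dict[str, float]:
    succeeded = c.records_attempted - c.records_failed
    return {
        # Average data transfer rate in MB/s (1 MB = 10**6 bytes).
        "transfer_rate_mb_s": (c.bytes_transferred / 1e6 / c.elapsed_seconds
                               if c.elapsed_seconds > 0 else 0.0),
        # Fraction of attempted records that failed.
        "error_rate": c.records_failed / c.records_attempted if c.records_attempted else 0.0,
        # Fraction of all in-scope records migrated successfully so far.
        "completion_rate": succeeded / c.records_in_scope if c.records_in_scope else 0.0,
    }


if __name__ == "__main__":
    snapshot = MigrationCounters(
        bytes_transferred=500_000_000,
        elapsed_seconds=100.0,
        records_attempted=1_000,
        records_failed=10,
        records_in_scope=4_000,
    )
    print(compute_kpis(snapshot))
```

With the sample numbers, the job is moving 5 MB/s with a 1% error rate and has successfully migrated 24.75% of the in-scope records. Sampling these values at regular intervals produces the trends that a monitoring dashboard can plot.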

Methods for Tracking System Performance After the Migration

Following the migration, continuous monitoring is vital to ensure the system functions as expected and to identify any performance degradations. Various methods can be employed to effectively track system performance.

- Application Performance Monitoring (APM) Tools: Utilize APM tools to track application performance metrics such as response times, error rates, and transaction throughput. These tools often provide detailed insights into the application’s behavior. Examples include Dynatrace, New Relic, and AppDynamics.

- Infrastructure Monitoring Tools: Employ infrastructure monitoring tools to monitor server resources (CPU, memory, disk I/O), network performance, and database performance. These tools provide a comprehensive view of the infrastructure’s health. Tools like Prometheus with Grafana, Nagios, and Zabbix are commonly used.

- User Experience Monitoring: Implement user experience monitoring to track website or application response times and availability from the user’s perspective. This helps to identify performance issues that affect end-users. Tools like Pingdom and SolarWinds Web Performance Monitor can be used.

- Synthetic Monitoring: Use synthetic monitoring to simulate user interactions and proactively identify performance issues. This involves simulating user actions (e.g., logging in, submitting forms) and measuring the time it takes to complete them.

- Real User Monitoring (RUM): Implement RUM to capture performance data from real user interactions. This provides insights into how users experience the application.

- Database Monitoring: Monitor database performance metrics such as query response times, database connections, and disk I/O. Database monitoring tools can help to identify and resolve database-related performance issues.

- Log Analysis: Analyze system logs for errors, warnings, and performance-related events. Log analysis tools help to identify root causes of performance problems.
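As a small illustration of log analysis, the Python sketch below tallies log lines by severity and counts errors per component. This quickly shows where post-migration problems are concentrated. The log format it parses (date, time, level, component, message) is an assumption. Real logs would need a pattern matching their actual format, and dedicated log-analysis tools perform this kind of aggregation at scale.

```python
import re
from collections import Counter

# Assumed line format: "<date> <time> <LEVEL> <component>: <message>"
LOG_LINE = re.compile(
    r"^\S+ \S+ (?P<level>[A-Z]+) (?P<component>[\w.-]+): (?P<message>.*)$"
)


def summarize_log(lines) -> dict:
    """Count lines per level and ERROR/CRITICAL lines per component."""
    levels: Counter = Counter()
    errors_by_component: Counter = Counter()
    unparsed = 0
    for line in lines:
        match = LOG_LINE.match(line.rstrip("\n"))
        if match is None:
            unparsed += 1  # lines in an unexpected format are counted, not silently dropped
            continue
        levels[match["level"]] += 1
        if match["level"] in ("ERROR", "CRITICAL"):
            errors_by_component[match["component"]] += 1
    return {"levels": levels, "errors_by_component": errors_by_component, "unparsed": unparsed}


if __name__ == "__main__":
    sample = [
        "2024-05-01 10:00:00 INFO db: connected to new cluster",
        "2024-05-01 10:00:01 ERROR db: query timeout after 30s",
        "2024-05-01 10:00:02 WARNING api: slow response (2.1s)",
        "2024-05-01 10:00:03 ERROR api: upstream returned 502",
        "2024-05-01 10:00:04 ERROR db: deadlock detected",
    ]
    summary = summarize_log(sample)
    print(summary["levels"])
    print(summary["errors_by_component"].most_common(1))  # [('db', 2)]
```

Because `summarize_log` accepts any iterable of lines, an open file object can be passed to it directly. A high `unparsed` count is itself a useful signal that the log format changed during the migration.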

Tools for Real-Time Monitoring of Network Traffic and Server Loads

Real-time monitoring tools provide immediate visibility into network traffic and server loads, enabling proactive identification and resolution of performance issues. These tools collect data at high frequencies, allowing for rapid response to changes in system behavior.

- Network Monitoring Tools:

  - Wireshark: A network protocol analyzer used to capture and inspect network traffic in real time. It helps diagnose bottlenecks and security issues at the packet level.

  - Nagios: An open-source monitoring system that can monitor network devices, servers, and applications. It provides real-time alerts and performance graphs.

  - SolarWinds Network Performance Monitor: A commercial network monitoring tool that provides real-time performance data, alerts, and visualizations.

  - PRTG Network Monitor: A comprehensive network monitoring solution that monitors network traffic, server loads, and application performance.

- Server Monitoring Tools:

  - Prometheus: An open-source monitoring system that collects metrics from various sources and provides real-time monitoring and alerting capabilities. Prometheus is frequently paired with Grafana for data visualization.

  - Grafana: A data visualization and monitoring tool that can be used to create dashboards and visualize real-time data from Prometheus and other sources.

  - Zabbix: An open-source monitoring solution that monitors servers, network devices, and applications. It provides real-time data and alerting capabilities.

  - Munin: A network and system monitoring tool that graphs server performance metrics over time. It polls at fixed intervals (typically every five minutes), so it is better suited to trend analysis than to second-by-second monitoring.

Creating a Dashboard to Visualize Migration Progress and System Performance

A well-designed dashboard is essential for visualizing migration progress and system performance in a concise and easily understandable format. This dashboard provides a central location for monitoring key metrics and identifying potential issues.

- Dashboard Components:

  - Progress Bars: Display the percentage of data migrated or applications successfully migrated. This provides a clear visual representation of the migration’s status.

  - Graphs and Charts: Visualize performance metrics such as CPU usage, memory consumption, and network bandwidth utilization. These provide insights into performance trends over time.

  - Alerts and Notifications: Display alerts for critical events, such as high error rates or system outages. These alerts enable quick responses to critical issues.

  - Key Performance Indicators (KPIs): Display the most important KPIs in an easily readable format. This allows for a quick assessment of the system’s health.

  - Real-Time Data: Show live data updates for network traffic, server loads, and other key metrics. This ensures the dashboard reflects the current system state.

- Dashboard Design Principles:

  - Clear and Concise Information: The dashboard should present information in a clear and easy-to-understand format.

  - Customization: Allow for customization to display the most relevant metrics for the specific migration.

  - User-Friendly Interface: The dashboard should be easy to navigate and use.

  - Real-Time Updates: The dashboard should update data in real time to provide up-to-date information.

- Dashboard Examples:

  - Prometheus and Grafana: A popular combination for creating real-time dashboards. Prometheus collects metrics, and Grafana visualizes the data.

  - New Relic: Provides comprehensive dashboards for monitoring application and infrastructure performance.

  - Dynatrace: Offers AI-powered dashboards for real-time monitoring and problem detection.
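With the Prometheus and Grafana combination, migration progress must first be exposed as metrics that Prometheus can scrape. The Python sketch below uses only the standard library to serve hypothetical progress counters on a `/metrics` endpoint in the Prometheus text exposition format. The metric names, the `table` label, and port 8000 are assumptions. In practice, the official `prometheus_client` library would usually handle the formatting.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-process progress state, updated by the migration job.
PROGRESS = {"customers": {"migrated": 0, "total": 0, "errors": 0}}

METRICS = [
    # (metric name, type, help text, field in PROGRESS)
    ("migration_records_migrated_total", "counter", "Records migrated so far.", "migrated"),
    ("migration_records_in_scope", "gauge", "Records in scope for migration.", "total"),
    ("migration_errors_total", "counter", "Records that failed to migrate.", "errors"),
]


def render_metrics(progress: dict) -> str:
    """Render progress counters in the Prometheus text exposition format."""
    lines = []
    for name, mtype, help_text, field in METRICS:
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} {mtype}")
        # Label values are assumed to be plain table names needing no escaping.
        for table, values in sorted(progress.items()):
            lines.append(f'{name}{{table="{table}"}} {values[field]}')
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics(PROGRESS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


if __name__ == "__main__":
    # Prometheus would be configured to scrape http://<host>:8000/metrics.
    HTTPServer(("0.0.0.0", 8000), MetricsHandler).serve_forever()
```

A Grafana progress panel could then compute the overall completion ratio with a PromQL expression such as `sum(migration_records_migrated_total) / sum(migration_records_in_scope)`, and alert when `migration_errors_total` rises.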

Security Considerations During Migration

Data migration, while essential for modernization and scalability, introduces significant security vulnerabilities. The process involves moving sensitive data across various environments, increasing the attack surface and exposing information to potential threats. A comprehensive understanding of these risks and the implementation of robust security measures are paramount to a successful and secure migration.

Security Risks Associated with Data Migration

Data migration presents numerous security risks that must be addressed proactively. These risks span every stage of the migration process and can compromise data confidentiality, integrity, and availability.

- Data Breaches During Transit: The transmission of data between source and destination environments creates opportunities for interception and unauthorized access, especially when insecure communication channels are used or data is not properly encrypted. For instance, Verizon’s annual Data Breach Investigations Report (DBIR) consistently highlights that a significant percentage of data breaches involve stolen credentials or compromised systems, which could be exploited during data migration.

- Insider Threats: Individuals with privileged access to data, whether employees or third-party vendors involved in the migration, pose a substantial risk. Malicious insiders can compromise data security intentionally, and negligent ones can do so inadvertently. According to a report by the Ponemon Institute, insider threats are a leading cause of data breaches, emphasizing the importance of rigorous access controls and employee background checks.

- Misconfiguration and Human Error: Errors during the migration process, such as incorrect configuration of security settings or failure to apply security patches, can leave systems vulnerable. Human error is a persistent factor in data breaches. For example, a misconfigured cloud storage bucket can lead to a massive data leak, as seen in several high-profile incidents reported by security organizations such as the SANS Institute.

- Malware Infections: Migrating data through infected systems or networks can introduce malware into the new environment, leading to data corruption, system compromise, and operational disruption. This includes risks associated with phishing attacks targeting migration teams or the exploitation of vulnerabilities in migration tools. The rise of ransomware attacks targeting data migration processes underscores the need for stringent security measures.

- Data Loss and Corruption: Improperly handled data can be lost or corrupted during migration. Maintaining data integrity, so that data remains accurate and consistent from source to target, is therefore essential; failure to do so can have significant legal and financial implications.

- Compliance Violations: Failure to adhere to regulatory requirements, such as GDPR, HIPAA, or PCI DSS, during data migration can result in substantial fines and reputational damage. For example, a healthcare provider migrating patient data without proper encryption and access controls would violate HIPAA regulations.

Security Best Practices for Protecting Data in Transit

Protecting data in transit is a critical aspect of a secure migration strategy. Employing robust encryption, secure communication protocols, and access control mechanisms is essential to mitigate risks during data transfer.

- Encryption: Use strong transport encryption, such as TLS 1.2 or later, to protect data in transit; the older SSL protocols are deprecated and should be disabled. This ensures that intercepted data is unreadable to unauthorized parties. Use industry-standard encryption algorithms such as AES-256. For instance, when migrating data to a cloud environment, ensure that data is encrypted both in transit and at rest, with encryption keys managed securely.

- Secure Communication Channels: Use secure protocols, such as SFTP, SCP, or HTTPS, to transfer data, and avoid unencrypted protocols like FTP. Keep the software that implements these protocols patched against known vulnerabilities. For example, using SFTP instead of FTP prevents eavesdropping on data transfers.

- Network Segmentation: Segment the network to isolate the migration process from the rest of the production environment. This limits the impact of any potential security breaches. Implement a demilitarized zone (DMZ) for staging data before migration.

- Access Controls: Implement strict access controls and the principle of least privilege, limiting access to data only to authorized personnel. Use multi-factor authentication (MFA) to verify user identities. Regularly review and audit access permissions.

- Data Integrity Checks: Compute checksums or cryptographic hashes of the data before and after transfer, and compare them to confirm that the data arrived intact. A plain checksum detects accidental corruption, but detecting deliberate tampering requires a keyed hash (such as HMAC), a digital signature, or delivery of the expected hashes over a separate trusted channel.

- Monitoring and Logging: Implement comprehensive monitoring and logging to track data transfer activities. Monitor for suspicious activity, such as unauthorized access attempts or unusual data transfer patterns. Regularly review security logs for anomalies.

- Data Masking and Redaction: Mask or redact sensitive data in any copy that does not need the real values, such as test, staging, or analytics datasets, before it is migrated. This is particularly important for data containing personally identifiable information (PII) or protected health information (PHI).
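Two of these practices can be sketched with Python's standard library. The first is a client-side TLS context that verifies server certificates and refuses protocol versions older than TLS 1.2. The second is an HMAC-SHA256 tag that lets the receiver detect tampering with a migrated batch. The sketch assumes the HMAC key is shared between sender and receiver out of band, for example through a secrets manager. The function names are illustrative.

```python
import hashlib
import hmac
import secrets
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Client-side TLS context that verifies certificates and refuses TLS < 1.2."""
    ctx = ssl.create_default_context()  # enables certificate and hostname verification
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def sign_payload(key: bytes, payload: bytes) -> str:
    """HMAC-SHA256 tag computed by the sender and shipped alongside the payload."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_payload(key: bytes, payload: bytes, tag: str) -> bool:
    """Receiver recomputes the tag; compare_digest avoids timing side channels."""
    return hmac.compare_digest(sign_payload(key, payload), tag)


if __name__ == "__main__":
    ctx = strict_tls_context()
    print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)

    key = secrets.token_bytes(32)  # in practice, fetched from a key vault
    batch = b"id,total\n1,9.50\n"
    tag = sign_payload(key, batch)
    print(verify_payload(key, batch, tag))                              # True
    print(verify_payload(key, batch.replace(b"9.50", b"0.50"), tag))    # False
```

The context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=ctx)`. Unlike a plain checksum sent with the data, the HMAC tag cannot be recomputed by an attacker who modifies the batch but does not hold the key.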

Securing the New Environment Post-Migration

Securing the new environment after migration is crucial to ensure the ongoing protection of data. This involves implementing robust security controls, maintaining regular security assessments, and adopting a proactive security posture.

- Security Hardening: Harden the new environment by configuring security settings according to industry best practices and security benchmarks. This includes disabling unnecessary services, applying security patches, and configuring firewalls.

- Access Control Management: Implement a robust access control system to manage user access and permissions. Regularly review and update access permissions to reflect changes in user roles and responsibilities. Enforce the principle of least privilege.

- Network Security: Configure network security controls, such as firewalls, intrusion detection/prevention systems (IDS/IPS), and web application firewalls (WAFs), to protect the new environment from external threats. Segment the network to isolate critical systems and data.

- Data Encryption at Rest: Encrypt sensitive data at rest using strong, well-vetted algorithms such as AES-256. This protects the data if storage media or backups are lost, stolen, or accessed outside the application. Implement key management practices so that encryption keys are stored and rotated separately from the data they protect.

- Vulnerability Management: Implement a vulnerability management program to regularly scan for vulnerabilities and apply security patches promptly. This includes regular vulnerability assessments and penetration testing.

- Security Monitoring and Incident Response: Implement a security monitoring system to detect and respond to security incidents. Develop an incident response plan to handle security breaches effectively. Regularly review security logs and alerts.

- Data Loss Prevention (DLP): Implement DLP solutions to prevent sensitive data from leaving the environment without authorization. This includes monitoring data movement and enforcing data security policies.

- Regular Security Audits: Conduct regular security audits to assess the effectiveness of security controls and identify areas for improvement. Audits should be performed by qualified security professionals.
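Part of the periodic access review described above can be automated. The sketch below assumes a hypothetical mapping of roles to the permissions each role genuinely needs (`ROLE_BASELINES`) and flags any grant that exceeds a user's baseline. The role and permission names are invented for illustration; in practice the grants would be exported from the identity provider or the database catalog.

```python
from typing import Dict, Set

# Hypothetical baselines: the minimum permissions each role needs to do its job.
ROLE_BASELINES: Dict[str, Set[str]] = {
    "analyst": {"db:read"},
    "dba": {"db:read", "db:write", "db:admin"},
    "support": {"ticket:read", "ticket:write"},
}


def excess_permissions(
    grants: Dict[str, Set[str]], roles: Dict[str, str]
) -> Dict[str, Set[str]]:
    """Return, per user, every granted permission not covered by their role baseline."""
    findings: Dict[str, Set[str]] = {}
    for user, granted in grants.items():
        # A user with no recorded role gets an empty baseline, so all grants are flagged.
        baseline = ROLE_BASELINES.get(roles.get(user, ""), set())
        extra = granted - baseline
        if extra:
            findings[user] = extra
    return findings


grants = {
    "alice": {"db:read", "db:write"},
    "bob": {"db:read"},
    "carol": {"ticket:read"},
}
roles = {"alice": "analyst", "bob": "analyst"}
print(excess_permissions(grants, roles))  # {'alice': {'db:write'}, 'carol': {'ticket:read'}}
```

Treating a missing role as an empty baseline is deliberate: accounts that no longer map to any role are exactly the ones a least-privilege review should surface.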

Security Audit Checklist for Verifying Data Protection Measures

A comprehensive security audit checklist helps verify the effectiveness of data protection measures throughout the migration process. This checklist should cover various aspects of security, from data in transit to the post-migration environment.

| Area | Checklist Item | Verification Method | Status |
|---|---|---|---|
| Data in Transit | Data in transit is encrypted using TLS (version 1.2 or later) or an equivalent encrypted protocol such as SSH. | Verify the use of HTTPS, SFTP, or other secure protocols. Check the certificate configuration. | |
| | Secure communication channels (e.g., SFTP, SCP) are used for data transfer. | Review network traffic analysis and configuration files. | |
| | Checksums or cryptographic hashes are used to confirm that data arrived unaltered. | Compare checksums of data at source and destination. Review data validation logs. | |
| Access Control | Access controls are implemented and enforced using the principle of least privilege. | Review user access permissions and group memberships. | |
| | Multi-factor authentication (MFA) is enabled for all privileged accounts. | Verify MFA configuration for critical accounts. | |
| | Access logs are reviewed regularly to detect unauthorized access attempts. | Review access logs and security event logs. | |
| Environment Security | Network segmentation is implemented to isolate the migration process and critical systems. | Review network diagrams and firewall rules. | |
| | Firewalls and intrusion detection/prevention systems (IDS/IPS) are configured and operational. | Review firewall and IDS/IPS configuration and logs. | |
| | Security patches are applied regularly to all systems and applications. | Verify patch management processes and patch levels. | |
| Data at Rest | Data encryption at rest is implemented for sensitive data. | Verify encryption configuration for storage devices and databases. | |
| | Key management practices are in place to securely manage encryption keys. | Review key management policies and procedures. | |
| Monitoring and Logging | Security monitoring systems are in place to detect and respond to security incidents. | Review security monitoring configuration and alerts. | |
| | Comprehensive logging is enabled to track data transfer activities. | Review logging configurations and log retention policies. | |
| Compliance | Data migration processes comply with relevant regulatory requirements (e.g., GDPR, HIPAA, PCI DSS). | Review data protection policies and procedures. | |
| | Regular security audits are conducted to assess the effectiveness of security controls. | Review audit reports and findings. | |

Post-Migration Support and Maintenance

Following a successful migration, the operational phase demands a robust support structure and proactive maintenance strategy to ensure system stability, optimal performance, and user satisfaction. This phase is crucial for realizing the benefits of the migration and mitigating potential disruptions.

Importance of Post-Migration Support

Post-migration support is essential for several key reasons, directly impacting the long-term success and return on investment of the migration project.

- User Adoption and Satisfaction: Readily available support helps users adapt to the new environment, reducing frustration and promoting a positive user experience. Responsive support is also a major factor in whether users actually adopt the new system.

- Issue Resolution: Promptly addressing user issues, technical glitches, and performance bottlenecks minimizes downtime and prevents problems from escalating, thus safeguarding business operations.

- System Optimization: Ongoing support allows for continuous monitoring and fine-tuning of the system, ensuring optimal performance and resource utilization. This includes identifying and resolving performance bottlenecks.

- Security and Compliance: Regular security updates, vulnerability patching, and adherence to compliance requirements are vital to maintain a secure and compliant environment post-migration.

- Knowledge Transfer and Training: Support services often facilitate knowledge transfer and provide additional training, ensuring that users and administrators are well-equipped to utilize and manage the migrated system effectively.

Procedure for Handling User Issues and Support Requests

Establishing a well-defined procedure for managing user issues and support requests is crucial for providing efficient and effective post-migration support. This procedure should encompass multiple channels for issue reporting and a tiered support structure.

- Issue Reporting Channels: Multiple channels should be available for users to report issues:

  - Help Desk: A centralized help desk, often using ticketing systems, provides a primary point of contact for users.

  - Email: A dedicated support email address for reporting issues.

  - Phone: A phone number for immediate assistance.

  - Self-Service Portal: A knowledge base or FAQ section for users to find solutions to common issues.

- Triage and Prioritization: Upon receiving a support request, the support team should triage and prioritize the issue based on its impact and urgency. Issues are typically categorized by severity:

  - Critical: System outage or severe performance degradation impacting a large number of users.

  - High: Significant impact on a user or a group of users, preventing them from performing essential tasks.

  - Medium: Moderate impact, causing inconvenience or delays.

  - Low: Minor issues, such as cosmetic errors or usability suggestions.

- Issue Resolution: Issues are addressed in priority order and escalated through support tiers as needed:

  - Level 1 Support: Handles basic troubleshooting and user assistance, resolving common issues.

  - Level 2 Support: Investigates more complex issues that Level 1 support cannot resolve.

  - Level 3 Support: Handles issues that require specialized technical expertise, such as product engineers or vendor support, for advanced troubleshooting and resolution.

- Documentation and Knowledge Base: Maintaining a comprehensive knowledge base is crucial for efficient support. This includes:

  - Creating documentation: documenting solutions, troubleshooting steps, and frequently asked questions.

  - Updating the knowledge base: regularly updating the knowledge base with new information and solutions.

- Feedback and Continuous Improvement: Gathering feedback from users and analyzing support data to identify areas for improvement.
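The triage and escalation flow above can be sketched as a priority queue. This is a minimal illustration, not the API of any ticketing product: the classification rules follow the severity definitions above, while the `LARGE_USER_COUNT` cutoff for "a large number of users" and all function names are hypothetical.

```python
import heapq
import itertools
from enum import IntEnum
from typing import List, Tuple


class Severity(IntEnum):
    # Lower values sort first, so the queue pops critical issues before others.
    CRITICAL = 0
    HIGH = 1
    MEDIUM = 2
    LOW = 3


# Hypothetical threshold for "a large number of users"; tune per organization.
LARGE_USER_COUNT = 50

TIERS = ("Level 1", "Level 2", "Level 3")


def classify(
    users_affected: int, outage: bool, blocks_essential_task: bool, cosmetic_only: bool
) -> Severity:
    """Map a report's impact to a severity.

    `outage` covers both a full outage and severe performance degradation.
    """
    if outage and users_affected >= LARGE_USER_COUNT:
        return Severity.CRITICAL
    if outage or blocks_essential_task:
        return Severity.HIGH
    if cosmetic_only:
        return Severity.LOW
    return Severity.MEDIUM


def escalate(tier: str) -> str:
    """Move an unresolved ticket to the next support tier (Level 3 is the last)."""
    index = TIERS.index(tier)
    return TIERS[min(index + 1, len(TIERS) - 1)]


class TriageQueue:
    """Serves tickets by severity, first come first served within a severity."""

    def __init__(self) -> None:
        self._heap: List[Tuple[Severity, int, str]] = []
        self._arrival = itertools.count()  # tie-breaker preserving arrival order

    def submit(self, ticket_id: str, severity: Severity) -> None:
        heapq.heappush(self._heap, (severity, next(self._arrival), ticket_id))

    def next_ticket(self) -> Tuple[str, Severity]:
        severity, _, ticket_id = heapq.heappop(self._heap)
        return ticket_id, severity
```

The arrival counter matters: without it, two tickets of equal severity would be ordered by ticket ID rather than by when they were reported.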

Need for Ongoing System Maintenance

Ongoing system maintenance is essential for maintaining the stability, security, and performance of the migrated system. Neglecting maintenance can lead to a gradual decline in performance, increased security vulnerabilities, and ultimately, system failure.

- Performance Monitoring: Continuously monitoring system performance metrics, such as CPU usage, memory utilization, disk I/O, and network latency, is crucial. This allows for the early identification of performance bottlenecks.

- Capacity Planning: Regularly reviewing resource utilization and projecting future capacity needs is necessary to prevent performance degradation due to resource exhaustion.

- Security Patching: Applying security patches and updates promptly to address vulnerabilities and protect against security threats is a crucial aspect of maintenance.

- Data Backup and Recovery: Ensuring that data backup and recovery procedures are regularly tested and maintained is essential for disaster recovery and data protection.

- Configuration Management: Maintaining and documenting system configurations, including hardware and software settings, is important for consistency and troubleshooting.

- Software Updates: Regularly updating software components, including operating systems, applications, and databases, to ensure optimal performance and compatibility.
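Capacity planning often starts with a simple trend projection. As a rough sketch, assuming one disk-usage sample per day and roughly linear growth, a least-squares line can estimate how many days remain before a volume fills. Workloads with seasonal or bursty growth need a better model, and the function name is hypothetical.

```python
from typing import Optional, Sequence


def days_until_full(usage_gb: Sequence[float], capacity_gb: float) -> Optional[float]:
    """Estimate days from the last sample until usage reaches capacity.

    Fits a least-squares line to one sample per day and extrapolates it.
    Returns None when the trend is flat or shrinking (no projected exhaustion).
    """
    n = len(usage_gb)
    if n < 2:
        raise ValueError("need at least two daily samples")
    xs = range(n)  # day index of each sample
    mean_x = (n - 1) / 2
    mean_y = sum(usage_gb) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, usage_gb))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx  # growth in GB per day
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    # Day index where the fitted line crosses capacity, relative to the last sample.
    day_full = (capacity_gb - intercept) / slope
    return max(0.0, day_full - (n - 1))
```

For example, samples of 100, 110, 120, and 130 GB on a 200 GB volume give a slope of 10 GB per day and a projection of 7 days remaining.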

Schedule for Regular System Updates and Patching

Implementing a structured schedule for system updates and patching is crucial for maintaining system security and stability. This schedule should be based on a risk assessment, the criticality of the systems, and vendor recommendations. The schedule should incorporate both regular and emergency patching procedures.

- Regular Patching Schedule:

  - Frequency: Monthly patching is generally recommended, often coinciding with vendor-released patch cycles.

  - Testing: Patches should be tested in a non-production environment before deployment to production systems to minimize the risk of disruption.

  - Implementation: Patching should be implemented during off-peak hours to minimize the impact on users.

- Emergency Patching Schedule:

  - Response Time: For critical security vulnerabilities, a rapid response is essential. The response time should be based on the severity of the vulnerability and the potential impact on the business.

  - Prioritization: Critical vulnerabilities should be addressed immediately, while other vulnerabilities can be addressed as part of the regular patching schedule.

- Update and Patching Procedure:

  - Notification: Communicate patch deployment schedules and any potential service disruptions to users.

  - Backup: Create system backups before patching to facilitate rollback if necessary.

  - Monitoring: Monitor the system post-patching to ensure stability and performance.

- Examples:

  - Microsoft Windows: Microsoft typically releases security updates on the second Tuesday of each month (Patch Tuesday).

  - Linux Distributions: Patching schedules vary depending on the distribution, with security updates often released on a continuous basis.
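The Patch Tuesday rule above is easy to compute, which helps when publishing maintenance windows in advance. The sketch below derives the second Tuesday of a month and, as an assumption, treats that date as the organization's regular window; many teams actually deploy a few days later, after testing. `EMERGENCY_WINDOW_DAYS`, the deadline for critical vulnerabilities, is a hypothetical value to be set by policy.

```python
from datetime import date, timedelta

TUESDAY = 1  # date.weekday() numbers Monday as 0

# Hypothetical policy: critical vulnerabilities must be patched within this many days.
EMERGENCY_WINDOW_DAYS = 2


def patch_tuesday(year: int, month: int) -> date:
    """Return the second Tuesday of the given month."""
    first = date(year, month, 1)
    days_to_first_tuesday = (TUESDAY - first.weekday()) % 7
    return first + timedelta(days=days_to_first_tuesday + 7)


def next_regular_window(after: date) -> date:
    """Return the first Patch Tuesday on or after the given date."""
    candidate = patch_tuesday(after.year, after.month)
    if candidate < after:
        # This month's window has passed; roll over to next month (and year).
        if after.month == 12:
            year, month = after.year + 1, 1
        else:
            year, month = after.year, after.month + 1
        candidate = patch_tuesday(year, month)
    return candidate


def patch_deadline(severity: str, disclosed: date) -> date:
    """Critical issues get an emergency deadline; others wait for the regular window."""
    if severity == "critical":
        return disclosed + timedelta(days=EMERGENCY_WINDOW_DAYS)
    return next_regular_window(disclosed)


print(patch_tuesday(2025, 1))  # 2025-01-14
```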

Leveraging Automation Tools

Migration processes are inherently complex, involving many repetitive tasks that are prone to human error and that can cause significant downtime when mishandled. Automation tools streamline these processes, reduce risk, and help preserve business continuity. Applied strategically, automation improves efficiency, lowers operational costs, and makes the transition to the new environment smoother.

Identifying Automation Tools for Streamlining Migration

Several categories of automation tools are available to assist with various aspects of the migration process. The selection of the right tools depends on the specific needs of the migration project, the complexity of the infrastructure, and the chosen migration strategy.

- Data Migration Tools: These tools automate the transfer of data between source and destination environments. They often include features for data mapping, transformation, and validation. Examples include:

  - AWS Database Migration Service (DMS): A fully managed service that helps migrate databases to AWS quickly and securely. It supports various database types and offers features like schema conversion and continuous replication.

  - Azure Database Migration Service: Similar to AWS DMS, this service simplifies the migration of on-premises databases to Azure. It supports a wide range of database platforms and offers assessment and migration capabilities.

  - Informatica PowerCenter: A comprehensive data integration platform that can be used for migrating and transforming data. It offers extensive connectivity options and supports complex data transformation requirements.

- Infrastructure Automation Tools: These tools automate the provisioning and management of infrastructure resources, such as servers, networks, and storage. Examples include:

  - Terraform: An infrastructure-as-code (IaC) tool that allows you to define and provision infrastructure using declarative configuration files. It supports multiple cloud providers and on-premises environments.

  - Ansible: An automation engine that can be used to configure systems, deploy applications, and orchestrate IT processes. It uses a simple, human-readable language (YAML) to define automation tasks.

  - Chef/Puppet: Configuration management tools that automate the process of configuring and managing servers. They ensure that systems are consistently configured and compliant with defined policies.
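The validation step that data migration tools perform can be approximated with a row count plus a content fingerprint per table, hashing rows in key order so that differences in physical row order do not matter. This sketch uses Python's built-in `sqlite3` module on both sides purely for illustration; it assumes both databases expose the same columns with the same types, and that table and key names come from a trusted list, because identifiers cannot be bound as query parameters. The function names are hypothetical, and this is not how AWS DMS, Azure DMS, or PowerCenter implement validation internally.

```python
import hashlib
import sqlite3
from typing import Dict, List, Tuple


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> Tuple[int, str]:
    """Return (row_count, sha256_hex) for a table, hashing rows in key order."""
    digest = hashlib.sha256()
    count = 0
    # Identifiers are interpolated, so `table` and `key` must come from a trusted list.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def mismatched_tables(
    src: sqlite3.Connection, dst: sqlite3.Connection, tables: Dict[str, str]
) -> List[str]:
    """List tables whose row count or content fingerprint differs between databases."""
    return [
        table
        for table, key in tables.items()
        if table_fingerprint(src, table, key) != table_fingerprint(dst, table, key)
    ]
```

For example, after loading a hypothetical `customers` table into both databases, `mismatched_tables(src, dst, {"customers": "id"})` returns an empty list when every row matches and `["customers"]` when any value differs.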