Distributed systems, crucial for modern applications, often face network unreliability. Understanding and mitigating the impact of packet loss, latency spikes, and partitions is paramount to ensuring system functionality and performance. This comprehensive guide delves into the intricate design considerations necessary for building robust and resilient distributed systems.

From fundamental design principles to advanced strategies for data consistency and communication, this document explores a wide range of practical techniques. It also emphasizes the critical role of monitoring, recovery mechanisms, and security in creating fault-tolerant systems. Real-world case studies and performance evaluation methods are highlighted to illustrate the practical application of these principles.

Introduction to Network Unreliability

Distributed systems rely on interconnected networks for communication and data exchange, and that network infrastructure is inherently unreliable: its links, hardware, and software all have limitations and failure modes. This unreliability manifests in various ways and affects both the functionality and the performance of the distributed system, so understanding these failures and their potential consequences is crucial for designing robust and resilient systems.

These limitations can range from temporary glitches to catastrophic failures, significantly impacting the system’s ability to function as intended. The challenges posed by network unreliability are multifaceted, demanding a proactive and thoughtful approach to system design.

Network Failure Types

Network failures are diverse and can manifest in various forms. Understanding these diverse types of failures is fundamental to mitigating their impact on distributed systems. This includes transient problems, such as packet loss and latency spikes, as well as more persistent issues like network partitions.

- Packet Loss: Packets transmitted over a network may not always reach their destination. This loss can stem from various causes, including network congestion, hardware failures, or errors during transmission (packets that fail integrity checks are discarded, so transmission errors also show up as loss). The frequency and extent of packet loss can vary greatly with network conditions. For instance, a well-provisioned network with healthy hardware may see minimal packet loss, whereas a congested network or one with faulty hardware may exhibit significantly higher loss rates.

- Latency Spikes: Unexpected delays in data transmission can significantly impact the performance of distributed systems. These spikes can be caused by factors like network congestion, routing issues, or temporary hardware failures. Latency spikes are often temporary, but they can still disrupt the flow of information and impact the system’s responsiveness.

- Network Partitions: A network partition occurs when a network is divided into isolated segments. This division can arise from various causes, including physical link failures, software malfunctions, or deliberate actions. Partitioning can lead to data inconsistencies and hinder communication between different parts of the distributed system.

- Congestion: Overloaded networks often experience congestion, which leads to delayed or dropped packets. This phenomenon is often exacerbated by high traffic volumes or network bottlenecks. Congestion can significantly impact the performance of distributed systems, as it causes delays in message delivery.

Impact on System Functionality and Performance

Network unreliability can have a profound impact on the functionality and performance of a distributed system. Failures in communication can lead to data loss, inconsistencies, and even system crashes. Designing for such scenarios is critical to ensure the system remains functional and reliable.

- Data Loss and Inconsistency: A lost message that is never retransmitted or retried can mean a lost update, and an update that reaches some parts of the system but not others leaves them inconsistent. Such inconsistencies can compromise data integrity and the reliability of the system as a whole.

- Reduced Performance: Latency spikes and network congestion can lead to reduced system performance. The impact of such issues is amplified in systems that require real-time interactions or high throughput.

- System Unreliability: Network unreliability can cause the system to become unavailable or unreliable, impacting user experience and business operations.

Importance of Designing for Network Unreliability

Robust distributed systems must be designed with network unreliability in mind. Anticipating potential failures and implementing appropriate mechanisms to handle them is essential for maintaining system availability, data integrity, and performance.

| Network Failure Type | Potential Consequences |
|---|---|
| Packet Loss | Data corruption, data loss, delayed responses, system instability |
| Latency Spikes | Reduced responsiveness, increased delays in data processing, poor user experience |
| Network Partitions | Data inconsistencies, inability to communicate between parts of the system, potential system failure |
| Congestion | Delayed message delivery, increased latency, system instability |

Design Principles for Resilient Systems

Building resilient distributed systems requires a proactive approach to handling network unreliability. These systems must be designed to withstand failures and maintain functionality even when components or connections are unavailable, which means understanding and implementing design principles focused on fault tolerance and redundancy. Such principles are essential for the continued operation of distributed systems in the face of network failures.

These principles encompass strategies to anticipate and mitigate the impact of outages, allowing the system to adapt and recover quickly. Implementing these strategies effectively is critical to maintain high availability and prevent disruptions to service.

Fault Tolerance and Redundancy Strategies

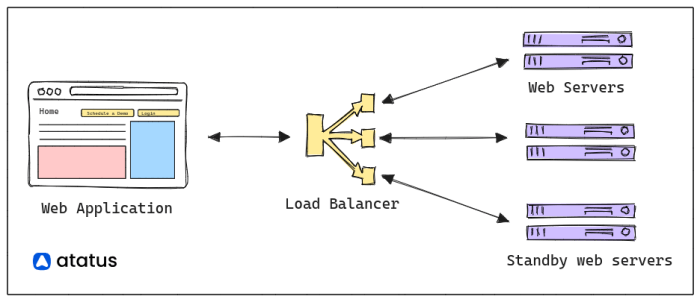

Fault tolerance and redundancy are key strategies in designing resilient systems. Fault tolerance aims to ensure the system continues to function correctly even if some components fail. Redundancy involves having backup components or systems ready to take over if a primary component fails. These strategies are complementary, working together to achieve high availability.

Implementing Fault Tolerance in Distributed Systems

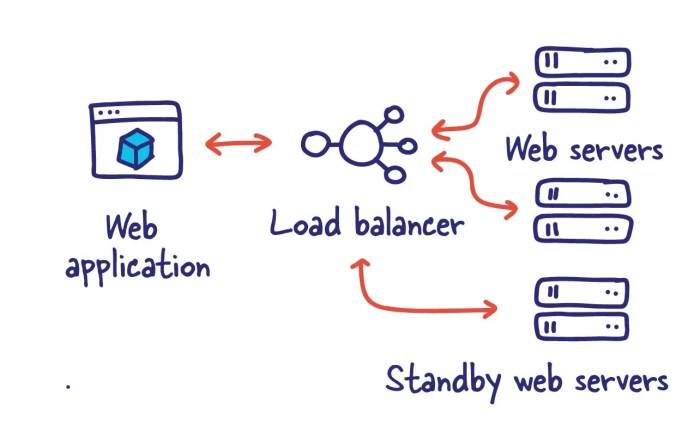

Implementing fault tolerance involves various strategies. One approach is to use redundant servers or storage devices, enabling the system to continue operating even if one server or storage device fails. Another strategy is to use a load balancer to distribute the workload across multiple servers, ensuring that no single server is overwhelmed. Further strategies include mechanisms that detect failed components, such as health checks and heartbeats, and isolate them so that their failures do not spread to the rest of the system.
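One common way to isolate a failing component is a circuit breaker, a pattern named here as an illustration rather than one the text prescribes: after a run of consecutive failures, the caller stops sending requests to that dependency for a cooldown period, then lets a trial request through. The sketch below is a minimal single-threaded version; the class name, `failure_threshold`, and `reset_timeout` are hypothetical, and the clock is injectable so the behavior can be tested without waiting.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the breaker is open."""


class CircuitBreaker:
    """Minimal circuit breaker sketch (hypothetical API, not thread-safe)."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # cooldown elapsed: allow a trial call
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("dependency isolated; failing fast")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            # Trip the breaker at the threshold, or re-trip after a failed trial call.
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Failing fast while the circuit is open keeps callers from piling up requests (and timeouts) on a component that is already struggling, which helps keep one failure from cascading.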

Backup Systems and Failover Mechanisms

Backup systems provide a copy of critical data or functionality. This allows the system to restore its state to a previous working condition if a failure occurs. Failover mechanisms automate the process of switching from a failed component to a backup component. These mechanisms ensure minimal disruption to service by rapidly transferring operations to the backup.
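At its simplest, client-side failover means trying the primary first and moving down an ordered list of backups when a call fails. In the sketch below each endpoint is a plain callable, and `ConnectionError` and `TimeoutError` are assumed to be the failure signals; both are illustrative choices, and a production system would also need health checks and a way to fail back to the primary.

```python
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")

# Errors that justify failing over to the next endpoint (an illustrative choice).
RETRYABLE = (ConnectionError, TimeoutError)


def call_with_failover(endpoints: Sequence[Callable[[], T]]) -> T:
    """Call the primary first, then each backup in order, until one succeeds."""
    last_error: Optional[Exception] = None
    for endpoint in endpoints:
        try:
            return endpoint()
        except RETRYABLE as exc:
            last_error = exc  # record the failure and fail over to the next endpoint
    raise RuntimeError("all endpoints failed") from last_error
```

Errors outside `RETRYABLE`, such as a validation error, propagate immediately, because sending the same bad request to a backup would not help.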

Examples of Implementing Fault Tolerance in Distributed Systems

Consider a web application with multiple servers. If one server fails, a load balancer can automatically redirect traffic to the remaining operational servers. This ensures users can still access the application without interruption. Similarly, database systems can utilize replication to maintain multiple copies of data across multiple servers. This redundancy ensures data availability and integrity, even if one server fails.

In cloud-based systems, these concepts are vital for handling inevitable network interruptions and maintaining service levels.

Comparison of Fault Tolerance Strategies

| Strategy | Description | Advantages | Disadvantages | Trade-offs |
|---|---|---|---|---|
| Redundancy (e.g., Replication) | Creating multiple copies of data or components | High availability, data integrity | Increased resource consumption, complexity | Balance between cost and reliability |
| Load Balancing | Distributing workload across multiple servers | Improved performance, reduced server load | Requires complex infrastructure, management overhead | Scalability versus potential for overload |
| Failover Mechanisms | Automated switching to backup components | Minimal service disruption | Requires careful configuration and testing | Speed of failover versus complexity |

Data Consistency and Replication

Maintaining data consistency across multiple nodes in a distributed system is crucial for reliability, especially in the face of network unreliability. Inconsistent data can lead to data corruption, incorrect calculations, and ultimately, system failures. Therefore, robust strategies for data replication and conflict resolution are essential for building resilient distributed systems. Data consistency models dictate how different nodes in a distributed system view and interact with the data.

Different models have varying degrees of consistency, which directly impacts the resilience of the system to network failures. Choosing the right model is vital for ensuring data integrity and system functionality under different levels of network disruption.

Data Consistency Models

Various data consistency models address the trade-off between consistency and availability in the presence of network failures. These models define the expected behavior of the system when data is replicated across multiple nodes. A key consideration is how the system handles updates to the data when network partitions or failures occur.

Techniques for Maintaining Data Consistency

Several techniques can be employed to maintain data consistency during network failures. These techniques often involve incorporating mechanisms for detecting and handling conflicts, and ensuring that the data remains consistent across all replicas.

- Optimistic Locking: This approach assumes that conflicts are infrequent. Client applications perform updates without locking, and the system checks for conflicts when the updates are committed, typically by verifying that the data has not changed since it was read. If a conflict is detected, the system notifies the client and provides mechanisms for resolving it. This approach generally allows higher concurrency and availability than locking, but it requires mechanisms for conflict detection and resolution.

- Pessimistic Locking: In contrast to optimistic locking, this approach assumes that conflicts are likely. All updates are performed under exclusive locks, preventing concurrent access to the data. This rules out conflicting concurrent updates, but it reduces concurrency and availability, especially in highly concurrent environments or when a node holding a lock becomes unreachable.

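- Two-Phase Commit: This protocol ensures that all nodes involved in a transaction agree on its outcome. A coordinator first asks every participant to prepare and vote; only if all participants vote yes does it tell them to commit, and otherwise all of them abort. This guarantees that either all updates are committed or none are, preventing partial updates. The protocol is complex to implement and adds round trips to every update, and if the coordinator fails after participants have voted, they can remain blocked, holding their locks, until it recovers.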
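The two-phase commit flow can be sketched as an in-memory coordinator: in the prepare phase every participant votes, and only a unanimous yes leads to a commit; a no vote, or a participant that cannot be reached (modeled here as a `ConnectionError`), aborts the transaction everywhere. The `Participant` class and its fields are hypothetical, and the sketch deliberately omits the durable logging, timeouts, and coordinator recovery that a real implementation needs, which is where two-phase commit's well-known blocking problem arises.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Participant:
    """Hypothetical participant that stages a write until commit or abort."""
    name: str
    will_vote_yes: bool = True
    reachable: bool = True
    committed: Dict[str, int] = field(default_factory=dict)
    staged: Dict[str, int] = field(default_factory=dict)

    def prepare(self, key: str, value: int) -> bool:
        if not self.reachable:
            raise ConnectionError(f"{self.name} unreachable")
        if self.will_vote_yes:
            self.staged[key] = value  # hold the write until the decision arrives
        return self.will_vote_yes

    def commit(self) -> None:
        self.committed.update(self.staged)
        self.staged.clear()

    def abort(self) -> None:
        self.staged.clear()


def two_phase_commit(participants: List[Participant], key: str, value: int) -> bool:
    # Phase 1 (prepare): collect votes; an unreachable participant counts as "no".
    votes = []
    for p in participants:
        try:
            votes.append(p.prepare(key, value))
        except ConnectionError:
            votes.append(False)
    # Phase 2 (commit/abort): commit only if every participant voted yes.
    decision = all(votes)
    for p in participants:
        # A real coordinator would log the decision and retry unreachable nodes.
        if decision:
            p.commit()
        else:
            p.abort()
    return decision
```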

Data Replication and Recovery Strategies

Replication strategies involve distributing data across multiple nodes. Proper replication strategies can help to improve data availability and fault tolerance. Recovery strategies are essential to restore data in the event of node failures.

- Active-Active Replication: In this strategy, all replicas are actively handling read and write requests. This approach offers high availability, but it requires sophisticated conflict resolution mechanisms to maintain data consistency. It is suitable for systems that need high availability and low latency.

- Active-Passive Replication: This strategy designates one replica as the primary node for writes and the other replicas as backups, which receive updates and wait to take over if the primary fails. This method is simpler to implement than active-active replication, but every write must go through the single primary, which can add latency for distant clients, and failing over to a backup takes time.

- Data Recovery Techniques: Data recovery procedures are essential for ensuring data availability after failures. These procedures could include techniques like backups, checkpoints, or logging transactions to enable recovery to a previous consistent state.
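The checkpoint-plus-log technique can be sketched with an in-memory key-value store: every update is appended to a log before it is applied, a checkpoint snapshots the state and truncates the log, and recovery restores the last checkpoint and replays the remaining log entries. Keeping the log and checkpoint in memory is a simplifying assumption (a real system writes both to durable storage), and the class and method names are hypothetical.

```python
from typing import Dict, List, Tuple


class RecoverableStore:
    """Key-value store with a write-ahead log and checkpoints (in-memory sketch)."""

    def __init__(self) -> None:
        self.state: Dict[str, int] = {}
        self.log: List[Tuple[str, int]] = []  # updates since the last checkpoint
        self.checkpoint: Dict[str, int] = {}

    def put(self, key: str, value: int) -> None:
        self.log.append((key, value))  # log first, then apply
        self.state[key] = value

    def take_checkpoint(self) -> None:
        self.checkpoint = dict(self.state)
        self.log.clear()  # entries before the checkpoint are no longer needed

    def crash(self) -> None:
        self.state = {}  # simulate losing volatile state; log and checkpoint survive

    def recover(self) -> None:
        self.state = dict(self.checkpoint)
        for key, value in self.log:  # replay updates made after the checkpoint
            self.state[key] = value
```

Checkpoints bound how much of the log must be replayed, while the log preserves updates made since the last checkpoint.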

Handling Data Conflicts in Replicated Data Stores

Data conflicts arise when multiple updates to the same data occur concurrently. Conflict resolution mechanisms are needed to reconcile these conflicts and maintain data consistency.

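- Conflict Detection: Systems need to identify conflicting updates. This can involve comparing update timestamps, although clock skew between nodes makes timestamps unreliable for this purpose, or using version numbers or vector clocks to detect updates that were made concurrently.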

- Conflict Resolution Strategies: Several conflict resolution strategies can be applied. These could include choosing the latest update, using a merge algorithm to combine the updates, or allowing the users to resolve the conflict. The choice of strategy depends on the application’s requirements and the nature of the data.
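Because wall-clock timestamps on different nodes can drift, many replicated stores detect concurrent updates with vector clocks (version vectors) instead; this is one option among several, not one the text prescribes. The sketch below compares two vector clocks to decide whether one update supersedes the other or whether they truly conflict, and resolves conflicts with a caller-supplied merge function. The function names and the dictionary representation are assumptions.

```python
from typing import Callable, Dict, Tuple

VectorClock = Dict[str, int]  # node id -> number of updates seen from that node


def compare(a: VectorClock, b: VectorClock) -> str:
    """Return 'before', 'after', 'equal', or 'concurrent'."""
    nodes = set(a) | set(b)
    a_le_b = all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
    b_le_a = all(b.get(n, 0) <= a.get(n, 0) for n in nodes)
    if a_le_b and b_le_a:
        return "equal"
    if a_le_b:
        return "before"  # a happened before b, so b supersedes a
    if b_le_a:
        return "after"
    return "concurrent"  # neither update saw the other: a genuine conflict


def reconcile(a: Tuple[VectorClock, str], b: Tuple[VectorClock, str],
              merge: Callable[[str, str], str]) -> Tuple[VectorClock, str]:
    (clock_a, val_a), (clock_b, val_b) = a, b
    order = compare(clock_a, clock_b)
    if order in ("after", "equal"):
        return a
    if order == "before":
        return b
    # Conflict: merge the values and take the element-wise maximum of the clocks.
    merged_clock = {n: max(clock_a.get(n, 0), clock_b.get(n, 0))
                    for n in set(clock_a) | set(clock_b)}
    return merged_clock, merge(val_a, val_b)
```

Passing in `merge` keeps the resolution policy (keep one value, combine both, or flag it for a user) an application decision, in line with the strategies listed above.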

Comparison of Data Consistency Models

| Consistency Model | Description | Suitability for Network Unreliability |
|---|---|---|
| Strong Consistency | Every read reflects the most recent completed write, so all nodes appear to see the same data at the same time. | High consistency but low availability during partitions; often unsuitable for highly unreliable networks. |
| Eventual Consistency | Updates eventually propagate to all replicas. | High availability, potentially suitable for unreliable networks, but consistency may not be immediate. |
| Sequential Consistency | All operations appear to execute in a single total order that respects each client's own order of operations. | Weaker than strong consistency but still requires coordination, so it generally cannot remain available during a partition. |

Communication Protocols and Mechanisms

Communication protocols are fundamental to reliable message delivery in distributed systems. They define the rules and procedures for exchanging information between nodes, ensuring data integrity and consistency despite network unreliability. Effective protocols address issues like packet loss, delays, and order discrepancies, thereby enabling robust communication in the face of network challenges. These protocols play a crucial role in maintaining data consistency and ensuring the correct operation of distributed applications.

Reliable Communication Protocols

Reliable communication protocols are designed to guarantee the successful delivery of messages, even in the presence of network failures. They employ mechanisms like acknowledgments and retransmissions to ensure that messages arrive correctly and in the intended order. This is crucial for applications where data integrity and order are critical, such as financial transactions or critical control systems.

Examples of Reliable Protocols and Their Application

Several reliable communication protocols are widely used in distributed systems. TCP (Transmission Control Protocol), a core protocol of the Internet protocol suite, is a prominent example: it provides reliable, ordered, and error-checked delivery of data streams and is commonly used for web browsing, file transfers, and other applications where data integrity is paramount. Other examples include AMQP (Advanced Message Queuing Protocol), designed for message queuing, and protocols tailored to specific use cases, such as those used in industrial automation systems.

Designing Reliable Message Delivery Protocols

Designing protocols for reliable message delivery in unreliable networks requires careful consideration of various factors. A key element is error detection, using checksums or cyclic redundancy checks (CRCs) so that corrupted messages can be discarded and resent. Protocols should also handle packet loss, typically by combining acknowledgments with timeout-based retransmission, and should detect out-of-order or duplicate delivery, for example with sequence numbers. Strategies for handling network partitions are equally vital.
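These mechanisms can be combined into a stop-and-wait sender over a simulated lossy link: each frame carries a sequence number and a CRC-32 (computed with Python's `zlib.crc32`), the receiver discards frames whose CRC does not match and acknowledges good ones, and the sender retransmits until it sees an acknowledgment. The simulated link, its loss and corruption rates, and the retry limit are illustrative assumptions. The receiver delivers each sequence number only once, which handles the retransmission that follows a lost acknowledgment.

```python
import random
import zlib
from typing import List, Optional, Tuple

Frame = Tuple[int, bytes, int]  # (sequence number, payload, CRC-32 over both)


def frame_crc(seq: int, payload: bytes) -> int:
    return zlib.crc32(seq.to_bytes(4, "big") + payload)


class LossyLink:
    """Simulated link that randomly drops frames/acks and corrupts payloads."""

    def __init__(self, loss: float, corrupt: float, seed: int = 0) -> None:
        self.loss = loss
        self.corrupt = corrupt
        self.rng = random.Random(seed)  # seeded so runs are reproducible

    def transmit(self, frame: Frame) -> Optional[Frame]:
        if self.rng.random() < self.loss:
            return None  # frame lost in transit
        seq, payload, crc = frame
        if payload and self.rng.random() < self.corrupt:
            # Flip every bit of the first byte so the CRC no longer matches.
            payload = bytes([payload[0] ^ 0xFF]) + payload[1:]
        return seq, payload, crc

    def ack_survives(self) -> bool:
        return self.rng.random() >= self.loss


class Receiver:
    def __init__(self) -> None:
        self.expected = 0  # next sequence number to deliver
        self.delivered: List[bytes] = []

    def on_frame(self, frame: Frame) -> Optional[int]:
        seq, payload, crc = frame
        if frame_crc(seq, payload) != crc:
            return None  # corrupted: discard; the sender will time out and resend
        if seq == self.expected:
            self.delivered.append(payload)  # first copy: deliver exactly once
            self.expected += 1
        return seq  # acknowledge new frames and re-acknowledge duplicates


def send_all(messages: List[bytes], link: LossyLink, receiver: Receiver,
             max_attempts: int = 50) -> int:
    """Stop-and-wait ARQ; returns the total number of frame transmissions."""
    transmissions = 0
    for seq, payload in enumerate(messages):
        frame: Frame = (seq, payload, frame_crc(seq, payload))
        for _ in range(max_attempts):
            transmissions += 1
            arrived = link.transmit(frame)
            ack = receiver.on_frame(arrived) if arrived is not None else None
            if ack == seq and link.ack_survives():
                break  # acknowledged: move on to the next message
            # No usable ack: treat it as a timeout and retransmit the same frame.
        else:
            raise TimeoutError(f"message {seq} was never acknowledged")
    return transmissions
```

Stop-and-wait is the simplest such scheme; protocols like TCP keep many unacknowledged segments in flight at once for throughput, but rely on the same ingredients of checksums, sequence numbers, acknowledgments, and retransmission.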

Detecting and Handling Network Partitions

Network partitions occur when the network is divided into isolated segments. In such scenarios, communication between nodes in different partitions is disrupted, so protocols need mechanisms to detect and react to partitions. Techniques include heartbeat messages to monitor connectivity and fault tolerance strategies to keep serving requests in one or more partitions. Protocols may also use leader election, typically requiring a majority quorum, so that at most one partition elects a leader and continues to accept writes.
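Heartbeat-based detection can be sketched as a table of last-seen times: each node records when it last heard from each peer and suspects any peer that has been silent longer than a timeout. A simple majority check then tells a node whether it is on the side of a partition that may keep accepting writes. The class name, `timeout`, `cluster_size`, and the injectable clock are assumptions made for illustration. A timeout cannot distinguish a crashed peer from one that is slow or on the other side of a partition, so the detector only produces suspicions.

```python
import time
from typing import Callable, Dict, Set


class HeartbeatMonitor:
    """Timeout-based failure detector (sketch with hypothetical names)."""

    def __init__(self, peers: Set[str], timeout: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout = timeout
        self.clock = clock
        now = clock()
        # Treat every peer as freshly seen at start-up.
        self.last_seen: Dict[str, float] = {peer: now for peer in peers}

    def record_heartbeat(self, peer: str) -> None:
        self.last_seen[peer] = self.clock()

    def suspected(self) -> Set[str]:
        """Peers not heard from within the timeout."""
        now = self.clock()
        return {p for p, seen in self.last_seen.items() if now - seen > self.timeout}

    def has_majority(self, cluster_size: int) -> bool:
        """True if this node plus its live peers form a strict majority."""
        live = len(self.last_seen) - len(self.suspected()) + 1  # +1 counts this node
        return live > cluster_size // 2
```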

Comparison of Communication Protocols

| Protocol | Key Features | Robustness to Network Failures |
|---|---|---|
| TCP | Ordered delivery, error checking, retransmissions, flow control | High |
| UDP | Unordered, unreliable delivery; checksum for error detection but no retransmission; low overhead | Low |
| SCTP | Multi-streaming, multi-homing, reliability | High |
| AMQP | Message queuing, publish-subscribe, reliability | High (depending on implementation) |

The table above provides a simplified comparison of different communication protocols, highlighting their key features and robustness to network failures. The robustness of a protocol is influenced by its implementation and the specific network conditions.

Load Balancing and Routing

Load balancing and routing are crucial components in designing resilient distributed systems. These mechanisms distribute the workload across multiple resources and ensure data reaches its destination reliably, even in the face of network issues. Effective load balancing and routing protocols minimize the impact of network failures on overall system performance and availability.

Load Balancing Strategies

Load balancing strategies aim to distribute incoming requests across multiple servers, preventing overload on any single node. This approach enhances system resilience by mitigating the risk of a single point of failure. Diverse strategies exist, each with its strengths and weaknesses in terms of handling network fluctuations and ensuring fair resource utilization.

- Round Robin: This simple algorithm assigns requests to the available servers in turn, so each server receives the same number of requests. It is straightforward to implement but may not be optimal if server performance varies. For instance, a server that is significantly slower than the others still receives an equal share of requests, so its backlog grows and its responses lag behind.

- Least Connections: This strategy directs requests to the server with the fewest active connections. This algorithm prioritizes maintaining a more even workload distribution across servers, which contributes to overall system resilience. It dynamically adapts to changing server loads, making it more robust than round robin in the face of fluctuating network conditions.

- Weighted Round Robin: This approach assigns weights to servers, influencing the distribution of requests. Servers with higher weights receive a proportionally greater share of requests, useful when servers have differing capacities or performance characteristics. For example, a server with twice the processing power of another could be assigned twice the weight, ensuring it handles a proportionally larger share of requests.
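The three strategies above can be sketched as small selection functions. For weighted round robin, the sketch uses one well-known variant, often called smooth weighted round robin, which interleaves picks instead of sending consecutive bursts to the heaviest server. Server names and weights are hypothetical, and a real balancer would also track connection counts as connections open and close.

```python
import itertools
from typing import Dict, Iterator, List


def round_robin(servers: List[str]) -> Iterator[str]:
    """Hand out servers in a fixed cyclic order."""
    return itertools.cycle(servers)


def least_connections(active: Dict[str, int]) -> str:
    """Pick the server with the fewest active connections (ties: first listed)."""
    return min(active, key=lambda s: active[s])


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Smooth weighted round robin: picks are proportional to weight, interleaved."""
    current = {s: 0 for s in weights}
    total = sum(weights.values())
    while True:
        for s, w in weights.items():
            current[s] += w  # every server accumulates credit equal to its weight
        chosen = max(current, key=lambda s: current[s])
        current[chosen] -= total  # the chosen server pays back one full round
        yield chosen
```

With weights `{"big": 2, "small": 1}`, the weighted generator repeats the pattern big, small, big, giving the larger server two thirds of the requests.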

Routing Protocols for Unreliable Networks

Routing protocols are essential for guiding data packets through a network. In unreliable networks, these protocols need to be robust to handle network failures and dynamically adjust paths.

- Dynamic Routing Protocols: These protocols adapt to changing network conditions by recalculating optimal paths in response to link failures or changes in network topology. Examples include OSPF (Open Shortest Path First) and RIP (Routing Information Protocol). These protocols ensure that data can be routed around failed links, keeping the system operational even during network disruptions.

- Multipath Routing: This approach allows data packets to be sent along multiple paths simultaneously. This redundancy enhances resilience by providing alternative routes if one path fails. If one route becomes congested or unavailable, data can still be delivered via other routes, improving system responsiveness and availability.
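Link-state protocols such as OSPF recompute shortest paths when the topology changes, and the core of that recomputation is a shortest-path search such as Dijkstra's algorithm. The sketch below computes a path on a small weighted graph, removes a failed link, and recomputes, showing traffic being routed around the failure. The topology and link costs are invented for illustration, and the flooding of link-state updates between routers is omitted.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, Dict[str, int]]  # node -> {neighbor: link cost}


def shortest_path(graph: Graph, src: str, dst: str) -> Optional[List[str]]:
    """Dijkstra's algorithm; returns the nodes of a cheapest path, or None."""
    dist: Dict[str, float] = {src: 0}
    prev: Dict[str, str] = {}
    heap: List[Tuple[float, str]] = [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            path = [dst]
            while path[-1] != src:  # walk predecessors back to the source
                path.append(prev[path[-1]])
            return path[::-1]
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        for nbr, cost in graph.get(node, {}).items():
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    return None  # destination unreachable


def fail_link(graph: Graph, a: str, b: str) -> None:
    """Remove a bidirectional link, as a router would after detecting its failure."""
    graph.get(a, {}).pop(b, None)
    graph.get(b, {}).pop(a, None)
```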

Implementing Load Balancing Algorithms

Implementing load balancing algorithms requires careful consideration of the network environment and system architecture.

- Network Load Balancers: Dedicated hardware or software load balancers can efficiently distribute traffic across multiple servers. These tools offer advanced features for managing traffic flow, such as health checks to identify and exclude unresponsive servers. For example, a network load balancer can monitor the health of web servers and redirect traffic away from those experiencing issues, ensuring high availability.

- Proxy Servers: Proxy servers can act as intermediaries, intercepting requests and directing them to appropriate servers. They offer a flexible and cost-effective approach to load balancing, particularly for web applications. This enables a degree of control over how requests are handled and can be configured to prioritize requests based on various factors.

Dynamic Routing Adjustments

Dynamic adjustments to routing are critical for handling network failures in real-time.

- Real-time Monitoring: Monitoring network conditions, such as link latency and bandwidth utilization, allows for proactive routing adjustments. This ensures that routing tables are updated frequently to reflect the current network state, thereby preventing prolonged delays or data loss due to misrouting.

- Failure Detection Mechanisms: Implementing mechanisms to detect link failures, such as timeouts or packet loss monitoring, is vital for rapid rerouting. These mechanisms ensure that data packets are directed to functional paths as quickly as possible. This avoids situations where data is persistently sent along a failed link, resulting in delays and potentially lost data.

Load Balancing Strategies and Performance Impact

| Load Balancing Strategy | Impact on System Performance During Network Failures |
|---|---|
| Round Robin | May experience uneven performance if server performance varies significantly; can be less resilient to prolonged failures in a single server. |
| Least Connections | More resilient to fluctuating network conditions; dynamically adjusts to server load variations. |
| Weighted Round Robin | Provides flexibility to handle servers with different capacities, but requires careful weighting. |
| Multipath Routing | Enhances resilience by providing multiple paths; can experience increased overhead if routing tables become complex. |

Monitoring and Recovery Mechanisms

Effective distributed systems require robust monitoring and recovery mechanisms to ensure high availability and fault tolerance. Network unreliability is a constant threat, and proactive strategies are crucial to minimizing downtime and maintaining service levels. These mechanisms, coupled with the design principles and protocols discussed earlier, form a comprehensive approach to building resilient systems.

Importance of System Monitoring

System monitoring is critical for detecting network issues early, enabling timely intervention and preventing cascading failures. By tracking key performance indicators (KPIs), monitoring systems can identify anomalies and potential points of failure, allowing for proactive responses. This proactive approach significantly reduces the impact of network outages and maintains user experience.

Design of Monitoring Systems

Monitoring systems must be designed to collect, analyze, and visualize data from various sources within the distributed network. This includes network traffic patterns, latency, packet loss rates, and resource utilization. Alerting mechanisms should be configured to trigger notifications based on predefined thresholds, providing early warning of potential problems. Centralized dashboards offer a comprehensive view of the system’s health, allowing for rapid identification of trouble spots.
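Threshold-based alerting can be sketched as a function that summarizes a window of probe results into a packet loss rate and a 99th-percentile round-trip time, followed by a check of each metric against a configured limit. The `Probe` format, metric names, and threshold values are hypothetical; real monitoring stacks typically compute such metrics over sliding time windows and route alerts to a notification system.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Probe:
    """Result of one hypothetical ping-style probe; rtt_ms is None if it was lost."""
    rtt_ms: Optional[float]


def summarize(probes: List[Probe]) -> Dict[str, float]:
    """Summarize a non-empty window of probes."""
    rtts = sorted(p.rtt_ms for p in probes if p.rtt_ms is not None)
    loss_pct = 100.0 * (len(probes) - len(rtts)) / len(probes)
    # Nearest-rank 99th percentile of the successful round trips.
    p99 = rtts[max(0, math.ceil(0.99 * len(rtts)) - 1)] if rtts else math.inf
    return {"packet_loss_pct": loss_pct, "rtt_p99_ms": p99}


def check_thresholds(metrics: Dict[str, float], limits: Dict[str, float]) -> List[str]:
    """Return one alert message per metric that exceeds its configured limit."""
    return [f"ALERT {name}={metrics[name]:.1f} exceeds {limit}"
            for name, limit in limits.items() if metrics[name] > limit]
```

A percentile is used for latency rather than an average because a small number of very slow requests, which users do notice, barely moves the mean.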

Automated Recovery Mechanisms

Automated recovery mechanisms are essential for minimizing downtime during network failures. These mechanisms should be designed to automatically detect and respond to network issues, such as routing failures or node outages. Strategies may include rerouting traffic, activating backup servers, or triggering failover mechanisms. The implementation of these mechanisms should be thoroughly tested to ensure they function reliably under various failure scenarios.

Proactive Recovery Strategies

Proactive recovery strategies involve anticipating potential failures and implementing preemptive measures. This might include dynamic load balancing, redundancy in network components, or maintaining multiple copies of critical data. For instance, in a geographically distributed system, having data replicas in multiple regions allows for seamless failover if one region experiences an outage. This proactive approach is crucial for maintaining service availability during unexpected events.

Monitoring Metrics for Network Unreliability

The following table outlines key monitoring metrics used to detect network unreliability:

| Metric | Description | Importance |
|---|---|---|
| Packet Loss Rate | Percentage of packets lost during transmission | Indicates network congestion or instability. High packet loss suggests potential failures. |
| Latency (Round Trip Time) | Time taken for a request to travel to a destination and return | Reflects network performance. High latency indicates potential issues, like congestion or slow links. |
| Throughput | Actual rate of data transmission achieved | Low throughput relative to the expected capacity can indicate network overload or congestion. |
| CPU Utilization | Percentage of CPU resources in use | High CPU utilization on network nodes suggests a possible bottleneck. |
| Memory Usage | Percentage of memory in use | High memory usage might lead to performance degradation or system crashes. |
| Network Interface Errors | Count of errors at network interface level | High error counts indicate hardware or software problems. |

State Management and Consistency

Maintaining a consistent and reliable system state across distributed components is crucial for fault tolerance and data integrity. Effective state management strategies are essential to ensure that the system behaves predictably and correctly, even in the presence of network partitions or node failures. This involves carefully considering how state is replicated, updated, and recovered from potential inconsistencies.

Strategies for Managing System State

Distributed systems often employ various strategies for managing their state. These include techniques like state replication, where copies of the state are maintained across multiple nodes, and state caching, which stores frequently accessed state locally to reduce network overhead. Another approach is to use a combination of both, utilizing local caches for frequently accessed data while maintaining replicated state for critical or infrequently updated information.
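Combining a local cache with replicated state can be sketched as a read-through cache with a time-to-live (TTL): reads are served locally while an entry is fresh and fall back to the authoritative store once it expires, which bounds how stale a cached value can be. The `fetch` callable standing in for the replicated store, the TTL, and the explicit `invalidate` hook are illustrative assumptions.

```python
import time
from typing import Callable, Dict, Generic, Tuple, TypeVar

K = TypeVar("K")
V = TypeVar("V")


class TTLCache(Generic[K, V]):
    """Read-through cache whose values may be up to `ttl` seconds stale."""

    def __init__(self, fetch: Callable[[K], V], ttl: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.fetch, self.ttl, self.clock = fetch, ttl, clock
        self.entries: Dict[K, Tuple[V, float]] = {}  # key -> (value, fetched_at)

    def get(self, key: K) -> V:
        hit = self.entries.get(key)
        if hit is not None and self.clock() - hit[1] < self.ttl:
            return hit[0]  # fresh enough: no network round trip
        value = self.fetch(key)  # miss or expired: read from the replicated store
        self.entries[key] = (value, self.clock())
        return value

    def invalidate(self, key: K) -> None:
        """Drop a key early, e.g. when notified that it changed upstream."""
        self.entries.pop(key, None)
```

The TTL is the knob that trades freshness for fewer network round trips; explicit invalidation tightens freshness further when change notifications are available.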

Ensuring Consistency During Network Failures

Ensuring consistency during network failures requires careful consideration of data consistency models. Common models include eventual consistency, where replicas converge to the same state once updates stop arriving, and strong consistency, where every read reflects the most recent completed write. The choice between these models depends on the specific application requirements and on how much latency and unavailability the application can tolerate.

For example, a social media platform might opt for eventual consistency to ensure rapid updates, while a financial transaction system would likely prioritize strong consistency to guarantee accuracy and avoid conflicts.

State Machine Replication

State machine replication is a powerful technique for maintaining consistency in distributed systems. It involves replicating the state of a state machine across multiple nodes. The same sequence of operations is executed on each replica, and because the operations are deterministic, all replicas converge to the same state. A critical aspect of state machine replication is ensuring that the order of operations is preserved across all replicas.

- Implementing state machine replication requires a mechanism to ensure the operations are performed in the same order on all replicas. This can be achieved through distributed consensus protocols (e.g., Paxos, Raft). These protocols guarantee that replicas never disagree on the order of operations, even during network partitions, and they continue to make progress as long as a majority of nodes can communicate.

- A simple example involves a counter that increments with each request. Each replica maintains its own counter. When a request arrives, the consensus protocol first places it at an agreed position in a replicated log; each replica then applies the log entries in order, incrementing its counter once per entry. This ensures that all replicas that have applied the same entries hold the same counter value.
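The counter example can be sketched by separating the two concerns: a consensus protocol (not implemented here and represented by an already agreed-upon list) fixes the order of commands, and each replica applies that log deterministically. Replicas that have applied the same prefix of the log are in the same state, even if some lag behind and catch up later. The `Command` format and `Replica` class are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Command = Tuple[str, int]  # hypothetical command format, e.g. ("add", 1)


@dataclass
class Replica:
    counter: int = 0
    applied: int = 0  # number of log entries applied so far

    def apply(self, command: Command) -> None:
        op, amount = command
        if op == "add":
            self.counter += amount
        elif op == "reset":
            self.counter = 0
        else:
            raise ValueError(f"unknown command {op!r}")
        self.applied += 1

    def catch_up(self, log: List[Command]) -> None:
        """Apply, in log order, every committed entry not yet applied."""
        for command in log[self.applied:]:
            self.apply(command)
```

Because `apply` is deterministic and depends only on the command and the current state, agreeing on the log is enough to agree on the state.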

Recovering from State Inconsistencies

State inconsistencies can arise due to various factors, such as network partitions or node failures. Recovery mechanisms are essential to restore the system to a consistent state. These mechanisms can involve techniques like rollback to a previous consistent state or conflict resolution algorithms, which determine how to reconcile conflicting updates. Appropriate error handling and logging mechanisms are essential to assist in pinpointing the source of inconsistencies.

Comparison of State Management Techniques

| Technique | Impact on System Resilience | Advantages | Disadvantages |
|---|---|---|---|
| State Replication | High | Improved availability, fault tolerance | Increased complexity, potential for data inconsistencies |
| State Caching | Moderate | Reduced latency, improved performance | Potential for stale data, requires careful cache invalidation strategies |
| Eventual Consistency | High availability | Fast updates, low latency | Potential for stale data, requires careful data consistency model |
| Strong Consistency | High data integrity | Guaranteed data accuracy | Reduced availability, higher latency |

Security Considerations

Designing secure distributed systems that operate over unreliable networks requires careful consideration of potential vulnerabilities. While designing for network unreliability is crucial for resilience, the unreliability itself can also create avenues for malicious actors to exploit weaknesses. A robust security architecture must be integral to the system design, not an afterthought. Effective security measures in distributed systems must anticipate and mitigate threats arising from network instability.

This includes safeguarding data integrity and confidentiality during network failures and attacks, as well as protecting against unauthorized access and manipulation.

Security Vulnerabilities in Unreliable Networks

Network unreliability can exacerbate existing security vulnerabilities and introduce new ones. Intermittent connectivity, packet loss, and delays can be exploited to disrupt communication, inject malicious data, or gain unauthorized access. For example, attackers might use these conditions to replay previously intercepted messages, potentially gaining access to sensitive information. Another risk is the compromise of intermediary nodes in a distributed network, which can lead to data breaches or man-in-the-middle attacks.

Additionally, the dynamic nature of unreliable networks can make traditional security measures less effective.
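One standard defense against replaying intercepted messages is to authenticate each message with an HMAC over its content, a timestamp, and a random nonce, and to have the receiver reject messages that are too old or whose nonce it has already accepted. The sketch uses Python's standard `hmac`, `hashlib`, `json`, and `secrets` modules; the shared key, the 30-second freshness window, and the message layout are assumptions, key distribution is out of scope, and the timestamp check assumes roughly synchronized clocks.

```python
import hashlib
import hmac
import json
import secrets
import time
from typing import Any, Callable, Dict, Set


def sign(key: bytes, body: Dict[str, Any], now: float) -> Dict[str, Any]:
    """Wrap a body with a timestamp, a random nonce, and an HMAC-SHA256 tag."""
    msg: Dict[str, Any] = {"body": body, "ts": now, "nonce": secrets.token_hex(16)}
    payload = json.dumps(msg, sort_keys=True).encode()  # canonical encoding
    msg["mac"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return msg


class Verifier:
    def __init__(self, key: bytes, max_age: float = 30.0,
                 clock: Callable[[], float] = time.time) -> None:
        self.key, self.max_age, self.clock = key, max_age, clock
        self.seen: Set[str] = set()  # accepted nonces (prune by age in practice)

    def accept(self, msg: Dict[str, Any]) -> bool:
        unsigned = {k: v for k, v in msg.items() if k != "mac"}
        payload = json.dumps(unsigned, sort_keys=True).encode()
        expected = hmac.new(self.key, payload, hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, msg.get("mac", "")):
            return False  # tampered with or forged
        if abs(self.clock() - msg["ts"]) > self.max_age:
            return False  # outside the freshness window: possible replay
        if msg["nonce"] in self.seen:
            return False  # exact replay of an already accepted message
        self.seen.add(msg["nonce"])
        return True
```

The timestamp limits how long nonces must be remembered, and `hmac.compare_digest` avoids leaking information through comparison timing.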

Secure System Design Principles for Network Attacks

Secure design principles for unreliable networks should prioritize data integrity, confidentiality, and availability. This includes employing encryption and authentication protocols to secure communication channels and employing intrusion detection systems to monitor network activity for anomalies. Furthermore, redundancy and failover mechanisms can mitigate the impact of network failures, maintaining system availability and minimizing data loss during attacks.

Securing Data During Network Failures

Robust data security measures are paramount in unreliable networks. Strategies include encrypting sensitive information in transit and at rest. Redundant data storage and replication across multiple nodes keep data available even during failures. Data validation and checksums detect corruption introduced by network unreliability; the system can then recover by re-requesting the data or reading an intact replica.
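Detection and recovery can be combined by storing a digest alongside each record and falling back to another replica when verification fails. The framing format (a 32-byte SHA-256 prefix) and the helper names `frame`, `unframe`, and `read_verified` are hypothetical choices for this sketch.

```python
import hashlib

DIGEST_LEN = 32  # SHA-256 digest size in bytes


def frame(payload: bytes) -> bytes:
    """Hypothetical format: prefix the payload with its SHA-256 digest."""
    return hashlib.sha256(payload).digest() + payload


def unframe(data: bytes) -> bytes:
    """Return the payload if its digest matches, else raise ValueError."""
    if len(data) < DIGEST_LEN:
        raise ValueError("frame too short")
    digest, payload = data[:DIGEST_LEN], data[DIGEST_LEN:]
    if hashlib.sha256(payload).digest() != digest:
        raise ValueError("checksum mismatch: payload corrupted")
    return payload


def read_verified(copies: list) -> bytes:
    """Return the first replica copy whose checksum verifies."""
    for data in copies:
        try:
            return unframe(data)
        except ValueError:
            continue  # corrupted copy; try the next replica
    raise ValueError("all replica copies are corrupted")
```

A plain digest catches accidental corruption but not deliberate tampering, because an attacker can recompute it; protecting against tampering requires a keyed MAC or authenticated encryption, such as the integrity protection TLS provides in transit.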

Implementing Security Measures in Distributed Systems

Implementing security measures in distributed systems involves several key steps. First, employ cryptographic protocols to secure communication between nodes. Second, implement access control mechanisms to restrict access to sensitive resources. Third, deploy intrusion detection and prevention systems to monitor network activity for malicious behavior. These measures can significantly enhance the system’s security posture in the face of network unreliability.
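The access-control step can start as a deny-by-default role check evaluated on each node. The roles, permissions, and the `Principal`/`authorize` names below are hypothetical; a production system would typically load its policy from a managed store rather than hard-coding it.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-permission policy, hard-coded only for illustration.
ROLE_PERMISSIONS = {
    "reader": {"read"},
    "writer": {"read", "write"},
    "admin": {"read", "write", "rotate_keys"},
}


@dataclass
class Principal:
    name: str
    roles: set = field(default_factory=set)
    authenticated: bool = False


def authorize(principal: Principal, action: str) -> bool:
    """Allow the action only if an authenticated principal holds a granting role."""
    # Deny by default: unauthenticated callers get nothing.
    if not principal.authenticated:
        return False
    # Unknown roles map to an empty permission set rather than raising.
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in principal.roles)
```

Because the check uses only local state, it keeps working when a node cannot reach the rest of the cluster; the trade-off is that policy changes must be distributed to every node.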

For example, using TLS (the successor to the deprecated SSL protocols) for all communication between components protects data confidentiality and integrity in transit, and mutual TLS additionally authenticates both endpoints. A distributed key management system can securely distribute and rotate encryption keys across the network, preventing unauthorized access to critical data.
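Python's standard `ssl` module can express this kind of TLS configuration. The sketch assumes node certificates issued by a private CA whose file paths are passed in; the function name `make_mtls_context` is hypothetical. It builds contexts that require a peer certificate on both sides and refuse protocol versions older than TLS 1.2.

```python
import ssl
from typing import Optional


def make_mtls_context(purpose: ssl.Purpose,
                      ca_file: Optional[str] = None,
                      cert_file: Optional[str] = None,
                      key_file: Optional[str] = None) -> ssl.SSLContext:
    """Hypothetical helper: TLS context for node-to-node traffic that verifies peers.

    purpose=ssl.Purpose.SERVER_AUTH builds a client-side context;
    purpose=ssl.Purpose.CLIENT_AUTH builds a server-side context.
    """
    # Trust only ca_file if given; otherwise the system's default CA store.
    ctx = ssl.create_default_context(purpose, cafile=ca_file)
    # Refuse legacy protocol versions (SSLv3, TLS 1.0, TLS 1.1).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if purpose is ssl.Purpose.CLIENT_AUTH:
        # Server side: require every connecting node to present a certificate.
        ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # Present this node's own certificate so the peer can verify it (mutual TLS).
        ctx.load_cert_chain(cert_file, key_file)
    return ctx
```

A client node would then wrap a connected socket with `ctx.wrap_socket(sock, server_hostname="peer.example")`, which checks the peer's certificate and hostname during the handshake.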

Table of Security Vulnerabilities and Countermeasures

| Security Vulnerability | Description | Countermeasure |
|---|---|---|
| Man-in-the-Middle Attacks | An attacker intercepts and possibly modifies communication between two parties. | Employ end-to-end encryption, authenticate communication partners, and use trusted certificate authorities. |
| Denial-of-Service (DoS) Attacks | An attacker overwhelms the system with traffic to prevent legitimate users from accessing resources. | Implement rate limiting, traffic filtering, and load balancing; use redundancy to mitigate the impact of failures. |
| Data Injection Attacks | Malicious data is introduced into the system. | Validate all input data, use checksums or message authentication codes to detect corruption and tampering, and employ data sanitization techniques. |
| Unauthorized Access | An attacker reads or modifies sensitive data or resources without permission. | Implement strong authentication (such as multi-factor authentication), role-based access control, and regular security audits. |
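Rate limiting, listed as a DoS countermeasure in the table above, is often implemented as a token bucket: bursts up to a fixed size are allowed, but the sustained request rate is capped. The class below is a minimal single-process sketch with hypothetical names; a real deployment would keep one bucket per client and enforce it at the edge.

```python
import time
from typing import Optional


class TokenBucket:
    """Hypothetical rate limiter: admit a request only if a token is available.

    Tokens refill continuously at `rate` per second, up to `capacity`.
    """

    def __init__(self, rate: float, capacity: float):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity          # start full so an initial burst is allowed
        self.last: Optional[float] = None

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        if self.last is not None:
            # Refill in proportion to the time elapsed since the previous call.
            elapsed = max(0.0, now - self.last)
            self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False
```

Rate limiting protects the service itself; it does not stop a volumetric attack that saturates the network link upstream, which is why the table pairs it with traffic filtering and redundancy.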

Case Studies and Examples

Real-world systems demonstrate the critical need for robust design principles to handle network unreliability. Analyzing successful implementations, alongside those that faltered, provides valuable insights for building resilient distributed systems. This section explores exemplary systems, detailing their strategies for handling network partitions, message loss, and other potential failures.

Successful Implementations of Resilient Distributed Systems

Numerous distributed systems have successfully incorporated strategies to mitigate network unreliability. These systems demonstrate that careful planning and well-chosen designs can dramatically improve system resilience. The specific design principles applied often differ, reflecting the unique requirements and constraints of each system.

- Apache Kafka uses a distributed, replicated log for high-throughput message streaming. Each topic partition is replicated across multiple brokers and persisted to disk, so data survives the failure of individual brokers. When a partition's leader fails, one of its in-sync replicas can take over, which lets data keep flowing while some nodes are unavailable. Kafka's design prioritizes fault tolerance and high availability, making it a prime example of how distributed systems can handle network issues effectively.

- Apache Cassandra prioritizes availability and fault tolerance through a decentralized, masterless storage architecture. Data is replicated across multiple nodes, so the cluster stays available even when several nodes fail, provided enough replicas of each piece of data remain reachable. Rather than always enforcing strong consistency, Cassandra offers tunable consistency: clients choose per request how many replicas must respond, trading consistency against availability and latency. This makes it a compelling model for designing resilient data stores.

- Google Spanner supports globally distributed transactions. It combines Paxos-based replication across data centers with TrueTime, a clock API that exposes bounded clock uncertainty, to provide externally consistent transactions. This lets Spanner keep data consistent across replicas despite node failures and network partitions, at the cost of extra coordination latency. Its design highlights the importance of careful coordination mechanisms for handling distributed data.
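The consistency/availability trade-off behind Cassandra's per-request consistency levels (for example `ONE` or `QUORUM`) reduces to simple quorum arithmetic. With a replication factor `n`, a write acknowledged by `w` replicas and a read answered by `r` replicas must share at least one replica whenever `r + w > n`. The functions below are a hypothetical, simplified model that ignores hinted handoff, read repair, multi-datacenter levels, and timestamp-based conflict resolution.

```python
def quorum(n: int) -> int:
    """Smallest majority of n replicas."""
    return n // 2 + 1


def reads_overlap_writes(n: int, w: int, r: int) -> bool:
    """True if every r-replica read set must intersect every w-replica write set.

    Two subsets of n replicas of sizes w and r are forced to share a member
    exactly when r + w > n (pigeonhole principle).
    """
    return r + w > n


def can_serve(n: int, w: int, r: int, failed: int) -> bool:
    """True if both writes and reads can still reach enough live replicas."""
    alive = n - failed
    return alive >= w and alive >= r
```

With `n = 3`, quorum reads and writes (`r = w = 2`) overlap and survive one failed replica, while `r = w = 1` survives two failures but no longer guarantees that a read sees the latest acknowledged write.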

Lessons Learned from Failures

Examining failed systems provides valuable insights into potential pitfalls and areas needing improvement. These lessons often reveal that neglecting fundamental design principles, like data consistency protocols or robust monitoring mechanisms, can lead to cascading failures and significant operational issues.

- Several distributed systems have experienced outages due to insufficient replication strategies. Systems that did not properly account for potential network issues suffered significant data loss and service disruptions. The lessons learned underscore the importance of thorough replication and redundancy planning in distributed systems.

- In systems that lacked effective monitoring and recovery mechanisms, the impact of network outages was often magnified. The lack of robust mechanisms to detect and respond to failures resulted in longer outage durations and hampered the restoration of service. This illustrates the critical role of proactive monitoring and automated recovery strategies in ensuring system resilience.
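A basic building block for detecting failures is a heartbeat timeout: each node reports periodically, and nodes that fall silent for too long are flagged. The class below is a hypothetical minimal sketch; production detectors often adapt the timeout to observed heartbeat intervals.

```python
class HeartbeatDetector:
    """Hypothetical detector: suspect a node if its last heartbeat is older than `timeout`."""

    def __init__(self, timeout: float):
        self.timeout = timeout
        self.last_seen: dict = {}  # node id -> time of last heartbeat

    def heartbeat(self, node: str, now: float) -> None:
        self.last_seen[node] = now

    def suspects(self, now: float) -> list:
        """Nodes silent for longer than the timeout, sorted for stable output."""
        return sorted(n for n, t in self.last_seen.items() if now - t > self.timeout)
```

A timeout cannot distinguish a crashed node from a slow or partitioned one, so a suspicion should trigger cautious recovery (for example, fencing the node before promoting a replacement) rather than an assumption that the node is dead.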

Application of Design Principles in Case Studies

The successful implementations often exemplify the application of key design principles for network unreliability. These principles, including data replication, redundancy, and fault tolerance, are consistently applied in a variety of systems to address the inherent risks of distributed environments.

| Case Study | System | Design Principle Application | Key Lessons Learned |
|---|---|---|---|
| Apache Kafka | Message Streaming Platform | Distributed log, replication, fault tolerance | High throughput, resilience to node failures, efficient recovery from message loss |
| Cassandra | Distributed Database | Decentralized architecture, data replication, high availability | Robust handling of failures, high availability even with multiple node failures, efficient data recovery |
| Google Spanner | Global Database | Distributed consensus protocols, sophisticated replication strategies, robust monitoring | Ensuring data consistency and reliability across a global network, resilience to network partitions, effective coordination mechanisms |

Performance Evaluation and Testing

Evaluating the performance of distributed systems designed for network unreliability is crucial for ensuring their robustness and efficiency. This involves understanding how the system responds to various network conditions, including failures and delays. Effective testing methods are essential to identify potential bottlenecks and weaknesses before deployment. Thorough performance evaluation enables informed decisions about system architecture and resource allocation.

Methods for Evaluating System Performance

Performance evaluation should take a comprehensive view of the system's behavior under stress, covering metrics such as throughput, latency, and resource utilization. Statistical analysis reveals trends and patterns in how the system responds to network challenges. For example, examining the full latency distribution, and tail percentiles such as the 99th percentile in particular, shows how often high-latency events occur (something an average can hide) and can point to components that struggle under network stress.
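Tail percentiles are straightforward to compute from recorded samples. The sketch below uses the nearest-rank definition, one of several common percentile definitions (Python's `statistics.quantiles` offers interpolating variants); the helper names are hypothetical.

```python
import math


def percentile(samples: list, p: float) -> float:
    """Nearest-rank percentile of the samples, for 0 < p <= 100."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need at least one sample and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def latency_summary(samples_ms: list) -> dict:
    """Mean alongside median and tail percentiles of latency samples."""
    return {
        "mean": sum(samples_ms) / len(samples_ms),
        "p50": percentile(samples_ms, 50),
        "p99": percentile(samples_ms, 99),
        "max": max(samples_ms),
    }
```

For instance, if 98 of 100 requests take 10 ms and 2 take 500 ms, the mean is 19.8 ms and the median is 10 ms, while p99 is 500 ms; only the tail percentile exposes the slow requests.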

Strategies for Testing Resilience in Simulated Network Conditions

Simulating network unreliability is vital for testing the resilience of distributed systems. This includes simulating various failure scenarios, such as network partitions, packet loss, and node failures. The simulations should reflect realistic network conditions, enabling accurate assessments of system behavior. Tools like network emulators and simulators can be used to create realistic network environments. For instance, emulators can be programmed to inject delays or packet loss at specific intervals, simulating network congestion or outages.

This controlled environment allows for detailed observation of system responses.
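Alongside network emulators, which operate on real traffic, fault injection can also be done in-process for unit-level tests by wrapping the transport in a simulated faulty link. The sketch below is hypothetical (`FaultProfile` and `FaultyLink` are illustrative names) and uses a seeded random generator so that each failure scenario is reproducible.

```python
import random
from dataclasses import dataclass


@dataclass
class FaultProfile:
    loss_rate: float = 0.0       # probability that a message is dropped
    base_delay_ms: float = 1.0   # one-way delay on a healthy link
    spike_rate: float = 0.0      # probability of a latency spike
    spike_ms: float = 200.0      # extra delay added during a spike
    partitioned: bool = False    # if True, every message is dropped


class FaultyLink:
    """Hypothetical simulated link that drops or delays messages per a FaultProfile."""

    def __init__(self, profile: FaultProfile, seed: int = 0):
        self.profile = profile
        self.rng = random.Random(seed)  # seeded so test runs are reproducible

    def send(self, msg):
        """Return (msg, delay_ms) if delivered, or None if the message is dropped."""
        p = self.profile
        if p.partitioned or self.rng.random() < p.loss_rate:
            return None
        delay = p.base_delay_ms
        if self.rng.random() < p.spike_rate:
            delay += p.spike_ms
        return msg, delay
```

Switching the profile mid-test (for example, setting `partitioned=True` and later back to `False`) lets a test observe how the system behaves during a partition and how it recovers afterwards.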

Examples of Tools and Techniques Used in Performance Testing

Various tools and techniques are available for performance testing. Load testing tools such as JMeter or Gatling are commonly used to simulate high loads and measure system performance under pressure; they can generate large numbers of requests that mimic real-world usage patterns. For controlled network environments, discrete-event network simulators such as ns-3 and network emulators such as Mininet are commonly used.

These tools provide flexibility to model various network topologies and fault conditions.

Measuring the Impact of Network Failures on System Performance

Assessing the impact of network failures is crucial for understanding the system’s resilience. This involves measuring metrics like throughput degradation, latency increase, and error rates. The impact can be measured by comparing system performance in a reliable network versus one with simulated failures. Monitoring tools can capture real-time data during tests to identify the effects of failures on specific components.

By observing the relationship between network conditions and system performance, a clear picture of system vulnerabilities emerges.
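Comparing a healthy baseline run with a fault-injected run reduces to a few ratios. The `RunResult` fields and the `compare` helper below are hypothetical; they assume the load generator reports attempted requests, failed requests, run duration, and p99 latency. Throughput here counts only successful requests, so that failures under faults do not inflate the figure.

```python
from dataclasses import dataclass


@dataclass
class RunResult:
    requests: int      # requests attempted
    errors: int        # requests that failed or timed out
    duration_s: float  # wall-clock length of the run
    p99_ms: float      # 99th-percentile latency of successful requests

    @property
    def throughput(self) -> float:
        """Successful requests per second."""
        return (self.requests - self.errors) / self.duration_s

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests


def compare(baseline: RunResult, faulty: RunResult) -> dict:
    """Degradation of the faulty run relative to the healthy baseline."""
    return {
        "throughput_drop_pct": 100 * (1 - faulty.throughput / baseline.throughput),
        "p99_increase_x": faulty.p99_ms / baseline.p99_ms,
        # Absolute difference in percentage points, since a healthy baseline
        # may have zero errors and a ratio would divide by zero.
        "error_rate_delta_pts": 100 * (faulty.error_rate - baseline.error_rate),
    }
```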

Performance Metrics and Testing Procedures for Distributed Systems

| Performance Metric | Testing Procedure | Measurement Unit |
|---|---|---|
| Throughput | Measure the rate at which data is processed and transferred. | Requests/second, messages/minute |
| Latency | Measure the time taken for a request to be processed and returned. | Milliseconds, microseconds |
| Error Rate | Measure the frequency of errors or failures. | Percentage of requests, number of errors |
| Resource Utilization | Monitor CPU, memory, and disk usage during tests. | Percentage of capacity, absolute amounts (e.g., bytes of memory) |
| Availability | Measure the uptime and downtime of the system. | Percentage of uptime, downtime duration |
| Consistency | Verify the correctness of data after failures. | Percentage of consistent records, number of discrepancies |

This table outlines key performance metrics and testing procedures for evaluating distributed systems under various network conditions. Systematic measurement of these metrics gives a deeper understanding of system resilience and supports informed adjustments to improve performance.
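Two of the rows above, availability and consistency, reduce to simple calculations once test data is collected. The helpers below are hypothetical sketches; `discrepancies` assumes each replica snapshot is a dictionary with hashable values.

```python
def availability(uptime_s: float, downtime_s: float) -> float:
    """Fraction of the observation window during which the system was up."""
    return uptime_s / (uptime_s + downtime_s)


def downtime_budget_s(target: float, window_s: float) -> float:
    """Maximum downtime allowed within window_s seconds to meet `target` availability."""
    return (1 - target) * window_s


def discrepancies(replicas: list) -> int:
    """Count keys whose values differ across replica snapshots after a failure test.

    A key missing from some replica counts as a discrepancy.
    """
    keys = set().union(*replicas)
    return sum(1 for k in keys if len({r.get(k) for r in replicas}) > 1)
```

As a worked example, a 99.9% availability target over a 30-day window (2,592,000 seconds) allows `0.001 × 2,592,000 = 2,592` seconds, or about 43 minutes, of downtime.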

Future Trends and Research Directions

Designing for network unreliability in distributed systems is an ongoing process, driven by the ever-increasing complexity and scale of these systems. Emerging trends in network technologies, coupled with the need for enhanced resilience and performance, are shaping future research directions. This section will explore these evolving trends and the potential research avenues they open.

Emerging Trends in Network Technologies

Network technologies are constantly evolving, introducing new challenges and opportunities for designing resilient distributed systems. The rise of 5G and beyond, with its focus on ultra-low latency and high bandwidth, presents both opportunities and complexities. Further, the growth of edge computing and the Internet of Things (IoT) introduces new forms of network heterogeneity and variability, impacting system design.

The increased use of software-defined networking (SDN) and network function virtualization (NFV) offers greater flexibility and control but also introduces new points of failure.

Open Research Problems in Network Unreliability

Several key research problems remain in designing systems robust against network unreliability. Developing techniques for accurate network state prediction and modeling remains a critical area. Furthermore, creating adaptive routing and load-balancing strategies that react to real-time network conditions is a significant challenge. The development of fault-tolerant communication protocols that can effectively manage and recover from failures is crucial.
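A classic baseline for estimating network state is the smoothed round-trip-time estimator TCP uses to set its retransmission timeout (RTO), specified in RFC 6298. The sketch follows the RFC's constants (alpha = 1/8, beta = 1/4, K = 4), its 1-second initial RTO, and its recommended 1-second floor and optional 60-second cap; the class name and parameters are hypothetical. It is shown as a point of comparison, not as a solution to the open problem.

```python
from typing import Optional


class RttEstimator:
    """Hypothetical helper: smoothed RTT and retransmission timeout per RFC 6298."""

    ALPHA = 1 / 8        # gain for the smoothed RTT
    BETA = 1 / 4         # gain for the RTT variation
    K = 4                # variation multiplier
    INITIAL_RTO_S = 1.0  # RTO before any RTT sample exists

    def __init__(self, granularity_s: float = 0.001,
                 min_rto_s: float = 1.0, max_rto_s: float = 60.0):
        self.granularity_s = granularity_s
        self.min_rto_s = min_rto_s
        self.max_rto_s = max_rto_s
        self.srtt: Optional[float] = None
        self.rttvar: Optional[float] = None

    def observe(self, rtt_s: float) -> None:
        """Fold one RTT sample into the estimates."""
        if self.srtt is None:
            # The first sample initializes both estimates.
            self.srtt = rtt_s
            self.rttvar = rtt_s / 2
        else:
            # RTTVAR is updated using the previous SRTT, so the order matters.
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - rtt_s)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * rtt_s

    @property
    def rto_s(self) -> float:
        if self.srtt is None:
            return self.INITIAL_RTO_S
        rto = self.srtt + max(self.granularity_s, self.K * self.rttvar)
        # Clamp to the configured floor and cap.
        return min(self.max_rto_s, max(self.min_rto_s, rto))
```

The RFC also specifies details this sketch omits: RTT samples must not be taken from retransmitted messages (Karn's algorithm), and the RTO is doubled after each timeout.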

Potential Future Directions for Research and Development

Future research should focus on more sophisticated and adaptive mechanisms for dealing with network unreliability. This includes intelligent network monitoring and prediction systems, as well as adaptive, self-healing systems that recover from failures without manual intervention. Integrating security considerations into fault tolerance mechanisms is also critical. Finally, comprehensive evaluation frameworks that assess and compare different design strategies will be vital.

The emergence of new network topologies and architectures, such as mesh networks and decentralized peer-to-peer systems, will require correspondingly new design approaches.

How Future Trends Can Affect System Design

The aforementioned trends will profoundly affect system design. For instance, the rise of 5G and edge computing will necessitate the development of distributed systems capable of operating in highly dynamic and heterogeneous environments. The increasing complexity of networks will require more sophisticated and automated approaches to network monitoring and management. Furthermore, distributed systems will need to become more adaptive, reacting in real-time to network conditions.

Finally, security concerns will need to be tightly integrated into resilience strategies.

Summary of Current Trends and Potential Future Research Directions

| Current Trend | Potential Future Research Direction |
|---|---|
| Increased network complexity and heterogeneity (5G, IoT, edge computing) | Development of adaptive, self-healing systems capable of operating in dynamic environments; enhanced network monitoring and prediction mechanisms |
| Software-defined networking (SDN) and network function virtualization (NFV) | Integration of security and fault tolerance into SDN and NFV frameworks; development of adaptive routing and load-balancing strategies that exploit SDN and NFV capabilities |
| Real-time network conditions | Development of accurate network state prediction models and adaptive communication protocols |
| Security concerns | Integration of security considerations into fault tolerance mechanisms; development of secure and resilient communication protocols |

Summary

In conclusion, designing for network unreliability in distributed systems requires a multi-faceted approach encompassing robust design principles, careful data management, effective communication protocols, and proactive monitoring. By addressing the challenges of network failures head-on, we can create highly resilient systems capable of handling diverse scenarios and ensuring consistent performance, even under adverse network conditions. The future of distributed systems hinges on our ability to anticipate and overcome these challenges.

Commonly Asked Questions

What are common types of network failures?

Common network failures include packet loss, latency spikes, network partitions, and connection timeouts. These failures can disrupt data transmission and lead to system instability.

How do I choose the right data consistency model for my system?

The optimal data consistency model depends on the specific needs of the application. Factors to consider include the acceptable level of data inconsistency, the frequency of updates, the tolerance for data loss, and how much latency or unavailability the application can accept during network partitions.

What are some examples of reliable communication protocols?

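TCP provides reliable, in-order delivery of a byte stream within a single connection by using sequence numbers, acknowledgments, and retransmission. Application-level protocols often add their own acknowledgments, retransmissions, and sequence numbers on top, because TCP's guarantees end when a connection breaks: after a reconnect, the sender cannot tell which messages the receiver actually processed.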
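The acknowledgment-and-retransmission pattern can be sketched as a stop-and-wait protocol over a simulated lossy link. Sequence numbers let the receiver discard the duplicates that arise when an acknowledgment, rather than the data, is lost. This is a teaching sketch with hypothetical names (`Receiver`, `send_all`); TCP itself uses sliding windows and adaptive timeouts rather than sending one message at a time.

```python
import random


class Receiver:
    """Hypothetical receiver: delivers each sequence number once, in order."""

    def __init__(self):
        self.expected = 0     # next sequence number to deliver
        self.delivered = []

    def on_message(self, seq: int, payload) -> int:
        if seq == self.expected:
            self.delivered.append(payload)
            self.expected += 1
        # seq < expected is a retransmitted duplicate (its ack was lost):
        # it is not delivered again, but it is re-acknowledged.
        return self.expected - 1  # cumulative ack: highest in-order seq delivered


def send_all(payloads, receiver: Receiver, loss_rate: float,
             seed: int = 0, max_attempts: int = 50) -> int:
    """Stop-and-wait sender; returns total transmissions, including retries."""
    rng = random.Random(seed)  # seeded for a reproducible loss pattern
    transmissions = 0
    for seq, payload in enumerate(payloads):
        for _ in range(max_attempts):
            transmissions += 1
            if rng.random() < loss_rate:
                continue  # data lost in transit; retransmit after a timeout
            ack = receiver.on_message(seq, payload)
            if rng.random() < loss_rate:
                continue  # ack lost; the retransmission will be a duplicate
            if ack >= seq:
                break     # acknowledged; move on to the next message
        else:
            raise TimeoutError(f"message {seq} not acknowledged after {max_attempts} attempts")
    return transmissions
```

Even with 30% loss in each direction, the receiver ends up with every message exactly once and in order; the cost shows up as extra transmissions, which is the throughput penalty retransmission protocols pay on lossy links.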

How can I measure the impact of network failures on system performance?

Performance metrics such as response time, throughput, and error rates provide valuable insights into the impact of network failures on system performance. These metrics can be used to identify bottlenecks and optimize system resilience.