The evolution of computing has brought forth Function-as-a-Service (FaaS), a paradigm shift that enables developers to execute code without managing the underlying infrastructure. This architectural approach offers significant advantages, including scalability and cost efficiency. However, while cloud-based FaaS solutions dominate the landscape, deploying a FaaS platform on-premise offers unique benefits, particularly for organizations prioritizing data sovereignty, security, and control over their infrastructure.

This guide delves into the intricacies of building and managing a FaaS platform within your own environment, providing a roadmap for achieving a robust and efficient serverless experience.

This exploration encompasses various facets, from architectural design and infrastructure selection to function development, security implementation, and cost optimization. We will examine the critical components required for an on-premise FaaS platform, compare different architectural approaches, and delve into the nuances of hardware and software choices. Moreover, the guide offers practical insights into setting up a development environment, developing and deploying functions, and managing function execution and scaling.

By the end of this discussion, readers will gain a comprehensive understanding of how to build, operate, and maintain a FaaS platform on-premise, enabling them to harness the power of serverless computing while retaining control over their IT resources.

Introduction to FaaS and On-Premise Deployment

Function-as-a-Service (FaaS) represents a paradigm shift in cloud computing, enabling developers to execute code without managing the underlying infrastructure. This allows for a more agile and cost-effective approach to software development. Deploying a FaaS platform on-premise offers unique advantages compared to cloud-based deployments, particularly concerning data sovereignty, security, and control.

Core Concepts of Function-as-a-Service (FaaS)

FaaS is a serverless execution model where developers write individual functions that are triggered by events. These events can range from HTTP requests to database updates. The FaaS provider handles all aspects of infrastructure management, including scaling, provisioning, and patching.

Key advantages of FaaS include:

- Reduced Operational Overhead: Developers are freed from managing servers, operating systems, and infrastructure, allowing them to focus on code.

- Automatic Scaling: The platform automatically scales resources based on demand, ensuring optimal performance and cost efficiency.

- Pay-per-Use Pricing: Users are charged only for the actual compute time consumed by their functions, leading to potentially significant cost savings, especially for applications with intermittent workloads.

- Increased Agility: Rapid deployment and iteration cycles are enabled due to the streamlined development process.

- Event-Driven Architecture: FaaS facilitates the building of event-driven applications, where functions are triggered by events, enabling highly responsive and scalable systems.
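To make the event-driven model concrete, here is a minimal sketch in Python. It is not the API of any particular platform: the `on` decorator, the `dispatch` function, and the event names `http.request` and `db.row_updated` are hypothetical stand-ins for what a real FaaS platform provides.

```python
from typing import Any, Callable, Dict

Handler = Callable[[Dict[str, Any]], Dict[str, Any]]

# Hypothetical in-process registry mapping event types to functions.
_handlers: Dict[str, Handler] = {}


def on(event_type: str) -> Callable[[Handler], Handler]:
    """Register a function to run when an event of this type arrives."""
    def register(fn: Handler) -> Handler:
        _handlers[event_type] = fn
        return fn
    return register


@on("http.request")
def greet(event: Dict[str, Any]) -> Dict[str, Any]:
    # Triggered by an HTTP request event.
    name = event.get("query", {}).get("name", "world")
    return {"status": 200, "body": f"Hello, {name}!"}


@on("db.row_updated")
def audit(event: Dict[str, Any]) -> Dict[str, Any]:
    # Triggered by a database update event.
    return {"status": 200, "body": f"row {event['row_id']} changed"}


def dispatch(event_type: str, event: Dict[str, Any]) -> Dict[str, Any]:
    """Stand-in for the platform: look up the function and invoke it."""
    handler = _handlers.get(event_type)
    if handler is None:
        return {"status": 404, "body": f"no function for {event_type}"}
    return handler(event)
```

Each function only knows about its own event. Routing, scaling, and provisioning are left to the platform, which is the separation the list above describes.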

Benefits of On-Premise FaaS Deployment

Deploying a FaaS platform on-premise offers distinct advantages, particularly in environments where data residency, security, and control are paramount.

- Data Sovereignty: Organizations maintain complete control over their data, ensuring compliance with regulations that mandate data storage within specific geographical boundaries. For example, financial institutions and healthcare providers often choose on-premise deployments to simplify compliance with data protection rules such as GDPR or HIPAA.

- Enhanced Security: On-premise deployments allow for stricter control over security policies and access controls. Organizations can implement their own security measures, including firewalls, intrusion detection systems, and vulnerability scanning, tailored to their specific needs.

- Increased Control: Organizations have complete control over the infrastructure, including hardware and software, which can be customized to meet specific performance and compliance requirements. This level of control can be crucial for applications with unique performance needs or stringent security requirements.

- Reduced Latency: For applications with low-latency requirements, deploying FaaS on-premise can reduce the network latency associated with cloud-based deployments, especially if the application is located close to the users or data sources.

- Cost Optimization (for predictable workloads): For workloads with predictable and consistent resource requirements, on-premise deployments can sometimes be more cost-effective than cloud-based deployments, especially when considering the long-term costs of cloud services.

Key Differences Between Serverless and Traditional Application Architectures

Serverless architectures, including FaaS, differ significantly from traditional application architectures, primarily in how infrastructure is managed and how applications are deployed.

- Infrastructure Management: Traditional architectures require developers to manage servers, operating systems, and other infrastructure components. Serverless architectures abstract away infrastructure management, allowing developers to focus on code.

- Scaling: Traditional architectures often require manual scaling or the use of autoscaling tools. Serverless architectures automatically scale based on demand.

- Deployment: Traditional applications are typically deployed as monolithic applications or microservices, often requiring complex deployment pipelines. Serverless applications are deployed as individual functions, simplifying the deployment process.

- Resource Allocation: Traditional architectures involve allocating resources in advance, regardless of actual usage. Serverless architectures allocate resources dynamically, based on function invocations.

- Cost Model: Traditional architectures typically involve fixed costs for infrastructure, regardless of usage. Serverless architectures use a pay-per-use model, where costs are based on the number of function invocations and the resources consumed.

For example, consider a traditional web application with a database. The application server, database server, and load balancer all need to be provisioned and maintained. In a serverless architecture, the application logic could be implemented as FaaS functions, triggered by HTTP requests. The database could be a managed service, such as a cloud-based database. The FaaS platform would automatically scale the functions based on the number of requests, and the user would only be charged for the compute time consumed by the functions.

This demonstrates the efficiency of serverless architectures in managing resources and costs.
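The difference between the two cost models can be worked through with a little arithmetic. The sketch below is illustrative only: the formulas follow the description above (fixed cost per server versus cost per invocation and per resource consumed), and every price and parameter name is a hypothetical assumption rather than a real tariff.

```python
def fixed_monthly_cost(servers: int, cost_per_server: float) -> float:
    """Traditional model: pay for provisioned servers regardless of usage."""
    return servers * cost_per_server


def pay_per_use_monthly_cost(
    invocations: int,
    avg_duration_s: float,
    memory_gb: float,
    price_per_gb_second: float,
    price_per_million_invocations: float,
) -> float:
    """Serverless model: pay for invocations plus memory-time consumed."""
    compute = invocations * avg_duration_s * memory_gb * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_invocations
    return compute + requests


def break_even_invocations(
    fixed_cost: float,
    avg_duration_s: float,
    memory_gb: float,
    price_per_gb_second: float,
    price_per_million_invocations: float,
) -> float:
    """Invocation count at which pay-per-use costs as much as the fixed setup."""
    per_invocation = (
        avg_duration_s * memory_gb * price_per_gb_second
        + price_per_million_invocations / 1_000_000
    )
    return fixed_cost / per_invocation


# Hypothetical numbers: 1M invocations, 200 ms each, 512 MB of memory.
monthly = pay_per_use_monthly_cost(1_000_000, 0.2, 0.5, 0.00002, 0.20)
```

Below the break-even point the pay-per-use model is cheaper. Above it, fixed capacity wins, which is why predictable, steady workloads can favor on-premise hardware.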

Choosing the Right Architecture

The architecture of an on-premise Function-as-a-Service (FaaS) platform dictates its performance, scalability, security, and manageability. Selecting the appropriate architecture is a critical decision, influencing the platform’s ability to meet the specific demands of the organization. This choice requires careful consideration of several factors, including resource availability, business requirements, and operational expertise.

Essential Architectural Components

A robust on-premise FaaS platform comprises several key components that work in concert to provide the core functionality of executing functions in response to events.

- API Gateway: Acts as the entry point for all function invocations. It handles routing, authentication, authorization, and rate limiting. This component is the primary interface between users, applications, and the FaaS platform. It often integrates with security protocols such as OAuth 2.0 or OpenID Connect to secure access to functions.

- Function Runtime Environment: Provides the execution environment for functions. This includes the necessary language runtimes (e.g., Node.js, Python, Go), dependencies, and isolation mechanisms to prevent interference between functions. It is designed to quickly provision and deprovision function instances.

- Function Invoker: Manages the lifecycle of function instances. It receives invocation requests from the API gateway, schedules functions for execution, monitors their execution, and handles scaling based on demand. This component is responsible for orchestrating the execution of functions.

- Event Triggering System: Enables functions to be triggered by various events, such as HTTP requests, database changes, message queue updates, or scheduled timers. It acts as the link between external events and function execution.

- Storage Layer: Provides persistent storage for function code, dependencies, and logs. It may include object storage for function code, and databases or file systems for function data and logs.

- Monitoring and Logging: Collects metrics and logs from function executions. This data is essential for monitoring performance, troubleshooting issues, and optimizing the platform. It should include dashboards for real-time monitoring and alerting capabilities.

- Resource Manager: Allocates and manages the underlying compute resources (CPU, memory, network) required by the function runtime environment. It may integrate with virtualization technologies or container orchestration systems.
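How these components interact can be sketched in a few lines of Python. This is a toy, in-process model and not the design of any specific platform: the `Gateway` and `Invoker` classes, the `/function/<name>` path layout, and the per-key request quota are assumptions chosen for illustration.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set, Tuple


@dataclass
class Invoker:
    """Runs registered functions and records one log entry per invocation."""
    functions: Dict[str, Callable[[dict], dict]] = field(default_factory=dict)
    logs: List[Tuple[str, float]] = field(default_factory=list)

    def invoke(self, name: str, payload: dict) -> dict:
        started = time.perf_counter()
        result = self.functions[name](payload)
        # Monitoring and logging: keep the function name and its duration.
        self.logs.append((name, time.perf_counter() - started))
        return result


@dataclass
class Gateway:
    """Entry point: authenticates, rate-limits, and routes to the invoker."""
    invoker: Invoker
    api_keys: Set[str]
    max_requests_per_key: int = 100
    _counts: Dict[str, int] = field(default_factory=dict)

    def handle(self, path: str, api_key: str, payload: dict) -> dict:
        if api_key not in self.api_keys:
            return {"status": 401}  # authentication failed
        used = self._counts.get(api_key, 0)
        if used >= self.max_requests_per_key:
            return {"status": 429}  # rate limit reached
        self._counts[api_key] = used + 1
        name = path.rsplit("/", 1)[-1]  # routing: last path segment
        if name not in self.invoker.functions:
            return {"status": 404}
        return {"status": 200, "body": self.invoker.invoke(name, payload)}
```

A production gateway would use time-windowed rate limits and token-based authentication such as OAuth 2.0. The fixed counter here only shows where those checks sit in the request path.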

Comparing Architectural Approaches

Different architectural approaches can be employed to build an on-premise FaaS platform. Each approach has its own set of trade-offs in terms of performance, resource utilization, and complexity. The following table compares three primary architectural approaches: container-based, virtual machine-based, and Kubernetes-based.

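| Architecture | Pros | Cons | Ideal Use Cases |
|---|---|---|---|
| Container-Based | Lower resource overhead | Requires careful configuration to ensure secure isolation | Resource-constrained environments |
| Virtual Machine-Based | Strong isolation between functions | Higher resource requirements | Applications that require strict isolation and security |
| Kubernetes-Based | Automatic scaling that adapts to fluctuating demand | Calls for expertise in containerization and orchestration | Deployments focused on rapid scaling and deployment, or expecting significant traffic |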

Selecting an Architecture

Choosing the right architecture requires careful consideration of several factors. These include resource availability, business requirements, and the team’s operational expertise.

- Resource Availability: The available hardware resources, such as CPU, memory, and storage, directly impact the choice of architecture. For example, if resources are constrained, a container-based architecture may be preferred due to its lower overhead. In contrast, a virtual machine-based architecture may require more resources, making it less suitable for resource-constrained environments.

- Business Requirements: The specific needs of the business, such as the types of applications being deployed, the expected traffic volume, and the required level of security, will influence the architectural decision. If applications require strict isolation and security, a virtual machine-based approach might be favored. If the focus is on rapid scaling and deployment, a Kubernetes-based architecture could be more suitable.

- Operational Expertise: The team’s existing skills and experience with different technologies play a crucial role. A team with expertise in containerization and orchestration might choose a Kubernetes-based architecture. In contrast, a team with experience in virtualization may opt for a virtual machine-based approach.

- Scalability Needs: Consider the anticipated growth in function invocations. Kubernetes-based architectures are designed to scale functions automatically, adapting to fluctuations in demand. If the system is expected to handle significant traffic, a highly scalable architecture is essential.

- Security Requirements: Different architectures offer varying levels of isolation and security. Virtual machines provide strong isolation, while containers require careful configuration to ensure security. Choose an architecture that aligns with the security policies and compliance requirements of the organization.

Selecting Infrastructure and Hardware

The success of an on-premise Function-as-a-Service (FaaS) platform hinges significantly on the careful selection of infrastructure and hardware. The choices made here directly impact performance, scalability, and cost-effectiveness. A poorly designed infrastructure can lead to bottlenecks, impacting function execution times and overall system reliability. Conversely, a well-engineered infrastructure will provide a robust and efficient environment for running serverless workloads, ensuring optimal resource utilization and facilitating horizontal scaling as demand fluctuates.

This section provides a detailed examination of the hardware requirements and infrastructure considerations necessary for building a resilient on-premise FaaS platform.

Hardware Requirements for On-Premise FaaS

The hardware requirements for an on-premise FaaS platform are contingent on the expected workload, the number of concurrent function invocations, and the size of the functions being executed. Underestimating these requirements can lead to performance degradation and service disruptions. It’s essential to perform load testing and capacity planning to accurately determine the necessary resources. The following factors are crucial for consideration:

- CPU: The Central Processing Unit (CPU) is a primary determinant of function execution speed. The required CPU cores depend on the computational demands of the functions.

  - For CPU-intensive functions, such as image processing or scientific calculations, CPUs with a higher core count and higher clock speed are recommended. Consider processors like the Intel Xeon series or AMD EPYC series, known for their high performance.

  - For less CPU-intensive functions, such as simple API calls or data transformations, lower-end CPUs might suffice. However, even in these cases, it is essential to avoid under-provisioning to ensure adequate responsiveness.

- RAM: Random Access Memory (RAM) is critical for storing the code, data, and runtime environment of the functions. Insufficient RAM can lead to swapping, significantly impacting performance.

  - The amount of RAM required depends on the size of the functions and the data they process. Consider the memory footprint of the function’s code, any dependencies it requires, and the size of the data it manipulates.

  - Functions that handle large datasets or complex computations will require more RAM. Monitoring memory usage during load testing is vital to identify potential bottlenecks and prevent out-of-memory errors.

  - A general guideline is to allocate sufficient RAM to accommodate the peak memory usage of the function plus a buffer for the operating system and the FaaS platform’s runtime environment.

- Storage: Storage is required for the function code, dependencies, logs, and potentially for temporary data storage during function execution. The type of storage significantly impacts performance.

  - SSD vs. HDD: Solid State Drives (SSDs) offer significantly faster read and write speeds compared to Hard Disk Drives (HDDs). For functions that require fast access to code or data, SSDs are highly recommended. HDDs can be considered for archival storage or less performance-critical tasks.

  - Storage Capacity: The required storage capacity depends on the size of the functions, the volume of logs, and the amount of temporary data storage needed. Capacity planning is essential to avoid running out of storage space.

  - Network Attached Storage (NAS) and Storage Area Network (SAN): For larger deployments, consider using NAS or SAN solutions to provide centralized and scalable storage. These solutions often offer features like data redundancy and high availability.

- Network: A high-performance network is essential for inter-service communication, function invocation, and accessing external resources.

  - Network Bandwidth: Ensure the network has sufficient bandwidth to handle the expected traffic. Consider the network requirements for function invocations, data transfers, and logging.

  - Network Latency: Low latency is crucial for fast function execution. Minimize network latency by using a network topology optimized for the FaaS platform.

  - Network Interface Cards (NICs): Use high-speed NICs, such as 10 Gigabit Ethernet or faster, to ensure sufficient network bandwidth.
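The RAM guideline above (peak function memory plus a buffer for the operating system and platform runtime) translates into a simple capacity estimate. The following is a rough sketch under stated assumptions: memory is treated as the only constraint, the function name `nodes_needed` and its parameters are hypothetical, and the example figures are not measurements.

```python
import math


def nodes_needed(
    peak_concurrency: int,
    memory_per_instance_mb: float,
    node_memory_mb: float,
    reserved_mb: float,
    headroom: float = 0.2,
) -> int:
    """Estimate how many nodes are needed to hold all concurrent instances.

    reserved_mb is the buffer for the OS and the FaaS runtime on each node;
    headroom is extra memory per instance on top of its measured peak.
    """
    usable_mb = node_memory_mb - reserved_mb
    if usable_mb <= 0:
        raise ValueError("reserved memory exceeds node memory")
    per_instance_mb = memory_per_instance_mb * (1 + headroom)
    instances_per_node = math.floor(usable_mb / per_instance_mb)
    if instances_per_node < 1:
        raise ValueError("a single instance does not fit on one node")
    return math.ceil(peak_concurrency / instances_per_node)


# Hypothetical sizing: 500 concurrent instances at 256 MB each,
# 64 GB nodes with 8 GB reserved and 25% headroom per instance.
example_nodes = nodes_needed(500, 256, 65536, 8192, headroom=0.25)
```

Real sizing should also account for CPU, network, and failover capacity, and the inputs should come from load testing rather than estimates.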

Selecting the Underlying Infrastructure

The choice of infrastructure significantly impacts the operational complexity, scalability, and cost of the FaaS platform. The primary options include bare metal servers, virtual machines (VMs), or a hybrid approach. Each option has its advantages and disadvantages. The decision should be based on factors like budget, expertise, and the desired level of control.

- Bare Metal Servers: Bare metal servers provide the highest level of performance and control. They are dedicated physical servers without a hypervisor, allowing for maximum resource utilization.

  - Advantages: Superior performance due to direct access to hardware, no virtualization overhead, and optimal resource allocation.

  - Disadvantages: Higher initial cost, more complex management, and less flexibility in resource allocation.

  - Use Cases: Ideal for workloads that require maximum performance, such as computationally intensive functions or functions with strict latency requirements.

- Virtual Machines (VMs): VMs offer a balance between performance and flexibility. They run on a hypervisor, allowing for resource virtualization and management.

  - Advantages: Improved resource utilization, easier management compared to bare metal, and the ability to scale resources dynamically.

  - Disadvantages: Overhead from the hypervisor, potentially lower performance compared to bare metal, and requires a robust virtualization infrastructure.

  - Use Cases: Suitable for most FaaS deployments, offering a good balance between performance, cost, and management complexity.

- Hybrid Approach: A hybrid approach combines bare metal servers and VMs. It allows for leveraging the advantages of both approaches.

  - Advantages: Optimized resource allocation, the ability to run performance-critical functions on bare metal, and the flexibility to scale other functions on VMs.

  - Disadvantages: Increased complexity in management and requires careful planning to optimize resource utilization.

  - Use Cases: Suitable for complex deployments with a mix of performance-critical and less demanding functions.

Designing a Scalable Infrastructure Setup

Designing a scalable infrastructure is essential to handle fluctuating workloads. The infrastructure must be able to automatically scale up or down based on demand. The following design principles are crucial:

- Horizontal Scaling: The primary scaling method should be horizontal scaling, which involves adding more instances of the function runtime environment as demand increases.

- Load Balancing: Implement a load balancer to distribute incoming requests across multiple function instances. This ensures that no single instance is overloaded. Consider using a round-robin or least-connections load-balancing algorithm.

- Auto-Scaling: Implement auto-scaling to automatically add or remove function instances based on metrics like CPU utilization, memory usage, or the number of pending requests. Tools like Kubernetes Horizontal Pod Autoscaler (HPA) can be used for this purpose.

- Stateless Functions: Design functions to be stateless, meaning they do not store any state locally. This allows for easy scaling, as any instance of the function can handle any request.

  - External State Storage: Use external storage solutions, such as databases or object storage, to store any necessary state.

  - Session Management: If session management is required, use a shared session store like Redis or Memcached.

- Resource Monitoring and Alerting: Implement robust monitoring and alerting to track resource utilization and identify potential bottlenecks.

  - Monitoring Tools: Use tools like Prometheus, Grafana, or the monitoring capabilities of your chosen FaaS platform to collect and visualize metrics.

  - Alerting Rules: Define alerting rules based on key metrics, such as CPU utilization, memory usage, and error rates. Configure alerts to notify administrators when thresholds are exceeded.

- Infrastructure as Code (IaC): Use IaC tools like Terraform or Ansible to automate the provisioning and management of the infrastructure. This allows for consistent deployments and easy scaling.

  - Automated Provisioning: Automate the creation of virtual machines, network configurations, and load balancers.

  - Configuration Management: Use IaC to configure the function runtime environment and any necessary dependencies.

- Capacity Planning: Regularly assess the infrastructure capacity and adjust resources as needed. Monitor workload patterns and forecast future demand to proactively scale the infrastructure.

  - Load Testing: Conduct load testing to simulate real-world traffic and identify performance bottlenecks.

  - Performance Benchmarking: Benchmark the performance of different function runtimes and infrastructure configurations to optimize resource utilization.
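Two of the principles above, auto-scaling and load balancing, reduce to small calculations. The sketch below uses the replica formula documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current replicas × current metric / target metric)) and a plain least-connections pick. The function names and the min/max defaults are assumptions, and the real HPA adds tolerance and stabilization behavior that is omitted here.

```python
import math
from typing import Dict


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to metric pressure, then clamp."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


def least_connections(active: Dict[str, int]) -> str:
    """Pick the instance with the fewest active connections.

    Ties go to the instance that appears first in the mapping.
    """
    if not active:
        raise ValueError("no instances available")
    return min(active, key=active.get)
```

For example, four instances averaging 90% CPU against a 60% target would be scaled to six, and a new request would go to whichever instance currently holds the fewest connections.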

Choosing FaaS Platform Software

Selecting the appropriate FaaS platform software is a critical step in establishing a functional on-premise FaaS environment. The choice significantly impacts development workflows, operational overhead, and overall system performance. This section focuses on evaluating and selecting the right software, covering available options, feature comparisons, and suitability assessments.

Open-Source and Commercial FaaS Platform Options

The FaaS landscape offers a range of options, from open-source projects providing flexibility and community support to commercial platforms offering comprehensive features and vendor-backed support. Understanding the strengths and weaknesses of each category is crucial for making an informed decision.

- Open-Source Platforms: These platforms are typically free to use and offer a high degree of customization. They benefit from community contributions, fostering innovation and rapid development. However, they often require a higher level of technical expertise for deployment, maintenance, and troubleshooting.

- Commercial Platforms: Commercial platforms often provide a more user-friendly experience with features like simplified deployment, pre-built integrations, and dedicated support. They come with associated costs, which may be subscription-based or based on usage.

Feature, Pricing, and Support Comparison

A detailed comparison of available platforms reveals significant differences in features, pricing, and support models. This analysis helps in aligning platform capabilities with specific project requirements and budget constraints. The following bullet points outline key features of selected platforms:

- Apache OpenWhisk: An open-source, distributed serverless platform.

  - Features: Supports multiple programming languages (Node.js, Python, Java, Swift, Go, PHP), event-driven architecture, REST API for function invocation, and integrated logging and monitoring.

  - Pricing: Free and open-source; cost is determined by the underlying infrastructure used for deployment (e.g., cloud provider or on-premise hardware).

  - Community Support: Active community with forums, mailing lists, and extensive documentation.

- Kubeless: An open-source serverless framework built on Kubernetes.

  - Features: Native Kubernetes integration, supports multiple languages (Python, Node.js, Go, Ruby, PHP), automatic scaling based on Kubernetes resources, and easy deployment using Kubernetes manifests.

  - Pricing: Free and open-source; cost is determined by the Kubernetes cluster infrastructure.

  - Community Support: Community support through GitHub issues, Slack channels, and Kubernetes-focused forums.

- OpenFaaS: An open-source framework for building serverless functions with Docker and Kubernetes.

  - Features: Simple deployment using Docker, supports a wide range of languages via templates, built-in autoscaling, REST API for function management, and integration with Prometheus for monitoring.

  - Pricing: Free and open-source; cost is determined by the infrastructure used for Docker and Kubernetes deployment.

  - Community Support: Large and active community with extensive documentation, tutorials, and a Slack channel.

Evaluating Platform Suitability

The selection of a FaaS platform hinges on several critical factors, including programming language support, integration capabilities, and scalability requirements. Careful consideration of these aspects ensures the chosen platform aligns with project goals.

- Programming Language Support: The platform must support the programming languages used by the development team. Some platforms support a wide range of languages through built-in features or community-contributed runtimes. Others might be more restrictive, offering support for only a subset of languages.

- Integration Capabilities: The platform should integrate seamlessly with existing infrastructure and services, such as databases, message queues, and monitoring tools. Evaluate the availability of pre-built connectors, APIs, and custom integration options.

- Scalability and Performance: The platform must be capable of handling the expected workload and scaling automatically to meet demand. Assess the platform’s performance characteristics, such as cold start times, function execution speed, and resource utilization. Consider using benchmarking tools to evaluate the platform’s scalability under simulated load conditions. For example, the results from load tests can provide insights into the platform’s ability to manage concurrent function invocations and prevent performance bottlenecks.

- Security Considerations: Evaluate the platform’s security features, including authentication, authorization, and data encryption. Ensure the platform complies with security best practices and offers mechanisms to protect against common vulnerabilities. For instance, the platform should support secure function execution environments, such as sandboxes or containerization, to isolate functions from the underlying infrastructure.
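When comparing platforms on cold start time and execution speed, it helps to collect latency samples and summarize them the same way for each candidate. The helper below is a minimal sketch: `measure_latencies_ms` times any zero-argument callable (in practice, a call that invokes a deployed function), and the nearest-rank percentile method and the field names in `summarize` are choices made for this example.

```python
import math
import time
from typing import Callable, Dict, List


def measure_latencies_ms(fn: Callable[[], object], runs: int = 20) -> List[float]:
    """Call fn repeatedly and return each call's wall-clock time in ms."""
    samples = []
    for _ in range(runs):
        started = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - started) * 1000.0)
    return samples


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile of the samples."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples: List[float]) -> Dict[str, float]:
    """The first call approximates a cold start; the rest show warm behavior."""
    return {
        "first_call_ms": samples[0],
        "p50_ms": percentile(samples, 50),
        "p95_ms": percentile(samples, 95),
        "max_ms": max(samples),
    }
```

Comparing `first_call_ms` with `p50_ms` gives a rough view of cold start overhead, while `p95_ms` shows tail latency under the tested conditions.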

Setting Up the Development Environment

Establishing a robust development environment is crucial for efficiently building, testing, and deploying functions within an on-premise FaaS platform. This section outlines the necessary steps to configure a local development setup, ensuring developers can rapidly iterate and validate function logic before deploying to the production environment. The guide will cover installation, configuration, and the utilization of common development tools within the FaaS context.

Organizing Steps for Local Function Development and Testing

A structured approach is vital for streamlining the development lifecycle. This involves setting up the environment, writing and testing functions, and managing dependencies.

- Install the FaaS Platform CLI: The Command Line Interface (CLI) is the primary tool for interacting with the FaaS platform. Installation typically involves downloading and installing the CLI package specific to the chosen platform and operating system.

- Configure the CLI: After installation, the CLI must be configured to connect to the local FaaS platform instance. This usually involves setting environment variables or configuring a settings file with connection details like the server address and authentication credentials.

- Create a Function Project: Utilize the CLI to initialize a new function project. This action typically generates a project structure containing the necessary files, such as function stubs, configuration files, and a `requirements.txt` (or similar) file for managing dependencies.

- Write the Function Code: Develop the function logic in the chosen programming language. The function code should adhere to the FaaS platform’s specific function signature and input/output conventions.

- Define Dependencies: Specify all external dependencies required by the function in the project’s dependency file.

- Test the Function Locally: Use the CLI to deploy and test the function locally. The platform will simulate the execution environment and allow testing without deployment to the full on-premise setup.

- Debug the Function: If the function encounters errors, use debugging tools to inspect variables, step through code, and identify the source of the problem.

- Iterate and Refactor: Repeat the steps of writing, testing, and debugging until the function behaves as expected. Refactor the code for better performance and maintainability.

- Package and Deploy: Once the function is validated, package it for deployment to the on-premise FaaS platform. The packaging process typically involves creating a container image or a deployment package.
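The "write the function code" and "test locally" steps can look like the sketch below. It assumes a handler shaped as a single `handle(req)` function that receives the request body as a string, which is similar to what some platform templates generate. The order-total logic and the `items`, `price`, and `qty` field names are hypothetical.

```python
import json


def handle(req: str) -> str:
    """Stateless function: parse a JSON request body and return JSON.

    No state is kept between calls, so any instance can serve any request.
    """
    try:
        payload = json.loads(req) if req else {}
    except json.JSONDecodeError:
        return json.dumps({"error": "invalid JSON"})
    if not isinstance(payload, dict):
        return json.dumps({"error": "expected a JSON object"})

    items = payload.get("items", [])
    total = sum(item["price"] * item["qty"] for item in items)
    return json.dumps({"count": len(items), "total": total})


# Local check before deploying: call the handler directly with sample input.
sample = json.dumps({"items": [{"price": 2, "qty": 3}, {"price": 5, "qty": 1}]})
local_result = json.loads(handle(sample))
```

Calling the handler directly like this covers the function logic only. The platform CLI is still needed to verify how the function behaves inside the actual runtime.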

Installing and Configuring the FaaS Platform Locally

Setting up the FaaS platform on a local machine or development server allows for rapid development and testing. The installation and configuration process varies depending on the chosen platform, but the general steps remain similar.

Here are examples of platform-specific configurations. These are illustrative and may need adaptation based on the specific platform version and system requirements.

- OpenFaaS:

  - Installation: OpenFaaS packages functions as container images. Current releases are deployed to Kubernetes (for example, a local cluster created with Minikube or kind) or to a single host with faasd; older releases were launched on Docker Swarm from a Compose-style stack file. The deployment includes the gateway and Prometheus for monitoring.

  - Configuration: After deployment, configure the `faas-cli` to connect to the local OpenFaaS gateway. This involves setting the `OPENFAAS_URL` environment variable or configuring the CLI with the gateway’s address.

  - Example Command: `faas-cli login -g http://localhost:8080`

- Kubeless:

  - Installation: Kubeless runs on Kubernetes. Install a local Kubernetes cluster using tools like Minikube or kind. Then, deploy Kubeless using `kubectl`.

  - Configuration: The `kubectl` command-line tool is used to interact with the Kubeless cluster. The configuration is primarily managed through Kubernetes manifests and `kubectl` commands.

  - Example Command: `kubectl get functions`

- Apache OpenWhisk:

  - Installation: OpenWhisk can be deployed locally using Docker Compose. This setup includes the OpenWhisk core components and supporting services like CouchDB and Nginx.

  - Configuration: After deployment, configure the OpenWhisk CLI (`wsk`) with the local endpoint and authentication credentials.

  - Example Command: `wsk action list`
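Once a gateway is reachable, functions can also be invoked from scripts rather than the CLI. The sketch below builds (but does not send) an HTTP request for an OpenFaaS-style gateway. It assumes the gateway address comes from the `OPENFAAS_URL` environment variable and that functions are exposed under `/function/<name>`; verify both against the platform version in use. The function name `echo` is hypothetical.

```python
import json
import os
import urllib.request
from typing import Optional


def gateway_url(default: str = "http://127.0.0.1:8080") -> str:
    """Read the gateway address from OPENFAAS_URL, falling back to a default."""
    return os.environ.get("OPENFAAS_URL", default).rstrip("/")


def build_invocation(
    function_name: str, payload: dict, base_url: Optional[str] = None
) -> urllib.request.Request:
    """Prepare a POST request that invokes a function through the gateway."""
    base = (base_url or gateway_url()).rstrip("/")
    return urllib.request.Request(
        url=f"{base}/function/{function_name}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Building the request has no side effects; sending it requires a running
# gateway, e.g. urllib.request.urlopen(request, timeout=5).
request = build_invocation("echo", {"a": 1}, base_url="http://localhost:8080")
```

Keeping request construction separate from sending makes the script easy to test without a live platform.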

Utilizing Development Tools within the FaaS Environment

Integrating development tools into the FaaS environment streamlines the development process, improving code quality and debugging efficiency. IDEs, debugging tools, and testing frameworks play a vital role in this process.

The following illustrates the use of development tools, providing examples based on commonly used tools.

- Integrated Development Environments (IDEs): IDEs such as Visual Studio Code, IntelliJ IDEA, or Eclipse offer features such as code completion, syntax highlighting, and debugging support. Configure the IDE to recognize the FaaS platform’s function syntax and environment. This might involve installing plugins or extensions specific to the chosen platform and programming language.

- Debugging Tools:

  - Local Debugging: Most FaaS platforms allow local debugging, where functions can be executed within the development environment, and a debugger can be attached. Use the debugger to step through code, inspect variables, and identify errors. Set breakpoints within the function code to pause execution at specific points.

  - Remote Debugging: For debugging deployed functions, configure remote debugging. This usually involves exposing a debugging port on the function container and connecting a debugger from the local machine.

  - Example: For Python functions, use the `pdb` debugger or an IDE’s debugger. For Node.js functions, use the `node --inspect` flag and a debugger like the one integrated into VS Code.

- Testing Frameworks: Utilize testing frameworks to create unit tests and integration tests. Write tests that cover different input scenarios and validate the function’s behavior.

- Unit Tests: Test individual functions in isolation.

- Integration Tests: Test the interaction between functions and other components.

- Example: Use frameworks like pytest (Python), Jest (JavaScript), or JUnit (Java) to write and run tests.
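As a minimal sketch of the unit-testing step, the tests below exercise a handler in isolation using plain `assert` statements in the pytest style. The `greet` handler and its `name` field are hypothetical and exist only for illustration; the `(event, context)` signature is an assumption about the platform's calling convention.

```python
import json


def greet(event, context):
    """Hypothetical handler: greets the caller named in the event."""
    name = event.get("name")
    if not isinstance(name, str) or not name.strip():
        return {"statusCode": 400, "body": json.dumps({"error": "name is required"})}
    return {"statusCode": 200, "body": json.dumps({"message": f"Hello, {name.strip()}!"})}


# pytest collects functions whose names start with "test_".
def test_greet_returns_greeting():
    response = greet({"name": "Ada"}, context=None)
    assert response["statusCode"] == 200
    assert json.loads(response["body"]) == {"message": "Hello, Ada!"}


def test_greet_rejects_missing_name():
    response = greet({}, context=None)
    assert response["statusCode"] == 400
```

Saved as a file such as `test_greet.py`, these tests run with the `pytest` command. Because the handler takes plain dictionaries, no running FaaS platform is needed for this level of testing.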

Developing and Deploying Functions

The core of any Function-as-a-Service (FaaS) platform lies in the development and deployment of individual functions. This section outlines the process of creating, testing, packaging, and deploying these functions within an on-premise FaaS environment. It covers the necessary steps to translate code into executable units and integrate them seamlessly into the platform’s ecosystem.

Writing, Testing, and Packaging Functions

The process of function development involves several key steps, each crucial for ensuring functionality, reliability, and maintainability. These steps ensure that functions are written to adhere to best practices, tested rigorously to prevent errors, and packaged efficiently for deployment.

- Function Code Development: This is the initial stage where the function’s logic is implemented. Functions are typically written in a supported programming language, such as Python, JavaScript, Go, or Java, depending on the FaaS platform’s capabilities. Code should be modular, well-documented, and follow coding standards to enhance readability and maintainability.

- Local Testing: Before deployment, functions undergo local testing to verify their behavior. This involves running the function locally with various inputs and validating the outputs. Unit tests, integration tests, and end-to-end tests are crucial for identifying and resolving bugs early in the development cycle. Tools specific to the chosen language and testing frameworks are employed to automate the testing process.

- Packaging: Once the function is tested and verified, it needs to be packaged for deployment. This typically involves bundling the function’s code along with any necessary dependencies into a deployment package, such as a ZIP archive or a container image. The packaging process ensures that the function has everything it needs to execute in the FaaS environment. The specific method for packaging depends on the chosen FaaS platform and the programming language used.
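The packaging step can be sketched with Python's standard `zipfile` module. This is an illustration only: the `package_function` helper and the directory layout are hypothetical, and a real platform may expect a specific archive structure or a container image instead.

```python
import zipfile
from pathlib import Path


def package_function(source_dir, archive_path):
    """Bundle every file under source_dir into a ZIP archive.

    Returns the list of archive member names.
    """
    source = Path(source_dir)
    archive = Path(archive_path).resolve()
    members = []
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(source.rglob("*")):
            # Skip directories, and the archive itself if it lives inside source_dir.
            if path.is_file() and path.resolve() != archive:
                arcname = path.relative_to(source).as_posix()
                zf.write(path, arcname)
                members.append(arcname)
    return members
```

Storing paths relative to the source directory keeps the handler file at the archive root, which is where ZIP-based platforms commonly look for it.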

Deployment Strategies

Deployment strategies determine how functions are made available within the FaaS platform. Different strategies offer varying levels of automation and control, impacting the speed, reliability, and scalability of function deployments.

- Manual Deployment: Manual deployment involves uploading the packaged function directly to the FaaS platform through a user interface or command-line interface. This approach is suitable for initial testing or infrequent deployments. However, it can be time-consuming and prone to errors, particularly for frequent updates.

- Automated Deployment using CI/CD Pipelines: Continuous Integration and Continuous Deployment (CI/CD) pipelines automate the process of building, testing, and deploying functions. Developers commit code changes to a version control system (e.g., Git). The CI/CD pipeline then automatically builds the function, runs tests, and deploys it to the FaaS platform upon successful completion of the tests. This approach significantly reduces the time and effort required for deployments and increases the reliability of the deployment process.

Popular CI/CD tools include Jenkins, GitLab CI, and CircleCI.

- Version Control: Using a version control system is essential for managing function code. It allows developers to track changes, revert to previous versions, and collaborate effectively. Version control systems, such as Git, provide a history of code changes, facilitating debugging and rollback if necessary. Branching and merging strategies enable parallel development and integration of new features.

Common Function Use Cases

FaaS platforms support a wide range of use cases. Understanding these common scenarios helps in designing and deploying functions that address specific business needs.

- Image Processing: Functions can be used to perform various image processing tasks, such as resizing, cropping, watermarking, and format conversion. For instance, when an image is uploaded, a function can automatically resize it for different device screens. This eliminates the need for manual image manipulation and ensures consistent image presentation across various platforms.

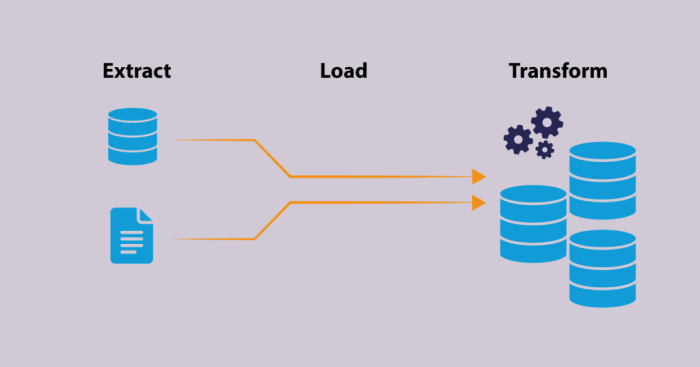

- Data Transformation: Data transformation involves converting data from one format to another, cleaning data, or enriching it with additional information. FaaS can automate these processes, allowing for seamless data integration and analysis. For example, a function could transform data from a CSV file into a JSON format or validate and clean data received from an external API.

- API Integrations: Functions can act as intermediaries, integrating with various APIs to retrieve, process, and deliver data. This includes integrating with databases, third-party services, and other applications. For example, a function could be triggered by a user request, call an external API to retrieve data, process the data, and then return the result to the user.

Example: “Hello World” Function (Python)

```python
def hello_world(event, context):
    """
    This function returns a simple "Hello, World!" message.
    """
    return {
        'statusCode': 200,
        'body': 'Hello, World!'
    }
```

Example: “Hello World” Function (JavaScript)

```javascript
exports.handler = async (event) => {
  const response = {
    statusCode: 200,
    body: JSON.stringify('Hello, World!'),
  };
  return response;
};
```

Managing Function Execution and Scaling

Effectively managing function execution and scaling is critical for a Function-as-a-Service (FaaS) platform’s performance, resource utilization, and overall cost-effectiveness. This involves meticulous control over function behavior, allocation of resources, and the ability to dynamically adjust capacity based on demand. A well-designed system ensures functions operate reliably, efficiently, and can handle fluctuating workloads without performance degradation.

Managing Function Execution

Function execution management encompasses the control and optimization of how individual functions are run. This includes defining resource limits, managing concurrency, and setting appropriate timeout values. These controls prevent resource exhaustion, improve responsiveness, and enhance the overall stability of the FaaS platform.

- Resource Allocation: Functions require defined resources like CPU, memory, and network bandwidth. Resource allocation must be configured to prevent functions from monopolizing system resources, which could impact other functions or the platform itself.

- CPU Limits: Restricting the CPU usage of a function prevents it from consuming excessive processing power. For example, a function processing large images might be limited to a certain percentage of a CPU core to avoid impacting other concurrent function executions.

- Memory Limits: Setting memory limits is essential to prevent functions from consuming excessive memory, potentially leading to out-of-memory errors. Consider a function that processes large JSON files; if memory limits are not set, it could exhaust available memory.

- Network Bandwidth Limits: Controlling network bandwidth usage can prevent a function from overwhelming network resources, especially functions that handle high volumes of data transfer.

- Concurrency Control: Concurrency control determines how many instances of a function can run simultaneously. This is crucial for managing the platform’s ability to handle multiple requests concurrently.

- Concurrency Limits: Setting concurrency limits prevents a single function from being overloaded by a large number of concurrent requests. For example, a function that interacts with a database could have its concurrency limited to avoid overwhelming the database server.

- Queuing: Implementing a queuing mechanism can buffer incoming requests when the function’s concurrency limit is reached, preventing request drops and ensuring requests are processed in an orderly fashion.

- Timeout Settings: Setting appropriate timeout values is essential to prevent functions from running indefinitely and consuming resources. Functions that take too long to execute can block resources and impact the responsiveness of the platform.

- Function Timeout: This specifies the maximum time a function is allowed to run before being terminated. For instance, a function that processes a complex calculation might have a longer timeout than a function that simply retrieves data from a cache.

- Request Timeout: This defines the maximum time a client is willing to wait for a response from a function. This setting ensures that clients do not experience excessively long wait times.
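The concurrency limit, queuing, and function timeout described above can be sketched in a few lines with a thread pool. This is a simplified illustration, not how any particular platform implements these controls: the `FunctionRunner` class is hypothetical, and a real platform would enforce limits at the container level.

```python
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout


class FunctionRunner:
    """Runs a handler with a concurrency limit and a per-invocation timeout."""

    def __init__(self, handler, max_concurrency=2, timeout_seconds=1.0):
        self.handler = handler
        self.timeout_seconds = timeout_seconds
        # At most max_concurrency invocations run at once; extra submissions
        # wait in the executor's internal queue.
        self.pool = ThreadPoolExecutor(max_workers=max_concurrency)

    def invoke(self, event):
        future = self.pool.submit(self.handler, event)
        try:
            result = future.result(timeout=self.timeout_seconds)
            return {"statusCode": 200, "body": result}
        except FutureTimeout:
            # A running thread cannot be killed from outside; cancel() only
            # stops work that has not started yet.
            future.cancel()
            return {"statusCode": 504, "body": "function timed out"}
```

Note that the timeout here covers time spent waiting in the queue as well as execution time, so it behaves more like the request timeout than a pure function timeout. Separating the two would require starting the clock when the handler actually begins running.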

Scaling Strategies

Scaling a FaaS platform involves adjusting the number of function instances to match the current workload. Different scaling strategies offer varying degrees of flexibility and efficiency. The optimal strategy depends on the nature of the functions, the expected workload patterns, and the available infrastructure.

- Horizontal Scaling: This involves increasing the number of function instances to handle a larger workload. It is often the preferred approach for its ability to scale elastically and handle unpredictable traffic spikes.

- Benefits: Provides high availability and fault tolerance by distributing the workload across multiple instances. It can easily handle peak loads by automatically creating more instances.

- Implementation: Requires a mechanism to automatically provision and manage function instances. This is often achieved through auto-scaling features provided by the FaaS platform.

- Example: If a function is processing image uploads, horizontal scaling would create more instances of the function to handle a sudden surge in upload requests during a marketing campaign.

- Vertical Scaling: This involves increasing the resources (CPU, memory) allocated to a single function instance. This approach is simpler to implement than horizontal scaling but has limitations.

- Benefits: Can improve the performance of individual function instances by providing more resources. This is particularly useful for functions that are CPU or memory-bound.

- Limitations: Limited by the maximum resources available on a single server. It does not inherently improve availability.

- Example: If a function performs complex mathematical calculations, increasing the CPU and memory allocated to the function can reduce its execution time.

- Auto-Scaling: This is an automated process that dynamically adjusts the number of function instances based on real-time metrics, such as CPU utilization, memory usage, and request queue length.

- Implementation: Requires a monitoring system to collect metrics and a scaling engine to automatically adjust the number of function instances.

- Benefits: Provides optimal resource utilization by automatically scaling up during peak loads and scaling down during periods of low activity. It minimizes operational costs by only allocating resources when needed.

- Example: An auto-scaling system might monitor the average CPU utilization of function instances. If the utilization exceeds a predefined threshold (e.g., 70%), the system will automatically create more function instances. When the utilization drops below a lower threshold (e.g., 30%), the system will scale down the number of instances.
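The threshold-based example above can be expressed as a small decision function. This is a sketch under stated assumptions: the one-instance step size and the `min_instances` and `max_instances` bounds are illustrative choices, not values taken from a specific platform.

```python
def desired_instances(current, avg_cpu_percent, scale_up_at=70.0, scale_down_at=30.0,
                      min_instances=1, max_instances=10):
    """Return the instance count a threshold-based scaler would target."""
    if avg_cpu_percent > scale_up_at:
        target = current + 1
    elif avg_cpu_percent < scale_down_at:
        target = current - 1
    else:
        # Inside the band between the two thresholds: leave capacity unchanged.
        target = current
    return max(min_instances, min(max_instances, target))
```

Keeping a gap between the upper and lower thresholds means that small fluctuations around a single value do not cause the scaler to add and remove instances repeatedly.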

Monitoring Function Performance and Resource Utilization

Comprehensive monitoring is crucial for understanding function behavior, identifying performance bottlenecks, and optimizing resource usage. A robust monitoring system provides insights into function execution times, resource consumption, and error rates. This data enables proactive troubleshooting, capacity planning, and informed decision-making regarding scaling strategies.

- Key Metrics to Monitor: A comprehensive monitoring system should track a variety of metrics to provide a holistic view of function performance and resource utilization.

- Execution Time: The time it takes for a function to complete its execution. This metric helps identify slow-performing functions and potential bottlenecks.

- Invocation Count: The number of times a function is executed. This metric provides insight into the workload handled by each function.

- Error Rate: The percentage of function invocations that result in errors. This metric is critical for identifying and resolving bugs.

- CPU Utilization: The percentage of CPU resources used by a function instance. High CPU utilization can indicate a CPU-bound function.

- Memory Usage: The amount of memory used by a function instance. High memory usage can indicate a memory leak or inefficient code.

- Network I/O: The amount of data transferred by a function instance over the network. This metric is important for functions that interact with external services.

- Request Queue Length: The number of requests waiting to be processed. A long queue indicates that the system is overloaded.

- Monitoring System Design: A well-designed monitoring system typically includes several components.

- Metric Collection: Agents or libraries that collect metrics from function instances. These agents should be lightweight and non-intrusive.

- Data Aggregation and Storage: A system for aggregating and storing collected metrics. This could involve a time-series database or a distributed data store.

- Alerting and Notification: A system for generating alerts based on predefined thresholds. Alerts should notify operators of potential issues.

- Visualization and Reporting: Tools for visualizing and reporting on collected metrics. Dashboards and reports should provide insights into function performance and resource utilization.

- Tools and Technologies: Several tools and technologies can be used to implement a monitoring system.

- Prometheus: An open-source monitoring system that collects and stores metrics as time-series data. It is widely used in containerized environments.

- Grafana: A data visualization and dashboarding tool that can be used to create interactive dashboards based on data from Prometheus and other data sources.

- Jaeger/Zipkin: Distributed tracing systems that can be used to trace requests as they flow through a distributed system, helping to identify performance bottlenecks.

- CloudWatch (AWS): A monitoring service provided by Amazon Web Services. It provides comprehensive monitoring capabilities for AWS resources and can be integrated with FaaS platforms.

- Azure Monitor (Azure): A monitoring service provided by Microsoft Azure. It provides comprehensive monitoring capabilities for Azure resources and can be integrated with FaaS platforms.

- Google Cloud Monitoring (formerly Stackdriver): A monitoring service provided by Google Cloud. It provides comprehensive monitoring capabilities for Google Cloud resources and can be integrated with FaaS platforms.
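Whichever tool stores and visualizes the data, the metric collection step usually amounts to wrapping each invocation. The sketch below keeps invocation count, error rate, and average execution time in memory; the `FunctionMetrics` class is hypothetical, and a production setup would export these values to a system such as Prometheus rather than hold them in a dictionary.

```python
import time
from collections import defaultdict


class FunctionMetrics:
    """In-memory counters for invocation count, errors, and execution time."""

    def __init__(self):
        self.invocations = defaultdict(int)
        self.errors = defaultdict(int)
        self.total_seconds = defaultdict(float)

    def track(self, name, handler):
        """Wrap handler so each call updates the counters for `name`."""
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return handler(*args, **kwargs)
            except Exception:
                self.errors[name] += 1
                raise  # the caller still sees the original error
            finally:
                self.invocations[name] += 1
                self.total_seconds[name] += time.perf_counter() - start
        return wrapper

    def summary(self, name):
        count = self.invocations[name]
        return {
            "invocation_count": count,
            "error_rate": self.errors[name] / count if count else 0.0,
            "avg_execution_seconds": self.total_seconds[name] / count if count else 0.0,
        }
```

The wrapper re-raises exceptions after counting them, so adding metrics does not change how the function behaves from the caller's point of view.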

Implementing Security Best Practices

On-premise FaaS platforms, while offering enhanced control and data residency, introduce unique security challenges that necessitate a robust and multifaceted approach. Securing such a platform requires meticulous attention to detail across all layers, from function code to underlying infrastructure. Failure to adequately address these concerns can lead to significant vulnerabilities, including data breaches, service disruptions, and unauthorized access.

Security Considerations for On-Premise FaaS Platforms

Understanding the security implications is paramount for building a secure FaaS platform. This involves carefully evaluating authentication, authorization, and data encryption strategies. Each of these areas presents specific challenges within the context of a serverless architecture operating on-premise.

- Authentication: Authentication verifies the identity of users or services attempting to access the platform. In a FaaS environment, authentication mechanisms must integrate seamlessly with function invocations, API gateway interactions, and administrative access. This ensures that only authorized entities can execute functions or manage the platform. Consider the use of strong passwords, multi-factor authentication (MFA), and identity providers (IdPs) like Active Directory or OpenID Connect for robust authentication.

- Authorization: Authorization determines the permissions granted to authenticated users or services. This involves defining roles, access control lists (ACLs), and policies that govern what resources each entity can access and what actions they can perform. Fine-grained authorization is crucial to prevent unauthorized function execution, data access, or system modifications. Role-Based Access Control (RBAC) is a common and effective approach, allowing administrators to define roles with specific permissions and assign users to those roles.

- Data Encryption: Data encryption protects sensitive information from unauthorized access, both in transit and at rest. Encryption should be implemented at multiple levels, including data stored in function code, databases, and object storage. Implement TLS/SSL for encrypting data in transit, and use encryption keys managed by a secure key management system (KMS) for encrypting data at rest. Regularly rotate encryption keys to mitigate the impact of potential key compromises.

Methods for Securing Functions, API Gateways, and Underlying Infrastructure

Securing the different components of an on-premise FaaS platform requires a layered approach. This includes securing the functions themselves, the API gateway that manages function invocations, and the underlying infrastructure that hosts the platform.

- Securing Functions: Function security focuses on protecting the code, its dependencies, and the resources it accesses. Implement the following practices:

- Code Reviews and Static Analysis: Regularly review function code for vulnerabilities, such as SQL injection, cross-site scripting (XSS), and insecure dependencies. Employ static analysis tools to automatically identify potential security flaws.

- Least Privilege Principle: Grant functions only the minimum permissions required to perform their tasks. Avoid granting excessive access to resources like databases or object storage.

- Input Validation and Sanitization: Validate and sanitize all input data to prevent injection attacks and other vulnerabilities. This includes validating data types, lengths, and formats.

- Dependency Management: Regularly update function dependencies to patch known vulnerabilities. Use a dependency management tool to track and manage dependencies.

- Function Isolation: Isolate functions from each other using containerization or other isolation techniques to prevent a compromised function from affecting other functions or the underlying infrastructure.

- Securing API Gateways: The API gateway is the entry point for all function invocations, making it a critical security component. Secure the API gateway through:

- Authentication and Authorization: Enforce strong authentication and authorization mechanisms at the API gateway level to control access to functions.

- Rate Limiting and Throttling: Implement rate limiting and throttling to prevent denial-of-service (DoS) attacks and protect functions from excessive load.

- Input Validation: Validate and sanitize all incoming requests to the API gateway to prevent injection attacks.

- Traffic Encryption: Use TLS/SSL to encrypt all traffic between clients and the API gateway.

- Regular Monitoring: Monitor API gateway logs for suspicious activity, such as unusual request patterns or error messages.

- Securing Underlying Infrastructure: Securing the underlying infrastructure involves protecting the servers, networks, and storage that host the FaaS platform. Employ the following practices:

- Network Segmentation: Segment the network to isolate the FaaS platform from other systems and limit the impact of a security breach.

- Regular Security Patching: Apply security patches to all servers and software components promptly.

- Intrusion Detection and Prevention Systems (IDPS): Implement IDPS to detect and prevent malicious activity.

- Security Auditing: Regularly audit the infrastructure for security vulnerabilities.

- Access Control: Implement strict access control policies to limit access to the infrastructure.
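Rate limiting at the API gateway, listed above, is commonly built on a token bucket. The following is a minimal sketch of that technique, assuming one bucket per client; the `TokenBucket` class and its parameters are illustrative, and most gateways provide this as a built-in feature rather than requiring custom code.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.last_refill = clock()

    def allow(self):
        now = self.clock()
        elapsed = now - self.last_refill
        self.last_refill = now
        # Add tokens for the time that has passed, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # the caller would typically respond with HTTP 429
```

A gateway would keep one bucket per API key or client address and call `allow()` before forwarding each request to a function.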

Strategies for Protecting Against Common Security Threats

On-premise FaaS platforms are vulnerable to various security threats. Implementing proactive strategies is crucial for mitigating these risks.

- Injection Attacks: Injection attacks, such as SQL injection and command injection, occur when attackers inject malicious code into input fields. Prevent injection attacks by:

- Input Validation: Validate and sanitize all user inputs to ensure they conform to expected formats and types.

- Parameterized Queries: Use parameterized queries to prevent SQL injection vulnerabilities.

- Escaping Special Characters: Escape special characters in user inputs to prevent command injection.

- Denial-of-Service (DoS) Attacks: DoS attacks aim to make a service unavailable by overwhelming it with traffic. Protect against DoS attacks by:

- Rate Limiting and Throttling: Implement rate limiting and throttling at the API gateway level to limit the number of requests from a single client.

- Load Balancing: Use load balancing to distribute traffic across multiple function instances.

- Web Application Firewall (WAF): Deploy a WAF to filter malicious traffic.

- Data Breaches: Data breaches can result in the exposure of sensitive data. Prevent data breaches by:

- Data Encryption: Encrypt data at rest and in transit.

- Access Control: Implement strict access control policies to limit access to sensitive data.

- Regular Security Audits: Conduct regular security audits to identify and address vulnerabilities.

- Data Loss Prevention (DLP): Implement DLP measures to prevent sensitive data from leaving the platform.

- Cross-Site Scripting (XSS) Attacks: XSS attacks inject malicious scripts into web pages viewed by other users. Prevent XSS attacks by:

- Input Validation: Validate and sanitize user inputs.

- Output Encoding: Encode output data to prevent malicious scripts from executing.

- Content Security Policy (CSP): Implement CSP to control the resources that a web page can load.
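Two of the defenses above, parameterized queries and output encoding, can be shown with the Python standard library alone. The sketch uses `sqlite3` as a stand-in for whatever database the platform talks to, and the `users` table and both function names are hypothetical.

```python
import html
import sqlite3


def find_user(conn, username):
    # The "?" placeholder sends the value separately from the SQL text,
    # so quotes in `username` cannot change the structure of the query.
    cur = conn.execute("SELECT id, name FROM users WHERE name = ?", (username,))
    return cur.fetchall()


def render_greeting(name):
    # html.escape converts <, >, &, and quotes into entities before output.
    return f"<p>Hello, {html.escape(name)}!</p>"
```

With this approach, a classic injection string such as `' OR '1'='1` is treated as an ordinary (and unmatched) user name instead of altering the query. Other database drivers use different placeholder syntax, but the principle is the same.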

Integrating with Other Services

Integrating a Function-as-a-Service (FaaS) platform with existing on-premise services is crucial for realizing its full potential. This integration allows functions to interact with other components of the application ecosystem, such as databases, message queues, and storage systems. Effective integration enhances the functionality, flexibility, and overall utility of the FaaS platform.

Connecting with Databases

Functions often need to access and manipulate data stored in databases. Database integration involves establishing connections, executing queries, and managing data transactions.

- Establishing Database Connections: Functions typically connect to databases using database-specific client libraries or drivers. These libraries handle the low-level details of communication, such as establishing TCP connections, managing authentication, and sending/receiving data. Configuration typically includes database hostnames or IP addresses, port numbers, database names, usernames, and passwords. For example, to connect to a PostgreSQL database, a function might use the `psycopg2` library in Python:

```python
import psycopg2

def my_function(event, context):
    conn = None
    try:
        conn = psycopg2.connect(
            host="your_db_host",
            database="your_db_name",
            user="your_db_user",
            password="your_db_password"
        )
        cur = conn.cursor()
        cur.execute("SELECT * FROM your_table;")
        rows = cur.fetchall()
        return {"statusCode": 200, "body": str(rows)}
    except Exception as e:
        return {"statusCode": 500, "body": str(e)}
    finally:
        # Close the connection even if the query fails.
        if conn is not None:
            conn.close()
```

This code snippet demonstrates a basic connection and query execution. Error handling is essential for robustness: the `finally` block releases the connection whether or not the query succeeds.

- Executing Queries and Data Manipulation: Functions execute SQL queries (for relational databases) or use database-specific APIs (for NoSQL databases) to retrieve, insert, update, or delete data. Prepared statements are often used to prevent SQL injection vulnerabilities. The choice of query language or API depends on the database type.

- Managing Transactions: Transactions ensure data consistency, particularly in operations involving multiple database updates. Functions should use transaction management features provided by the database client libraries. This typically involves beginning a transaction, executing multiple operations, and either committing the transaction (if all operations succeed) or rolling it back (if any operation fails).

- Connection Pooling: For improved performance, especially under high load, consider implementing database connection pooling. Connection pooling maintains a pool of database connections that can be reused by multiple function invocations, reducing the overhead of establishing new connections for each function execution.
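The begin, commit, and rollback pattern described under transaction management can be sketched as follows. To keep the example self-contained it uses the standard-library `sqlite3` module rather than a PostgreSQL driver, and the `accounts` table and `transfer` function are hypothetical.

```python
import sqlite3


def transfer(conn, from_id, to_id, amount):
    """Move `amount` between two accounts atomically."""
    try:
        # Used as a context manager, a sqlite3 connection commits if the
        # block succeeds and rolls back if the block raises.
        with conn:
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?",
                (amount, from_id),
            )
            row = conn.execute(
                "SELECT balance FROM accounts WHERE id = ?", (from_id,)
            ).fetchone()
            if row is None or row[0] < 0:
                raise ValueError("insufficient funds")
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?",
                (amount, to_id),
            )
        return True
    except ValueError:
        return False
```

If the balance check fails after the first update, the rollback undoes that update, so the two accounts never end up in an inconsistent state. Other drivers expose the same idea through explicit `commit()` and `rollback()` calls.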

Integrating with Message Queues

Message queues enable asynchronous communication between functions and other services. Functions can publish messages to queues, and other services (or functions) can consume those messages. This decoupling improves system resilience and scalability.

- Choosing a Message Queue: Popular message queue systems include RabbitMQ, Apache Kafka, and Apache ActiveMQ. The choice depends on factors such as message throughput requirements, message persistence needs, and the desired level of fault tolerance. For instance, Kafka is well-suited for high-throughput scenarios, while RabbitMQ provides more advanced routing capabilities.

- Publishing Messages: Functions use message queue client libraries to publish messages. The message format is often serialized (e.g., JSON, Protobuf) for efficient transmission. For example, using the `pika` library (for RabbitMQ) in Python:

```python
import pika
import json

def my_function(event, context):
    try:
        connection = pika.BlockingConnection(pika.ConnectionParameters('your_rabbitmq_host'))
        channel = connection.channel()
        channel.queue_declare(queue='my_queue')
        message = json.dumps({"message": "Hello from FaaS!"})
        channel.basic_publish(exchange='', routing_key='my_queue', body=message)
        connection.close()
        return {"statusCode": 200, "body": "Message published"}
    except Exception as e:
        return {"statusCode": 500, "body": str(e)}
```

This code publishes a JSON message to a RabbitMQ queue named `my_queue`.

- Consuming Messages: Functions (or other services) subscribe to message queues to consume messages. The consumption process typically involves receiving messages, processing them, and acknowledging their successful processing. Error handling is crucial to address message processing failures.

- Message Routing and Filtering: Message queue systems often support message routing and filtering mechanisms, such as topic exchanges and direct exchanges, to control how messages are delivered to consumers. This enables functions to subscribe to specific types of messages based on their content or routing keys.
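The receive, process, and acknowledge cycle for consumers can be illustrated without a running broker. In the sketch below an in-memory `deque` stands in for the queue, and the `consume` function, the retry limit, and the dead-letter list are assumptions made for illustration rather than the API of any message queue client.

```python
import json
from collections import deque


def consume(queue, handler, max_attempts=3):
    """Drain `queue`, retrying failed messages up to max_attempts times.

    Each queue entry is a (body, attempts_so_far) tuple.
    Returns (processed, dead_letters) lists of message bodies.
    """
    processed, dead_letters = [], []
    while queue:
        body, attempts = queue.popleft()
        try:
            handler(json.loads(body))
            processed.append(body)  # stands in for acknowledging the message
        except Exception:
            if attempts + 1 < max_attempts:
                queue.append((body, attempts + 1))  # requeue for another try
            else:
                dead_letters.append(body)  # give up and park it for inspection
    return processed, dead_letters
```

Acknowledging only after the handler succeeds means a crash mid-processing does not lose the message. Capping the retries keeps a permanently failing message from circulating forever.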

Connecting with Storage Systems

Functions frequently need to interact with storage systems to store and retrieve files, images, and other data.

- Choosing a Storage System: Options include object storage (e.g., MinIO, Ceph), file storage (e.g., NFS, SMB), and block storage. The choice depends on the specific storage requirements, such as data access patterns, data size, and performance needs.

- Accessing Storage: Functions use storage system-specific APIs or SDKs to interact with the storage. These APIs provide functions for uploading, downloading, listing, and deleting objects or files.

- Authentication and Authorization: Secure access to storage systems is essential. Functions should be configured with appropriate credentials (e.g., API keys, access tokens) and authorized to perform the required operations.

- Example with Object Storage (MinIO): The following Python example demonstrates uploading a file to MinIO:

```python
from minio import Minio

def my_function(event, context):
    try:
        minio_client = Minio(
            "your_minio_endpoint",
            access_key="your_access_key",
            secret_key="your_secret_key",
            secure=False  # Set to True if using HTTPS
        )
        bucket_name = "your_bucket_name"
        file_path = "/tmp/my_file.txt"  # Assuming the function has write access to /tmp
        with open(file_path, "w") as f:
            f.write("Hello, MinIO!")
        minio_client.fput_object(bucket_name, "my_file.txt", file_path)
        return {"statusCode": 200, "body": "File uploaded"}
    except Exception as e:
        return {"statusCode": 500, "body": str(e)}
```

This example uploads a file to a specified MinIO bucket. It assumes the function has write access to the /tmp directory.

Using APIs and SDKs

APIs and SDKs facilitate integration with external services.

- API Integration: Functions can interact with other services through their APIs. This involves making HTTP requests (e.g., using the `requests` library in Python) to the service’s endpoints. API calls often involve authentication (e.g., API keys, OAuth tokens).

- SDKs: Many services provide SDKs (Software Development Kits) that simplify API interactions. SDKs typically abstract away the low-level details of making API calls, providing higher-level functions and objects for interacting with the service. For example, the AWS SDK for Python (Boto3) provides convenient access to AWS services like S3, DynamoDB, and Lambda.

- Example using `requests` library (API call):

```python
import requests
import json

def my_function(event, context):
    try:
        # Set a timeout so a slow upstream service cannot block the function indefinitely.
        response = requests.get("https://api.example.com/data", timeout=10)
        response.raise_for_status()  # Raise HTTPError for bad responses (4xx or 5xx)
        data = response.json()
        return {"statusCode": 200, "body": json.dumps(data)}
    except requests.exceptions.RequestException as e:
        return {"statusCode": 500, "body": str(e)}
```

This example makes a GET request to an external API and processes the JSON response.

Service Discovery and Service Mesh

Service discovery and service mesh technologies improve service communication.

- Service Discovery: Service discovery allows functions to dynamically locate and connect to other services in the on-premise environment. This is particularly important in dynamic environments where service instances may be added, removed, or scaled. Popular service discovery tools include Consul, etcd, and Kubernetes DNS.

- Service Mesh: A service mesh provides a dedicated infrastructure layer for service-to-service communication. It handles service discovery, traffic management (e.g., routing, load balancing), security (e.g., mutual TLS), and observability (e.g., metrics, tracing). Popular service mesh implementations include Istio and Linkerd.

A service mesh can significantly simplify service communication by providing a centralized platform for managing interactions between services.

- Benefits: Service discovery and service mesh enhance the reliability, scalability, and security of the FaaS platform and the overall application. They enable functions to discover and connect to other services dynamically, manage traffic efficiently, and secure service-to-service communication. For example, using a service mesh like Istio, you can apply traffic management rules (e.g., circuit breaking, retries) without modifying the function code.

Monitoring and Logging

Effective monitoring and logging are critical for maintaining a healthy and performant Function-as-a-Service (FaaS) platform on-premise. They provide insights into function behavior, identify bottlenecks, and facilitate rapid troubleshooting. A robust monitoring and logging strategy enables proactive issue detection, performance optimization, and overall platform reliability.

Tools and Techniques for Monitoring Function Performance

Monitoring function performance involves collecting and analyzing various metrics to understand how functions are executing and identify areas for improvement. This process requires a combination of metrics, logs, and tracing to provide a comprehensive view of function behavior.

- Metrics: Metrics provide quantifiable data about function performance, such as execution time, memory usage, invocation count, and error rates. They offer a high-level overview of function health and performance trends.

Commonly tracked metrics include:

- Invocation Count: The number of times a function is executed. This metric is fundamental for understanding function usage patterns and identifying potential scaling needs.

- Execution Time: The duration it takes for a function to complete its execution. Analyzing execution time helps pinpoint slow functions and identify performance bottlenecks.

For example, if a function’s average execution time suddenly increases, it could indicate an issue with the function’s code, its dependencies, or the underlying infrastructure.

- Memory Usage: The amount of memory a function consumes during execution. Monitoring memory usage helps prevent functions from exceeding resource limits and causing performance degradation or failures.

- Error Rate: The percentage of function invocations that result in errors. A high error rate signals potential issues with function code, dependencies, or external services.

- Cold Start Time: The time it takes to initialize a new function instance when no warm instance is available to serve a request. This metric is crucial because cold starts can significantly increase latency.

These metrics are typically collected and aggregated by the FaaS platform and can be exposed through APIs or dashboards. Popular monitoring tools like Prometheus, Grafana, and Datadog can be used to collect, store, and visualize these metrics.
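As an illustration of how the metrics above are derived, the following sketch aggregates raw per-invocation records into summary figures. The `Invocation` record and `summarize` function are hypothetical and not part of any FaaS platform's API. The 95th-percentile duration is an addition to the metrics listed above, included because an average alone can hide slow outliers.

```python
import math
from dataclasses import dataclass


@dataclass
class Invocation:
    function: str
    duration_ms: float
    memory_mb: float
    ok: bool
    cold_start: bool = False


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct between 0 and 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(invocations):
    """Aggregate per-invocation records into platform-level metrics."""
    if not invocations:
        raise ValueError("no invocations to summarize")
    durations = [i.duration_ms for i in invocations]
    errors = sum(1 for i in invocations if not i.ok)
    return {
        "invocation_count": len(invocations),
        "avg_duration_ms": sum(durations) / len(durations),
        "p95_duration_ms": percentile(durations, 95),
        "max_memory_mb": max(i.memory_mb for i in invocations),
        "error_rate_pct": 100 * errors / len(invocations),
        "cold_starts": sum(1 for i in invocations if i.cold_start),
    }
```

In practice a monitoring system such as Prometheus performs this aggregation continuously over time windows. The sketch only shows what each number means.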

- Logs: Logs provide detailed information about function execution, including events, errors, and debug messages. They offer granular insights into function behavior and aid in troubleshooting issues.

Logging levels, such as DEBUG, INFO, WARN, and ERROR, allow developers to control the verbosity of the logs and filter out irrelevant information.

Logs should include:

- Timestamp: The time the log entry was generated.

- Function Name: The name of the function that generated the log entry.

- Log Level: The severity of the log entry (e.g., INFO, WARN, ERROR).

- Message: The actual log message, providing details about the event or error.

- Contextual Information: Additional information, such as request IDs, user IDs, or other relevant data, to aid in debugging.

Tools like the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk are commonly used for log aggregation, analysis, and visualization.
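The log fields listed above can be emitted as one JSON object per line, which log aggregation tools can parse without custom patterns. The sketch below uses only Python's standard `logging` module. `JsonFormatter`, `build_logger`, and the `function_name` and `request_id` attributes are illustrative names, not a platform convention.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record):
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "function": getattr(record, "function_name", "unknown"),
            "level": record.levelname,
            "message": record.getMessage(),
            "request_id": getattr(record, "request_id", None),
        }
        if record.exc_info:
            # Attach the stack trace when the record carries exception info.
            entry["stack_trace"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def build_logger(name="faas"):
    """Return a logger that writes JSON lines to stderr."""
    logger = logging.getLogger(name)
    if not logger.handlers:  # avoid stacking handlers on repeated calls
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger
```

A function would then log with contextual fields passed through `extra`, for example `logger.info("thumbnail created", extra={"function_name": "resize-image", "request_id": "req-123"})`.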

- Tracing: Tracing involves tracking the flow of requests through a distributed system, including function invocations and interactions with other services. It provides a holistic view of function dependencies and performance bottlenecks.

Tracing tools like Jaeger, Zipkin, and OpenTelemetry enable developers to visualize request flows, identify slow components, and understand the impact of function invocations on the overall system.

Tracing typically involves:

- Spans: Represent individual units of work, such as a function invocation or a call to an external service.

- Traces: A trace is the group of spans that together represents the complete flow of a single request.

- Context Propagation: Passing trace context (e.g., trace IDs, span IDs) between services and functions to correlate related spans.
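The relationship between spans, traces, and context propagation can be sketched in a few lines of plain Python. This is a simplified illustration, not the API of Jaeger, Zipkin, or OpenTelemetry. `Span`, `start_span`, and `inject` are hypothetical names, and the header string is modeled on the W3C Trace Context `traceparent` format (version, trace ID, parent span ID, flags).

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    start: float = field(default_factory=time.monotonic)
    end: Optional[float] = None

    def finish(self):
        """Mark the unit of work as complete."""
        self.end = time.monotonic()


def start_span(name, parent_header=None):
    """Start a span, continuing the caller's trace if a header was passed in."""
    if parent_header:
        _version, trace_id, parent_id, _flags = parent_header.split("-")
    else:
        # No incoming context: this span is the root of a new trace.
        trace_id, parent_id = secrets.token_hex(16), None
    return Span(name, trace_id, secrets.token_hex(8), parent_id)


def inject(span):
    """Serialize the trace context so the next service can continue the trace."""
    return f"00-{span.trace_id}-{span.span_id}-01"
```

A gateway would call `start_span("api-gateway")`, pass `inject(span)` along with the request, and the invoked function would call `start_span("resize-image", parent_header=...)`. Both spans then share one trace ID, which is what lets a tracing backend stitch them into a single request flow.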

Implementing Logging and Error Handling Within Functions

Implementing effective logging and error handling within functions is essential for capturing valuable information and ensuring the resilience of the FaaS platform.

- Logging Best Practices: Following these practices will enhance the effectiveness of function logging.

- Use Structured Logging: Employ structured logging formats (e.g., JSON) to facilitate parsing and analysis of log data. This enables easier querying and filtering of logs.

- Include Contextual Information: Add relevant contextual information to log messages, such as request IDs, user IDs, and function invocation details. This helps correlate logs with specific requests and debug issues more efficiently.

- Use Appropriate Log Levels: Utilize different log levels (DEBUG, INFO, WARN, ERROR) to control the verbosity of logs and filter out irrelevant information. Use DEBUG for detailed debugging information, INFO for general events, WARN for potential issues, and ERROR for critical errors.

- Log Errors with Stack Traces: When logging errors, include the stack trace to provide detailed information about the source of the error. This is invaluable for pinpointing the root cause of the problem.

- Avoid Sensitive Data: Never log sensitive data, such as passwords or API keys, to prevent security breaches.

- Error Handling Strategies: Implement robust error handling mechanisms to gracefully handle exceptions and prevent function failures.

- Try-Catch Blocks: Use try-catch blocks (try/except in Python) to catch exceptions and handle them appropriately. This prevents unhandled exceptions from crashing the function.

- Error Propagation: Propagate errors to the caller by returning error codes or throwing custom exceptions. This allows the caller to handle the error and take appropriate action.

- Retries: Implement retry mechanisms for transient errors, such as network timeouts. This can improve the resilience of functions that interact with external services.

- Circuit Breakers: Use circuit breakers to prevent cascading failures when a dependent service is unavailable or experiencing issues. This helps protect the FaaS platform from being overwhelmed.

- Dead Letter Queues (DLQs): If a function fails repeatedly, consider using a DLQ to store the failed event or message for later analysis and reprocessing.

Example (Python):

```python
import json
import logging
import traceback

# Configure logging
logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')


def my_function(event, context):
    try:
        # Your function logic here
        result = some_operation(event)
        logging.info(f"Function executed successfully. Result: {result}")
        return {
            'statusCode': 200,
            'body': json.dumps({'message': 'Function executed successfully', 'result': result}),
        }
    except Exception as e:
        logging.error(f"Function failed: {e}")
        logging.error(traceback.format_exc())  # Log the full stack trace
        return {
            'statusCode': 500,
            'body': json.dumps({'message': 'Function failed', 'error': str(e)}),
        }


def some_operation(event):
    # Simulate an error for demonstration
    if 'simulate_error' in event and event['simulate_error']:
        raise ValueError("Simulated error")
    return "Success"
```

This example demonstrates how to implement logging and error handling in a Python function, including leveled log messages, try/except blocks, and logging of stack traces.
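The retry, circuit breaker, and dead letter queue strategies listed earlier can be sketched in the same style. This is a minimal illustration under stated assumptions, not a production implementation. `CircuitBreaker`, `CircuitOpenError`, and `call_with_retries` are hypothetical names, the dead letter queue is a plain Python list standing in for a real queue, and only `TimeoutError` and `ConnectionError` are treated as transient.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    """Open after max_failures consecutive failures; allow a trial call after reset_after seconds."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at = None

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                raise CircuitOpenError("dependency marked unavailable")
            # Cool-down elapsed: let one trial call through (half-open state).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and resets the failure count.
        self._failures = 0
        self._opened_at = None
        return result


def call_with_retries(func, event, dead_letters, attempts=3, base_delay=0.5,
                      transient=(TimeoutError, ConnectionError), sleep=time.sleep):
    """Retry transient failures with exponential backoff; park the event if all attempts fail."""
    for attempt in range(1, attempts + 1):
        try:
            return func(event)
        except transient as exc:
            if attempt == attempts:
                dead_letters.append({"event": event, "error": repr(exc), "attempts": attempt})
                raise
            sleep(base_delay * 2 ** (attempt - 1))
```

The two pieces compose: wrapping the downstream call in `breaker.call(...)` inside the function passed to `call_with_retries` stops retries from hammering a dependency that is already known to be down. Non-transient errors, such as validation failures, are deliberately not retried, because repeating them cannot succeed.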

Creating a Dashboard for Visualizing Function Metrics and Logs

Creating a dashboard for visualizing function metrics and logs provides a centralized view of function performance and health. This enables quick identification of issues, performance bottlenecks, and trends.

- Dashboard Components: A comprehensive dashboard should include the following components.

- Key Metrics: Display key metrics such as invocation count, execution time, error rate, and memory usage. Use charts and graphs to visualize these metrics over time.

- Log Aggregation: Integrate with log aggregation tools to display logs in real-time. Provide filtering and search capabilities to quickly find relevant log entries.

- Alerting: Configure alerts to notify operators of critical issues, such as high error rates or exceeding resource limits.

- Function-Specific Views: Allow users to view metrics and logs for individual functions or groups of functions.

- Customizable Dashboards: Enable users to customize dashboards to display the metrics and logs most relevant to their needs.

- Dashboard Tools: Several tools are available for creating dashboards.

- Grafana: A popular open-source platform for creating dashboards and visualizing metrics from various data sources, including Prometheus, Elasticsearch, and InfluxDB. Grafana supports a wide range of chart types and allows for the creation of highly customized dashboards.

- Kibana: The visualization component of the ELK stack (Elasticsearch, Logstash, Kibana). Kibana provides powerful features for visualizing logs and other data stored in Elasticsearch, including charts, graphs, and interactive dashboards.

- Prometheus: An open-source monitoring system that collects and stores metrics. Prometheus includes a basic expression browser for ad hoc graphs, but it is usually paired with Grafana for dashboards and more advanced visualization.

- Datadog: A commercial monitoring and analytics platform that provides comprehensive monitoring, logging, and tracing capabilities. Datadog offers pre-built dashboards for FaaS platforms and supports custom dashboard creation.

- Example Dashboard (Grafana): A Grafana dashboard for monitoring a FaaS platform could include panels for:

- Invocation Count: A time series graph showing the number of function invocations per minute or hour. This provides insight into the workload and usage patterns.

- Average Execution Time: A time series graph displaying the average execution time of functions. This helps identify performance bottlenecks and regressions.

- Error Rate: A time series graph showing the percentage of function invocations that result in errors. This helps detect and troubleshoot issues.

- Memory Usage: A graph showing the average and maximum memory usage of functions. This helps monitor resource consumption and prevent out-of-memory errors.

- Cold Start Time: A time series graph visualizing the cold start time. This is critical for serverless environments where cold starts can impact latency.

- Logs Panel: A panel that displays recent logs from the FaaS platform, with filtering and search capabilities. This allows operators to quickly identify and troubleshoot issues.

These panels would use data sources like Prometheus or Elasticsearch to retrieve metrics and logs.

Reading such a dashboard is largely a matter of watching for changes. A sudden spike in invocations could indicate a surge in user activity or a potential issue, while a rise in average execution time may point to a performance regression in a function, its dependencies, or the underlying infrastructure. A high error rate could likewise indicate a problem with the function code or its dependencies.
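The alerting component described in this section usually reduces to a rule evaluated over a recent window of data. The following sketch shows one such rule for error rate. `ErrorRateAlert` and its default thresholds are hypothetical, and a real deployment would more likely express this as an alerting rule in its monitoring system than in application code.

```python
from collections import deque


class ErrorRateAlert:
    """Fire when the error rate over the last `window` invocations exceeds a threshold."""

    def __init__(self, window=100, threshold_pct=5.0, min_samples=20):
        self._outcomes = deque(maxlen=window)  # True = success, False = error
        self.threshold_pct = threshold_pct
        self.min_samples = min_samples

    def record(self, ok):
        """Record the outcome of one invocation; old outcomes fall out of the window."""
        self._outcomes.append(ok)

    def error_rate_pct(self):
        if not self._outcomes:
            return 0.0
        errors = sum(1 for ok in self._outcomes if not ok)
        return 100 * errors / len(self._outcomes)

    def firing(self):
        # Require a minimum sample size so a single early failure does not page anyone.
        return (len(self._outcomes) >= self.min_samples
                and self.error_rate_pct() > self.threshold_pct)
```

The `min_samples` guard reflects a common design choice: with very few invocations, a single failure produces a large percentage that says little about platform health.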

Cost Optimization Strategies

Optimizing the cost of an on-premise FaaS platform is crucial for achieving a favorable return on investment and realizing the benefits of serverless computing without the financial drawbacks. This involves a multifaceted approach, considering resource utilization, infrastructure management, and efficient function design. Successful cost optimization requires a deep understanding of the factors driving costs and the implementation of targeted strategies.

Factors Affecting On-Premise FaaS Platform Costs

Several key factors contribute to the overall cost of operating an on-premise FaaS platform. These factors interact with each other, influencing the total cost of ownership (TCO). Understanding these elements is the foundation for effective cost optimization.

- Hardware Costs: The initial investment in servers, storage, and networking equipment represents a significant capital expenditure. The type and capacity of hardware directly impact performance, scalability, and energy consumption. For instance, using high-performance CPUs and ample RAM allows for faster function execution but increases hardware costs.

- Software Licensing: The licensing costs for the FaaS platform software itself, as well as any supporting software like databases, operating systems, and monitoring tools, contribute to operational expenses. The licensing model (e.g., open-source, proprietary) dictates the cost structure.

- Power and Cooling: Data centers consume significant power, and the associated cooling requirements add to operational costs. Efficient hardware and optimized resource utilization can help mitigate these expenses.

- Network Costs: Data transfer costs, especially for functions that handle large amounts of data or interact with external services, can be substantial. Network configuration and bandwidth allocation play a critical role.

- Maintenance and Administration: The costs associated with system administration, software updates, security patching, and hardware maintenance contribute to ongoing expenses. Automation and efficient management practices can reduce these costs.

- Personnel Costs: Salaries for the IT staff responsible for managing the platform, developing functions, and troubleshooting issues are a significant operational expense. The skill set and experience of the team affect efficiency and problem-solving capabilities.

- Depreciation: The depreciation of hardware assets over time needs to be considered. This is an accounting method that allocates the cost of an asset over its useful life.

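- Scalability Costs: Scaling the infrastructure to meet peak demand incurs costs for additional hardware, software licenses, and operational overhead. Because on-premise capacity must be provisioned in advance, hardware sized for peak load sits partly idle the rest of the time.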
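To see how these factors combine, the sketch below rolls several of them into a yearly figure and a cost per million invocations. It is a simplified model, not an accounting method endorsed by the guide. `CostInputs` and both functions are hypothetical, and it assumes straight-line depreciation and folds cooling into a power usage effectiveness (PUE) multiplier. Network and scaling costs are omitted for brevity.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    hardware_purchase: float   # upfront spend on servers, storage, networking
    useful_life_years: int     # depreciation period
    salvage_value: float       # expected resale value at end of life
    annual_licenses: float
    avg_power_kw: float        # average IT load
    pue: float                 # total facility power divided by IT power
    price_per_kwh: float
    annual_staff: float
    annual_maintenance: float


def annual_cost(c):
    """Return a per-category yearly cost breakdown plus the total."""
    breakdown = {
        "depreciation": (c.hardware_purchase - c.salvage_value) / c.useful_life_years,
        "licenses": c.annual_licenses,
        "power_and_cooling": c.avg_power_kw * c.pue * 24 * 365 * c.price_per_kwh,
        "staff": c.annual_staff,
        "maintenance": c.annual_maintenance,
    }
    breakdown["total"] = sum(breakdown.values())
    return breakdown


def cost_per_million_invocations(c, invocations_per_year):
    """Unit cost, useful for comparing against per-invocation cloud pricing."""
    return annual_cost(c)["total"] / invocations_per_year * 1_000_000
```

Because most on-premise costs are fixed, the per-invocation figure falls as utilization rises. That is why the strategies that follow concentrate on resource utilization.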

Strategies for Optimizing Resource Utilization and Minimizing Costs