This guide delves into the crucial retry pattern, specifically focusing on exponential backoff. Understanding how to implement this pattern effectively is essential for building robust and resilient applications. The pattern addresses intermittent failures gracefully, allowing systems to recover and continue operation. This guide will walk you through the implementation steps, offering insights into strategies for calculating delays, handling diverse errors, and optimizing performance.

Exponential backoff provides a structured approach to handling transient errors, avoiding overwhelming the system with repeated attempts. This methodology ensures that retries occur with increasing delays, minimizing the impact on resources and preventing cascading failures. This document offers practical examples, use cases, and considerations to help you implement the retry pattern effectively in your projects.

Introduction to Retry Pattern and Exponential Backoff

The retry pattern is a robust technique used in software development to handle transient failures. It allows applications to automatically attempt an operation again after a failure, increasing the chances of successful completion. This approach is particularly valuable in distributed systems where network issues, service outages, or temporary resource constraints can disrupt operations. Implementing a retry mechanism is crucial for building resilient applications that can withstand intermittent failures.

The exponential backoff strategy further enhances this resilience by gradually increasing the delay between retry attempts. This approach helps avoid overwhelming the system or the service being accessed during periods of high stress. It prioritizes the application’s ability to recover and maintain operational efficiency.

Retry Pattern Explanation

The retry pattern essentially involves attempting an operation multiple times if it fails initially. This approach is often employed when failures are likely to be transient. The pattern includes a mechanism to determine whether to retry and how many times. Successful completion of the operation terminates the retry sequence.

Exponential Backoff Strategy

The exponential backoff strategy is a crucial component of the retry mechanism. It dictates how long to wait between retry attempts. By increasing the delay exponentially after each failure, the retry mechanism avoids overwhelming the target system. This strategy helps prevent the system from being bombarded with requests during periods of instability.

Core Concepts

- Transient Failures: These are failures that are likely to resolve themselves over time. Common examples include network timeouts, dropped database connections, temporary service outages, and short-lived resource constraints.

- Retry Logic: The retry logic defines the conditions under which an operation should be retried. This often includes the number of retry attempts, the maximum time allowed for retries, and the conditions that trigger a retry attempt. This logic can be implemented as a loop with a counter, a maximum retry limit, and an appropriate backoff strategy.

- Exponential Backoff: This strategy multiplies the delay between retry attempts by a constant factor after each failure, so the delay grows exponentially with the number of attempts. This prevents overwhelming the target system with requests. For example, with a factor of 2, delays might start at 1 second, then 2 seconds, 4 seconds, 8 seconds, and so on.

- Retry Policies: Retry policies are guidelines that govern the retry process. They determine the conditions for retrying, the maximum number of attempts, and the time intervals between attempts. A robust retry policy needs to be carefully designed to balance the desire for recovery with the need to avoid excessive resource consumption.
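The concepts above can be gathered into a single policy object. The following is a minimal Python sketch under stated assumptions: the `RetryPolicy` class, its field names, the `max_delay` cap, and the choice of `TimeoutError` and `ConnectionError` as the transient error types are all illustrative, not part of any particular library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RetryPolicy:
    """Hypothetical container for the settings a retry policy typically exposes."""
    max_attempts: int = 4          # total attempts, including the first call
    initial_delay: float = 1.0     # seconds to wait before the first retry
    multiplier: float = 2.0        # exponential growth factor
    max_delay: float = 30.0        # assumed cap so delays cannot grow without bound
    retry_on: tuple = (TimeoutError, ConnectionError)  # assumed transient error types

    def should_retry(self, error: Exception, attempt: int) -> bool:
        """Retry only transient errors, and only while attempts remain."""
        return attempt < self.max_attempts and isinstance(error, self.retry_on)

    def delay_for(self, attempt: int) -> float:
        """Delay after the given (1-based) failed attempt: 1, 2, 4, 8, ... capped."""
        return min(self.initial_delay * self.multiplier ** (attempt - 1), self.max_delay)


policy = RetryPolicy()
print([policy.delay_for(n) for n in range(1, 5)])  # [1.0, 2.0, 4.0, 8.0]
```

Keeping the policy separate from the loop that executes it makes it easy to give different operations different limits without duplicating retry code.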

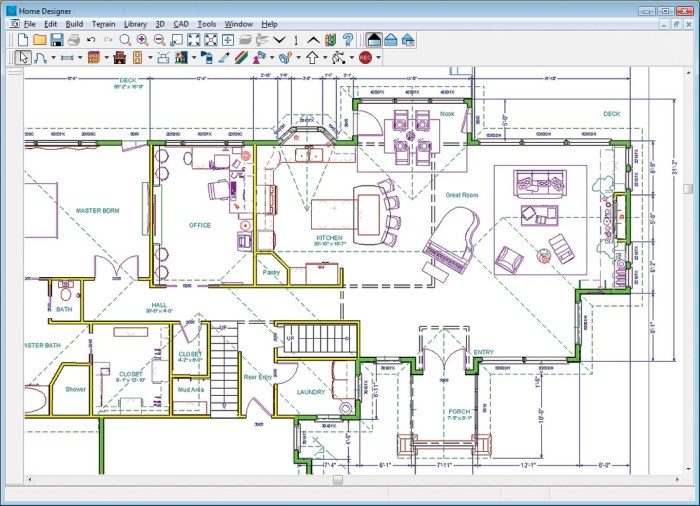

Flow Diagram

| Step | Action |
|---|---|
| 1 | Attempt the operation. |
| 2 | Check for success. If successful, exit. |
| 3 | If failed, determine if retry is allowed based on the retry policy. |
| 4 | If retry is allowed, calculate the delay using the exponential backoff strategy. |
| 5 | Wait for the calculated delay. |
| 6 | Repeat steps 1-5 until the maximum number of retries is reached or the operation succeeds. |

The flow diagram illustrates a basic retry mechanism with exponential backoff. Each subsequent retry attempt introduces an increasing delay to prevent overloading the target system. A well-defined retry policy is essential for the stability and efficiency of the system.
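The six steps of the table translate fairly directly into a loop. This is a sketch, assuming a hypothetical `run_with_retries` helper that doubles the delay after each failure and takes a caller-supplied `is_retryable` predicate as a stand-in for the retry policy; `sleep` is a parameter only so the waits can be observed without actually pausing.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    operation: Callable[[], T],
    max_attempts: int = 4,
    initial_delay: float = 1.0,
    is_retryable: Callable[[Exception], bool] = lambda error: True,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Hypothetical helper mirroring the flow table: attempt, check, decide, wait, repeat."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    delay = initial_delay
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()  # Steps 1-2: attempt the operation; exit on success
        except Exception as error:
            # Step 3: is another retry allowed (retryable error, attempts left)?
            if attempt == max_attempts or not is_retryable(error):
                raise
            sleep(delay)  # Steps 4-5: wait for the current backoff delay
            delay *= 2    # Step 6 repeats with an exponentially longer delay
    raise RuntimeError("unreachable: the loop always returns or raises")


# Usage: an operation that fails twice with a transient error, then succeeds.
calls = {"n": 0}


def flaky() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary failure")
    return "ok"


waits: list = []
result = run_with_retries(flaky, sleep=waits.append)
print(result, waits)  # ok [1.0, 2.0]
```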

Implementing Exponential Backoff

Implementing exponential backoff is a crucial aspect of the retry pattern, ensuring that repeated attempts do not overwhelm the system or the target resource. This approach gradually increases the delay between retries, allowing for a more controlled and graceful recovery from transient errors. It’s especially important when dealing with services that might be temporarily overloaded or experiencing network issues.

Strategies for Calculating Exponential Backoff Delays

Various strategies exist for calculating the exponential backoff delays. These strategies determine how the delay between retries increases with each failed attempt. A crucial consideration is the need to avoid overwhelming the target resource with excessive retry requests.

- Constant Backoff: This strategy employs a fixed delay between retries. While simple, it does not adapt to the failure: a delay that is too short keeps hammering a struggling resource, and a delay that is too long wastes time when the error would have resolved quickly.

- Linear Backoff: In this strategy, the delay increases linearly with each failed attempt. For example, the delay might increase by 1 second for each retry. This approach provides a more controlled increase in the delay, but it may not always be sufficient to avoid overwhelming the resource.

- Exponential Backoff: This approach multiplies the delay by a constant factor, typically 2, after each failed attempt, so the delay grows exponentially: for instance, 1, 2, 4, 8 seconds, and so on. It is typically the preferred strategy because early retries happen quickly, allowing fast recovery from brief errors, while later retries are spaced far enough apart to prevent an overwhelming flood of requests.
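To make the difference between the three strategies concrete, the short Python sketch below prints the first five delays each one produces. The function names and the parameter values (1-second base, 1-second linear step, factor of 2) are illustrative assumptions.

```python
def constant_delays(base: float, retries: int) -> list[float]:
    """Same wait before every retry."""
    return [base] * retries


def linear_delays(base: float, step: float, retries: int) -> list[float]:
    """Wait grows by a fixed step per retry."""
    return [base + step * i for i in range(retries)]


def exponential_delays(base: float, factor: float, retries: int) -> list[float]:
    """Wait is multiplied by a constant factor per retry."""
    return [base * factor ** i for i in range(retries)]


print(constant_delays(1, 5))        # [1, 1, 1, 1, 1]
print(linear_delays(1, 1, 5))       # [1, 2, 3, 4, 5]
print(exponential_delays(1, 2, 5))  # [1, 2, 4, 8, 16]
```

By the fifth retry the exponential schedule is already waiting more than three times as long as the linear one, which is what gives an overloaded service room to recover.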

Examples of Exponential Backoff Implementation

Implementing exponential backoff requires careful consideration of the specific programming language and the structure of your application. Here are examples in Python, Java, and JavaScript:

- Python:

```python
import random
import time


def retry_with_exponential_backoff(func, max_retries=3, initial_delay=1):
    """Call func(), retrying on any exception with exponentially growing delays (seconds)."""
    retries = 0
    delay = initial_delay
    while retries < max_retries:
        try:
            return func()
        except Exception as e:
            retries += 1
            print(f"Attempt {retries} failed: {e}")
            if retries < max_retries:
                time.sleep(delay)
                delay *= 2  # Exponential backoff: double the delay after each failure
    raise Exception("Maximum retries exceeded")


# Example usage
def my_function():
    # Simulate a potential failure.
    if random.random() < 0.5:
        raise Exception("Simulated error")
    return "Success!"


try:
    result = retry_with_exponential_backoff(my_function)
    print(result)
except Exception as e:
    print(f"Final error: {e}")
```

- Java:

```java
import java.util.concurrent.TimeUnit;

public class RetryWithExponentialBackoff {

    public static String myFunction() {
        // Simulate a potential failure.
        if (Math.random() < 0.5) {
            throw new RuntimeException("Simulated error");
        }
        return "Success!";
    }

    public static String retryWithExponentialBackoff(int maxRetries, int initialDelay)
            throws InterruptedException {
        int retryCount = 0;
        while (retryCount < maxRetries) {
            try {
                return myFunction();
            } catch (RuntimeException e) {
                retryCount++;
                System.out.println("Attempt " + retryCount + " failed: " + e);
                if (retryCount < maxRetries) {
                    // Exponential backoff: initialDelay * 2^(retryCount - 1) seconds -> 1, 2, 4, ...
                    long delay = (long) (initialDelay * Math.pow(2, retryCount - 1));
                    TimeUnit.SECONDS.sleep(delay);
                }
            }
        }
        throw new RuntimeException("Maximum retries exceeded");
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(retryWithExponentialBackoff(3, 1));
    }
}
```

- JavaScript:

```javascript
async function retryWithExponentialBackoff(func, maxRetries = 3, initialDelay = 1000) {
  let retries = 0;
  let delay = initialDelay; // milliseconds
  while (retries < maxRetries) {
    try {
      return await func();
    } catch (error) {
      retries++;
      console.error(`Attempt ${retries} failed:`, error);
      if (retries < maxRetries) {
        await new Promise((resolve) => setTimeout(resolve, delay));
        delay *= 2; // Exponential backoff: double the delay after each failure
      }
    }
  }
  throw new Error("Maximum retries exceeded");
}

// Example usage
async function myFunction() {
  // Simulate a potential failure.
  if (Math.random() < 0.5) throw new Error("Simulated error");
  return "Success!";
}

retryWithExponentialBackoff(myFunction)
  .then((result) => console.log(result))
  .catch((error) => console.error("Final error:", error));
```

Comparison of Backoff Algorithms

A comparison of the different backoff algorithms can help in understanding their strengths and weaknesses.

| Algorithm | Description | Pros | Cons |
|---|---|---|---|
| Constant Backoff | Fixed delay between retries. | Simple to implement. | Does not adapt to the failure: a short delay can overwhelm a struggling resource, while a long delay wastes time when errors resolve quickly. |
| Linear Backoff | Delay increases linearly with each retry. | More controlled than constant backoff. | Delay grows slowly, so it may not back off enough during prolonged overload. |
| Exponential Backoff | Delay increases exponentially with each retry. | Handles transient errors effectively, prevents overwhelming resources. | Requires careful tuning of initial delay and maximum retries to avoid unnecessary delays. |

Handling Different Error Types in a Retry Mechanism

Not every error deserves the same treatment, so a robust retry mechanism needs to distinguish between error types.

- Specific Error Handling: Your retry mechanism should be tailored to the specific errors expected in your application. If you anticipate particular error types, create specific handlers for them. For example, a network timeout error might warrant a longer delay than a database connection error.

- Error Logging: Detailed logging of error types, retry attempts, and delays is essential for monitoring and debugging issues. This logging helps identify patterns in failures and improve the robustness of your system.
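A minimal sketch of type-specific handling with logging, in Python. The delay table is hypothetical and simply encodes the example above (network timeouts waiting longer than dropped database connections); any error type not listed is treated as non-retryable. The standard `logging` module records each attempt, the error type, and the chosen delay.

```python
import logging
import time
from typing import Optional

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("retry")

# Hypothetical base delays (seconds) per error type; unlisted types are not retried.
BASE_DELAY_BY_ERROR = {
    TimeoutError: 2.0,     # network timeout: give the network longer to recover
    ConnectionError: 0.5,  # e.g. a dropped database connection: reconnect sooner
}


def base_delay_for(error: Exception) -> Optional[float]:
    """Return the configured base delay for this error, or None if it should not be retried."""
    for error_type, delay in BASE_DELAY_BY_ERROR.items():
        if isinstance(error, error_type):
            return delay
    return None


def call_with_typed_retries(operation, max_attempts=3, sleep=time.sleep):
    """Retry operation() with a per-error-type base delay that doubles on each attempt."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception as error:
            base = base_delay_for(error)
            if base is None or attempt == max_attempts:
                log.error("giving up after attempt %d: %r", attempt, error)
                raise
            delay = base * 2 ** (attempt - 1)
            log.warning("attempt %d failed with %s; retrying in %.1fs",
                        attempt, type(error).__name__, delay)
            sleep(delay)
```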

Error Handling and Retry Logic

Implementing a retry mechanism requires careful consideration of how to determine when and how to retry failed operations. Effective error handling within the retry logic is crucial for ensuring application resilience and preventing cascading failures. This section details strategies for determining retry conditions, handling retry failures, and creating robust error handling for retry mechanisms. The retry pattern’s efficacy hinges on its ability to gracefully handle transient errors.

Robust error handling within the retry logic enables applications to recover from these transient issues without resorting to potentially harmful or inefficient responses. This section delves into the specifics of categorizing and classifying errors for effective retry logic.

Strategies for Determining Retry Conditions

Properly defining when to retry is critical for a robust retry mechanism. A well-designed retry strategy balances the need to recover from transient errors with the prevention of unnecessary retries that could lead to resource exhaustion.

- Time-Based Retries: This strategy bounds retries by the total time elapsed since the initial failure: once the time budget is exhausted, the operation is abandoned even if attempts remain. Within that budget, delays commonly grow through exponential backoff; for example, if the initial retry delay is 1 second, the next might be 2 seconds, then 4 seconds, and so on. This approach helps to avoid overwhelming the system with rapid retry attempts and provides time for underlying resources to recover.

- Attempt-Based Retries: A fixed number of retry attempts can be predefined. This approach is useful when the maximum number of attempts is known or when the potential for infinite retry loops is a concern. For instance, if an operation can fail due to network congestion, a finite number of retries prevents the system from continually attempting the operation during periods of high load.

- Error-Code-Based Retries: This strategy focuses on retrying operations based on specific error codes returned by the target service or system. For example, a database timeout error might be retried, whereas a database integrity violation error would likely not be. This approach allows for targeted retries, avoiding redundant attempts for non-recoverable errors.
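The three strategies are often combined. The following Python sketch assumes a hypothetical `send()` callable that returns an HTTP-style `(status, body)` pair and an assumed set of retryable status codes (408, 429, and several 5xx codes); a code such as 400 or 409 stands in for a non-recoverable error like an integrity violation. The loop stops at whichever condition is hit first: success, a non-retryable code, the attempt cap, or the time budget.

```python
import time

# Assumed policy: these "try again later" HTTP statuses are retryable; other
# errors (e.g. 400 Bad Request, 409 Conflict) are treated as permanent.
RETRYABLE_STATUS = {408, 429, 500, 502, 503, 504}


def retry_request(send, max_attempts=5, time_budget_s=10.0, initial_delay_s=0.5,
                  clock=time.monotonic, sleep=time.sleep):
    """Call the hypothetical send() -> (status, body) until it succeeds, returns a
    non-retryable status, runs out of attempts, or would exceed the time budget."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    start = clock()
    delay = initial_delay_s
    for attempt in range(1, max_attempts + 1):       # attempt-based limit
        status, body = send()
        if status < 400 or status not in RETRYABLE_STATUS:
            return status, body                       # success, or the error code says stop
        out_of_attempts = attempt == max_attempts
        out_of_time = clock() - start + delay > time_budget_s  # time-based limit
        if out_of_attempts or out_of_time:
            return status, body
        sleep(delay)
        delay *= 2                                    # exponential backoff between tries
    raise RuntimeError("unreachable: the loop always returns")
```

`clock` and `sleep` are injectable only so the logic can be exercised without real waiting; `time.monotonic` is used by default because it is unaffected by wall-clock adjustments.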

Approaches to Handling Retry Failures

Effective handling of retry failures is essential for maintaining application stability and preventing unexpected behavior. Different approaches exist to deal with situations where a retry attempt still fails.

- Logging and Monitoring: Comprehensive logging of retry attempts, including timestamps, error codes, and retry counts, provides crucial information for troubleshooting and identifying patterns of failures. Monitoring tools can be integrated to track the success or failure of retry attempts and to alert administrators to critical issues. This ensures the application’s behavior is understood, and issues are addressed promptly.

- Circuit Breaker Pattern: When a series of retry attempts fail, a circuit breaker can be employed to stop further attempts and isolate the problematic service. This protects the application from continuously attempting operations that consistently fail. The circuit breaker opens (stops forwarding calls) after a predefined number of failures; after a cooldown period it lets a trial request through, and if that request succeeds, the breaker closes again and normal traffic resumes.

- Degradation Strategies: If a retry attempt fails repeatedly, the application can degrade the service or operation to prevent further errors. For instance, if a database query fails due to high load, the application can return cached data or a default value to minimize impact on users and allow for recovery attempts.
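The circuit breaker and degradation strategies fit together naturally: while the breaker is open, the application serves a fallback instead of calling the failing service. The class below is a deliberately minimal, single-threaded Python sketch; the `CircuitBreaker` name, its thresholds, and the idea of passing a fallback callable are assumptions for illustration. Libraries such as Resilience4j provide production-grade implementations.

```python
import time


class CircuitBreaker:
    """Minimal sketch: opens after `failure_threshold` consecutive failures,
    serves the fallback while open, and allows one trial call after `cooldown`."""

    def __init__(self, failure_threshold=3, cooldown=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, operation, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown:
                return fallback()  # open: fail fast and serve a degraded result
            # Cooldown elapsed: half-open, let this call through as a trial.
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip, or re-trip after a failed trial
            return fallback()
        self.failures = 0
        self.opened_at = None  # success closes the circuit
        return result
```

In use, the fallback would return cached data or a default value, as described above. This version keeps no locks, so concurrent callers would need synchronization around `call`.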

Creating Robust Error Handling for Retry Mechanisms

Robust error handling within a retry mechanism requires careful consideration of error types and potential cascading effects. This includes using appropriate exception handling, logging mechanisms, and retry strategies.

- Exception Handling: Implementing robust exception handling is critical. The code should explicitly catch and handle various types of exceptions, providing appropriate responses and logging to facilitate debugging and recovery. Using exception types allows for better differentiation in handling errors.

- Error Categorization: Categorizing errors into transient and permanent categories is crucial for implementing the retry logic. Transient errors, such as network timeouts, can be retried, while permanent errors, such as database integrity violations, should not be. This categorization guides the decision-making process during retry attempts.

Categorizing and Classifying Errors for Retry Logic

Properly categorizing errors allows for targeted retries and prevents unnecessary attempts on non-recoverable failures.

- Transient Errors: These are errors that are likely to be resolved by retrying the operation. Examples include network timeouts, service outages, and temporary database lock conflicts. These should be retried with exponential backoff.

- Permanent Errors: These errors indicate a fundamental issue that cannot be resolved by retrying. Examples include invalid data input, database integrity violations, and authorization failures. These errors should not be retried, and appropriate actions should be taken to address the underlying cause.
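Categorization is usually encoded as a predicate that the retry loop consults before waiting. In this Python sketch, `LockConflictError` and `IntegrityViolationError` are hypothetical stand-ins for driver-specific exceptions, and mapping `ValueError` (invalid input) and `PermissionError` (authorization failure) to permanent failures is an assumption; unknown errors default to not retrying.

```python
class LockConflictError(Exception):
    """Hypothetical: a temporary database lock conflict (transient)."""


class IntegrityViolationError(Exception):
    """Hypothetical: a database integrity violation (permanent)."""


# Assumed classification; adapt the tuples to the exceptions your drivers raise.
TRANSIENT_ERRORS = (TimeoutError, ConnectionError, LockConflictError)
PERMANENT_ERRORS = (ValueError, PermissionError, IntegrityViolationError)


def is_transient(error: Exception) -> bool:
    """True if retrying with backoff may help; unknown errors default to False."""
    if isinstance(error, PERMANENT_ERRORS):
        return False
    return isinstance(error, TRANSIENT_ERRORS)
```

Defaulting unknown errors to "permanent" is the conservative choice: an unexpected failure surfaces immediately instead of being retried blindly.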

Considerations for Exponential Backoff

Implementing exponential backoff for retry mechanisms is crucial for ensuring robustness and avoiding overwhelming system resources. However, careful consideration must be given to the potential impact on overall system performance. Effective strategies need to balance the need for retries with the avoidance of excessive delays and resource consumption. Appropriate implementation of exponential backoff requires understanding the nuances of system behavior and network conditions.

This involves setting sensible initial backoff delays, establishing maximum retry attempts, and adapting the strategy based on observed system performance. Failure to consider these factors can lead to system instability and poor user experience.

Impact on System Performance

Exponential backoff, while beneficial for handling transient errors, can significantly impact system performance if not managed properly. Prolonged delays due to increasing backoff intervals can lead to increased latency for users, decreased throughput, and potential service degradation. Careful monitoring of the impact on system resources, such as CPU usage, network bandwidth, and memory allocation, is vital.

Determining Initial Backoff Delay

An appropriate initial backoff delay is crucial for a balanced retry mechanism. This delay should be long enough to allow for the transient error to resolve, but short enough to minimize the impact on user experience. Observing the typical duration of transient errors in the system is a critical first step. Analyzing historical data, such as error logs, can provide valuable insights.

A suitable starting point is often a small value, such as 100 milliseconds, and can be adjusted based on the observed behavior of the system and the type of errors encountered. This should be determined empirically, adjusting the initial delay upward or downward as needed to achieve optimal performance.
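A quick calculation shows what a starting value implies for latency. Assuming a 100 ms initial delay, a multiplier of 2, and at most 5 attempts (so 4 waits), the Python sketch below computes the wait schedule and the worst-case total time spent sleeping, excluding the duration of the attempts themselves. The `backoff_schedule` helper is illustrative.

```python
def backoff_schedule(initial_ms: int, multiplier: int, max_attempts: int) -> list[int]:
    """Waits (ms) between attempts; there are max_attempts - 1 of them."""
    return [initial_ms * multiplier ** i for i in range(max_attempts - 1)]


waits = backoff_schedule(100, 2, 5)
print(waits)       # [100, 200, 400, 800]
print(sum(waits))  # 1500 ms of added latency in the worst case
```

If 1.5 seconds of extra latency is acceptable for the operation, these settings are a reasonable starting point; if not, lower the attempt count or the initial delay and re-check against observed error durations.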

Maximum Retry Attempts

Limiting the number of retry attempts is essential to prevent indefinite delays and resource exhaustion. Setting a maximum retry count ensures that the system doesn’t get stuck in an endless loop of retries. Defining a reasonable maximum retry limit is a crucial part of the strategy, as exceeding it could indicate a more significant underlying problem that requires attention.

This should be a configurable value, allowing for adjustments based on the specific error type and context. For example, network timeouts might warrant a higher retry limit than database connection errors.
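One way to keep the limit configurable per error type is a small lookup table with a conservative default. The error-kind keys, the numbers, and the `RetryLimits` type below are hypothetical placeholders, sketched in Python; in practice they would be loaded from configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RetryLimits:
    max_attempts: int       # total attempts, including the first call
    initial_delay_s: float  # first backoff delay in seconds


# Hypothetical values, e.g. loaded from a config file or environment variables.
LIMITS = {
    "network_timeout": RetryLimits(max_attempts=5, initial_delay_s=0.1),
    "db_connection": RetryLimits(max_attempts=3, initial_delay_s=0.1),
}
DEFAULT_LIMITS = RetryLimits(max_attempts=1, initial_delay_s=0.0)  # no retries


def limits_for(error_kind: str) -> RetryLimits:
    """Look up the limits for an error kind, falling back to 'do not retry'."""
    return LIMITS.get(error_kind, DEFAULT_LIMITS)
```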

Considerations for Backoff Strategy Selection

The choice of an appropriate backoff strategy should take into account various factors influencing system behavior. This section presents a table outlining key considerations.

| Consideration | Description | Example |
|---|---|---|
| Network Conditions | Network conditions, such as intermittent connectivity or high latency, can significantly affect the effectiveness of exponential backoff. A strategy that accounts for these fluctuations is necessary. | A service experiencing network congestion might benefit from a higher initial backoff delay. |
| Resource Limits | The system’s available resources (CPU, memory, disk space) have a direct impact on the strategy’s effectiveness. Strategies must consider resource consumption during retries. | If a system is nearing its CPU capacity, a longer initial delay and fewer maximum retry attempts may be required. |
| System Load | System load can influence the impact of retries. Higher load may necessitate a more aggressive backoff strategy to avoid overwhelming the system. | During peak hours, a longer initial backoff delay and a lower maximum retry limit might be preferable. |
| Error Rate | The frequency of errors plays a critical role in determining the appropriate backoff strategy. Strategies must adapt to changing error rates. | If error rates are consistently high, a higher initial backoff delay and lower maximum retry limit are likely necessary. |

Practical Examples and Use Cases

The retry pattern with exponential backoff is a valuable tool for robust applications, especially when dealing with external dependencies or intermittent network issues. It allows applications to gracefully handle transient errors, preventing application crashes and maintaining user experience. By strategically delaying retries with an increasing time interval, the pattern avoids overwhelming the system or the resource being accessed.

Web Application Scenarios

Implementing the retry pattern in web applications is crucial for maintaining a positive user experience. Consider a scenario where a web application needs to fetch data from a remote API. If the API call fails due to a temporary network outage or server overload, retrying the request with exponential backoff can prevent the application from failing and allow the user to access the data.

- API Calls: Web applications frequently interact with external APIs. If an API call fails, retrying with exponential backoff can handle transient network issues or server congestion. The delay between retries gradually increases, preventing excessive requests and potential overload of the API. This is vital for maintaining a stable connection and avoiding errors during data retrieval.

- Database Interactions: Database queries can encounter transient issues like network problems or temporary database lockups. The retry pattern can help maintain application availability by gracefully handling such issues, giving database operations a chance to succeed once the condition clears.

- File Transfers: Downloading or uploading large files might encounter network interruptions or server issues. Retrying file transfers with exponential backoff improves the likelihood that the transfer completes successfully.

Real-World Use Cases

The retry pattern with exponential backoff has numerous real-world applications across various industries. Here are some examples:

- E-commerce Platforms: E-commerce websites rely heavily on external services like payment gateways and inventory systems. If these services are temporarily unavailable, retrying payment processing or stock checks with exponential backoff helps keep the customer experience positive.

- Social Media Platforms: Social media applications frequently interact with external APIs for data synchronization or user activity updates. The retry pattern with exponential backoff can ride out temporary service disruptions, helping keep data synchronized and preserving the user experience.

- Financial Institutions: Financial transactions often require multiple API calls. Retry mechanisms with exponential backoff help transactions complete despite transient errors in external systems, but only when the retried operations are idempotent (for example, by sending the same idempotency key with every attempt); otherwise a retried payment request could be executed twice.

Benefits in Web Applications

The retry pattern, combined with exponential backoff, significantly enhances the reliability and stability of web applications.

- Improved User Experience: By automatically retrying failed operations, the application can prevent frustrating errors and maintain a seamless user experience, even during temporary service disruptions.

- Increased Application Availability: The retry pattern can significantly increase the uptime and availability of the application, reducing the impact of transient errors.

- Reduced Errors: By automatically handling transient errors, the retry pattern can minimize the occurrence of application errors and improve overall application health.

Example Implementation (Conceptual)

A conceptual illustration of how to implement the retry pattern in Python:

```python
import time


def retry_with_exponential_backoff(func, *args, **kwargs):
    max_attempts = 3
    initial_delay = 1  # seconds
    for attempt in range(max_attempts):
        try:
            return func(*args, **kwargs)
        except Exception as e:
            if attempt == max_attempts - 1:
                break  # final attempt failed; no point waiting again
            delay = initial_delay * (2 ** attempt)  # 1s, 2s, 4s, ...
            print(f"Attempt {attempt + 1} failed ({e}). Retrying in {delay} seconds...")
            time.sleep(delay)
    raise Exception("All attempts failed.")
```

This code snippet demonstrates a general retry mechanism. The `retry_with_exponential_backoff` function attempts to execute the given function (`func`) repeatedly with increasing delays.

The core logic involves a loop that tries to execute the function. If it fails, it calculates the delay based on the attempt number and exponential backoff, waits for the calculated delay, and tries again.

Implementing Retry Pattern with Libraries

Implementing the retry pattern with libraries significantly simplifies the process and improves code maintainability. Libraries provide robust retry mechanisms, abstracting away the complexity of exponential backoff and error handling. This allows developers to focus on the core logic of their application, rather than getting bogged down in low-level retry implementation details.

Popular Retry Libraries

Several popular libraries provide retry mechanisms, each with its strengths and weaknesses. Choosing the right library depends on the specific needs of the application, such as the desired level of customization and the features offered.

- Retry-4j: A Java library offering comprehensive retry capabilities. It allows for fine-grained control over retry logic, including configurable retry strategies, exponential backoff, and various error handling mechanisms, which makes it well-suited for applications that need detailed control over the retry process.

- Resilience4j: A Java library designed for building resilient systems. It provides a collection of decorators, including retry, circuit breaker, bulkhead, and rate limiter. This library is a powerful choice for handling transient errors in distributed systems. Its emphasis on resilience extends beyond retry, encompassing various fault tolerance patterns within a unified framework.

- Spring Retry: A library from the Spring portfolio that integrates with Spring Boot and the wider Spring ecosystem. It simplifies retry configuration and integration with Spring’s transaction management. Spring Retry is ideal for Spring-based applications, leveraging Spring’s dependency injection and configuration features for seamless retry implementation.

- Polly: A .NET library offering a fluent API for implementing various resilience patterns, including retry. Its concise, expressive syntax keeps retry configurations straightforward, making it a good choice for .NET projects that need a clear retry mechanism.

Using a Library to Implement Retry with Exponential Backoff

Integrating a retry library typically involves defining a retry policy, which dictates the conditions under which a method should be retried. This policy often incorporates exponential backoff, ensuring that retry attempts are spaced out over time.

For example, using Spring Retry, you define a retry annotation, specifying the retry attempts and the backoff strategy. This approach allows for handling transient failures gracefully without interrupting the main application flow.

Choosing the Right Library

The choice of retry library depends on several factors:

- Programming Language: Libraries like Retry-4j and Resilience4j target Java, while Polly is for .NET. Spring Retry is specific to Spring-based Java applications. Choosing the appropriate library is crucial for compatibility with your project’s technological stack.

- Complexity Requirements: For basic retry scenarios, Polly’s fluent API might suffice. However, for complex retry strategies involving multiple conditions and error types, a library like Retry-4j or Resilience4j offers more flexibility.

- Existing Ecosystem: If your project leverages Spring, Spring Retry integrates seamlessly with the existing framework. Otherwise, consider libraries that provide a clean API and strong community support.

Example Integration (Spring Retry)

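```java
import org.springframework.retry.annotation.Backoff;
import org.springframework.retry.annotation.Retryable;

@Retryable(value = MyException.class, maxAttempts = 3,
           backoff = @Backoff(delay = 1000, multiplier = 2))
public void myMethod() {
    // … your method logic …
}
```

This Spring Retry example demonstrates annotating a method (`myMethod`) to be retried. `@Retryable` specifies the exception type to retry on (`MyException`), the maximum number of attempts (3, counting the initial call), and the backoff strategy (exponential backoff with an initial delay of 1000 ms and a multiplier of 2). Retry support must also be enabled, for example with `@EnableRetry` on a configuration class.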

This concise approach effectively handles transient errors, ensuring application resilience.

Monitoring and Logging

Effective monitoring and logging are crucial components of a robust retry mechanism. They provide visibility into the system’s behavior, enabling you to identify potential issues, track the success or failure of retry attempts, and diagnose problems efficiently. This visibility is vital for understanding the reasons behind retry failures and improving the overall system resilience. Comprehensive logging and monitoring of retry attempts and failures empower proactive problem resolution and optimization of the retry strategy.

By closely observing retry patterns, developers can identify trends, pinpoint bottlenecks, and refine the exponential backoff algorithm to minimize service disruptions and improve performance.

Importance of Logging Retry Attempts

Logging retry attempts is essential for understanding the frequency and nature of failures. Detailed logs provide valuable insight into the root causes of failures, allowing for targeted remediation efforts. This data helps identify patterns, predict potential issues, and improve the overall reliability of the system. Without logging, troubleshooting failures and understanding the effectiveness of the retry mechanism becomes significantly more difficult.

This is crucial for maintaining system health and ensuring uninterrupted service.

Monitoring Retry Attempts and Failures

Monitoring retry attempts and failures involves tracking various metrics. These metrics can include the number of retries performed, the time taken for each retry, the error codes encountered, and the eventual success or failure of the operation. This data can be collected and analyzed to identify problematic requests or server conditions. Tools for monitoring retry attempts and failures can be implemented using logging frameworks or custom monitoring systems.

These tools should allow for filtering and aggregation of the retry data.
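As a sketch of the metrics described above, the Python class below keeps in-process counters for retries per operation, error types, final outcomes, and total time spent waiting. The `RetryMetrics` name and its fields are assumptions; a real system would export such counters to its monitoring backend rather than keep them in memory.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class RetryMetrics:
    """Hypothetical in-process counters for retry behavior."""
    retries: Counter = field(default_factory=Counter)   # retries per operation name
    errors: Counter = field(default_factory=Counter)    # error type names seen
    outcomes: Counter = field(default_factory=Counter)  # (operation, "success" | "failure")
    total_wait_s: float = 0.0                           # time spent in backoff

    def record_retry(self, operation: str, error: Exception, delay_s: float) -> None:
        self.retries[operation] += 1
        self.errors[type(error).__name__] += 1
        self.total_wait_s += delay_s

    def record_outcome(self, operation: str, succeeded: bool) -> None:
        self.outcomes[(operation, "success" if succeeded else "failure")] += 1


metrics = RetryMetrics()
metrics.record_retry("fetch_user", TimeoutError("slow"), 0.5)
metrics.record_retry("fetch_user", TimeoutError("slow"), 1.0)
metrics.record_outcome("fetch_user", succeeded=True)
print(metrics.retries["fetch_user"], metrics.errors["TimeoutError"], metrics.total_wait_s)
# 2 2 1.5
```

Because the counters are keyed by operation and error type, they support the filtering and aggregation mentioned above, for example to find which operation accounts for most of the backoff time.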

Creating Effective Logging Mechanisms

Effective logging mechanisms should capture all relevant information about retry attempts. This includes timestamps, request IDs, the specific operation being retried, the error messages received, the backoff duration, and the success or failure status. These logs should be structured in a way that facilitates easy searching and analysis. Consider using a structured logging format, such as JSON, to make the data easily parsable by monitoring tools.

Implementing a centralized logging system is beneficial for aggregating and analyzing data from various components of the application.
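A structured log entry might look like the following Python sketch, which emits one JSON object per attempt with the fields listed above. The field names, the `log_retry_attempt` helper, and the sample request ID are illustrative assumptions, not a standard schema.

```python
import json
import logging
import time
from typing import Optional

logger = logging.getLogger("retry")


def log_retry_attempt(request_id: str, operation: str, attempt: int,
                      error: Optional[Exception], backoff_s: float,
                      succeeded: bool) -> str:
    """Emit one JSON log line describing a single attempt; returns the line."""
    record = {
        "timestamp": time.time(),
        "request_id": request_id,
        "operation": operation,
        "attempt": attempt,
        "error_type": type(error).__name__ if error is not None else None,
        "error_message": str(error) if error is not None else None,
        "backoff_s": backoff_s,
        "status": "success" if succeeded else "failure",
    }
    line = json.dumps(record)
    logger.info(line)
    return line


line = log_retry_attempt("req-123", "fetch_user", 2,
                         TimeoutError("read timed out"), 1.0, False)
```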

Best Practices for Monitoring and Analyzing Retry Behavior

Best practices for monitoring and analyzing retry behavior include regularly reviewing logs for patterns of failure, analyzing the error messages to identify common causes, and correlating retry attempts with external events, such as system load or network conditions. This analysis helps determine if the retry mechanism is functioning as expected and if adjustments to the backoff strategy are necessary.

Alerting mechanisms can be implemented to notify administrators of significant retry failures or unusual retry patterns. By systematically reviewing logs and monitoring data, you can gain valuable insights into the system’s performance and identify opportunities for improvement. Regularly analyzing this data can also reveal potential vulnerabilities in the system’s design.

Security Considerations

Implementing retry mechanisms, while crucial for resilience, introduces potential security vulnerabilities if not carefully managed. Properly configuring retry policies is paramount to prevent abuse and maintain system integrity. Careless implementation can lead to denial-of-service attacks or unintended exposure of sensitive data. Robust security considerations are vital in the design and implementation of retry patterns. A well-designed retry mechanism should balance the need for reliability with the prevention of malicious exploitation.

Potential Security Vulnerabilities

Retry mechanisms can be exploited by malicious actors to overwhelm systems. These vulnerabilities arise mainly from unbounded or overly aggressive retry policies and the lack of proper rate limiting. An attacker could flood a system with requests designed to fail, leveraging the retry logic to multiply the load each request generates. Improperly configured retry policies can also inadvertently create opportunities for attackers to bypass rate limits, potentially leading to service disruptions.

Mitigation Strategies

Effective mitigation strategies are crucial to address the security risks associated with retry mechanisms. These strategies involve a multi-faceted approach to safeguard against potential exploits.

- Rate Limiting: Implementing rate limiting is a crucial mitigation strategy. It caps the number of requests a client can make within a specific timeframe, which prevents attackers from overwhelming the system with a high volume of requests. For instance, if a client makes too many requests in a short period, the system can reject further requests (for HTTP APIs, typically with status 429 Too Many Requests). This blocks abuse attempts and the surge in traffic that could otherwise lead to a denial-of-service attack.

- IP Address Filtering: Filtering requests based on IP addresses can help identify and block malicious actors. This involves maintaining a database of known malicious IP addresses and blocking requests originating from them, which stops those sources from exploiting the retry mechanism. Because a blocklist only catches addresses that are already known, attacks spread across many compromised systems can still get through, so filtering complements rate limiting rather than replacing it.

- Request Validation: Implementing robust request validation is critical to preventing malicious input. This involves checking that every request received by the system is well-formed before it is processed. Validating data types, lengths, and formats can help detect malicious payloads and prevent their execution. For instance, a request that includes a suspicious string pattern can be rejected outright, and the rejection should be reported as a permanent error so that clients do not keep retrying it.

- Token-Based Authentication: Employing token-based authentication mechanisms can enhance the security of retry requests. Each client is issued a unique token, and the system validates it before processing any request, including retries. This adds a layer of security by verifying the identity of the client making the request and gives rate limits a reliable per-client key.
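To make the rate-limiting idea concrete, here is a minimal token-bucket sketch in Python. Token bucket is one common rate-limiting algorithm among several, not necessarily the one this guide has in mind. The client key (a token, client ID, or IP address) and the `rate`/`capacity` values are hypothetical. The clock is injectable so the behaviour can be tested deterministically. This is a single-process, non-thread-safe illustration, not a production limiter.

```python
import time


class TokenBucket:
    """Refills `rate` tokens per second, up to `capacity`; each request costs one token."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Add the tokens earned since the last check, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller rejects the request, e.g. with HTTP 429


class RateLimiter:
    """Keeps one bucket per client key (hypothetical: token, client ID, or IP)."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.buckets = {}

    def allow(self, client_key):
        bucket = self.buckets.get(client_key)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.capacity, self.clock)
            self.buckets[client_key] = bucket
        return bucket.allow()
```

With `rate=1, capacity=2`, a client can burst two requests, is then refused, and regains one request per second. Retried requests pass through the same bucket, so retries cannot be used to bypass the limit.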

Avoiding Abuse and Denial-of-Service Attacks

Careful configuration of the retry mechanism is essential to avoid abuse and denial-of-service (DoS) attacks. Exponential backoff spaces out retries, but on its own it does not bound how many retries occur or how long they continue, so it must be paired with explicit limits. Otherwise a malicious user could intentionally trigger numerous failed requests and rely on the retry logic to multiply the resulting load on the system.

- Time-Based Limits: Setting time-based limits on retries can help mitigate DoS attacks. This involves setting a maximum time window for retry attempts. If a request fails repeatedly within this timeframe, the system can reject further attempts from that source, preventing exploitation.

- Retry Count Limits: Implementing retry count limits is another essential measure. This involves setting a maximum number of retry attempts for each request. Once the limit is reached, the request is considered failed, preventing the system from being overloaded by failed requests from a single source.

- Request Queuing: Employing request queuing can manage the flow of requests and prevent overloading. This technique involves placing incoming requests in a bounded queue and processing them at a controlled rate. When the queue is full, new requests are rejected instead of accepted, so a sudden surge cannot overwhelm the system. This helps prevent DoS attacks.
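The time-based and retry count limits described above can be enforced together in the retry loop itself. The following Python sketch stops at whichever limit is hit first. The function name, `RetryBudgetExceeded`, and the default values are hypothetical, and `sleep`/`clock` are injectable only so the behaviour can be tested without real waiting.

```python
import time


class RetryBudgetExceeded(Exception):
    """Raised when the attempt limit or the time budget is used up."""


def call_with_limits(operation, max_attempts=5, time_budget=10.0,
                     base_delay=0.5, sleep=time.sleep, clock=time.monotonic):
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    deadline = clock() + time_budget
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception as exc:  # in practice, catch only transient error types
            last_error = exc
        if attempt == max_attempts:
            break  # retry count limit reached
        delay = base_delay * 2 ** (attempt - 1)  # exponential backoff
        if clock() + delay > deadline:
            break  # the next wait would overrun the time budget
        sleep(delay)
    raise RetryBudgetExceeded(f"gave up after {attempt} attempt(s)") from last_error
```

With a 2-second budget and a 0.5-second base delay, an always-failing operation is tried at t = 0, 0.5, and 1.5 seconds. The next wait (2 seconds) would overrun the budget, so the loop stops after three attempts even though five were allowed.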

Security Aspects in Retry Pattern Implementation

A comprehensive security approach necessitates integrating security measures into the retry mechanism’s implementation. The retry mechanism should be designed with security in mind from the outset. This involves careful consideration of error handling, rate limiting, and authentication.

- Robust Error Handling: Implementing robust error handling is essential. Error handling should not only handle transient failures but also identify and block malicious requests or patterns.

- Monitoring and Logging: Monitoring and logging are crucial for detecting anomalies and potential security breaches. By tracking retry attempts and associated errors, security teams can proactively identify and respond to malicious activity.

Advanced Topics and Optimization

The retry pattern, coupled with exponential backoff, provides a robust mechanism for handling transient failures. However, for optimal performance and resilience in complex systems, advanced strategies and optimizations are crucial. This section covers retry techniques beyond plain exponential backoff, performance enhancement strategies, and environment-specific optimizations. These advanced mechanisms extend the basic exponential backoff approach to a wider range of scenarios.

This involves tailoring the retry strategy to specific error types, resource constraints, and system dynamics. Understanding and implementing these advanced techniques significantly improves the reliability and performance of applications facing intermittent failures.

Alternative Retry Strategies

Beyond exponential backoff, several alternative retry strategies can be employed. These strategies adapt to various failure patterns and provide more nuanced responses.

- Jitter Introduction: Adding a random delay (jitter) to the exponential backoff helps avoid overwhelming a service that is trying to recover. Without jitter, clients that failed at the same moment retry in lockstep, producing a synchronized retry storm that can cause cascading failures. For example, if the computed delay is 1 second, adding jitter of ±0.5 seconds spreads the next retry across a range of 0.5 to 1.5 seconds, so clients no longer retry at the same time and the load is distributed more evenly.

- Adaptive Backoff: This strategy dynamically adjusts the backoff delay based on observed failure patterns. If a service fails consistently over a period, adaptive backoff increases the delay significantly, reducing pressure on the struggling service. Conversely, as failures subside, the delay decreases so that requests succeed sooner. This approach adapts more intelligently to the current state of the system.

- Error-Specific Backoff: This strategy applies different backoff settings to different types of errors, so the retry mechanism matches the nature of the failure. For instance, a temporary network timeout might warrant a shorter delay than a database connection failure, which usually takes longer to resolve.
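The following Python sketch combines jitter with error-specific backoff. The per-error base delays, the 30-second cap, and the function names are hypothetical values chosen for illustration. It uses the "full jitter" variant, which draws the delay uniformly between zero and the capped exponential delay. This is one common choice and differs from adding a fixed jitter range to the delay.

```python
import random

# Hypothetical per-error base delays in seconds; tune them for your own services.
BASE_DELAYS = {
    TimeoutError: 0.2,     # short network hiccups usually clear quickly
    ConnectionError: 1.0,  # e.g. a refused database connection often needs longer
}
DEFAULT_BASE = 0.5
MAX_DELAY = 30.0  # cap so the exponential term cannot grow without bound


def base_delay_for(error):
    """Pick the base delay for the first matching error type."""
    for error_type, base in BASE_DELAYS.items():
        if isinstance(error, error_type):
            return base
    return DEFAULT_BASE


def backoff_delay(attempt, error, rng=random):
    """Delay before retry number `attempt` (1-based), using full jitter:
    a uniform random value between 0 and the capped exponential delay."""
    exp_delay = min(MAX_DELAY, base_delay_for(error) * 2 ** (attempt - 1))
    return rng.uniform(0, exp_delay)
```

Because each client draws its own random delay, clients that failed together spread their retries across the whole window instead of retrying simultaneously.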

Performance Improvement Techniques

Optimizing the retry mechanism for performance involves several key techniques.

- Caching: Caching frequently accessed data or results can reduce the frequency of calls to external services, lowering the likelihood of retries. This is especially beneficial when the external service is prone to temporary outages. For example, a caching layer for frequently accessed product details can dramatically reduce retries.

- Throttling: Implementing throttling mechanisms prevents overwhelming the target service with excessive retry attempts. Throttling limits the rate at which retry requests are sent and so prevents service overload. For instance, the client can cap the number of retry attempts per second sent to a specific service.

- Asynchronous Retries: Executing retries asynchronously allows the application to continue processing other tasks without blocking. This is particularly useful when dealing with potentially long-running retries. For example, a background task queue for retries ensures other application operations continue without interruption.
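As an illustration of asynchronous retries, here is a minimal sketch using Python's standard `asyncio` module. `retry_async` and the demo coroutines are hypothetical names. The key point is that `await asyncio.sleep(...)` yields control to the event loop during the backoff, so other tasks keep running while a retry is waiting.

```python
import asyncio
import random


async def retry_async(operation, max_attempts=4, base_delay=0.1,
                      retry_on=(ConnectionError, TimeoutError)):
    """Retry an async operation with jittered exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return await operation()
        except retry_on:
            if attempt == max_attempts:
                raise
            # Non-blocking wait: other tasks run while this one backs off.
            await asyncio.sleep(random.uniform(0, base_delay * 2 ** (attempt - 1)))


async def main():
    attempts = {"n": 0}

    async def flaky_fetch():
        # Hypothetical operation that fails twice before succeeding.
        attempts["n"] += 1
        if attempts["n"] < 3:
            raise ConnectionError("transient")
        return "payload"

    async def other_work():
        await asyncio.sleep(0)
        return "done"

    # Both coroutines run concurrently; the retries do not block other_work.
    return await asyncio.gather(retry_async(flaky_fetch, base_delay=0.01), other_work())
```

Running `asyncio.run(main())` returns `['payload', 'done']`. For retries that outlive a single request, the background task queue mentioned above is the more durable option.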

Environment-Specific Optimization

The retry mechanism should be adaptable to different environments.

- Cloud Environments: Cloud environments often involve fluctuating resource availability, so the retry mechanism needs to be resilient to transient network issues and server outages. For example, a service mesh can handle retries for services deployed across a cloud platform.

- Microservices Architecture: In microservice architectures, the retry strategy needs to consider the dependencies between services. Circuit breakers can help prevent cascading failures in such systems. For instance, a circuit breaker can protect a microservice from an unreliable downstream service.

- High-Availability Systems: In high-availability systems, the retry mechanism needs to account for failures across multiple nodes. The retry strategy should be resilient to a failure in any part of the system. For example, a distributed cache shared by all nodes can store results so that they survive the failure of any single node and a retry handled by another node can reuse them.

Advanced Techniques

Advanced techniques like circuit breakers enhance the retry mechanism.

- Circuit Breakers: A circuit breaker is a mechanism that temporarily prevents further calls to a failing service. When failures reach a configured threshold, the circuit breaker opens and rejects calls immediately instead of sending them to the service. After a cooldown period, it lets a trial call through (the "half-open" state): if the call succeeds, the circuit closes again, and if it fails, the circuit reopens. This prevents cascading failures by isolating the affected service. For example, if a database service consistently fails, a circuit breaker can stop further attempts until the service shows signs of recovery.
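A minimal Python sketch of the closed/open/half-open cycle described above follows. The class names, the failure threshold, and the cooldown length are hypothetical. It is single-threaded. A production breaker would need locking and would usually limit how many trial calls pass through in the half-open state.

```python
import time


class CircuitOpenError(Exception):
    """Raised instead of calling the service while the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout  # cooldown before a trial call
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, operation):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("service marked unavailable; call skipped")
            # Cooldown elapsed: half-open, so this call is allowed as a trial.
        try:
            result = operation()
        except Exception:
            self.failures += 1
            # A failed trial call, or reaching the threshold, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

A retry loop would wrap `breaker.call(...)` and treat `CircuitOpenError` as a signal to stop retrying instead of as another transient failure.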

End of Discussion

In conclusion, implementing the retry pattern with exponential backoff is a powerful technique for enhancing application resilience. By understanding the core concepts, various strategies, and practical considerations, you can create applications that are more robust and less prone to failure. The use of libraries, appropriate logging, and security precautions are crucial elements to ensure the effectiveness and reliability of your retry mechanisms.

Remember to tailor your approach to the specific needs and characteristics of your application.

Q&A

What are some common error types that need to be handled in a retry mechanism?

Common error types include network timeouts, service unavailability, database connection issues, and transient application errors. A robust retry mechanism should be able to differentiate between transient and permanent failures.

How do I determine an appropriate initial backoff delay?

The initial backoff delay should be small enough to allow for quick retries of transient errors, but large enough to prevent overwhelming the system. Consider the typical response time of the target service and adjust accordingly.
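It can help to work out the schedule an initial delay implies before committing to it. For example, starting at 100 ms and doubling for five retries gives 100 + 200 + 400 + 800 + 1600 = 3100 ms of total waiting in the worst case. That total should fit within whatever timeout the caller is willing to tolerate. The small Python helper below computes such schedules. The function name and the millisecond convention are assumptions made for illustration.

```python
def backoff_schedule(initial_delay_ms, multiplier=2, max_retries=5, max_delay_ms=None):
    """Return the delay (in ms) before each retry, optionally capped."""
    delays = []
    delay = initial_delay_ms
    for _ in range(max_retries):
        delays.append(delay if max_delay_ms is None else min(delay, max_delay_ms))
        delay *= multiplier
    return delays
```

`backoff_schedule(100)` gives `[100, 200, 400, 800, 1600]`, whose sum is 3100 ms. Adding `max_delay_ms=500` flattens the tail to `[100, 200, 400, 500, 500]`.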

What are the limitations of using exponential backoff?

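Exponential backoff can lead to prolonged delays for persistent failures, because the delay doubles with each attempt. Cap the delay at a maximum value and bound the number of attempts. It is also crucial to detect permanent errors and fail fast on them instead of retrying, which prevents endless retry loops.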
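The following Python sketch shows one way to fail fast on permanent errors while capping the delay for transient ones. The HTTP-style status codes, the `RequestFailed` exception, and the function names are hypothetical. Adapt the classification to the errors your client library actually raises.

```python
import time

# Hypothetical classification: status codes commonly treated as transient.
TRANSIENT_STATUS = {408, 429, 500, 502, 503, 504}


class RequestFailed(Exception):
    """Hypothetical error carrying an HTTP-style status code."""

    def __init__(self, status):
        super().__init__(f"request failed with status {status}")
        self.status = status


def is_transient(error):
    if isinstance(error, (ConnectionError, TimeoutError)):
        return True
    return isinstance(error, RequestFailed) and error.status in TRANSIENT_STATUS


def call_with_retry(operation, max_attempts=5, base_delay=0.5, max_delay=8.0,
                    sleep=time.sleep):
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception as exc:
            # Permanent errors (e.g. 400, 404) and the final attempt fail immediately.
            if not is_transient(exc) or attempt == max_attempts:
                raise
            # Capped exponential backoff: 0.5, 1, 2, 4, 8, 8, ... seconds.
            sleep(min(max_delay, base_delay * 2 ** (attempt - 1)))
```

A 404 is raised on the first attempt with no waiting. A persistent 503 is retried with delays of 0.5, 1, 2, 4, and 8 seconds before the error is finally raised (with `max_attempts=6`).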

What is the role of logging in a retry mechanism?

Comprehensive logging of retry attempts, including the error type, delay, and success/failure status, is vital for monitoring, troubleshooting, and analyzing retry behavior.
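A minimal sketch of per-attempt logging with Python's standard `logging` module is shown below. It records the attempt number, error type, chosen delay, and final outcome. The logger name `"retry"` and the function name are hypothetical, and the backoff here omits jitter to keep the log lines predictable.

```python
import logging
import time

logger = logging.getLogger("retry")  # hypothetical logger name


def call_with_logged_retries(operation, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    for attempt in range(1, max_attempts + 1):
        try:
            result = operation()
        except Exception as exc:
            if attempt == max_attempts:
                logger.error("attempt %d/%d failed (%s: %s); giving up",
                             attempt, max_attempts, type(exc).__name__, exc)
                raise
            delay = base_delay * 2 ** (attempt - 1)
            logger.warning("attempt %d/%d failed (%s: %s); retrying in %.2fs",
                           attempt, max_attempts, type(exc).__name__, exc, delay)
            sleep(delay)
        else:
            logger.info("attempt %d/%d succeeded", attempt, max_attempts)
            return result
```

An operation that times out once and then succeeds produces a warning (`attempt 1/3 failed (TimeoutError: slow upstream); retrying in 0.50s`) followed by an info record (`attempt 2/3 succeeded`). Structured fields like these are what the log analysis and alerting described earlier depend on.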